Organizations serving multiple tenants through AI applications face a common challenge: how to track, analyze, and optimize model usage across different customer segments. Although Amazon Bedrock provides powerful foundation models (FMs) through its Converse API, the true business value emerges when you can connect model interactions to specific tenants, users, and use cases.

Using the Converse API `requestMetadata` parameter offers a solution to this challenge. By passing tenant-specific identifiers and contextual information with each request, you can transform standard invocation logs into rich analytical datasets. With this approach, you can measure model performance, track usage patterns, and allocate costs with tenant-level precision, without modifying your core application logic.

Tracking and managing cost through application inference profiles

Managing costs for generative AI workloads is a challenge that organizations face daily, especially when using on-demand FMs that don't support cost allocation tagging. When you track spending manually and rely on reactive controls, you risk overspending and introduce operational inefficiencies.

Application inference profiles address this by allowing custom tags (for example, tenant, project, or department) to be applied directly to on-demand models, enabling granular cost tracking. Combined with AWS Budgets and cost allocation tools, organizations can automate budget alerts, prioritize critical workloads, and enforce spending guardrails at scale. This shift from manual oversight to programmatic control reduces financial risk while fostering innovation through better visibility into AI spend across teams, applications, and tenants.
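As a rough illustration of how tags attach to an application inference profile, the following sketch builds a request for the Amazon Bedrock `CreateInferenceProfile` operation, exposed in Boto3 as `create_inference_profile` on the `bedrock` client. The `tenant-<id>` naming convention, the tag keys, and the model ARN are hypothetical placeholders. Check the Amazon Bedrock API reference for current parameters and naming constraints before relying on it.

```python
"""Sketch: request for a tagged Amazon Bedrock application inference profile."""
from typing import Dict, List


def build_inference_profile_request(
    tenant_id: str,
    model_arn: str,
    tags: Dict[str, str],
) -> dict:
    """Return keyword arguments for bedrock.create_inference_profile().

    The "tenant-<id>" naming convention is a hypothetical choice.
    """
    tag_list: List[Dict[str, str]] = [
        {"key": key, "value": value} for key, value in sorted(tags.items())
    ]
    return {
        "inferenceProfileName": f"tenant-{tenant_id}",
        "description": f"Application inference profile for tenant {tenant_id}",
        # copyFrom references the on-demand foundation model the profile wraps.
        "modelSource": {"copyFrom": model_arn},
        "tags": tag_list,
    }


def create_profile(request: dict, region: str = "us-east-1") -> str:
    """Create the profile and return its ARN (needs Boto3 and AWS credentials)."""
    import boto3  # lazy import so the request builder works without Boto3 installed

    bedrock = boto3.client("bedrock", region_name=region)
    response = bedrock.create_inference_profile(**request)
    return response["inferenceProfileArn"]


if __name__ == "__main__":
    # Placeholder ARN and tags; this only builds and prints the request.
    print(
        build_inference_profile_request(
            "acme-001",
            "arn:aws:bedrock:us-east-1::foundation-model/"
            "anthropic.claude-3-haiku-20240307-v1:0",
            {"tenant": "acme-001", "department": "finance", "costCenter": "cc-1234"},
        )
    )
```

Keeping the request builder separate from the AWS call makes it easy to review the tags for each tenant before any profile is created. One profile per tenant is exactly what becomes hard to manage at very large tenant counts.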

For tracking multi-tenant costs when dealing with tens to thousands of application inference profiles, refer to Manage multi-tenant Amazon Bedrock costs using application inference profiles on the AWS Artificial Intelligence Blog.

Managing costs and resources in large-scale multi-tenant environments adds complexity when you use application inference profiles in Amazon Bedrock. You face additional considerations when dealing with hundreds of thousands to millions of tenants and complex tagging requirements.

The lifecycle management of these profiles creates operational challenges. You need to handle profile creation, updates, and deletions at scale. Automating these processes requires robust error handling for edge cases like profile naming conflicts, Region-specific replication for high availability, and cascading AWS Identity and Access Management (IAM) policy updates that maintain secure access controls across tenants.

Another layer of complexity arises from cost allocation tagging constraints. Although organizations and teams can add multiple tags to each application inference profile resource, organizations with granular tracking needs, such as combining tenant identifiers (tenantId), departmental codes (department), and cost centers (costCenter), might find the per-resource tag limit restrictive, which can compromise the depth of cost attribution. These considerations encourage organizations to implement a consumer-side or client-side tracking approach, and this is where metadata-based tagging might be a better fit.

Using the Converse API with request metadata

You can use the Converse API to include request metadata when you call FMs through Amazon Bedrock. This metadata, a JSON object of key-value pairs, doesn't affect the model's response, but you can use it for tracking and logging purposes. Common uses for request metadata include:

- Adding unique identifiers for tracking requests

- Including timestamp information

- Tagging requests with application-specific information

- Adding version numbers or other contextual data

The request metadata isn't typically returned in the API response. It's primarily used for your own tracking and logging purposes on the client side.

When using the Converse API, you typically include the request metadata as part of your API call. For example, with the AWS SDK for Python (Boto3), you pass a dictionary of string key-value pairs in the `requestMetadata` parameter of the `converse` call.
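The following sketch shows one way to structure such a request. It assumes the `bedrock-runtime` client's `converse` method and its `requestMetadata` parameter, a map of string keys to string values. The metadata key names, the placeholder model ID, and the helper functions are illustrative choices of ours, not part of the solution repository. The AWS call is isolated in `converse_with_metadata`, so you can check the request-building logic without credentials.

```python
"""Sketch: attach tenant metadata to an Amazon Bedrock Converse request."""
import uuid
from datetime import datetime, timezone
from typing import Dict, Optional

# Placeholder model ID; substitute a model enabled in your account and Region.
MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"


def build_request_metadata(
    tenant_id: str,
    department: str,
    cost_center: str,
    app_version: str = "1.0.0",
    request_id: Optional[str] = None,
) -> Dict[str, str]:
    """Build string key-value pairs for the requestMetadata parameter.

    These key names are our own convention, not names Amazon Bedrock requires.
    """
    return {
        "tenantId": tenant_id,
        "department": department,
        "costCenter": cost_center,
        "requestId": request_id or str(uuid.uuid4()),  # unique tracking ID
        "timestamp": datetime.now(timezone.utc).isoformat(timespec="seconds"),
        "appVersion": app_version,
    }


def build_converse_kwargs(prompt: str, metadata: Dict[str, str]) -> dict:
    """Return keyword arguments for the bedrock-runtime converse() call."""
    return {
        "modelId": MODEL_ID,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        # requestMetadata doesn't change the model's answer; it is kept for
        # tracking and can be used to filter invocation logs.
        "requestMetadata": metadata,
    }


def converse_with_metadata(
    prompt: str, metadata: Dict[str, str], region: str = "us-east-1"
) -> str:
    """Send the request (needs Boto3, AWS credentials, and model access)."""
    import boto3  # lazy import so the builders above work without Boto3

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(**build_converse_kwargs(prompt, metadata))
    return response["output"]["message"]["content"][0]["text"]


if __name__ == "__main__":
    metadata = build_request_metadata("acme-001", "finance", "cc-1234")
    print(build_converse_kwargs("Summarize our Q3 results.", metadata))
```

All values are plain strings, so numeric or structured context, such as a version number, must be serialized before it goes into the metadata.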

Solution overview

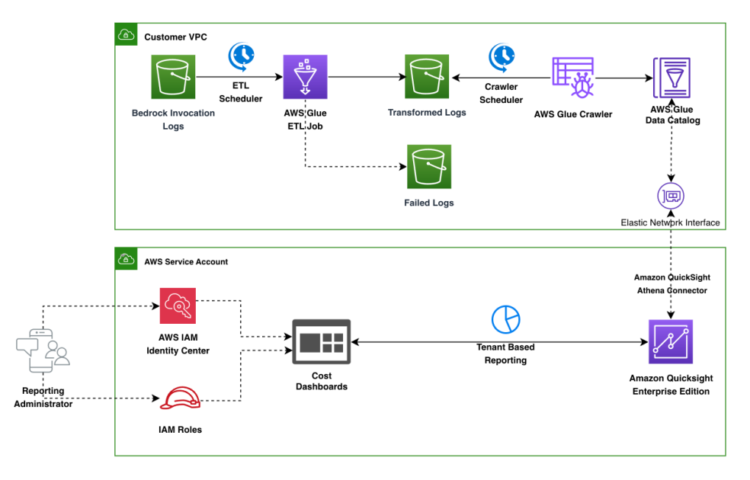

The following diagram illustrates a comprehensive log processing and analytics architecture across two main environments: a Customer virtual private cloud (VPC) and an AWS Service Account.

In the Customer VPC, the flow begins with Amazon Bedrock invocation logs being processed through an extract, transform, and load (ETL) pipeline managed by AWS Glue. The logs go through a scheduler and transformation process, with an AWS Glue crawler cataloging the data. Failed logs are captured in a separate storage location.

In the AWS Service Account section, the architecture shows the reporting and analysis capabilities. Amazon QuickSight Enterprise edition serves as the primary analytics and visualization service, with tenant-based reporting dashboards.

To convert Amazon Bedrock invocation logs with tenant metadata into actionable business intelligence (BI), we've designed a scalable data pipeline that processes, transforms, and visualizes this information. The architecture consists of three main components working together to deliver tenant-specific analytics.

The process begins in your customer's VPC, where Amazon Bedrock invocation logs capture each interaction with your AI application. These logs contain valuable information, including the `requestMetadata` parameters you've configured to identify tenants, users, and other business contexts.

An ETL scheduler triggers AWS Glue jobs at regular intervals to process these logs. The AWS Glue ETL job extracts the tenant metadata from each log entry, transforms it into a structured format optimized for analysis, and loads the results into a transformed logs bucket. For data quality assurance, records that fail processing are automatically routed to a separate failed logs bucket for troubleshooting.
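To make the transform step concrete, here is a simplified, plain-Python sketch of the per-record logic. It parses each log line, promotes selected `requestMetadata` keys to columns, and routes records that fail into a separate list that stands in for the failed logs bucket. It is not the repository's AWS Glue script. The log field names (`requestMetadata`, `input.inputTokenCount`, `output.outputTokenCount`) reflect our understanding of the invocation log format, and `METADATA_FIELDS` is a hypothetical configuration, so verify both against your own logs.

```python
"""Sketch of per-record transform logic (plain Python, not the repo's Glue job)."""
import json
from typing import Dict, Iterable, List, Tuple

# Metadata keys promoted to columns; hypothetical, match your own convention.
METADATA_FIELDS = ("tenantId", "department", "costCenter")


def transform_record(raw_line: str) -> Dict[str, object]:
    """Flatten one invocation log line into a row; raise on malformed input."""
    record = json.loads(raw_line)  # json.JSONDecodeError is a ValueError
    metadata = record.get("requestMetadata") or {}
    if "tenantId" not in metadata:
        raise ValueError("record has no tenantId in requestMetadata")
    row: Dict[str, object] = {
        "timestamp": record["timestamp"],
        "modelId": record["modelId"],
        "inputTokens": int(record["input"]["inputTokenCount"]),
        "outputTokens": int(record["output"]["outputTokenCount"]),
    }
    for field in METADATA_FIELDS:
        row[field] = metadata.get(field, "unknown")
    return row


def transform_batch(lines: Iterable[str]) -> Tuple[List[dict], List[dict]]:
    """Split a batch into transformed rows and failed records with the error."""
    transformed: List[dict] = []
    failed: List[dict] = []
    for line in lines:
        try:
            transformed.append(transform_record(line))
        except (ValueError, KeyError, TypeError, AttributeError) as exc:
            failed.append({"raw": line, "error": str(exc)})
    return transformed, failed
```

Capturing the raw line alongside the error keeps failed records replayable after you fix the cause, which is the point of a separate failed logs location.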

After the data is transformed, a crawler scheduler activates an AWS Glue crawler to scan the processed logs. The crawler updates the AWS Glue Data Catalog with the latest schema and partition information, making your tenant-specific data immediately discoverable and queryable.
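AWS Glue crawlers infer partition columns from Hive-style `key=value` folder names in Amazon S3 paths. The following hypothetical helper assumes a layout partitioned by date and tenant, and it shows the kind of prefix a transform job might write each row under. The solution's actual layout may differ.

```python
"""Sketch: Hive-style partition prefixes that an AWS Glue crawler can register."""
from datetime import datetime


def partition_prefix(timestamp: str, tenant_id: str, base: str = "transformed") -> str:
    """Return a prefix like transformed/year=2025/month=01/day=15/tenant=acme/.

    The layout, including the tenant partition, is a hypothetical choice.
    """
    # datetime.fromisoformat() before Python 3.11 rejects a trailing "Z",
    # so normalize it to an explicit UTC offset first.
    ts = datetime.fromisoformat(timestamp.replace("Z", "+00:00"))
    return (
        f"{base}/year={ts.year:04d}/month={ts.month:02d}/day={ts.day:02d}/"
        f"tenant={tenant_id}/"
    )
```

With this layout, the crawler would register `year`, `month`, `day`, and `tenant` as partition columns, so queries that filter on them scan less data.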

This automated cataloging creates a unified view of tenant interactions across your Amazon Bedrock applications. The Data Catalog connects to your analytics environment through an elastic network interface, which provides secure access while maintaining network isolation.

Your reporting infrastructure in the Amazon QuickSight account transforms tenant data into actionable insights. Amazon QuickSight Enterprise edition serves as your visualization service and connects to the Data Catalog through the QuickSight connector for Amazon Athena.

Your reporting administrators can create tenant-based dashboards that show usage patterns, common queries, and performance metrics segmented by tenant. Cost dashboards provide financial insights into model usage by tenant, helping you understand the economics of your multi-tenant AI application.

Monitoring and analyzing Amazon Bedrock performance metrics

The following Amazon QuickSight dashboard demonstrates how you can visualize your Amazon Bedrock usage data across multiple dimensions. You can examine your usage patterns through four key visualization panels.

With the Bedrock Usage Summary horizontal bar chart in the top left, you can compare token usage across tenant groups and see each tenant's consumption level. The Token Usage by Company pie chart in the top right breaks down token usage by company, showing the relative share of each organization.

The Token Usage by Department horizontal bar chart in the bottom left reveals departmental consumption. You can see how different business functions, such as Finance, Research, HR, and Sales, use Amazon Bedrock. The Model Distribution graphic in the bottom right displays model distribution metrics with a circular gauge showing complete coverage.
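Behind panels like these is a simple group-and-sum over the transformed rows. In the deployed solution, that work happens in Athena and QuickSight. The following Python sketch expresses the same aggregation for illustration. It assumes that each transformed row carries `tenantId`, `department`, `inputTokens`, and `outputTokens` fields (hypothetical names), and `tokens_by` and `share_by` are illustrative helpers.

```python
"""Sketch: aggregate token usage by one dimension, as the dashboard charts do."""
from collections import defaultdict
from typing import Dict, Iterable


def tokens_by(rows: Iterable[dict], dimension: str) -> Dict[str, int]:
    """Sum input + output tokens per value of `dimension`, largest first."""
    totals: Dict[str, int] = defaultdict(int)
    for row in rows:
        key = row.get(dimension, "unknown")
        totals[key] += row["inputTokens"] + row["outputTokens"]
    return dict(sorted(totals.items(), key=lambda item: item[1], reverse=True))


def share_by(rows: Iterable[dict], dimension: str) -> Dict[str, float]:
    """Percentage share of total tokens per value, like a pie chart slice."""
    totals = tokens_by(rows, dimension)
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {key: 0.0 for key in totals}
    return {key: round(100 * value / grand_total, 1) for key, value in totals.items()}
```

`tokens_by(rows, "tenantId")` corresponds to the per-tenant bar chart, `tokens_by(rows, "department")` to the departmental view, and `share_by` to the pie chart.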

You can filter and drill down into your data by using the filter controls at the top for Year, Month, Day, Tenant, and Model. This enables detailed temporal and organizational analysis of your Amazon Bedrock consumption patterns.

The comprehensive dashboard shown in the following image provides essential insights into Amazon Bedrock usage patterns and performance metrics across different environments. This “Usage Trends” visualization suite includes key metrics such as token usage trends, input and output token distribution, latency analysis, and an environment-wide usage breakdown.

Using the dashboard, stakeholders can make data-driven decisions about resource allocation, performance optimization, and usage patterns across different deployment stages. With intuitive controls for year, month, day, tenant, and model selection, teams can quickly filter and analyze specific usage scenarios.

Access to these insights is carefully managed through AWS IAM Identity Center and role-based permissions, so tenant data remains protected while still enabling powerful analytics.

By implementing this architecture, you transform basic model invocation logs into a strategic asset. Your business can answer sophisticated questions about tenant behavior, optimize model performance for specific customer segments, and make data-driven decisions about your AI application's future development, all powered by the metadata you include in your Amazon Bedrock Converse API requests.

Customize the solution

The Converse metadata cost reporting solution provides several customization points that you can adapt to your specific multi-tenant requirements and business needs. You can modify the ETL process by editing the AWS Glue ETL script at `cdk/glue/bedrock_logs_transform.py` to extract additional metadata fields or transform data according to your tenant structure. You can update the schema definitions in the corresponding JSON files to accommodate custom tenant attributes or hierarchical organizational data.

For organizations with evolving pricing models, you can update the pricing data stored in `cdk/glue/pricing.csv` to reflect current Amazon Bedrock costs, including cache read and write pricing. Edit the .csv file and upload it to your transformed data Amazon Simple Storage Service (Amazon S3) bucket, then run the pricing crawler to refresh the Data Catalog. This keeps your cost allocation dashboards accurate as pricing changes.
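Cost allocation comes down to multiplying each token count by its unit price and summing the results. The following sketch assumes a hypothetical pricing.csv layout with per-1,000-token prices for input, output, cache read, and cache write tokens. The column names, the `example-model` ID, and the sample prices are made up for illustration and are not actual Amazon Bedrock rates. `Decimal` avoids floating-point rounding errors in currency arithmetic.

```python
"""Sketch: per-request cost from a pricing table (column names are assumed)."""
import csv
import io
from decimal import Decimal
from typing import Dict

# Hypothetical layout and made-up prices; the real cdk/glue/pricing.csv may differ.
SAMPLE_PRICING_CSV = """model_id,input_per_1k,output_per_1k,cache_read_per_1k,cache_write_per_1k
example-model,0.002,0.010,0.0002,0.0025
"""


def load_pricing(text: str) -> Dict[str, Dict[str, Decimal]]:
    """Parse CSV text into {model_id: {price_column: Decimal price}}."""
    reader = csv.DictReader(io.StringIO(text))
    return {
        row["model_id"]: {k: Decimal(v) for k, v in row.items() if k != "model_id"}
        for row in reader
    }


def request_cost(row: dict, pricing: Dict[str, Dict[str, Decimal]]) -> Decimal:
    """Sum of tokens * price-per-1k for each token type, divided by 1,000."""
    prices = pricing[row["modelId"]]
    weighted = (
        Decimal(row.get("inputTokens", 0)) * prices["input_per_1k"]
        + Decimal(row.get("outputTokens", 0)) * prices["output_per_1k"]
        + Decimal(row.get("cacheReadTokens", 0)) * prices["cache_read_per_1k"]
        + Decimal(row.get("cacheWriteTokens", 0)) * prices["cache_write_per_1k"]
    )
    return weighted / Decimal(1000)
```

Summing `request_cost` over rows grouped by `tenantId` gives a per-tenant cost figure. Updating the CSV changes every subsequent calculation without code changes, which mirrors the intent of keeping pricing in a separate file.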

QuickSight dashboards offer extensive customization capabilities directly through the console. You can modify existing visualizations to focus on specific tenant metrics, add filters for departmental or regional views, and create new analyses that align with your business reporting requirements. You can save customized versions in the dashboard editor while preserving the original template for future reference.

Clean up

To avoid incurring future charges, delete the resources. Because the solution is deployed using the AWS Cloud Development Kit (AWS CDK), cleaning up resources is straightforward. From the command line, change to the CDK directory at the root of the converse-metadata-cost-reporting repo and run `cdk destroy` to delete the deployed resources. You can also find the cleanup instructions in README.md.

Conclusion

Implementing tenant-specific metadata with the Amazon Bedrock Converse API creates a strong foundation for AI application analytics. This approach transforms standard invocation logs into a strategic asset that drives business decisions and improves customer experiences.

The architecture can deliver immediate benefits through automated processing of tenant metadata. You gain visibility into usage patterns across customer segments, allocate costs accurately, and identify opportunities for model optimization based on tenant-specific needs. For implementation details, refer to the converse-metadata-cost-reporting GitHub repository.

This solution enables measurable business outcomes. Product teams can prioritize features based on tenant usage data. Customer success managers can provide personalized guidance using tenant-specific insights. Finance teams can develop more accurate pricing models based on actual usage patterns across different customer segments.

As AI applications become increasingly central to business operations, understanding how different tenants interact with your models becomes essential. Implementing the `requestMetadata` parameter in your Amazon Bedrock Converse API calls today builds the analytics foundation for your future AI strategy. Start small by identifying key tenant identifiers for your metadata, then expand your analytics capabilities as you gather more data. The flexible architecture described here scales with your needs, so you can continuously refine your understanding of tenant behavior and deliver increasingly personalized AI experiences.

About the authors

Praveen Chamarthi brings exceptional expertise to his role as a Senior AI/ML Specialist at Amazon Web Services (AWS), with over two decades in the industry. His passion for machine learning and generative AI, coupled with his specialization in ML inference on Amazon SageMaker, enables him to empower organizations across the Americas to scale and optimize their ML operations. When he's not advancing ML workloads, Praveen can be found immersed in books or enjoying science fiction films.

Srikanth Reddy is a Senior AI/ML Specialist with Amazon Web Services (AWS). He is responsible for providing deep, domain-specific expertise to enterprise customers, helping them use AWS AI and ML capabilities to their fullest potential.

Dhawal Patel is a Principal Machine Learning Architect at Amazon Web Services (AWS). He has worked with organizations ranging from large enterprises to mid-sized startups on problems related to distributed computing and AI. He focuses on deep learning, including the natural language processing (NLP) and computer vision domains. He helps customers achieve high-performance model inference on Amazon SageMaker.

Alma Mohapatra is an Enterprise Support Manager who helps strategic AI/ML customers optimize their workloads on HPC environments. She guides organizations through performance challenges and infrastructure optimization for LLMs across distributed GPU clusters. Alma translates technical requirements into practical solutions while collaborating with Technical Account Managers to make sure AI/ML initiatives meet business objectives.

John Boren is a Solutions Architect at AWS GenAI Labs in Seattle, where he develops full-stack generative AI demos. Originally from Alaska, he enjoys hiking, traveling, continuous learning, and fishing.

Rahul Sharma is a Senior Specialist Solutions Architect at AWS, helping AWS customers build ML and generative AI solutions. Before joining AWS, Rahul spent several years in the finance and insurance industries, helping customers build data and analytics platforms.
