This post is cowritten with Mones Raslan, Ravi Sharma and Adele Gouttes from Zalando.

Zalando SE is one of Europe’s largest ecommerce fashion retailers with around 50 million active customers. Zalando faces the challenge of regular (weekly or daily) discount steering for more than 1 million products, also called markdown pricing. Markdown pricing is a pricing approach that adjusts prices over time and is a common strategy to maximize revenue from goods that have a limited lifespan or are subject to seasonal demand (Sul 2023).

Because many items are ordered ahead of season and not replenished afterwards, businesses have an interest in selling the products evenly throughout the season. The main rationale is to avoid overstock and understock situations. An overstock situation would lead to high costs after the season ends, and an understock situation would lead to lost sales because customers would choose to buy from competitors.

To address this issue, discount steering is an effective approach because it influences item-level demand and therefore stock levels.

The markdown pricing algorithmic solution Zalando relies on is a forecast-then-optimize approach (Kunz et al. 2023 and Streeck et al. 2024). A high-level description of the markdown pricing algorithm solution can be broken down into four steps:

1. Discount-dependent forecast – Using past data, forecast future discount-dependent quantities that are relevant for determining the future profit of an item. The following are important metrics that need to be forecasted:

   - Demand – How many items will be sold in the next X weeks for different discounts?

   - Return rate – What share of sold items will be returned by the customer?

   - Return time – When will a returned item reappear in the warehouse so that it can be sold again?

   - Fulfillment costs – How much will shipping and returning an item cost?

   - Residual value – At what price can an item be realistically sold after the end of the season?

2. Determine an optimal discount – Use the forecasts from Step 1 as input to maximize profit as a function of discount, which is subject to business and stock constraints. Concrete details can be found in Streeck et al. 2024.

3. Recommendations – Discount recommendations determined in Step 2 are incorporated into the shop or overwritten by pricing managers.

4. Data collection – Updated shop prices lead to updated demand. The new information is used to enhance the training sets used in Step 1 for forecasting discounts.
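
To make the forecast-then-optimize idea concrete, the following is a minimal sketch of how the Step 1 forecasts could feed a Step 2 decision for a single item. It is not the optimizer described in Streeck et al. 2024: the `ItemForecast` container, the simplified profit formula, the grid search, the 70% discount cap, and all numbers are assumptions made for illustration only.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class ItemForecast:
    """Hypothetical container for the Step 1 forecasts of a single item."""
    base_price: float            # undiscounted shop price
    demand: Dict[float, float]   # discount level -> forecast units sold
    return_rate: float           # share of sold units that come back
    fulfillment_cost: float      # shipping and return handling per sold unit
    residual_value: float        # price obtainable per unit after the season


def expected_profit(f: ItemForecast, discount: float, stock: int) -> float:
    """Toy profit for one discount level; sales are capped by available stock."""
    sold = min(f.demand[discount], stock)
    kept = sold * (1.0 - f.return_rate)   # units the customer keeps
    leftover = stock - kept               # unsold plus returned units
    revenue = kept * f.base_price * (1.0 - discount)
    return revenue - sold * f.fulfillment_cost + leftover * f.residual_value


def best_discount(f: ItemForecast, stock: int, max_discount: float = 0.7) -> float:
    """Grid search over the forecast discount levels allowed by a business cap."""
    allowed = [d for d in f.demand if d <= max_discount]
    return max(allowed, key=lambda d: expected_profit(f, d, stock))


# Made-up forecasts for one item.
forecast = ItemForecast(
    base_price=50.0,
    demand={0.0: 40, 0.2: 70, 0.4: 120, 0.6: 200},
    return_rate=0.5,
    fulfillment_cost=4.0,
    residual_value=10.0,
)
print(best_discount(forecast, stock=100))  # plenty of stock: a discount pays off
print(best_discount(forecast, stock=30))   # scarce stock: no discount needed
```

Even in this toy setting, the stock level changes the recommended discount, which is why the forecasts have to be discount-dependent rather than a single demand number per item.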

The next diagram illustrates this workflow.

The focus of this post is on Step 1, creating a discount-dependent forecast. Depending on the complexity of the problem and the structure of underlying data, the predictive models at Zalando range from simple statistical averages and tree-based models to a Transformer-based deep learning architecture (Kunz et al. 2023).

Regardless of the models used, they all include data preprocessing, training, and inference over several billions of records containing weekly data spanning multiple years and markets to produce forecasts. Operating such large-scale forecasting requires resilient, reusable, reproducible, and automated machine learning (ML) workflows with fast experimentation and continuous improvements.

In this post, we present the implementation and orchestration of the forecast model’s training and inference. The solution was built in a recent collaboration between Zalando and AWS Professional Services, under which Well-Architected machine learning design principles were followed.

The result of the collaboration is a blueprint that is being reused for similar use cases within Zalando.

Motivation for streamlined ML operations and large-scale inference

As mentioned earlier, discount steering of more than a million items every week requires generating a large amount of forecast records (roughly 10 billion). Effective discount steering requires continuous improvement of forecasting accuracy.

To improve forecasting accuracy, all involved ML models need to be retrained, and predictions need to be produced weekly, and in some cases daily.

Given the amount of data and the nature of the ML models in question, training and inference take from several hours to multiple days. Any error in the process represents risks in terms of operational costs and opportunity costs because Zalando’s commercial pricing team expects results according to defined service level objectives (SLOs).

If an ML model training or inference fails in any given week, an ML model with outdated data is used to generate the forecast records. This has a direct impact on revenue for Zalando because the forecasts and discounts are less accurate when using outdated data.

In this context, our motivation for streamlining ML operations (MLOps) can be summarized as follows:

- Speed up experimentation and research, enable rapid prototyping, and provide sufficient time to meet SLOs

- Design the architecture in a templated approach with the objective of supporting multiple model training and inference, providing a unified ML infrastructure and enabling automated integration for training and inference

- Provide scalability to accommodate different types of forecasting models (also supporting GPU) and growing datasets

- Make end-to-end ML pipelines and experimentation repeatable, fault-tolerant, and traceable

To achieve these objectives, we explored several distributed computing tools.

During our analysis phase, we discovered two key factors that influenced our choice of distributed computing tool. First, our input datasets were stored in the columnar Parquet format, spread across multiple partitions. Second, the required inference operations exhibited embarrassingly parallel characteristics, meaning they could be run independently without necessitating inter-node communication. These factors guided our decision-making process for selecting the most suitable distributed computing tool.
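
The following minimal sketch shows what "embarrassingly parallel" means for partitioned data: every partition is assigned to exactly one worker, and each worker processes its shard without talking to the others. The partition keys, the round-robin `shard` helper, and the `fake_predict` stand-in are hypothetical, and threads are used here only as a local substitute for separate compute instances.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def shard(partitions: List[str], num_workers: int, worker_index: int) -> List[str]:
    """Round-robin assignment: each partition lands on exactly one worker."""
    ordered = sorted(partitions)
    return [p for i, p in enumerate(ordered) if i % num_workers == worker_index]


def run_worker(partitions: List[str], predict: Callable[[str], str]) -> List[str]:
    """Process one shard; no state is shared with any other worker."""
    return [predict(p) for p in partitions]


def fake_predict(path: str) -> str:
    # Stand-in for "read the partition, run the model, write the forecasts".
    return path.replace("part.parquet", "forecast.parquet")


# Hypothetical partition keys; in practice these would be Parquet files.
partitions = [f"market=de/week={w:02d}/part.parquet" for w in range(1, 8)]
num_workers = 3

with ThreadPoolExecutor(max_workers=num_workers) as pool:
    futures = [
        pool.submit(run_worker, shard(partitions, num_workers, i), fake_predict)
        for i in range(num_workers)
    ]
    outputs = sorted(out for f in futures for out in f.result())

print(len(outputs), "partitions processed")
```

Because no worker needs results from another, adding workers only changes how the partitions are split, not the logic that runs on each of them.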

We explored multiple big data processing solutions and decided to use an Amazon SageMaker Processing job for the following reasons:

- It’s highly configurable, with support for pre-built images, custom cluster requirements, and containers. This makes it straightforward to manage and scale with no overhead of inter-node communication.

- Amazon SageMaker supports effortless experimentation with Amazon SageMaker Studio.

- SageMaker Processing integrates seamlessly with AWS Identity and Access Management (IAM), Amazon Simple Storage Service (Amazon S3), AWS Step Functions, and other AWS services.

- SageMaker Processing supports the option to upgrade to GPUs with minimal change in the architecture.

- SageMaker Processing unifies our training and inference architecture, enabling us to use inference architecture for model backtesting.
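
As an illustration of the configurability and the GPU upgrade path listed above, the following sketch builds the request for a SageMaker Processing job as a plain dictionary, using the parameter names of the `create_processing_job` API. The job names, image URI, role ARN, S3 locations, entry point, instance types, and volume size are placeholders chosen for this example, not Zalando's actual configuration. `ShardedByS3Key` asks SageMaker to split the objects under the input prefix across instances, which fits inference that needs no inter-node communication.

```python
def processing_job_request(job_name, image_uri, role_arn, input_s3_uri, output_s3_uri,
                           instance_type="ml.m5.4xlarge", instance_count=4):
    """Build keyword arguments for the SageMaker create_processing_job API."""
    return {
        "ProcessingJobName": job_name,
        "RoleArn": role_arn,
        "AppSpecification": {
            "ImageUri": image_uri,
            # Hypothetical entry point inside the container image.
            "ContainerEntrypoint": ["python3", "/opt/ml/code/inference.py"],
        },
        "ProcessingResources": {
            "ClusterConfig": {
                "InstanceCount": instance_count,
                "InstanceType": instance_type,
                "VolumeSizeInGB": 100,
            }
        },
        "ProcessingInputs": [{
            "InputName": "partitions",
            "S3Input": {
                "S3Uri": input_s3_uri,
                "LocalPath": "/opt/ml/processing/input",
                "S3DataType": "S3Prefix",
                "S3InputMode": "File",
                # Each instance receives a disjoint subset of the S3 objects.
                "S3DataDistributionType": "ShardedByS3Key",
            },
        }],
        "ProcessingOutputConfig": {
            "Outputs": [{
                "OutputName": "forecasts",
                "S3Output": {
                    "S3Uri": output_s3_uri,
                    "LocalPath": "/opt/ml/processing/output",
                    "S3UploadMode": "EndOfJob",
                },
            }]
        },
    }


# Placeholder values for illustration only.
common = dict(
    image_uri="123456789012.dkr.ecr.eu-central-1.amazonaws.com/example-forecast:latest",
    role_arn="arn:aws:iam::123456789012:role/example-processing-role",
    input_s3_uri="s3://example-forecast-data/inference/",
    output_s3_uri="s3://example-forecast-data/forecasts/",
)
cpu_request = processing_job_request("forecast-inference-cpu", **common)
gpu_request = processing_job_request(
    "forecast-inference-gpu", instance_type="ml.g4dn.xlarge", **common
)

# Submitting would look like this (requires AWS credentials, not run here):
# boto3.client("sagemaker").create_processing_job(**cpu_request)
```

In this sketch, moving from CPU to GPU changes only the instance type; the container image would of course also need GPU-enabled dependencies.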

We also explored other tools, but preferred SageMaker Processing jobs for the following reasons:

- Apache Spark on Amazon EMR – Because the inference operations are embarrassingly parallel and don’t require inter-node communication, we decided against using Spark on Amazon EMR, which involved additional overhead for inter-node communication.

- SageMaker batch transform jobs – Batch transform jobs have a hard limit of 100 MB payload size, which couldn’t accommodate the dataset partitions. This proved to be a limiting factor for running batch inference on it.

Solution overview

Large-scale inference requires a scalable inference and scalable training solution.

We approached this by designing an architecture with an event-driven principle in mind that enabled us to build ML workflows for training and inference using infrastructure as code (IaC). At the same time, we incorporated continuous integration and delivery (CI/CD) processes, automated testing, and model versioning into the solution. Because applied scientists need to iterate and experiment, we created a flexible experimentation environment very close to the production one.

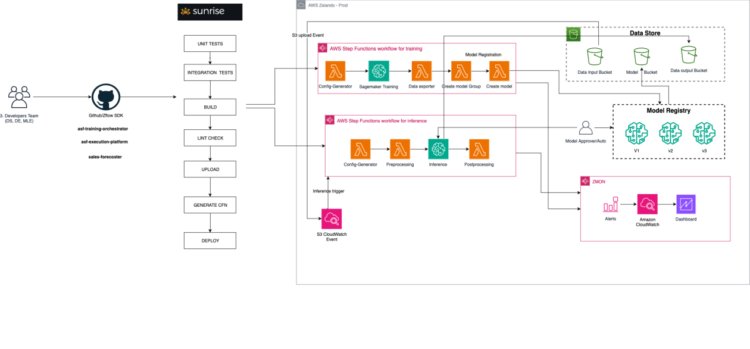

The next high-level structure diagram reveals the ML answer deployed on AWS, which is now utilized by Zalando’s forecasting staff to run pricing forecasting fashions.

The architecture consists of the following components:

- Sunrise – Sunrise is Zalando’s internal CI/CD tool, which automates the deployment of the ML solution in an AWS environment.

- AWS Step Functions – AWS Step Functions orchestrates the entire ML workflow, coordinating various stages such as model training, versioning, and inference. Step Functions can seamlessly integrate with AWS services such as SageMaker, AWS Lambda, and Amazon S3.

- Data store – S3 buckets serve as the data store, holding input and output data as well as model artifacts.

- Model registry – Amazon SageMaker Model Registry provides a centralized repository for organizing, versioning, and tracking models.

- Logging and monitoring – Amazon CloudWatch handles logging and monitoring, forwarding the metrics to Zalando’s internal alerting tool for further analysis and notifications.

To orchestrate multiple steps within the training and inference pipelines, we used Zflow, a Python-based SDK developed by Zalando that uses the AWS Cloud Development Kit (AWS CDK) to create Step Functions workflows. It uses SageMaker training jobs for model training, processing jobs for batch inference, and the model registry for model versioning.

All the components are declared using Zflow and are deployed using CI/CD (Sunrise) to build reusable end-to-end ML workflows, while integrating with AWS services.

The reusable ML workflow allows experimentation and productionization of different models. This enables the separation of the model orchestration and business logic, allowing data scientists and applied scientists to focus on the business logic and use these predefined ML workflows.
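
Zflow's API is internal to Zalando and isn't reproduced here. As a rough idea of what a generated training workflow might contain, the following sketch writes a small Step Functions state machine directly in Amazon States Language, as a Python dictionary. The state names, input field names (such as `$.training_job_name`), instance settings, retry policy, content types, and the choice to register models as `PendingManualApproval` are all assumptions for illustration. Training input channels and hyperparameters are omitted for brevity.

```python
import json


def training_workflow_definition() -> dict:
    """Illustrative state machine: train a model, then register it."""
    return {
        "Comment": "Illustrative training workflow (not Zflow output)",
        "StartAt": "TrainModel",
        "States": {
            "TrainModel": {
                "Type": "Task",
                # .sync makes Step Functions wait until the training job finishes.
                "Resource": "arn:aws:states:::sagemaker:createTrainingJob.sync",
                "Parameters": {
                    "TrainingJobName.$": "$.training_job_name",
                    "RoleArn.$": "$.role_arn",
                    "AlgorithmSpecification": {
                        "TrainingImage.$": "$.image_uri",
                        "TrainingInputMode": "File",
                    },
                    "OutputDataConfig": {"S3OutputPath.$": "$.model_output_s3_uri"},
                    "ResourceConfig": {
                        "InstanceCount": 1,
                        "InstanceType": "ml.m5.4xlarge",
                        "VolumeSizeInGB": 100,
                    },
                    "StoppingCondition": {"MaxRuntimeInSeconds": 86400},
                },
                "ResultPath": "$.training",
                # Retry transient failures before giving up (fault tolerance).
                "Retry": [{
                    "ErrorEquals": ["States.TaskFailed"],
                    "IntervalSeconds": 60,
                    "MaxAttempts": 2,
                    "BackoffRate": 2.0,
                }],
                "Catch": [{"ErrorEquals": ["States.ALL"], "Next": "TrainingFailed"}],
                "Next": "RegisterModel",
            },
            "RegisterModel": {
                "Type": "Task",
                "Resource": "arn:aws:states:::aws-sdk:sagemaker:createModelPackage",
                "Parameters": {
                    "ModelPackageGroupName.$": "$.model_package_group",
                    "ModelApprovalStatus": "PendingManualApproval",
                    "InferenceSpecification": {
                        "Containers": [{
                            "Image.$": "$.image_uri",
                            "ModelDataUrl.$": "$.training.ModelArtifacts.S3ModelArtifacts",
                        }],
                        "SupportedContentTypes": ["application/x-parquet"],
                        "SupportedResponseMIMETypes": ["application/x-parquet"],
                    },
                },
                "End": True,
            },
            "TrainingFailed": {
                "Type": "Fail",
                "Error": "TrainingWorkflowFailed",
                "Cause": "Training did not complete after retries",
            },
        },
    }


def undefined_targets(definition: dict) -> list:
    """Return transition targets that do not exist as states (should be empty)."""
    states = definition["States"]
    targets = {definition["StartAt"]}
    for state in states.values():
        if "Next" in state:
            targets.add(state["Next"])
        for catcher in state.get("Catch", []):
            targets.add(catcher["Next"])
    return sorted(targets - set(states))


definition = training_workflow_definition()
print(json.dumps(definition, indent=2)[:200])
print("undefined targets:", undefined_targets(definition))
```

Keeping the definition in code is what makes the workflow reusable: a different model only needs different input values, not a different state machine.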

A fully automated production workflow

The MLOps lifecycle starts with ingesting the training data in the S3 buckets. On the arrival of data, Amazon EventBridge invokes the training workflow (containing SageMaker training jobs). Upon completion of the training job, a new model is created and stored in SageMaker Model Registry.

To maintain quality control, the team verifies the model properties against the predetermined requirements. If the model meets the criteria, it’s approved for inference. After a model is approved, the inference pipeline will point to the latest approved version of that model group.
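
One way an inference pipeline could resolve "the latest approved version" is to query the model registry for approved model packages in a group, newest first. The following sketch uses the parameter names of the SageMaker `list_model_packages` API; the group name, ARNs, and the `FakeSageMakerClient` class are hypothetical and exist only so that the example runs without AWS access.

```python
def latest_approved_model_arn(sm_client, model_package_group: str) -> str:
    """Return the ARN of the newest approved model package in a group."""
    response = sm_client.list_model_packages(
        ModelPackageGroupName=model_package_group,
        ModelApprovalStatus="Approved",
        SortBy="CreationTime",
        SortOrder="Descending",
        MaxResults=1,
    )
    summaries = response["ModelPackageSummaryList"]
    if not summaries:
        raise LookupError(f"No approved model in group {model_package_group!r}")
    return summaries[0]["ModelPackageArn"]


class FakeSageMakerClient:
    """Offline stand-in that mimics the filtering and ordering of the real call."""

    def __init__(self, packages):
        self._packages = packages

    def list_model_packages(self, ModelPackageGroupName, ModelApprovalStatus,
                            SortBy, SortOrder, MaxResults):
        matches = [
            p for p in self._packages
            if p["ModelPackageGroupName"] == ModelPackageGroupName
            and p["ModelApprovalStatus"] == ModelApprovalStatus
        ]
        matches.sort(key=lambda p: p[SortBy], reverse=(SortOrder == "Descending"))
        return {"ModelPackageSummaryList": matches[:MaxResults]}


prefix = "arn:aws:sagemaker:eu-central-1:123456789012:model-package/demand-forecast"
client = FakeSageMakerClient([
    {"ModelPackageGroupName": "demand-forecast", "ModelPackageArn": f"{prefix}/1",
     "ModelApprovalStatus": "Approved", "CreationTime": 1},
    {"ModelPackageGroupName": "demand-forecast", "ModelPackageArn": f"{prefix}/2",
     "ModelApprovalStatus": "Approved", "CreationTime": 2},
    {"ModelPackageGroupName": "demand-forecast", "ModelPackageArn": f"{prefix}/3",
     "ModelApprovalStatus": "PendingManualApproval", "CreationTime": 3},
])
print(latest_approved_model_arn(client, "demand-forecast"))
```

With a real client, `boto3.client("sagemaker")` would take the place of the fake. Version 3 is skipped in the example because it has not been approved yet, so a newly trained model never reaches inference before the quality check.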

When inference data is ingested on Amazon S3, EventBridge automatically runs the inference pipeline.
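
The event-driven triggers can be pictured as EventBridge rules whose event patterns match S3 "Object Created" events under a given key prefix. The sketch below builds such patterns and checks them against a sample event with a deliberately tiny local matcher that supports only exact values and `prefix` rules. The bucket name and the `training/` and `inference/` prefixes are assumptions; note also that Amazon S3 only sends these events to EventBridge when EventBridge notifications are enabled on the bucket.

```python
def s3_object_created_pattern(bucket: str, key_prefix: str) -> dict:
    """EventBridge event pattern for new S3 objects under a key prefix."""
    return {
        "source": ["aws.s3"],
        "detail-type": ["Object Created"],
        "detail": {
            "bucket": {"name": [bucket]},
            "object": {"key": [{"prefix": key_prefix}]},
        },
    }


def _rule_matches(rule, value) -> bool:
    # A dict rule is a prefix filter; anything else must match exactly.
    if isinstance(rule, dict):
        return isinstance(value, str) and value.startswith(rule["prefix"])
    return value == rule


def matches(pattern: dict, event: dict) -> bool:
    """Minimal local matcher covering only exact values and prefix rules."""
    for field, expected in pattern.items():
        actual = event.get(field)
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif not any(_rule_matches(rule, actual) for rule in expected):
            return False
    return True


# Hypothetical bucket layout: one prefix per workflow.
training_pattern = s3_object_created_pattern("example-forecast-data", "training/")
inference_pattern = s3_object_created_pattern("example-forecast-data", "inference/")

sample_event = {
    "source": "aws.s3",
    "detail-type": "Object Created",
    "detail": {
        "bucket": {"name": "example-forecast-data"},
        "object": {"key": "inference/2024-w20/part-0001.parquet"},
    },
}
print(matches(training_pattern, sample_event), matches(inference_pattern, sample_event))
```

Each pattern would be attached to a rule whose target is the corresponding Step Functions state machine, so data arrival alone decides which workflow starts.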

This automated workflow streamlines the entire process, from data ingestion to inference, reducing manual interventions and minimizing the risk of errors. By using AWS services such as Amazon S3, EventBridge, SageMaker, and Step Functions, we were able to orchestrate the end-to-end MLOps lifecycle efficiently and reliably.

Seamless integration of experiments

To allow for simple model experimentation, we created SageMaker notebooks that use the Amazon SageMaker SDK to launch SageMaker training and processing jobs. The notebooks use the same Docker images (SageMaker Studio notebook kernels) as the ones used in CI/CD workflows all the way to production. With these notebooks, applied scientists can bring their own code and connect to different data sources, while also experimenting with different instance sizes by scaling up or down computation and memory requirements. The experimentation setup reflects the production workflows.

Conclusion

In this post, we described how MLOps was streamlined, in a collaboration between Zalando and AWS Professional Services, with the objective of improving discount steering at Zalando.

The MLOps best practices implemented for forecast model training and inference have provided Zalando with a flexible and scalable architecture with reduced engineering complexity.

The implemented architecture enables Zalando’s team to conduct large-scale inference, with frequent experimentation and decreased risks of missing weekly SLOs.

Templatization and automation are expected to provide engineers with weekly savings of 3–4 hours per ML model in operations and maintenance tasks. Additionally, the transition from data science experimentation into model productionization has been streamlined.

To learn more about ML streamlining, experimentation, and scalability, refer to the following blog posts:

References

- Eleanor, L., R. Brian, K. Jalaj, and D. A. Little. 2022. “Promotheus: An End-to-End Machine Learning Framework for Optimizing Markdown in Online Fashion E-commerce.” arXiv. https://arxiv.org/abs/2207.01137.

- Kunz, M., S. Birr, M. Raslan, L. Ma, Z. Li, A. Gouttes, M. Koren, et al. 2023. “Deep Learning based Forecasting: a case study from the online fashion industry.” In Forecasting with Artificial Intelligence: Theory and Applications (Switzerland), 2023.

- Streeck, R., T. Gellert, A. Schmitt, A. Dipkaya, V. Fux, T. Januschowski, and T. Berthold. 2024. “Tricks from the Trade for Large-Scale Markdown Pricing: Heuristic Cut Generation for Lagrangian Decomposition.” arXiv. https://arxiv.org/abs/2404.02996#.

- Sul, Inki. 2023. “Customer-centric Pricing: Maximizing Revenue Through Understanding Customer Behavior.” The University of Texas at Dallas. https://utd-ir.tdl.org/items/a2b9fde1-aa17-4544-a16e-c5a266882dda.

About the Authors

Mones Raslan is an Applied Scientist at Zalando’s Pricing Platform with a background in applied mathematics. His work encompasses the development of business-relevant and scalable forecasting models, stretching from prototyping to deployment. In his spare time, Mones enjoys operatic singing and scuba diving.

Ravi Sharma is a Senior Software Engineer at Zalando’s Pricing Platform, bringing experience across diverse domains such as football betting, radio astronomy, healthcare, and ecommerce. His broad technical expertise enables him to deliver robust and scalable solutions consistently. Outside work, he enjoys nature hikes, table tennis, and badminton.

Adele Gouttes is a Senior Applied Scientist, with experience in machine learning, time series forecasting, and causal inference. She has experience developing products end to end, from the initial discussions with stakeholders to production, and creating technical roadmaps for cross-functional teams. Adele plays music and enjoys gardening.

Irem Gokcek is a Data Architect on the AWS Professional Services team, with expertise spanning both analytics and AI/ML. She has worked with customers from various industries, such as retail, automotive, manufacturing, and finance, to build scalable data architectures and generate valuable insights from the data. In her free time, she is passionate about swimming and painting.

Jean-Michel Lourier is a Senior Data Scientist within AWS Professional Services. He leads teams implementing data-driven applications side by side with AWS customers to generate business value out of their data. He’s passionate about diving into tech and learning about AI, machine learning, and their business applications. He is also a cycling enthusiast.

Junaid Baba, a Senior DevOps Consultant with AWS Professional Services, has expertise in machine learning, generative AI operations, and cloud-centered architectures. He applies these skills to design scalable solutions for clients in the global retail and financial services sectors. In his spare time, Junaid spends quality time with his family and finds joy in hiking adventures.

Luis Bustamante is a Senior Engagement Manager within AWS Professional Services. He helps customers accelerate their journey to the cloud through expertise in digital transformation, cloud migration, and IT remote delivery. He enjoys traveling and learning about historical events.

Viktor Malesevic is a Senior Machine Learning Engineer within AWS Professional Services, leading teams to build advanced machine learning solutions in the cloud. He’s passionate about making AI impactful, overseeing the entire process from modeling to production. In his spare time, he enjoys surfing, cycling, and traveling.
