AWS offers powerful generative AI services, including Amazon Bedrock, which lets organizations create tailored use cases such as AI chat-based assistants that give answers based on knowledge contained in the customers’ documents, and much more. Many businesses want to integrate these cutting-edge AI capabilities with their existing collaboration tools, such as Google Chat, to enhance productivity and decision-making processes.

This post shows how you can implement an AI-powered business assistant, such as a custom Google Chat app, using the power of Amazon Bedrock. The solution integrates large language models (LLMs) with your organization’s data and provides an intelligent chat assistant that understands conversation context and provides relevant, interactive responses directly within the Google Chat interface.

This solution showcases how to bridge the gap between Google Workspace and AWS services, offering a practical approach to enhancing employee efficiency through conversational AI. By implementing this architectural pattern, organizations that use Google Workspace can empower their workforce to access groundbreaking AI solutions powered by Amazon Web Services (AWS) and make informed decisions without leaving their collaboration tool.

With this solution, you can interact directly with the chat assistant powered by AWS from your Google Chat environment, as shown in the following example.

Solution overview

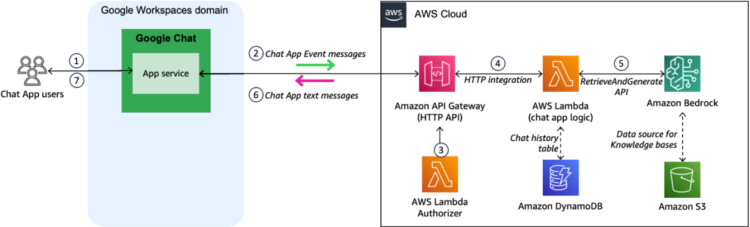

We use the following key services to build this intelligent chat assistant:

- Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies such as AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon through a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI.

- AWS Lambda, a serverless computing service, lets you handle the application logic, processing requests, and interaction with Amazon Bedrock.

- Amazon DynamoDB lets you store session memory data to maintain context across conversations.

- Amazon API Gateway lets you create a secure API endpoint for the custom Google Chat app to communicate with our AWS-based solution.

The following figure illustrates the high-level design of the solution.

The workflow includes the following steps:

- The process begins when a user sends a message through Google Chat, either in a direct message or in a chat space where the application is installed.

- The custom Google Chat app, configured for HTTP integration, sends an HTTP request to an API Gateway endpoint. This request contains the user’s message and relevant metadata.

- Before processing the request, a Lambda authorizer function associated with the API Gateway authenticates the incoming message. This verifies that only legitimate requests from the custom Google Chat app are processed.

- After it’s authenticated, the request is forwarded to another Lambda function that contains our core application logic. This function is responsible for interpreting the user’s request and formulating an appropriate response.

- The Lambda function interacts with Amazon Bedrock through its runtime APIs, using either the RetrieveAndGenerate API that connects to a knowledge base, or the Converse API to chat directly with an LLM available on Amazon Bedrock. This also allows the Lambda function to search through the organization’s knowledge base and generate an intelligent, context-aware response using the power of LLMs. The Lambda function also uses a DynamoDB table to keep track of the conversation history, either directly with a user or within a Google Chat space.

- After receiving the generated response from Amazon Bedrock, the Lambda function sends this answer back through API Gateway to the Google Chat app.

- Finally, the AI-generated response appears in the user’s Google Chat interface, providing the answer to their question.
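The core of this workflow can be sketched as a single handler. This is a minimal illustration under stated assumptions, not the code from the accompanying repository: the function names, the `kb_id`, `model_id`, and `model_arn` parameters, and the assumption that API Gateway delivers the Google Chat event as a JSON string in `event["body"]` are all hypothetical. Session memory in DynamoDB is left out to keep the routing logic visible. The Bedrock clients are passed in as arguments so the logic can be exercised without AWS credentials.

```python
import json


def ask_knowledge_base(agent_runtime, kb_id, model_arn, question):
    """Answer from the knowledge base through the RetrieveAndGenerate API."""
    response = agent_runtime.retrieve_and_generate(
        input={"text": question},
        retrieveAndGenerateConfiguration={
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": kb_id,
                "modelArn": model_arn,
            },
        },
    )
    return response["output"]["text"]


def ask_model(bedrock_runtime, model_id, question):
    """Answer directly from an LLM through the Converse API."""
    response = bedrock_runtime.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": question}]}],
    )
    return response["output"]["message"]["content"][0]["text"]


def handle_chat_event(event, bedrock_runtime, agent_runtime,
                      model_id, model_arn, kb_id=""):
    """Turn one Google Chat HTTP event into a Google Chat text reply."""
    # Assumption: API Gateway passes the Chat event as a JSON string in "body".
    chat_event = json.loads(event["body"])
    question = chat_event["message"]["text"]

    # Use the knowledge base when one is configured, otherwise the bare model.
    if kb_id:
        answer = ask_knowledge_base(agent_runtime, kb_id, model_arn, question)
    else:
        answer = ask_model(bedrock_runtime, model_id, question)

    # Google Chat renders a JSON body with a "text" field as the app's reply.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"text": answer}),
    }
```

Inside Lambda, the two clients would typically be created once at module load with `boto3.client("bedrock-runtime")` and `boto3.client("bedrock-agent-runtime")` and then passed to `handle_chat_event`.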

This architecture allows for a seamless integration between Google Workspace and AWS services, creating an AI-driven assistant that enhances information accessibility within the familiar Google Chat environment. You can customize this architecture to connect other solutions that you develop in AWS to Google Chat.

In the following sections, we explain how to deploy this architecture.

Prerequisites

To implement the solution outlined in this post, you must have the following:

- A Linux or MacOS development environment with at least 20 GB of free disk space. It can be a local machine or a cloud instance. If you use an AWS Cloud9 instance, make sure you have increased the disk size to 20 GB.

- The AWS Command Line Interface (AWS CLI) installed on your development environment. This tool lets you interact with AWS services through command line commands.

- An AWS account and an AWS Identity and Access Management (IAM) principal with sufficient permissions to create and manage the resources needed for this application. If you don’t have an AWS account, refer to How do I create and activate a new Amazon Web Services account? To configure the AWS CLI with the relevant credentials, you typically set up an AWS access key ID and secret access key for a designated IAM user with appropriate permissions.

- Access to Amazon Bedrock FMs, which you must request. In this post, we use either Anthropic’s Claude Sonnet 3 or Amazon Titan Text G1 Premier available in Amazon Bedrock, but you can also choose other models that are supported for Amazon Bedrock knowledge bases.

- Optionally, an Amazon Bedrock knowledge base created in your account, which lets you integrate your own documents into your generative AI applications. If you don’t have an existing knowledge base, refer to Create an Amazon Bedrock knowledge base. Alternatively, the solution proposes an option without a knowledge base, with answers generated only by the FM on the backend.

- A Business or Enterprise Google Workspace account with access to Google Chat. You also need a Google Cloud project with billing enabled. To check that an existing project has billing enabled, see Verify the billing status of your projects.

- Docker installed on your development environment.

Deploy the solution

The application presented in this post is available in the accompanying GitHub repository and provided as an AWS Cloud Development Kit (AWS CDK) project. Complete the following steps to deploy the AWS CDK project in your AWS account:

- Clone the GitHub repository on your local machine.

- Create a Python virtual environment for the project. This project is set up like a standard Python project. We recommend that you create a virtual environment within this project, stored under `.venv`. To manually create a virtual environment on MacOS and Linux, run `python3 -m venv .venv`.

- After the initialization process is complete and the virtual environment is created, activate your virtual environment by running `source .venv/bin/activate`.

- Install the Python package dependencies that are needed to build and deploy the project. In the root directory, run `pip install -r requirements.txt`.

- Run the `cdk bootstrap` command to prepare an AWS environment for deploying the AWS CDK application.

- Run the script `init-script.bash`.

This script prompts you for the following:

- The Amazon Bedrock knowledge base ID to associate with your Google Chat app (refer to the prerequisites section). Leave this blank if you decide not to use an existing knowledge base.

- Which LLM you want to use in Amazon Bedrock for text generation. For this solution, you can choose between Anthropic’s Claude Sonnet 3 and Amazon Titan Text G1 Premier.

The following screenshot shows the input variables to the `init-script.bash` script.

The script deploys the AWS CDK project in your account. After it runs successfully, it outputs the parameter `ApiEndpoint`, whose value designates the invoke URL for the HTTP API endpoint deployed as part of this project. Note the value of this parameter because you use it later in the Google Chat app configuration.

The following screenshot shows the output of the `init-script.bash` script.

You can also find this parameter on the AWS CloudFormation console, on the stack’s Outputs tab.
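If you prefer to read the output programmatically, the same value is available from the CloudFormation `DescribeStacks` API. The following is a minimal sketch: the helper names are hypothetical, and the stack name is whatever the AWS CDK project created in your account (it is not given in this post, so it is left as a parameter).

```python
def get_stack_output(describe_stacks_response, output_key):
    """Return one output value from a CloudFormation DescribeStacks response."""
    for stack in describe_stacks_response.get("Stacks", []):
        for output in stack.get("Outputs", []):
            if output["OutputKey"] == output_key:
                return output["OutputValue"]
    raise KeyError(f"Output {output_key!r} not found")


def fetch_api_endpoint(stack_name, cloudformation=None):
    """Look up the ApiEndpoint output of the deployed stack."""
    if cloudformation is None:
        # Imported lazily so the parsing helper above has no AWS dependency.
        import boto3
        cloudformation = boto3.client("cloudformation")
    response = cloudformation.describe_stacks(StackName=stack_name)
    return get_stack_output(response, "ApiEndpoint")
```

Calling `fetch_api_endpoint("<your-stack-name>")` with valid AWS credentials returns the invoke URL that you enter later as the App URL.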

Register a new app in Google Chat

To integrate the AWS-powered chat assistant into Google Chat, you create a custom Google Chat app. Google Chat apps are extensions that bring external services and resources directly into the Google Chat environment. These apps can participate in direct messages, group conversations, or dedicated chat spaces, allowing users to access information and take actions without leaving their chat interface.

For our AI-powered business assistant, we create an interactive custom Google Chat app that uses the HTTP integration method. This approach allows our app to receive and respond to user messages in real time, providing a seamless conversational experience.

After you have deployed the AWS CDK stack in the previous section, complete the following steps to register a Google Chat app in the Google Cloud portal:

- Open the Google Cloud portal and log in with your Google account.

- Search for “Google Chat API” and navigate to the Google Chat API page, which lets you build Google Chat apps to integrate your services with Google Chat.

- If this is your first time using the Google Chat API, choose ACTIVATE. Otherwise, choose MANAGE.

- On the Configuration tab, under Application info, provide the following information, as shown in the following screenshot:

  - For App name, enter an app name (for example, `bedrock-chat`).

  - For Avatar URL, enter the URL for your app’s avatar image. As a default, you can provide the Google Chat product icon.

  - For Description, enter a description of the app (for example, `Chat App with Amazon Bedrock`).

- Under Interactive features, turn on Enable Interactive features.

- Under Functionality, select Receive 1:1 messages and Join spaces and group conversations, as shown in the following screenshot.

- Under Connection settings, provide the following information:

  - Select App URL.

  - For App URL, enter the invoke URL associated with the deployment stage of the HTTP API gateway. This is the `ApiEndpoint` parameter that you noted at the end of the deployment of the AWS CDK template.

  - For Authentication Audience, select App URL, as shown in the following screenshot.

- Under Visibility, select Make this Chat app available to specific people and groups in your organization, and provide email addresses for the individuals and groups who will be authorized to use your app. You need to add at least your own email if you want to access the app.

- Choose Save.

The following animation illustrates these steps on the Google Cloud console.

After you complete these steps, the new Amazon Bedrock chat app should be accessible on the Google Chat console for the people or groups that you authorized in your Google Workspace.

To dispatch interaction events to the solution deployed in this post, Google Chat sends requests to your API Gateway endpoint. To let you verify the authenticity of these requests, Google Chat includes a bearer token in the Authorization header of every HTTPS request to your endpoint. The Lambda authorizer function provided with this solution uses the Google OAuth client library to verify that the bearer token was issued by Google Chat and targeted at your specific app. You can further customize the Lambda authorizer function to implement additional control rules based on the User or Space objects included in the request from Google Chat to your API Gateway endpoint. This lets you fine-tune access control, for example, by restricting certain features to specific users or limiting the app’s functionality in specific chat spaces.
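The token check described above might look like the following sketch. It is not the authorizer shipped in the repository. It assumes the Authentication Audience is set to App URL (so the token is a Google-signed ID token whose `email` claim is `chat@system.gserviceaccount.com`), that the `google-auth` package is bundled with the function, that the API uses an HTTP API Lambda authorizer with simple responses, and that a hypothetical `APP_URL` environment variable holds the audience. The verifier is injectable so the decision logic can be tested offline.

```python
import os

# Service account that Google Chat uses to sign requests to HTTP Chat apps.
CHAT_ISSUER_EMAIL = "chat@system.gserviceaccount.com"


def verify_google_chat_token(token, audience):
    """Validate the ID token with the google-auth library; return its claims."""
    # Imported lazily so the rest of the module loads without google-auth.
    from google.auth.transport import requests
    from google.oauth2 import id_token
    return id_token.verify_oauth2_token(token, requests.Request(), audience)


def is_authorized(headers, audience, verify=verify_google_chat_token):
    """Return True only for a valid bearer token issued for Google Chat."""
    # HTTP API payload format 2.0 delivers header names in lowercase.
    scheme, _, token = headers.get("authorization", "").partition(" ")
    if scheme.lower() != "bearer" or not token:
        return False
    try:
        claims = verify(token, audience)
    except Exception:
        # Deny on any verification failure (bad signature, expiry, audience).
        return False
    return claims.get("email") == CHAT_ISSUER_EMAIL


def lambda_handler(event, context):
    # APP_URL is a hypothetical variable holding the API Gateway invoke URL.
    audience = os.environ["APP_URL"]
    return {"isAuthorized": is_authorized(event.get("headers", {}), audience)}
```

Additional rules based on the User or Space objects would go after the token check, for example by comparing the sender's email in the request body against an allow list.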

Converse with your custom Google Chat app

You can now converse with the new app within your Google Chat interface. Connect to Google Chat with an email that you authorized during the configuration of your app and initiate a conversation by finding the app:

- Choose New chat in the chat pane, then enter the name of the application (`bedrock-chat`) in the search field.

- Choose Chat and enter a natural language phrase to interact with the application.

Although we previously demonstrated a usage scenario that involves a direct chat with the Amazon Bedrock application, you can also invoke the application from within a Google Chat space, as illustrated in the following demo.

Customize the solution

In this post, we used Amazon Bedrock to power the chat-based assistant. However, you can customize the solution to use a variety of AWS services and create a solution that fits your specific business needs.

To customize the application, complete the following steps:

- Edit the file `lambda/lambda-chat-app/lambda-chatapp-code.py` in the GitHub repository you cloned to your local machine during deployment.

- Implement your business logic in this file.

The code runs in a Lambda function. Each time a request is processed, Lambda runs the `lambda_handler` function. When Google Chat sends a request, the `lambda_handler` function calls the `handle_post` function.

- Replace the body of the `handle_post` function with your own logic.

- Save your file, then run `cdk deploy` in your terminal to deploy your new code.

The deployment should take about a minute. When it’s complete, you can go to Google Chat and test your new business logic. The following screenshot shows an example chat.

As the image shows, your function gets the user message and a space name. You can use this space name as a unique ID for the conversation, which lets you manage history.
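A replacement `handle_post` along these lines might look like the following sketch. It is an illustration, not the listing from the repository: the `ConversationStore` class, the `space_name` and `turns` attribute names, the extra `store` argument, and the assumption that the Google Chat event arrives as a JSON string in `event["body"]` are all hypothetical. The store wraps a DynamoDB `Table` resource and only relies on its `get_item` and `put_item` calls.

```python
import json


class ConversationStore:
    """Keeps chat turns in a DynamoDB table keyed by the Google Chat space name."""

    def __init__(self, table, max_turns=10):
        self.table = table          # boto3 DynamoDB Table resource, or a stand-in
        self.max_turns = max_turns  # cap the history that is kept per space

    def load(self, space_name):
        """Return the stored turns for a space, or an empty list."""
        item = self.table.get_item(Key={"space_name": space_name}).get("Item")
        return item["turns"] if item else []

    def append(self, space_name, role, text):
        """Add one turn, trim to max_turns, and write the history back."""
        turns = self.load(space_name) + [{"role": role, "text": text}]
        turns = turns[-self.max_turns:]
        self.table.put_item(Item={"space_name": space_name, "turns": turns})
        return turns


def handle_post(event, store):
    """Read the user message and space name, record them, and reply."""
    chat_event = json.loads(event["body"])
    user_message = chat_event["message"]["text"]
    space_name = chat_event["space"]["name"]  # for example "spaces/AAAA..."

    store.append(space_name, "user", user_message)
    # Placeholder business logic: echo the message back to the user.
    reply = f"You said '{user_message}' in {space_name}."
    store.append(space_name, "assistant", reply)

    return {"statusCode": 200, "body": json.dumps({"text": reply})}
```

The read-then-write in `append` is not atomic, so two messages arriving at the same moment in a busy space could overwrite each other; a conditional write or a list-append update expression would be a more robust choice for production use.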

As you become more familiar with the solution, you may want to explore advanced Amazon Bedrock features to significantly expand its capabilities and make it more robust and versatile. Consider integrating Amazon Bedrock Guardrails to implement safeguards customized to your application requirements and responsible AI policies. Consider also expanding the assistant’s capabilities through function calling, to perform actions on behalf of users, such as scheduling meetings or initiating workflows. You could also use Amazon Bedrock Prompt Flows to accelerate the creation, testing, and deployment of workflows through an intuitive visual builder. For more advanced interactions, you could explore implementing Amazon Bedrock Agents capable of reasoning about complex problems, making decisions, and executing multistep tasks autonomously.
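As one example of these extensions, a guardrail can be attached to a Converse API call through its `guardrailConfig` parameter. The following is a minimal sketch, assuming you have already created a guardrail; the helper name and its arguments are hypothetical, and the guardrail ID and version are values from your own account.

```python
def build_converse_request(model_id, user_text,
                           guardrail_id=None, guardrail_version="DRAFT"):
    """Build keyword arguments for the Converse API, optionally with a guardrail."""
    request = {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": user_text}]}],
    }
    if guardrail_id:
        # Ask Amazon Bedrock to apply the guardrail to this exchange.
        request["guardrailConfig"] = {
            "guardrailIdentifier": guardrail_id,
            "guardrailVersion": guardrail_version,
        }
    return request
```

The resulting dictionary can be passed to the Bedrock runtime client as `bedrock_runtime.converse(**build_converse_request(...))`.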

Performance optimization

The serverless architecture used in this post provides a scalable solution out of the box. As your user base grows or if you have specific performance requirements, there are several ways to further optimize performance. You can implement API caching to speed up repeated requests or use provisioned concurrency for Lambda functions to eliminate cold starts. To overcome API Gateway timeout limitations in scenarios requiring longer processing times, you can increase the integration timeout on API Gateway, or you might replace it with an Application Load Balancer, which allows for extended connection durations. You can also fine-tune your choice of Amazon Bedrock model to balance accuracy and speed. Finally, Provisioned Throughput in Amazon Bedrock lets you provision a higher level of throughput for a model at a fixed cost.

Clean up

In this post, you deployed a solution that lets you interact directly with a chat assistant powered by AWS from your Google Chat environment. The architecture incurs usage costs for several AWS services. First, you will be charged for model inference and for the vector databases you use with Amazon Bedrock Knowledge Bases. AWS Lambda costs are based on the number of requests and compute time, and Amazon DynamoDB charges depend on read/write capacity units and storage used. Additionally, Amazon API Gateway incurs charges based on the number of API calls and data transfer. For more details about pricing, refer to Amazon Bedrock pricing.

There might also be costs associated with using Google services. For detailed information about potential charges related to Google Chat, refer to the Google Chat product documentation.

To avoid unnecessary costs, clean up the resources created in your AWS environment when you’re finished exploring this solution. Use the `cdk destroy` command to delete the AWS CDK stack previously deployed in this post. Alternatively, open the AWS CloudFormation console and delete the stack you deployed.

Conclusion

In this post, we demonstrated a practical solution for creating an AI-powered business assistant for Google Chat. This solution seamlessly integrates Google Workspace with AWS-hosted data by using LLMs on Amazon Bedrock, Lambda for application logic, DynamoDB for session management, and API Gateway for secure communication. By implementing this solution, organizations can provide their workforce with a streamlined way to access AI-driven insights and knowledge bases directly within their familiar Google Chat interface, enabling natural language interaction and data-driven discussions without the need to switch between different applications or platforms.

Additionally, we showcased how to customize the application to implement tailored business logic that can use other AWS services. This flexibility lets you tailor the assistant’s capabilities to your specific requirements, providing a seamless integration with your existing AWS infrastructure and data sources.

AWS offers a comprehensive suite of cutting-edge AI services to meet your organization’s unique needs, including Amazon Bedrock and Amazon Q. Now that you know how to integrate AWS services with Google Chat, you can explore their capabilities and build advanced applications!

About the Authors

Nizar Kheir is a Senior Solutions Architect at AWS with more than 15 years of experience spanning various industry segments. He currently works with public sector customers in France and across EMEA to help them modernize their IT infrastructure and foster innovation by harnessing the power of the AWS Cloud.

Lior Perez is a Principal Solutions Architect on the Construction team based in Toulouse, France. He enjoys supporting customers in their digital transformation journey, using big data, machine learning, and generative AI to help solve their business challenges. He’s also personally passionate about robotics and Internet of Things (IoT), and he constantly looks for new ways to use technologies for innovation.
