Large language models (LLMs) have raised the bar for human-computer interaction, where users now expect to communicate with their applications through natural language. Beyond simple language understanding, real-world applications require managing complex workflows, connecting to external data, and coordinating multiple AI capabilities. Imagine scheduling a doctor’s appointment where an AI agent checks your calendar, accesses your provider’s system, verifies insurance, and confirms everything in one go, with no more app-switching or hold times. In these real-world scenarios, agents can be a game changer, delivering more customized generative AI applications.

LLM agents serve as decision-making systems for application control flow. However, these systems face several operational challenges during scaling and development. The primary issues include tool selection inefficiency, where agents with access to numerous tools struggle with optimal tool selection and sequencing; context management limitations that prevent single agents from effectively managing increasingly complex contextual information; and specialization requirements, as complex applications demand diverse expertise areas such as planning, research, and analysis. The solution lies in implementing a multi-agent architecture, which involves decomposing the main system into smaller, specialized agents that operate independently. Implementation options range from basic prompt-LLM combinations to sophisticated ReAct (Reasoning and Acting) agents, allowing for more efficient task distribution and specialized handling of different application components. This modular approach enhances system manageability and allows for better scaling of LLM-based applications while maintaining functional efficiency through specialized components.

This post demonstrates how to integrate the open-source multi-agent framework LangGraph with Amazon Bedrock. It explains how to use LangGraph and Amazon Bedrock to build powerful, interactive multi-agent applications that use graph-based orchestration.

AWS has introduced a multi-agent collaboration capability for Amazon Bedrock Agents, enabling developers to build, deploy, and manage multiple AI agents working together on complex tasks. This feature allows for the creation of specialized agents that handle different aspects of a process, coordinated by a supervisor agent that breaks down requests, delegates tasks, and consolidates outputs. This approach improves task success rates, accuracy, and productivity, especially for complex, multi-step tasks.

Challenges with multi-agent systems

In a single-agent system, planning involves the LLM agent breaking down tasks into a sequence of small tasks, whereas a multi-agent system must have workflow management involving task distribution across multiple agents. Unlike single-agent environments, multi-agent systems require a coordination mechanism where each agent must maintain alignment with others while contributing to the overall objective. This introduces unique challenges in managing inter-agent dependencies, resource allocation, and synchronization, necessitating robust frameworks that maintain system-wide consistency while optimizing performance.

Memory management in AI systems differs between single-agent and multi-agent architectures. Single-agent systems use a three-tier structure: short-term conversational memory, long-term historical storage, and external data sources like Retrieval Augmented Generation (RAG). Multi-agent systems require more advanced frameworks to manage contextual data, track interactions, and synchronize historical records across agents. These systems must handle real-time interactions, context synchronization, and efficient data retrieval, necessitating careful design of memory hierarchies, access patterns, and inter-agent sharing.

Agent frameworks are essential for multi-agent systems because they provide the infrastructure for coordinating autonomous agents, managing communication and resources, and orchestrating workflows. Agent frameworks alleviate the need to build these complex components from scratch.

LangGraph, part of LangChain, orchestrates agentic workflows through a graph-based architecture that handles complex processes and maintains context across agent interactions. It uses supervisory control patterns and memory systems for coordination.

LangGraph Studio enhances development with graph visualization, execution monitoring, and runtime debugging capabilities. The integration of LangGraph with Amazon Bedrock empowers you to take advantage of the strengths of multiple agents seamlessly, fostering a collaborative environment that enhances the efficiency and effectiveness of LLM-based systems.

Understanding LangGraph and LangGraph Studio

LangGraph implements state machines and directed graphs for multi-agent orchestration. The framework provides fine-grained control over both the flow and state of your agent applications. LangGraph models agent workflows as graphs. You define the behavior of your agents using three key components:

- State – A shared data structure that represents the current snapshot of your application.

- Nodes – Python functions that encode the logic of your agents.

- Edges – Python functions that determine which Node to execute next based on the current state. They can be conditional branches or fixed transitions.
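To make these three components concrete, the following is a minimal, dependency-free Python sketch of the same idea. It deliberately does not use LangGraph’s API: the names `run_graph`, `call_model`, `call_tools`, and `route_after_model` are hypothetical, and the "model" is a stub that requests one tool call and then finishes.

```python
from typing import Callable, Dict, List, TypedDict

END = "__end__"  # sentinel node name, mirroring the __end__ marker in the graph


class State(TypedDict):
    """Shared snapshot that every node reads and returns."""
    messages: List[str]
    pending_tool: str  # empty string when no tool call is requested


def call_model(state: State) -> State:
    """Node: a stub model that asks for one tool call, then gives a final answer."""
    if not any(m.startswith("tool:") for m in state["messages"]):
        return {"messages": state["messages"] + ["model: need flight data"],
                "pending_tool": "search_flights"}
    return {"messages": state["messages"] + ["model: final answer"],
            "pending_tool": ""}


def call_tools(state: State) -> State:
    """Node: runs the requested (stub) tool and records its result."""
    result = f"tool: {state['pending_tool']} -> 3 flights found"
    return {"messages": state["messages"] + [result], "pending_tool": ""}


def route_after_model(state: State) -> str:
    """Conditional edge: go to the tools node if a call is pending, else stop."""
    return "tools" if state["pending_tool"] else END


NODES: Dict[str, Callable[[State], State]] = {"model": call_model, "tools": call_tools}
EDGES: Dict[str, Callable[[State], str]] = {
    "model": route_after_model,      # conditional branch
    "tools": lambda state: "model",  # fixed transition
}


def run_graph(state: State, entry: str = "model") -> State:
    """Run nodes and follow edges until the END sentinel is reached."""
    current = entry
    while current != END:
        state = NODES[current](state)
        current = EDGES[current](state)
    return state


final_state = run_graph({"messages": ["user: find me a flight"], "pending_tool": ""})
```

In this sketch the state is a plain dictionary, each node returns a new snapshot, and the edge functions only inspect the state to pick the next node, which is the division of responsibilities the list above describes.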

LangGraph implements a central persistence layer, enabling features that are common to most agent architectures, including:

- Memory – LangGraph persists arbitrary aspects of your application’s state, supporting memory of conversations and other updates within and across user interactions.

- Human-in-the-loop – Because state is checkpointed, execution can be interrupted and resumed, allowing for decisions, validation, and corrections at key stages through human input.

LangGraph Studio is an integrated development environment (IDE) specifically designed for AI agent development. It provides developers with powerful tools for visualization, real-time interaction, and debugging. The key features of LangGraph Studio are:

- Visual agent graphs – The IDE’s visualization tools allow developers to represent agent flows as intuitive visual graphs, making it easy to understand and modify complex system architectures.

- Real-time debugging – The ability to interact with agents in real time and modify responses mid-execution creates a more dynamic development experience.

- Stateful architecture – Support for stateful and adaptive agents within a graph-based architecture enables more sophisticated behaviors and interactions.

The following screenshot shows the nodes, edges, and state of a typical LangGraph agent workflow as viewed in LangGraph Studio.

Figure 1: LangGraph Studio UI

In the preceding example, the state begins with __start__ and ends with __end__. The nodes for invoking the model and tools are defined by you, and the edges tell you which paths the workflow can follow.

LangGraph Studio is available as a desktop application for macOS users. Alternatively, you can run a local in-memory development server that can be used to connect a local LangGraph application with a web version of the studio.

Solution overview

This example demonstrates the supervisor agentic pattern, where a supervisor agent coordinates multiple specialized agents. Each agent maintains its own scratchpad while the supervisor orchestrates communication and delegates tasks based on agent capabilities. This distributed approach improves efficiency by allowing agents to focus on specific tasks while enabling parallel processing and system scalability.

Let’s walk through an example with the following user query: “Suggest a travel destination and search flight and hotel for me. I want to travel on 15-March-2025 for 5 days.” The workflow consists of the following steps:

- The Supervisor Agent receives the initial query and breaks it down into sequential tasks:

  - Destination recommendation required.

  - Flight search needed for March 15, 2025.

  - Hotel booking required for 5 days.

- The Destination Agent begins its work by accessing the user’s stored profile. It searches its historical database, analyzing patterns from similar user profiles to recommend the destination. Then it passes the destination back to the Supervisor Agent.

- The Supervisor Agent forwards the chosen destination to the Flight Agent, which searches available flights for the given date.

- The Supervisor Agent activates the Hotel Agent, which searches for hotels in the destination city.

- The Supervisor Agent compiles the recommendations into a comprehensive travel plan, presenting the user with a complete itinerary including destination rationale, flight options, and hotel suggestions.
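The steps above can be sketched in plain Python as follows. This is an illustration of the delegation order only: each agent is a stub function with a hard-coded or templated return value, whereas in the actual solution each would be an LLM-backed LangGraph graph. All function names, the `user-123` identifier, and the returned strings are assumptions made for this sketch.

```python
from typing import Dict


def destination_agent(user_id: str) -> str:
    """Stub: the real agent would consult the user's profile and similar profiles."""
    return "Lisbon"  # hard-coded placeholder destination


def flight_agent(destination: str, date: str) -> str:
    """Stub: the real agent would search available flights for the date."""
    return f"flights to {destination} on {date}"


def hotel_agent(destination: str, days: int) -> str:
    """Stub: the real agent would search hotels in the destination city."""
    return f"hotels in {destination} for {days} days"


def supervisor(user_id: str, date: str, days: int) -> Dict[str, str]:
    """Delegate to each specialized agent in turn and compile one plan."""
    plan: Dict[str, str] = {}
    # 1. Ask the Destination Agent for a recommendation.
    plan["destination"] = destination_agent(user_id)
    # 2. Forward the chosen destination to the Flight Agent.
    plan["flights"] = flight_agent(plan["destination"], date)
    # 3. Activate the Hotel Agent for the same destination.
    plan["hotels"] = hotel_agent(plan["destination"], days)
    # 4. Return the consolidated itinerary.
    return plan


itinerary = supervisor(user_id="user-123", date="2025-03-15", days=5)
```

The supervisor here is a fixed sequence for readability; an LLM-driven supervisor would instead decide at each step which agent to call next based on the current state.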

The following figure shows a multi-agent workflow of how these agents connect to each other and which tools are involved with each agent.

Figure 2: Multi-agent workflow

Prerequisites

You will need the following prerequisites before you can proceed with this solution. For this post, we use the us-west-2 AWS Region. For details on available Regions, see Amazon Bedrock endpoints and quotas.

Core components

Each agent is structured with two main components:

- graph.py – This script defines the agent’s workflow and decision-making logic. It implements the LangGraph state machine for managing agent behavior and configures the communication flow between different components. For example:

  - The Flight Agent’s graph manages the flow between chat and tool operations.

  - The Hotel Agent’s graph handles conditional routing between search, booking, and modification operations.

  - The Supervisor Agent’s graph orchestrates the overall multi-agent workflow.

- tools.py – This script contains the concrete implementations of agent capabilities. It implements the business logic for each operation and handles data access and manipulation. It provides specific functionalities like:

  - Flight tools: search_flights, book_flights, change_flight_booking, cancel_flight_booking.

  - Hotel tools: suggest_hotels, book_hotels, change_hotel_booking, cancel_hotel_booking.

This separation between graph (workflow) and tools (implementation) allows for a clean architecture where the decision-making process is separate from the actual execution of tasks. The agents communicate through a state-based graph system implemented using LangGraph, where the Supervisor Agent directs the flow of data and tasks between the specialized agents.

To set up Amazon Bedrock with LangGraph, refer to the following GitHub repo. The high-level steps are as follows:

- Install the required packages:

These packages are essential for Amazon Bedrock integration:

  - boto3: AWS SDK for Python, handles AWS service communication

  - langchain-aws: Provides LangChain integrations for AWS services

- Import the modules:

- Create an LLM object:
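The code for these steps is not reproduced in this text. As a rough sketch only, assuming the `boto3` and `langchain-aws` packages are installed (for example with `pip install boto3 langchain-aws`) and that your AWS credentials have access to a model in Amazon Bedrock, creating the LLM object might look like the following. The helper names `bedrock_llm_settings` and `create_llm` are hypothetical, and the model ID is deliberately left as a placeholder for you to supply.

```python
from typing import Any, Dict


def bedrock_llm_settings(model_id: str, region: str = "us-west-2",
                         temperature: float = 0.0) -> Dict[str, Any]:
    """Collect constructor arguments for the chat model (hypothetical helper)."""
    return {"model": model_id, "region_name": region, "temperature": temperature}


def create_llm(settings: Dict[str, Any]):
    """Build a ChatBedrockConverse chat model from the collected settings."""
    # Imported lazily so this module still loads where langchain-aws is absent.
    from langchain_aws import ChatBedrockConverse

    return ChatBedrockConverse(**settings)


# Placeholder model ID: replace it with a model you have access to in Bedrock.
settings = bedrock_llm_settings(model_id="<your-bedrock-model-id>")

# The calls below need valid AWS credentials and model access, so they are
# left commented out in this sketch:
# llm = create_llm(settings)
# response = llm.invoke("Suggest a travel destination")
```

Keeping the settings in a plain dictionary makes it straightforward to reuse the same model configuration across the supervisor and each specialized agent.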

LangGraph Studio configuration

This project uses a langgraph.json configuration file to define the application structure and dependencies. This file is essential for LangGraph Studio to understand how to run and visualize your agent graphs.

LangGraph Studio uses this file to build and visualize the agent workflows, allowing you to monitor and debug the multi-agent interactions in real time.
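The file contents are not shown in this text. As an illustration only, the following Python snippet builds a configuration of the general shape used by langgraph.json (`dependencies`, `graphs`, and `env` keys) and renders it as JSON. The graph names and module paths are hypothetical placeholders, not the repository’s actual layout.

```python
import json

config = {
    # Local packages or directories to install before loading the graphs.
    "dependencies": ["."],
    # "<graph name>": "<path to module>:<variable holding the compiled graph>"
    "graphs": {
        "supervisor_agent": "./src/supervisor_agent/graph.py:graph",
        "destination_agent": "./src/destination_agent/graph.py:graph",
        "flight_agent": "./src/flight_agent/graph.py:graph",
        "hotel_agent": "./src/hotel_agent/graph.py:graph",
    },
    # File holding environment variables such as the AWS Region.
    "env": ".env",
}

langgraph_json = json.dumps(config, indent=2)
print(langgraph_json)
```

Each entry under `graphs` is what appears as a selectable graph in the studio, so registering every agent separately lets you test agents in isolation before testing the supervisor.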

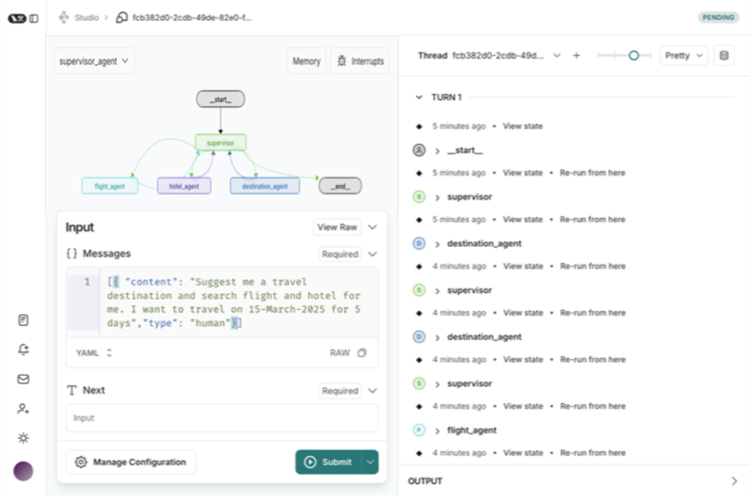

Testing and debugging

You’re now ready to test the multi-agent travel assistant. You can start the graph using the langgraph dev command. It starts the LangGraph API server in development mode with hot reloading and debugging capabilities. As shown in the following screenshot, the interface provides a straightforward way to select which graph you want to test through the dropdown menu at the top left. The Manage Configuration button at the bottom lets you set up specific testing parameters before you begin. This development environment provides everything you need to thoroughly test and debug your multi-agent system with real-time feedback and monitoring capabilities.

Figure 3: LangGraph Studio with Destination Agent recommendation

LangGraph Studio offers flexible configuration management through its intuitive interface. As shown in the following screenshot, you can create and manage multiple configuration versions (v1, v2, v3) for your graph execution. For example, in this scenario, we want to use user_id to fetch historical user information. This versioning system makes it simple to track and switch between different test configurations while debugging your multi-agent system.

Figure 4: Runnable configuration details

In the preceding example, we set up the user_id that tools can use to retrieve history or other details.

Let’s test the Planner Agent. This agent has the compare_and_recommend_destination tool, which can check past travel data and recommend travel destinations based on the user profile. We use user_id in the configuration so that it can be used by the tool.
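The tool’s actual implementation is not shown in this text. The following dependency-free sketch illustrates the general idea of a tool reading user_id from the run configuration, assuming the value arrives under a `configurable` key as is conventional for LangChain runnable configs. The `TRAVEL_HISTORY` data, the candidate cities, and the selection rule are all invented for illustration.

```python
from typing import Any, Dict, List

# Hypothetical in-memory travel history, keyed by user_id.
TRAVEL_HISTORY: Dict[str, List[str]] = {
    "user-123": ["Paris", "Rome"],
}

# Hypothetical pool of destinations to choose from.
CANDIDATES: List[str] = ["Paris", "Rome", "Lisbon"]


def compare_and_recommend_destination(config: Dict[str, Any]) -> str:
    """Stub tool: read user_id from the run config and pick a destination."""
    user_id = config.get("configurable", {}).get("user_id")
    if user_id is None:
        raise ValueError("user_id missing from configuration")
    visited = TRAVEL_HISTORY.get(user_id, [])
    # Recommend the first candidate the user has not visited yet.
    for city in CANDIDATES:
        if city not in visited:
            return city
    # Every candidate was already visited: fall back to the first one.
    return CANDIDATES[0]


recommendation = compare_and_recommend_destination(
    {"configurable": {"user_id": "user-123"}}
)
```

Passing user_id through configuration rather than through the conversation keeps it out of the prompt, so the model cannot alter which user’s history the tool reads.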

LangGraph has the concept of checkpoint memory, which is managed using a thread. The following screenshot shows that you can quickly manage threads in LangGraph Studio.
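As a conceptual aid, thread-scoped checkpointing can be pictured as a store that keeps a history of state snapshots per thread ID. The toy class below is a simplified sketch under that assumption and is not LangGraph’s checkpointer API; the class name, method names, and thread IDs are hypothetical.

```python
from collections import defaultdict
from typing import Any, Dict, List


class InMemoryCheckpoints:
    """Toy checkpoint store: one list of state snapshots per thread."""

    def __init__(self) -> None:
        self._threads: Dict[str, List[Dict[str, Any]]] = defaultdict(list)

    def save(self, thread_id: str, state: Dict[str, Any]) -> None:
        """Append a shallow copy of the state to the thread's history."""
        self._threads[thread_id].append(dict(state))

    def latest(self, thread_id: str) -> Dict[str, Any]:
        """Return the most recent snapshot, or an empty state for a new thread."""
        history = self._threads.get(thread_id)
        return dict(history[-1]) if history else {}


store = InMemoryCheckpoints()
store.save("thread-1", {"messages": ["Suggest a destination"]})
store.save("thread-1", {"messages": ["Suggest a destination", "How about Lisbon?"]})
store.save("thread-2", {"messages": ["Find hotels"]})

# Resuming thread-1 picks up its own latest state, independent of thread-2.
resumed = store.latest("thread-1")
```

Because each thread has its own history, separate conversations stay isolated, and an interrupted run can resume from its last saved snapshot.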

Figure 5: View graph state in the thread

In this example, destination_agent is using a tool; you can also check the tool’s output. Similarly, you can test flight_agent and hotel_agent to verify each agent.

When all the agents are working well, you’re ready to test the full workflow. You can evaluate the state and verify the input and output of each agent.

The following screenshot shows the full view of the Supervisor Agent with its sub-agents.

Figure 6: Supervisor Agent with full workflow

Considerations

Multi-agent architectures must consider agent coordination, state management, communication, output consolidation, and guardrails, as well as maintaining processing context, error handling, and orchestration. Graph-based architectures offer significant advantages over linear pipelines, enabling complex workflows with nonlinear communication patterns and clearer system visualization. These structures allow for dynamic pathways and adaptive communication, ideal for large-scale deployments with simultaneous agent interactions. They excel in parallel processing and resource allocation but require sophisticated setup and might demand higher computational resources. Implementing these systems necessitates careful planning of system topology, robust monitoring, and well-designed fallback mechanisms for failed interactions.

When implementing multi-agent architectures in your organization, it’s crucial to align with your company’s established generative AI operations and governance frameworks. Prior to deployment, verify alignment with your organization’s AI safety protocols, data handling policies, and model deployment guidelines. Although this architectural pattern offers significant benefits, its implementation should be tailored to fit within your organization’s specific AI governance structure and risk management frameworks.

Clean up

Delete any IAM roles and policies created specifically for this post. Delete the local copy of this post’s code. If you no longer need access to an Amazon Bedrock FM, you can remove access from it. For instructions, see Add or remove access to Amazon Bedrock foundation models.

Conclusion

The integration of LangGraph with Amazon Bedrock significantly advances multi-agent system development by providing a robust framework for sophisticated AI applications. This combination uses LangGraph’s orchestration capabilities and FMs in Amazon Bedrock to create scalable, efficient systems. It addresses challenges in multi-agent architectures through state management, agent coordination, and workflow orchestration, offering features like memory management, error handling, and human-in-the-loop capabilities. LangGraph Studio’s visualization and debugging tools enable efficient design and maintenance of complex agent interactions. This integration offers a powerful foundation for next-generation multi-agent systems, providing effective workflow handling, context maintenance, reliable results, and optimal resource utilization.

For the example code and demonstration discussed in this post, refer to the accompanying GitHub repository. You can also refer to the following GitHub repo for Amazon Bedrock multi-agent collaboration code samples.

About the Authors

Jagdeep Singh Soni is a Senior Partner Solutions Architect at AWS based in the Netherlands. He uses his passion for generative AI to help customers and partners build generative AI applications using AWS services. Jagdeep has 15 years of experience in innovation, experience engineering, digital transformation, cloud architecture, and ML applications.

Ajeet Tewari is a Senior Solutions Architect for Amazon Web Services. He works with enterprise customers to help them navigate their journey to AWS. His specialties include architecting and implementing scalable OLTP systems and leading strategic AWS initiatives.

Rupinder Grewal is a Senior AI/ML Specialist Solutions Architect with AWS. He currently focuses on serving of models and MLOps on Amazon SageMaker. Prior to this role, he worked as a Machine Learning Engineer building and hosting models. Outside of work, he enjoys playing tennis and biking on mountain trails.
