Information retrieval systems have powered the information age through their ability to crawl and sift through massive amounts of data and quickly return accurate and relevant results. These systems, such as search engines and databases, typically work by indexing on keywords and fields contained in data files.

However, much of our data in the digital age also comes in non-text formats, such as audio and video files. Finding relevant content usually requires searching through text-based metadata such as timestamps, which need to be manually added to these files. This can be hard to scale as the volume of unstructured audio and video files continues to grow.

Fortunately, the rise of artificial intelligence (AI) solutions that can transcribe audio and provide semantic search capabilities now offers more efficient ways to query content from audio files at scale. Amazon Transcribe is an AWS AI service that makes it straightforward to convert speech to text. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies through a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI.

In this post, we show how Amazon Transcribe and Amazon Bedrock can streamline the process to catalog, query, and search through audio programs, using an example from the AWS re:Think podcast series.

Answer overview

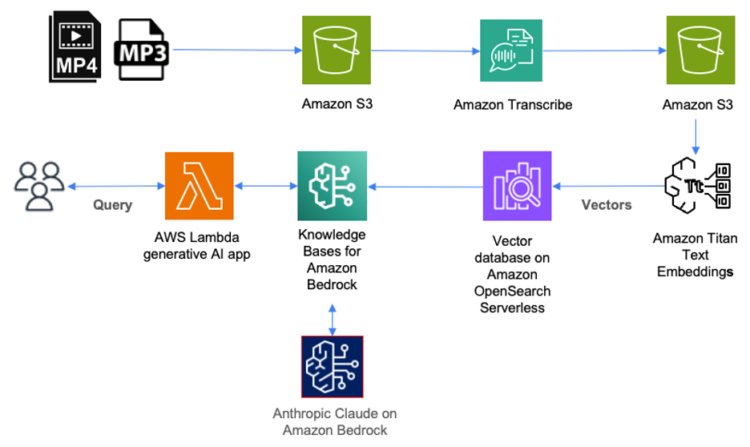

The next diagram illustrates how you need to use AWS providers to deploy an answer for cataloging, querying, and looking by content material saved in audio recordsdata.

In this solution, audio files stored in mp3 format are first uploaded to Amazon Simple Storage Service (Amazon S3). Video files (such as mp4) that contain audio in supported languages can also be uploaded to Amazon S3 as part of this solution. Amazon Transcribe then transcribes these files and stores the entire transcript in JSON format as an object in Amazon S3.

To catalog these files, each JSON file in Amazon S3 should be tagged with the corresponding episode title. This allows us to later retrieve the episode title for each query result.

Next, we use Amazon Bedrock to create numerical representations of the content inside each file. These numerical representations are also called embeddings, and they're stored as vectors inside a vector database that we can later query.

Amazon Bedrock is a fully managed service that makes FMs from leading AI startups and Amazon available through an API. Included with Amazon Bedrock is Knowledge Bases for Amazon Bedrock. As a fully managed service, Knowledge Bases for Amazon Bedrock makes it straightforward to set up a Retrieval Augmented Generation (RAG) workflow.

With Knowledge Bases for Amazon Bedrock, we first set up a vector database on AWS. Knowledge Bases for Amazon Bedrock can then automatically split the data files stored in Amazon S3 into chunks and create embeddings of each chunk using Amazon Titan on Amazon Bedrock. Amazon Titan is a family of high-performing FMs from Amazon. Included with Amazon Titan is Amazon Titan Text Embeddings, which we use to create the numerical representation of the text inside each chunk and store it in a vector database.
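Knowledge Bases for Amazon Bedrock performs this chunking for you; its default strategy splits text into chunks of roughly 300 tokens. To make the idea concrete, here is a simplified sketch of fixed-size chunking with overlap. It counts whitespace-separated words rather than model tokens, and the `chunk_words` function and its default sizes are hypothetical illustrations, not the algorithm Bedrock uses.

```python
def chunk_words(text: str, chunk_size: int = 300, overlap: int = 30) -> list[str]:
    """Split text into chunks of at most chunk_size words.

    Each chunk after the first repeats the last `overlap` words of the
    previous chunk, so a sentence cut at a boundary still appears whole
    in at least one chunk.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks: list[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks
```

Overlap trades a little duplicated storage for better odds that a relevant passage is embedded intact rather than split across two vectors.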

When a user queries the contents of the audio files through a generative AI application or AWS Lambda function, it makes an API call to Knowledge Bases for Amazon Bedrock. Knowledge Bases for Amazon Bedrock then orchestrates a call to the vector database to perform a semantic search, which returns the most relevant results. Next, Knowledge Bases for Amazon Bedrock augments the user's original query with these results in a prompt, which is sent to the large language model (LLM). Because the prompt now contains relevant context from the transcripts, the LLM returns results that are more accurate and relevant to the user query.
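Conceptually, the semantic search step ranks stored chunk embeddings by how close they are to the embedding of the user's query. The following sketch shows that ranking with brute-force cosine similarity over an in-memory dictionary. It is an illustration under that assumption only: a vector store such as OpenSearch Serverless uses approximate nearest-neighbor indexes rather than scanning every vector, and `top_k` is a hypothetical helper name.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float], chunk_vecs: dict[str, list[float]],
          k: int = 3) -> list[tuple[str, float]]:
    """Rank stored chunks by similarity to the query and keep the best k."""
    scored = [(chunk_id, cosine_similarity(query_vec, vec))
              for chunk_id, vec in chunk_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]
```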

Let's walk through an example of how you can catalog, query, and search through a library of audio files using these AWS AI services. For this post, we use episodes of the re:Think podcast series, which has over 20 episodes. Each episode is an audio program recorded in mp3 format. As we continue to add new episodes, we want to use AI services to make the task of querying and searching for specific content more scalable, without the need to manually add metadata for each episode.

Prerequisites

In addition to having access to AWS services through the AWS Management Console, you need a few other resources to deploy this solution.

First, you need a library of audio files to catalog, query, and search. For this post, we use episodes of the AWS re:Think podcast series.

To make API calls to Amazon Bedrock from our generative AI application, we use Python version 3.11.4 and the AWS SDK for Python (Boto3).

Transcribe audio files

The first task is to transcribe each mp3 file using Amazon Transcribe. For instructions on transcribing with the AWS Management Console or AWS CLI, refer to the Amazon Transcribe Developer Guide. Amazon Transcribe can create a transcript for each episode and store it as an S3 object in JSON format.
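As one way to script this step, the following sketch builds the parameters for the Transcribe `StartTranscriptionJob` API (`start_transcription_job` in Boto3). The audio key `audio/AI-Accelerators.mp3`, the `en-US` language code, and the `text/transcripts/` output prefix are assumptions for illustration, and the helper names are hypothetical. The client is passed in so that the request-building logic can be checked without AWS credentials.

```python
from pathlib import PurePosixPath


def build_transcription_request(input_bucket: str, audio_key: str,
                                output_bucket: str,
                                output_prefix: str = "text/transcripts/") -> dict:
    """Build keyword arguments for the Transcribe StartTranscriptionJob API."""
    audio = PurePosixPath(audio_key)
    return {
        # Job names must be unique in your account; here we reuse the file stem.
        "TranscriptionJobName": audio.stem,
        "Media": {"MediaFileUri": f"s3://{input_bucket}/{audio_key}"},
        "MediaFormat": audio.suffix.lstrip(".").lower(),  # "mp3", "mp4", ...
        "LanguageCode": "en-US",  # assumption: English-language episodes
        "OutputBucketName": output_bucket,
        # An OutputKey ending in .json is used as the output file name.
        "OutputKey": f"{output_prefix}{audio.stem}.json",
    }


def start_transcription(transcribe_client, request: dict) -> str:
    """Start the job and return its initial status (for example, IN_PROGRESS)."""
    response = transcribe_client.start_transcription_job(**request)
    return response["TranscriptionJob"]["TranscriptionJobStatus"]


# Usage (requires AWS credentials):
#   import boto3
#   req = build_transcription_request("rethinkpodcast", "audio/AI-Accelerators.mp3",
#                                     "rethinkpodcast")
#   start_transcription(boto3.client("transcribe"), req)
```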

Catalog audio files using tagging

To catalog each episode, we tag the S3 object for each episode with the corresponding episode title. For instructions on tagging objects in S3, refer to the Amazon Simple Storage Service User Guide. For example, we tag the S3 object AI-Accelerators.json with key = “title” and value = “Episode 20: AI Accelerators in the Cloud.”

The title is the only metadata we need to manually add for each audio file. There is no need to manually add timestamps for each chapter or section in order to later search for specific content.
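Tagging can also be scripted with the S3 `PutObjectTagging` API. This minimal sketch assumes the bucket name `rethinkpodcast` and the transcript key used later in this post; `build_title_tagging` and `tag_transcript` are hypothetical helper names.

```python
MAX_TAG_VALUE_LENGTH = 256  # S3 object tag values are limited to 256 characters


def build_title_tagging(title: str) -> dict:
    """Build the Tagging argument for PutObjectTagging with a "title" tag."""
    if len(title) > MAX_TAG_VALUE_LENGTH:
        raise ValueError("S3 tag values can be at most 256 characters long")
    return {"TagSet": [{"Key": "title", "Value": title}]}


def tag_transcript(s3_client, bucket: str, key: str, title: str) -> None:
    """Attach the episode title to a transcript object."""
    # PutObjectTagging replaces the object's whole tag set, so include any
    # other tags you want to keep in the same call.
    s3_client.put_object_tagging(Bucket=bucket, Key=key,
                                 Tagging=build_title_tagging(title))


# Usage (requires AWS credentials):
#   tag_transcript(boto3.client("s3"), "rethinkpodcast",
#                  "text/transcripts/AI-Accelerators.json",
#                  "Episode 20: AI Accelerators in the Cloud")
```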

Set up a vector database using Knowledge Bases for Amazon Bedrock

Next, we set up our fully managed RAG workflow using Knowledge Bases for Amazon Bedrock. For instructions on creating a knowledge base, refer to the Amazon Bedrock User Guide. We begin by specifying a data source. In our case, we choose the S3 bucket location where our transcripts in JSON format are stored.

Next, we select an embedding model. The embedding model converts each chunk of our transcript into an embedding. An embedding is a vector of numbers, and the meaning of each embedding depends on the model that produced it. In our example, we select Titan Text Embeddings v2 with a vector dimension of 1024.
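Knowledge Bases for Amazon Bedrock calls the embedding model for you during each sync. If you want to inspect what an embedding looks like, you can call the model directly with the Bedrock runtime `InvokeModel` API. This sketch assumes the model ID `amazon.titan-embed-text-v2:0` and the request fields `inputText`, `dimensions`, and `normalize`; verify them against the Titan Text Embeddings V2 parameter reference for your Region. The helper names are hypothetical.

```python
import json

TITAN_V2_MODEL_ID = "amazon.titan-embed-text-v2:0"


def titan_v2_request_body(text: str, dimensions: int = 1024) -> str:
    """Serialize a Titan Text Embeddings V2 request body."""
    # Titan Text Embeddings V2 supports 256, 512, or 1024 output dimensions.
    if dimensions not in (256, 512, 1024):
        raise ValueError("dimensions must be 256, 512, or 1024")
    return json.dumps({"inputText": text, "dimensions": dimensions, "normalize": True})


def embed(bedrock_runtime, text: str, dimensions: int = 1024) -> list[float]:
    """Return the embedding vector for `text` (a list of `dimensions` floats)."""
    response = bedrock_runtime.invoke_model(
        modelId=TITAN_V2_MODEL_ID,
        body=titan_v2_request_body(text, dimensions),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())  # body is a streaming object
    return payload["embedding"]


# Usage (requires AWS credentials and model access):
#   vector = embed(boto3.client("bedrock-runtime"), "Why did AWS acquire Annapurna Labs?")
#   len(vector)  # expected to equal the requested dimensions
```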

The embeddings are stored as vectors in a vector database. You can either specify an existing vector database you have already created or have Knowledge Bases for Amazon Bedrock create one for you. For our example, we have Knowledge Bases for Amazon Bedrock create a vector database using Amazon OpenSearch Serverless.

Before you can query the vector database, you must first sync it with the data source. During each sync operation, Knowledge Bases for Amazon Bedrock splits the data source into chunks and then uses the selected embedding model to embed each chunk as a vector. Knowledge Bases for Amazon Bedrock then stores these vectors in the vector database.

The sync operation, as well as the other Amazon Bedrock operations described so far, can be performed either on the console or through API calls.
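For the API route, a sync corresponds to an ingestion job on the `bedrock-agent` client. The following sketch starts a job with `start_ingestion_job` and polls `get_ingestion_job` until the job reaches a terminal status. The knowledge base ID and data source ID are placeholders you would copy from your own knowledge base, the helper names are hypothetical, and the injectable `sleep` parameter exists only so the polling loop can be tested without waiting. The loop has no timeout, which you may want to add.

```python
import time

# Ingestion jobs stop changing once they reach one of these statuses.
TERMINAL_STATUSES = {"COMPLETE", "FAILED", "STOPPED"}


def start_sync(bedrock_agent, knowledge_base_id: str, data_source_id: str) -> str:
    """Start syncing the data source and return the ingestion job ID."""
    response = bedrock_agent.start_ingestion_job(
        knowledgeBaseId=knowledge_base_id, dataSourceId=data_source_id)
    return response["ingestionJob"]["ingestionJobId"]


def wait_for_sync(bedrock_agent, knowledge_base_id: str, data_source_id: str,
                  job_id: str, poll_seconds: float = 10.0, sleep=time.sleep) -> dict:
    """Poll the ingestion job until it finishes and return its final description."""
    while True:
        job = bedrock_agent.get_ingestion_job(
            knowledgeBaseId=knowledge_base_id, dataSourceId=data_source_id,
            ingestionJobId=job_id)["ingestionJob"]
        if job["status"] in TERMINAL_STATUSES:
            return job
        sleep(poll_seconds)


# Usage (requires AWS credentials; IDs are placeholders):
#   agent = boto3.client("bedrock-agent")
#   job_id = start_sync(agent, "KB_ID", "DATA_SOURCE_ID")
#   print(wait_for_sync(agent, "KB_ID", "DATA_SOURCE_ID", job_id)["status"])
```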

Query the audio files

Now we're ready to query and search for specific content from our library of podcast episodes. In episode 20, titled “AI Accelerators in the Cloud,” our guest Matthew McClean, a senior manager from AWS's Annapurna team, shared why AWS decided to acquire Annapurna Labs in 2015. For our first query, we ask, “Why did AWS acquire Annapurna Labs?”

We entered this query into Knowledge Bases for Amazon Bedrock using Anthropic Claude and received the following response:

“AWS acquired Annapurna Labs in 2015 because Annapurna was providing AWS with nitro cards that offloaded virtualization, security, networking and storage from EC2 instances to free up CPU resources.”

This is an actual quote from Matthew McClean in the podcast episode. You wouldn't get this quote if you entered the same prompt into other publicly available generative AI chatbots, because they don't have a vector database with embeddings of the podcast transcript to provide more relevant context.
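The same question can be sent programmatically with the `RetrieveAndGenerate` API on the `bedrock-agent-runtime` client. In this sketch, the knowledge base ID and the model ARN (for example, the ARN of an Anthropic Claude model available in your Region) are placeholders, and `build_rag_request` and `ask` are hypothetical helper names.

```python
def build_rag_request(question: str, knowledge_base_id: str, model_arn: str) -> dict:
    """Build keyword arguments for RetrieveAndGenerate against a knowledge base."""
    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,  # the LLM that writes the final answer
            },
        },
    }


def ask(bedrock_agent_runtime, question: str, knowledge_base_id: str,
        model_arn: str) -> str:
    """Retrieve relevant chunks, generate an answer, and return its text."""
    response = bedrock_agent_runtime.retrieve_and_generate(
        **build_rag_request(question, knowledge_base_id, model_arn))
    return response["output"]["text"]


# Usage (requires AWS credentials; IDs are placeholders):
#   ask(boto3.client("bedrock-agent-runtime"), "Why did AWS acquire Annapurna Labs?",
#       "KB_ID", "MODEL_ARN")
```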

Retrieve an episode title

Now let's suppose that, in addition to getting more relevant responses, we also want to retrieve the correct podcast episode title associated with this query from our catalog of podcast episodes.

To retrieve the episode title, we start from the most relevant data chunk for the query. Whenever Knowledge Bases for Amazon Bedrock responds to a query, it also provides the chunks of data it retrieved from the vector database that were most relevant to the query, in order of relevance. We take the first chunk that was returned. These chunks are returned as JSON documents, and nested inside the JSON is the S3 location of the transcript object. In our example, the S3 location is s3://rethinkpodcast/text/transcripts/AI-Accelerators.json.
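If you call the `Retrieve` API on the `bedrock-agent-runtime` client, each retrieved chunk comes back with its text under `content.text`, its S3 location under `location.s3Location.uri`, and a relevance `score`. The following sketch picks the highest-scoring chunk and splits its S3 URI into a bucket and key. The helper names are hypothetical, and using `Retrieve` rather than the citations returned by `RetrieveAndGenerate` is a choice made for this example.

```python
from urllib.parse import urlparse


def retrieve_chunks(bedrock_agent_runtime, knowledge_base_id: str,
                    question: str, k: int = 5) -> dict:
    """Return up to k chunks relevant to the question from the knowledge base."""
    return bedrock_agent_runtime.retrieve(
        knowledgeBaseId=knowledge_base_id,
        retrievalQuery={"text": question},
        retrievalConfiguration={"vectorSearchConfiguration": {"numberOfResults": k}},
    )


def top_chunk(retrieve_response: dict) -> dict:
    """Pick the highest-scoring chunk and return its text and S3 URI."""
    results = retrieve_response["retrievalResults"]
    if not results:
        raise ValueError("the knowledge base returned no chunks")
    best = max(results, key=lambda r: r.get("score", 0.0))
    return {"text": best["content"]["text"],
            "uri": best["location"]["s3Location"]["uri"]}


def split_s3_uri(uri: str) -> tuple[str, str]:
    """Split "s3://bucket/key" into ("bucket", "key")."""
    parsed = urlparse(uri)
    if parsed.scheme != "s3":
        raise ValueError(f"not an S3 URI: {uri}")
    return parsed.netloc, parsed.path.lstrip("/")
```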

The first words in the chunk text are: “Yeah, sure. So maybe I can start with the history of Annapurna…”

Because we have already tagged this transcript object in Amazon S3 with the episode title, we can retrieve the title by reading the value of the tag where key = “title”. In this case, the title is “Episode 20: AI Accelerators in the Cloud.”

Search for the start time

What if we also want to find the start time inside the episode where the relevant content begins? We want to do so without having to manually read through the transcript or listen to the episode from the beginning, and without manually adding timestamps for every chapter.

We can find the start time much faster by having our generative AI application make a few more API calls. We start by treating the chunk text as a substring of the entire transcript. We then search for the start time of the first word in the chunk text.

In our example, the first words returned were “Yeah, sure. So maybe I can start with the history of Annapurna…” We now need to search the entire transcript for the start time of the word “Yeah.”

Amazon Transcribe outputs the start time of every word in the transcript. However, any word can appear more than once. The word “Yeah” occurs 28 times in the transcript, and each occurrence has its own start time. So how do we determine the correct start time for “Yeah” in our example?

There are several approaches an application developer can use to find the correct start time. For our example, we use the Python string find() method to find the position of the chunk text within the entire transcript.

For the chunk text that begins with “Yeah, sure. So maybe I can start with the history of Annapurna…” the find() method returned position 2047. In other words, if we treat the transcript as one long text string, the chunk “Yeah, sure. So maybe…” starts at character position 2047.
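The following toy example, built on a shortened, hypothetical transcript, shows why searching for the whole chunk matters: `find()` on the first word returns the first occurrence of “Yeah”, while `find()` on the full chunk text returns the occurrence where the retrieved chunk actually begins. `find()` returns -1 when the text is not present.

```python
# Hypothetical, shortened transcript in which "Yeah" appears twice.
transcript = ("Yeah. Thanks for having me. Yeah, sure. So maybe I can start "
              "with the history of Annapurna.")
chunk_text = "Yeah, sure. So maybe I can start with the history of Annapurna."

first_yeah = transcript.find("Yeah")       # first "Yeah", not the one we want
chunk_start = transcript.find(chunk_text)  # where the retrieved chunk begins
print(first_yeah, chunk_start)             # 0 28
```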

Finding the start time now becomes a matter of counting the character position of each word in the transcript and using it to look up the correct start time in the transcript file generated by Amazon Transcribe. This would be tedious for a person to do manually, but it is trivial for a computer.

In our example Python code, we loop through an array that contains the start time for each token while counting the character position at which each token starts. Because we're looping through the tokens, we can build a new array that stores the start time for each character position.

In this example query, the start time for the word “Yeah” at position 2047 is 160 seconds, or 2 minutes and 40 seconds into the podcast. You can verify this by playing the recording from 2 minutes 40 seconds.
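Converting the offset in seconds into a timestamp a listener can seek to is simple arithmetic: 160 seconds is 2 * 60 + 40 seconds, or 2:40. A small helper for this, with a hypothetical name, could be:

```python
def format_timestamp(seconds: float) -> str:
    """Format an offset in seconds as M:SS, or H:MM:SS for long episodes."""
    total = int(seconds)  # drop fractional seconds
    hours, remainder = divmod(total, 3600)
    minutes, secs = divmod(remainder, 60)
    if hours:
        return f"{hours}:{minutes:02d}:{secs:02d}"
    return f"{minutes}:{secs:02d}"


print(format_timestamp(160.0))  # 2:40
```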

Clean up

This solution incurs charges based on the services you use:

- Amazon Transcribe operates under a pay-as-you-go pricing model. For more details, see Amazon Transcribe Pricing.

- Amazon Bedrock offers on-demand pricing, so you only pay for what you use. For more information, refer to Amazon Bedrock pricing.

- With OpenSearch Serverless, you pay for the compute and storage resources consumed by your workload.

- Whichever vector database you use with Knowledge Bases for Amazon Bedrock, you may continue to incur charges even when you're not running any queries (OpenSearch Serverless, for example, bills for a minimum amount of compute capacity while a collection exists). We recommend deleting your knowledge base and its associated vector store, along with the audio files stored in Amazon S3, to avoid unnecessary costs when you're done testing this solution.
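Cleanup can also be scripted. This sketch assumes a knowledge base whose vector store is an OpenSearch Serverless collection; all IDs, the bucket, and the object keys are placeholders, and `clean_up` is a hypothetical helper. It deletes the data source and knowledge base, then the collection, then the S3 objects. Because these deletions run asynchronously, in practice you may need to wait for the knowledge base deletion to finish before removing the collection. The sketch also does not remove any encryption, network, or data access policies created for the collection.

```python
def clean_up(bedrock_agent, aoss, s3, knowledge_base_id: str, data_source_id: str,
             collection_id: str, bucket: str, keys: list[str]) -> None:
    """Delete the resources created for this walkthrough."""
    # Knowledge base resources (bedrock-agent client).
    bedrock_agent.delete_data_source(knowledgeBaseId=knowledge_base_id,
                                     dataSourceId=data_source_id)
    bedrock_agent.delete_knowledge_base(knowledgeBaseId=knowledge_base_id)
    # Vector store (opensearchserverless client).
    aoss.delete_collection(id=collection_id)
    # Audio files and transcripts (s3 client).
    for key in keys:
        s3.delete_object(Bucket=bucket, Key=key)


# Usage (requires AWS credentials; all values are placeholders):
#   clean_up(boto3.client("bedrock-agent"), boto3.client("opensearchserverless"),
#            boto3.client("s3"), "KB_ID", "DATA_SOURCE_ID", "COLLECTION_ID",
#            "rethinkpodcast", ["text/transcripts/AI-Accelerators.json"])
```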

Conclusion

Cataloging, querying, and searching through large volumes of audio files can be difficult to scale. In this post, we showed how Amazon Transcribe and Knowledge Bases for Amazon Bedrock can help automate the process of retrieving relevant information from audio files and make it more scalable.

You can begin transcribing your own library of audio files with Amazon Transcribe. To learn more about how Knowledge Bases for Amazon Bedrock can then orchestrate a RAG workflow for your transcripts with vector stores, refer to Knowledge Bases now delivers fully managed RAG experience in Amazon Bedrock.

With the help of these AI services, we can now expand the frontiers of our knowledge bases.

About the Author

Nolan Chen is a Partner Solutions Architect at AWS, where he helps startup companies build innovative solutions using the cloud. Prior to AWS, Nolan specialized in data security and helping customers deploy high-performing wide area networks. Nolan holds a bachelor's degree in Mechanical Engineering from Princeton University.