Generative AI fashions proceed to broaden in scale and functionality, rising the demand for sooner and extra environment friendly inference. Functions want low latency and constant efficiency with out compromising output high quality. Amazon SageMaker AI introduces new enhancements to its inference optimization toolkit that carry EAGLE based mostly adaptive speculative decoding to extra mannequin architectures. These updates make it simpler to speed up decoding, optimize efficiency utilizing your individual information and deploy higher-throughput fashions utilizing the acquainted SageMaker AI workflow.

EAGLE, brief for Extrapolation Algorithm for Larger Language-model Effectivity, is a method that quickens massive language mannequin decoding by predicting future tokens instantly from the hidden layers of the mannequin. Whenever you information optimization utilizing your individual utility information, the enhancements align with the precise patterns and domains you serve, producing sooner inference that displays your actual workloads slightly than generic benchmarks. Based mostly on the mannequin structure, SageMaker AI trains EAGLE 3 or EAGLE 2 heads.

Be aware that this coaching and optimization is just not restricted to only a one time optimization operation. You can begin by using the datasets offered by SageMaker for the preliminary coaching, however as you proceed to collect and gather your individual information you may as well fine-tune utilizing your individual curated dataset for extremely adaptive, workload-specific efficiency. An instance could be using a instrument resembling Knowledge Seize to curate your individual dataset over time from real-time requests which are hitting your hosted mannequin. This may be an iterative function with a number of cycles of coaching to constantly enhance efficiency.

On this publish we’ll clarify the right way to use EAGLE 2 and EAGLE 3 speculative decoding in Amazon SageMaker AI.

Resolution overview

SageMaker AI now provides native assist for each EAGLE 2 and EAGLE 3 speculative decoding, enabling every mannequin structure to use the method that greatest matches its inside design. In your base LLM, you may make the most of both SageMaker JumpStart fashions or carry your individual mannequin artifacts to S3 from different mannequin hubs, resembling HuggingFace.

Speculative decoding is a extensively employed method for accelerating inference in LLMs with out compromising high quality. This methodology includes utilizing a smaller draft mannequin to generate preliminary tokens, that are then verified by the goal LLM. The extent of the speedup achieved by way of speculative decoding is closely depending on the collection of the draft mannequin.

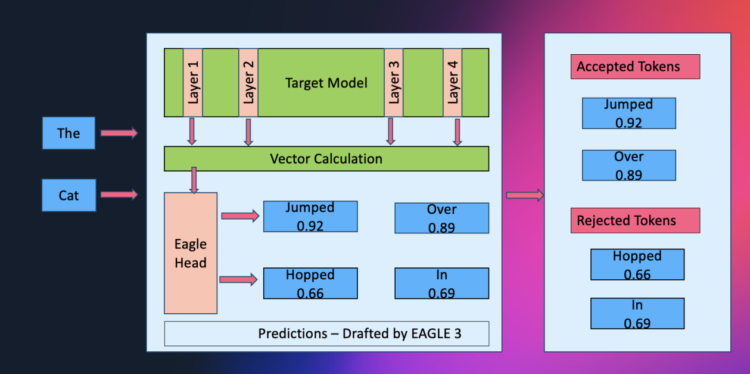

The sequential nature of recent LLMs makes them costly and gradual, and speculative decoding has confirmed to be an efficient answer to this drawback. Strategies like EAGLE enhance upon this by reusing options from the goal mannequin, main to higher outcomes. Nonetheless, a present development within the LLM neighborhood is to extend coaching information to spice up mannequin intelligence with out including inference prices. Sadly, this method has restricted advantages for EAGLE. This limitation is because of EAGLE’s constraints on function prediction. To handle this, EAGLE-3 is launched, which predicts tokens instantly as an alternative of options and combines options from a number of layers utilizing a method known as training-time testing. These adjustments considerably enhance efficiency and permit the mannequin to completely profit from elevated coaching information.

To present clients most flexibility, SageMaker helps each main workflow for constructing or refining an EAGLE mannequin. You’ll be able to practice an EAGLE mannequin completely from scratch utilizing the SageMaker curated open dataset, or practice it from scratch with your individual information to align speculative conduct together with your site visitors patterns. You may as well begin from an present EAGLE base mannequin: both retraining it with the default open dataset for a quick, high-quality baseline, or fine-tuning that base mannequin with your individual dataset for extremely adaptive, workload-specific efficiency. As well as, SageMaker JumpStart supplies totally pre-trained EAGLE fashions so you may start optimizing instantly with out making ready any artifacts.

The answer spans six supported architectures and features a pre-trained, pre-cached EAGLE base to speed up experimentation. SageMaker AI additionally helps extensively used coaching information codecs, particularly ShareGPT and OpenAI chat and completions, so present corpora can be utilized instantly. Prospects may present the information captured utilizing their very own SageMaker AI endpoints offered the information is within the above specified codecs. Whether or not you depend on the SageMaker open dataset or carry your individual, optimization jobs usually ship round a 2.5x thoughput over commonplace decoding whereas adapting naturally to the nuances of your particular use case.

All optimization jobs routinely produce benchmark outcomes supplying you with clear visibility into latency and throughput enhancements. You’ll be able to run the whole workflow utilizing SageMaker Studio or the AWS CLI and also you deploy the optimized mannequin by way of the identical interface you already use for traditional SageMaker AI inference.

SageMaker AI at the moment helps LlamaForCausalLM, Qwen3ForCausalLM, Qwen3MoeForCausalLM, Qwen2ForCausalLM and GptOssForCausalLM with EAGLE 3, and Qwen3NextForCausalLM with EAGLE 2. You need to use one optimization pipeline throughout a mixture of architectures whereas nonetheless gaining the advantages of model-specific conduct.

How EAGLE works contained in the mannequin

Speculative decoding could be considered like a seasoned chief scientist guiding the movement of discovery. In conventional setups, a smaller “assistant” mannequin runs forward, shortly sketching out a number of attainable token continuations, whereas the bigger mannequin examines and corrects these solutions. This pairing reduces the variety of gradual, sequential steps by verifying a number of drafts without delay.

EAGLE streamlines this course of even additional. As a substitute of relying on an exterior assistant, the mannequin successfully turns into its personal lab accomplice: it inspects its inside hidden-layer representations to anticipate a number of future tokens in parallel. As a result of these predictions come up from the mannequin’s personal discovered construction, they are typically extra correct upfront, resulting in deeper speculative steps, fewer rejections, and smoother throughput.

By eradicating the overhead of coordinating a secondary mannequin and enabling extremely parallel verification, this method alleviates reminiscence bandwidth bottlenecks and delivers notable speedups, typically round 2.5x, whereas sustaining the identical output high quality the baseline mannequin would produce.

Working optimization jobs from the SDK or CLI

You’ll be able to interface with the Optimization Toolkit utilizing the AWS Python Boto3 SDK, Studio UI. On this part we discover using the AWS CLI, the identical API calls will map over to the Boto3 SDK. Right here, the core API requires endpoint creation stay the identical: create_model, create_endpoint_config, and create_endpoint. The workflow we showcase right here begins with mannequin registration utilizing the create_model API name. With the create_model API name you may specify your serving container and stack. You don’t must create a SageMaker mannequin object and may specify the mannequin information within the Optimization Job API name as nicely.

For the EAGLE heads optimization, we specify the mannequin information by pointing in direction of to the Mannequin Knowledge Supply parameter, in the mean time specification of the HuggingFace Hub Mannequin ID is just not supported. Pull your artifacts and add them to an S3 bucket and specify it within the Mannequin Knowledge Supply parameter. By default checks are performed to confirm that the suitable information are uploaded so you’ve got the usual mannequin information anticipated for LLMs:

Let’s take a look at a number of paths right here:

- Utilizing your individual mannequin information with your individual EAGLE curated dataset

- Bringing your individual educated EAGLE that you could be wish to practice extra

- Deliver your individual mannequin information and use SageMaker AI built-in datasets

1. Utilizing your individual mannequin information with your individual EAGLE curated dataset

We will begin an optimization job with the create-optimization-job API name. Right here is an instance with a Qwen3 32B mannequin. Be aware that you may carry your individual information or additionally use the built-in SageMaker offered datasets. First we are able to create a SageMaker Mannequin object that specifies the S3 bucket with our mannequin artifacts:

Our optimization name then pulls down these mannequin artifacts while you specify the SageMaker Mannequin and a TrainingDataSource parameter as the next:

2. Bringing your individual educated EAGLE that you could be wish to practice extra

In your personal educated EAGLE you may specify one other parameter within the create_model API name the place you level in direction of your EAGLE artifacts, optionally you may as well specify a SageMaker JumpStart Mannequin ID to tug down the packaged mannequin artifacts.

Equally the optimization API then inherits this mannequin object with the mandatory mannequin information:

3. Deliver your individual mannequin information and use SageMaker built-in datasets

Optionally, we are able to make the most of the SageMaker offered datasets:

After completion, SageMaker AI shops analysis metrics in S3 and information the optimization lineage in Studio. You’ll be able to deploy the optimized mannequin to an inference endpoint with both the create_endpoint API name or within the UI.

Benchmarks

To benchmark this additional we in contrast three states:

- No EAGLE: Base mannequin with out EAGLE as a baseline

- Base EAGLE: EAGLE coaching utilizing built-in datasets offered by SageMaker AI

- Educated EAGLE: EAGLE coaching utilizing built-in datasets offered by SageMaker AI and retraining with personal customized dataset

The numbers displayed beneath are for qwen3-32B throughout metrics resembling Time to First Token (TTFT) and general throughput.

| Configuration | Concurrency | TTFT (ms) | TPOT (ms) | ITL (ms) | Request Throughput | Output Throughput (tokens/sec) | OTPS per request (tokens/sec) |

| No EAGLE | 4 | 168.04 | 45.95 | 45.95 | 0.04 | 86.76 | 21.76 |

| No EAGLE | 8 | 219.53 | 51.02 | 51.01 | 0.08 | 156.46 | 19.6 |

| Base EAGLE | 1 | 89.76 | 21.71 | 53.01 | 0.02 | 45.87 | 46.07 |

| Base EAGLE | 2 | 132.15 | 20.78 | 50.75 | 0.05 | 95.73 | 48.13 |

| Base EAGLE | 4 | 133.06 | 20.11 | 49.06 | 0.1 | 196.67 | 49.73 |

| Base EAGLE | 8 | 154.44 | 20.58 | 50.15 | 0.19 | 381.86 | 48.59 |

| Educated EAGLE | 1 | 83.6 | 17.32 | 46.37 | 0.03 | 57.63 | 57.73 |

| Educated EAGLE | 2 | 129.07 | 18 | 48.38 | 0.05 | 110.86 | 55.55 |

| Educated EAGLE | 4 | 133.11 | 18.46 | 49.43 | 0.1 | 214.27 | 54.16 |

| Educated EAGLE | 8 | 151.19 | 19.15 | 51.5 | 0.2 | 412.25 | 52.22 |

Pricing issues

Optimization jobs run on SageMaker AI coaching situations, you’ll be billed relying on the occasion sort and job period. Deployment of the ensuing optimized mannequin makes use of commonplace SageMaker AI Inference pricing.

Conclusion

EAGLE based mostly adaptive speculative decoding offers you a sooner and more practical path to enhance generative AI inference efficiency on Amazon SageMaker AI. By working contained in the mannequin slightly than counting on a separate draft community, EAGLE accelerates decoding, will increase throughput and maintains era high quality. Whenever you optimize utilizing your individual dataset, the enhancements mirror the distinctive conduct of your functions, leading to higher end-to-end efficiency. With built-in dataset assist, benchmark automation and streamlined deployment, the inference optimization toolkit helps you ship low-latency generative functions at scale.

In regards to the authors

Kareem Syed-Mohammed is a Product Supervisor at AWS. He’s focuses on enabling generative AI mannequin growth and governance on SageMaker HyperPod. Previous to this, at Amazon QuickSight, he led embedded analytics, and developer expertise. Along with QuickSight, he has been with AWS Market and Amazon retail as a Product Supervisor. Kareem began his profession as a developer for name middle applied sciences, Native Professional and Adverts for Expedia, and administration advisor at McKinsey.

Kareem Syed-Mohammed is a Product Supervisor at AWS. He’s focuses on enabling generative AI mannequin growth and governance on SageMaker HyperPod. Previous to this, at Amazon QuickSight, he led embedded analytics, and developer expertise. Along with QuickSight, he has been with AWS Market and Amazon retail as a Product Supervisor. Kareem began his profession as a developer for name middle applied sciences, Native Professional and Adverts for Expedia, and administration advisor at McKinsey.

Xu Deng is a Software program Engineer Supervisor with the SageMaker workforce. He focuses on serving to clients construct and optimize their AI/ML inference expertise on Amazon SageMaker. In his spare time, he loves touring and snowboarding.

Xu Deng is a Software program Engineer Supervisor with the SageMaker workforce. He focuses on serving to clients construct and optimize their AI/ML inference expertise on Amazon SageMaker. In his spare time, he loves touring and snowboarding.

Ram Vegiraju is an ML Architect with the Amazon SageMaker Service workforce. He focuses on serving to clients construct and optimize their AI/ML options on SageMaker. In his spare time, he loves touring and writing.

Ram Vegiraju is an ML Architect with the Amazon SageMaker Service workforce. He focuses on serving to clients construct and optimize their AI/ML options on SageMaker. In his spare time, he loves touring and writing.

Vinay Arora is a Specialist Resolution Architect for Generative AI at AWS, the place he collaborates with clients in designing cutting-edge AI options leveraging AWS applied sciences. Previous to AWS, Vinay has over 20 years of expertise in finance—together with roles at banks and hedge funds—he has constructed danger fashions, buying and selling programs, and market information platforms. Vinay holds a grasp’s diploma in laptop science and enterprise administration.

Vinay Arora is a Specialist Resolution Architect for Generative AI at AWS, the place he collaborates with clients in designing cutting-edge AI options leveraging AWS applied sciences. Previous to AWS, Vinay has over 20 years of expertise in finance—together with roles at banks and hedge funds—he has constructed danger fashions, buying and selling programs, and market information platforms. Vinay holds a grasp’s diploma in laptop science and enterprise administration.

Siddharth Shah is a Principal Engineer at AWS SageMaker, specializing in large-scale mannequin internet hosting and optimization for Giant Language Fashions. He beforehand labored on the launch of Amazon Textract, efficiency enhancements within the model-hosting platform, and expedited retrieval programs for Amazon S3 Glacier. Outdoors of labor, he enjoys mountain climbing, video video games, and passion robotics.

Siddharth Shah is a Principal Engineer at AWS SageMaker, specializing in large-scale mannequin internet hosting and optimization for Giant Language Fashions. He beforehand labored on the launch of Amazon Textract, efficiency enhancements within the model-hosting platform, and expedited retrieval programs for Amazon S3 Glacier. Outdoors of labor, he enjoys mountain climbing, video video games, and passion robotics.

Andy Peng is a builder with curiosity, motivated by scientific analysis and product innovation. He helped construct key initiatives that span AWS SageMaker and Bedrock, Amazon S3, AWS App Runner, AWS Fargate, Alexa Well being & Wellness, and AWS Funds, from 0-1 incubation to 10x scaling. Open-source fanatic.

Andy Peng is a builder with curiosity, motivated by scientific analysis and product innovation. He helped construct key initiatives that span AWS SageMaker and Bedrock, Amazon S3, AWS App Runner, AWS Fargate, Alexa Well being & Wellness, and AWS Funds, from 0-1 incubation to 10x scaling. Open-source fanatic.

Johna Liu is a Software program Improvement Engineer on the Amazon SageMaker workforce, the place she builds and explores AI/LLM-powered instruments that improve effectivity and allow new capabilities. Outdoors of labor, she enjoys tennis, basketball and baseball.

Johna Liu is a Software program Improvement Engineer on the Amazon SageMaker workforce, the place she builds and explores AI/LLM-powered instruments that improve effectivity and allow new capabilities. Outdoors of labor, she enjoys tennis, basketball and baseball.

Anisha Kolla is a Software program Improvement Engineer with SageMaker Inference workforce with over 10+ years of business expertise. She is captivated with constructing scalable and environment friendly options that empower clients to deploy and handle machine studying functions seamlessly. Anisha thrives on tackling complicated technical challenges and contributing to modern AI capabilities. Outdoors of labor, she enjoys exploring new Seattle eating places, touring, and spending time with household and associates.

Anisha Kolla is a Software program Improvement Engineer with SageMaker Inference workforce with over 10+ years of business expertise. She is captivated with constructing scalable and environment friendly options that empower clients to deploy and handle machine studying functions seamlessly. Anisha thrives on tackling complicated technical challenges and contributing to modern AI capabilities. Outdoors of labor, she enjoys exploring new Seattle eating places, touring, and spending time with household and associates.