This post was written with Mohamed Hossam of Brightskies.

Research universities engaged in large-scale AI and high-performance computing (HPC) often face significant infrastructure challenges that impede innovation and delay research outcomes. Traditional on-premises HPC clusters involve lengthy GPU procurement cycles, rigid scaling limits, and complex maintenance requirements. These obstacles restrict researchers' ability to iterate quickly on AI workloads such as natural language processing (NLP), computer vision, and foundation model (FM) training. Amazon SageMaker HyperPod removes the undifferentiated heavy lifting involved in building AI models. It helps quickly scale model development tasks such as training, fine-tuning, or inference across a cluster of hundreds or thousands of AI accelerators (such as NVIDIA H100 and A100 GPUs), integrated with preconfigured HPC tools and automated scaling.

In this post, we demonstrate how a research university implemented SageMaker HyperPod to accelerate AI research by using dynamic SLURM partitions, fine-grained GPU resource management, budget-aware compute cost tracking, and multi-login node load balancing, all integrated seamlessly into the SageMaker HyperPod environment.

Solution overview

Amazon SageMaker HyperPod is designed to support large-scale machine learning operations for researchers and ML scientists. The service is fully managed by AWS, removing operational overhead while maintaining enterprise-grade security and performance.

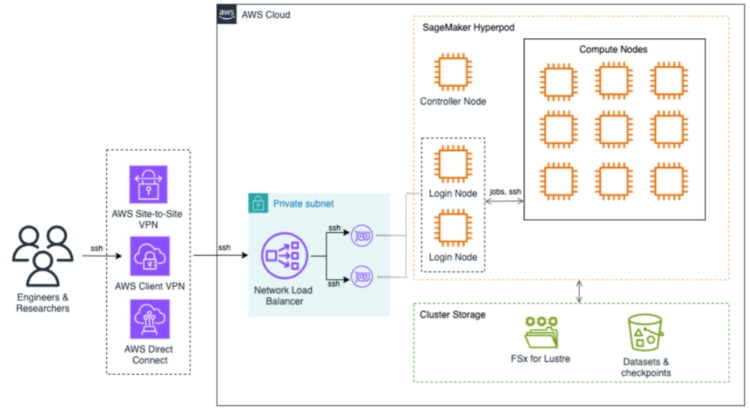

The preceding architecture diagram illustrates how users access SageMaker HyperPod to submit jobs. End users can use AWS Site-to-Site VPN, AWS Client VPN, or AWS Direct Connect to securely access the SageMaker HyperPod cluster. These connections terminate on a Network Load Balancer that distributes SSH traffic across the login nodes, which are the primary entry points for job submission and cluster interaction. At the core of the architecture is SageMaker HyperPod compute: a controller node that orchestrates cluster operations, and multiple compute nodes organized in a grid configuration. This setup supports efficient distributed training workloads with high-speed interconnects between nodes, all contained within a private subnet for enhanced security.

The storage infrastructure is built around two main components: Amazon FSx for Lustre provides high-performance file system capabilities, and Amazon S3 provides dedicated storage for datasets and checkpoints. This dual-storage approach provides both fast data access for training workloads and secure persistence of valuable training artifacts.

The implementation consisted of several phases. In the following steps, we demonstrate how to deploy and configure the solution.

Prerequisites

Before deploying Amazon SageMaker HyperPod, make sure the following prerequisites are in place:

- AWS configuration:

- The AWS Command Line Interface (AWS CLI) configured with appropriate permissions

- Cluster configuration files prepared: cluster-config.json and provisioning-parameters.json

- Network setup:

- An AWS Identity and Access Management (IAM) role with permissions for the following:
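For readers preparing these two files for the first time, the following Python sketch builds a minimal pair. It assumes cluster-config.json follows the SageMaker CreateCluster request shape and that provisioning-parameters.json follows the layout read by the SageMaker HyperPod workshop lifecycle scripts. Every cluster name, ARN, instance type, subnet, security group, and FSx value is a hypothetical placeholder, not the university's actual configuration.

```python
import json
from pathlib import Path

# Hypothetical placeholders: substitute the outputs of your own CloudFormation
# stack (execution role, subnet, security group, FSx file system, S3 bucket).
CLUSTER_NAME = "research-hyperpod"
LIFECYCLE_S3_URI = "s3://example-lifecycle-bucket/lifecycle-scripts/"
EXECUTION_ROLE = "arn:aws:iam::111122223333:role/example-hyperpod-execution-role"


def instance_group(name, instance_type, count):
    """One entry of the InstanceGroups list in cluster-config.json."""
    return {
        "InstanceGroupName": name,
        "InstanceType": instance_type,
        "InstanceCount": count,
        "LifeCycleConfig": {"SourceS3Uri": LIFECYCLE_S3_URI, "OnCreate": "on_create.sh"},
        "ExecutionRole": EXECUTION_ROLE,
        "ThreadsPerCore": 1,
    }


def build_configs():
    """Return (cluster_config, provisioning_parameters) as Python dicts."""
    cluster_config = {
        "ClusterName": CLUSTER_NAME,
        "InstanceGroups": [
            instance_group("controller-machine", "ml.m5.12xlarge", 1),
            instance_group("login-group", "ml.m5.4xlarge", 2),
            instance_group("worker-group-1", "ml.p4d.24xlarge", 4),
        ],
        "VpcConfig": {
            "SecurityGroupIds": ["sg-0123456789abcdef0"],
            "Subnets": ["subnet-0123456789abcdef0"],
        },
    }
    # Read by the lifecycle scripts to map instance groups onto Slurm roles.
    provisioning_parameters = {
        "version": "1.0.0",
        "workload_manager": "slurm",
        "controller_group": "controller-machine",
        "login_group": "login-group",
        "worker_groups": [
            {"instance_group_name": "worker-group-1", "partition_name": "ml.p4d.24xlarge"},
        ],
        "fsx_dns_name": "fs-0123456789abcdef0.fsx.us-east-1.amazonaws.com",
        "fsx_mountname": "abcdefgh",
    }
    return cluster_config, provisioning_parameters


def write_configs(directory="."):
    """Write both files as pretty-printed JSON into `directory`."""
    cluster_config, provisioning_parameters = build_configs()
    out = Path(directory)
    (out / "cluster-config.json").write_text(json.dumps(cluster_config, indent=2))
    (out / "provisioning-parameters.json").write_text(
        json.dumps(provisioning_parameters, indent=2)
    )
```

Calling `write_configs(".")` produces both files in the current directory. Keeping the instance group names in one place makes it harder for the two files to drift apart.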

Launch the CloudFormation stack

We launched an AWS CloudFormation stack to provision the required infrastructure components, including a VPC and subnet, an FSx for Lustre file system, an S3 bucket for lifecycle scripts and training data, and IAM roles with scoped permissions for cluster operation. Refer to the Amazon SageMaker HyperPod workshop for CloudFormation templates and automation scripts.

Customize SLURM cluster configuration

To align compute resources with departmental research needs, we created SLURM partitions that mirror the organizational structure, for example NLP, computer vision, and deep learning teams. We used the SLURM partition configuration to define slurm.conf with custom partitions. SLURM accounting was enabled by configuring slurmdbd and linking usage to departmental accounts and supervisors.
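The following is a minimal sketch of how department partitions and accounts could be rendered, assuming one partition and one Slurm account per department. The `DEPARTMENTS` mapping, node hostnames, account names, and supervisor user names are hypothetical. `AllowAccounts`, `AccountingStorageType`, `AccountingStorageEnforce`, and the `sacctmgr` account and coordinator commands are standard Slurm, but verify them against the Slurm version on your cluster.

```python
# Hypothetical mapping of research departments to compute nodes and Slurm accounts.
DEPARTMENTS = {
    "nlp": {"nodes": ["ip-10-1-0-11", "ip-10-1-0-12"], "account": "nlp_dept"},
    "cv": {"nodes": ["ip-10-1-0-21"], "account": "cv_dept"},
    "dl": {"nodes": ["ip-10-1-0-31", "ip-10-1-0-32"], "account": "dl_dept"},
}

# slurm.conf accounting settings so slurmdbd records usage and enforces limits.
ACCOUNTING_LINES = [
    "AccountingStorageType=accounting_storage/slurmdbd",
    "AccountingStorageEnforce=associations,limits,qos",
]


def partition_lines(departments, default="dl"):
    """Render one slurm.conf PartitionName line per department (sorted by name)."""
    lines = []
    for name in sorted(departments):
        dept = departments[name]
        lines.append(
            f"PartitionName={name} Nodes={','.join(dept['nodes'])} "
            f"AllowAccounts={dept['account']} "
            f"Default={'YES' if name == default else 'NO'} MaxTime=INFINITE State=UP"
        )
    return lines


def account_commands(departments, supervisors):
    """sacctmgr commands: one account per department, plus its supervisor as coordinator."""
    cmds = []
    for name in sorted(departments):
        account = departments[name]["account"]
        cmds.append(f"sacctmgr -i add account {account} Description={name}")
        if name in supervisors:
            cmds.append(
                f"sacctmgr -i add coordinator Account={account} Names={supervisors[name]}"
            )
    return cmds


if __name__ == "__main__":
    print("\n".join(ACCOUNTING_LINES + partition_lines(DEPARTMENTS)))
```

Restricting each partition with `AllowAccounts` ties node access to the same accounts that slurmdbd uses for usage reporting, so departmental usage and departmental access are governed by one identifier.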

To support fractional GPU sharing and efficient utilization, we enabled Generic Resource (GRES) configuration. With GPU stripping, multiple users can access GPUs on the same node without contention. The GRES setup followed the guidelines from the Amazon SageMaker HyperPod workshop.
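As an illustration only, the following Python sketch generates the GRES-related fragments for gres.conf and slurm.conf, assuming NVIDIA devices exposed as `/dev/nvidia0` through `/dev/nvidiaN-1` and an `a100` type label. Node names and the GPU type are hypothetical, and the `Gres=` setting belongs on each node's existing `NodeName` definition rather than on a standalone line.

```python
def gpu_device_files(count):
    """Slurm-style device file expression for `count` GPUs on one node."""
    if count < 1:
        raise ValueError("a GPU node needs at least one GPU")
    return "/dev/nvidia0" if count == 1 else f"/dev/nvidia[0-{count - 1}]"


def gres_conf_lines(nodes, gpus_per_node, gpu_type="a100"):
    """gres.conf lines declaring each GPU device as a schedulable resource."""
    return [
        f"NodeName={node} Name=gpu Type={gpu_type} File={gpu_device_files(gpus_per_node)}"
        for node in nodes
    ]


def slurm_conf_gres_lines(nodes, gpus_per_node, gpu_type="a100"):
    """slurm.conf fragments: enable the gpu GRES type and per-GPU allocation."""
    lines = [
        "GresTypes=gpu",
        "SelectType=select/cons_tres",  # allocate individual GPUs instead of whole nodes
        "SelectTypeParameters=CR_Core_Memory",
    ]
    # Merge Gres= into each node's existing NodeName line; shown standalone here.
    lines += [f"NodeName={node} Gres=gpu:{gpu_type}:{gpus_per_node}" for node in nodes]
    return lines


if __name__ == "__main__":
    nodes = ["ip-10-1-0-11", "ip-10-1-0-12"]
    print("\n".join(gres_conf_lines(nodes, 8)))
    print("\n".join(slurm_conf_gres_lines(nodes, 8)))
```

With this in place, a job asks for a subset of a node's GPUs, for example `sbatch --gres=gpu:1`, and other jobs can use the remaining GPUs on the same node. This sketch allocates whole GPUs per job; splitting a single GPU between jobs would require Slurm's `shard` or `mps` GRES types instead.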

Provision and validate the cluster

We validated the cluster-config.json and provisioning-parameters.json files using the AWS CLI and a SageMaker HyperPod validation script:
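The workshop's validation script isn't reproduced in this post. As a hypothetical stand-in, the following Python sketch shows the kind of cross-file checks such a script can perform: required top-level keys, positive instance counts, `s3://` lifecycle URIs, and that every instance group named in provisioning-parameters.json exists in cluster-config.json. The function names and the specific checks are assumptions, not the workshop script.

```python
import json
import sys


def validate(cluster_config, provisioning_parameters):
    """Return a list of problems; an empty list means the two files look consistent."""
    errors = []
    for key in ("ClusterName", "InstanceGroups", "VpcConfig"):
        if key not in cluster_config:
            errors.append(f"cluster-config.json: missing {key}")

    groups = {g.get("InstanceGroupName"): g for g in cluster_config.get("InstanceGroups", [])}
    for name, group in groups.items():
        if group.get("InstanceCount", 0) < 1:
            errors.append(f"instance group {name}: InstanceCount must be >= 1")
        uri = group.get("LifeCycleConfig", {}).get("SourceS3Uri", "")
        if not uri.startswith("s3://"):
            errors.append(f"instance group {name}: SourceS3Uri must be an s3:// URI")

    # Every group referenced by provisioning-parameters.json must exist in cluster-config.json.
    referenced = []
    if "controller_group" in provisioning_parameters:
        referenced.append(provisioning_parameters["controller_group"])
    else:
        errors.append("provisioning-parameters.json: missing controller_group")
    if "login_group" in provisioning_parameters:
        referenced.append(provisioning_parameters["login_group"])
    referenced += [
        w.get("instance_group_name") for w in provisioning_parameters.get("worker_groups", [])
    ]
    for name in referenced:
        if name not in groups:
            errors.append(f"provisioning-parameters.json: unknown instance group {name!r}")
    return errors


def main(cluster_path, provisioning_path):
    with open(cluster_path) as f:
        cluster_config = json.load(f)
    with open(provisioning_path) as f:
        provisioning_parameters = json.load(f)
    errors = validate(cluster_config, provisioning_parameters)
    for error in errors:
        print(f"ERROR: {error}")
    return 1 if errors else 0


if __name__ == "__main__" and len(sys.argv) == 3:
    sys.exit(main(sys.argv[1], sys.argv[2]))
```

Run it as `python validate_configs.py cluster-config.json provisioning-parameters.json` (the script name is hypothetical); a nonzero exit code makes it easy to gate cluster creation in a CI pipeline.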

Then we created the cluster:
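The creation command itself isn't shown here. As a sketch, assuming cluster-config.json uses the same keys as the CreateCluster request (the shape that `aws sagemaker create-cluster --cli-input-json` also expects), the equivalent step with the AWS SDK for Python (Boto3) might look like the following. The helper names are hypothetical; the client is passed in so the functions can be exercised without AWS credentials.

```python
import json


def load_cluster_config(path="cluster-config.json"):
    """Load the same JSON you would pass to `aws sagemaker create-cluster --cli-input-json`."""
    with open(path) as f:
        return json.load(f)


def create_cluster(sagemaker_client, cluster_config):
    """Call the SageMaker CreateCluster API and return the new cluster's ARN.

    Assumes cluster_config uses CreateCluster request keys
    (ClusterName, InstanceGroups, VpcConfig, ...).
    """
    response = sagemaker_client.create_cluster(**cluster_config)
    return response["ClusterArn"]


def cluster_status(sagemaker_client, cluster_name):
    """Return the ClusterStatus, for example "Creating", "InService", or "Failed"."""
    return sagemaker_client.describe_cluster(ClusterName=cluster_name)["ClusterStatus"]
```

For example: `client = boto3.client("sagemaker")`, then `arn = create_cluster(client, load_cluster_config())`, and poll `cluster_status(client, "research-hyperpod")` until it reports `InService`.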

Implement cost tracking and budget enforcement

To monitor usage and control costs, each SageMaker HyperPod resource (for example, Amazon EC2, FSx for Lustre, and others) was tagged with a unique ClusterName tag. AWS Budgets and AWS Cost Explorer reports were configured to track monthly spending per cluster. Additionally, alerts were set up to notify researchers when they approached their quota or budget thresholds.

This integration helped drive efficient utilization and predictable research spending.
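The following Python sketch builds the request for a per-cluster monthly budget with email alerts, assuming the ClusterName tag has been activated as a cost allocation tag (tag filters don't match otherwise). It uses the AWS Budgets CreateBudget `CostFilters` field with the `user:<TagKey>$<TagValue>` tag syntax. The budget naming scheme, the 80% and 100% thresholds, and the email address are hypothetical choices.

```python
def monthly_cluster_budget(cluster_name, monthly_limit_usd, alert_emails, thresholds=(80.0, 100.0)):
    """Build keyword arguments (minus AccountId) for the AWS Budgets CreateBudget API.

    The budget is scoped to resources carrying the ClusterName cost allocation tag,
    with one ACTUAL-spend email notification per percentage threshold.
    """
    notifications = [
        {
            "Notification": {
                "NotificationType": "ACTUAL",
                "ComparisonOperator": "GREATER_THAN",
                "Threshold": float(threshold),
                "ThresholdType": "PERCENTAGE",
            },
            "Subscribers": [
                {"SubscriptionType": "EMAIL", "Address": email} for email in alert_emails
            ],
        }
        for threshold in thresholds
    ]
    return {
        "Budget": {
            "BudgetName": f"hyperpod-{cluster_name}-monthly",
            "BudgetLimit": {"Amount": f"{monthly_limit_usd:.2f}", "Unit": "USD"},
            "TimeUnit": "MONTHLY",
            "BudgetType": "COST",
            # Tag filters use the "user:<TagKey>$<TagValue>" form.
            "CostFilters": {"TagKeyValue": [f"user:ClusterName${cluster_name}"]},
        },
        "NotificationsWithSubscribers": notifications,
    }
```

The result can be passed straight to Boto3: `boto3.client("budgets").create_budget(AccountId="111122223333", **monthly_cluster_budget("research-hyperpod", 5000, ["pi@example.edu"]))`. The account ID, limit, and address in that call are placeholders.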

Enable load balancing for login nodes

As the number of concurrent users grew, the university adopted a multi-login node architecture. Two login nodes were deployed in EC2 Auto Scaling groups. A Network Load Balancer was configured with target groups to route SSH and Systems Manager traffic. Finally, AWS Lambda functions enforced per-user session limits using Run-As tags with Session Manager, a capability of Systems Manager.

For details about the full implementation, see Implementing login node load balancing in SageMaker HyperPod for enhanced multi-user experience.
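The Lambda code from that implementation isn't reproduced here. As an assumption-labeled sketch, one way such a function could enforce a per-user cap is to list active Session Manager sessions with DescribeSessions, group them by `Owner`, and call TerminateSession on each user's newest sessions beyond the limit. The default cap of two sessions and the helper names are hypothetical.

```python
from collections import defaultdict


def sessions_to_terminate(sessions, max_per_user):
    """Return the IDs of each user's newest sessions beyond max_per_user.

    `sessions` uses the shape returned by SSM DescribeSessions: dicts with
    "SessionId", "Owner", and a sortable "StartDate".
    """
    by_owner = defaultdict(list)
    for session in sessions:
        by_owner[session["Owner"]].append(session)
    excess = []
    for owner_sessions in by_owner.values():
        owner_sessions.sort(key=lambda s: s["StartDate"])  # oldest first, so they are kept
        excess += [s["SessionId"] for s in owner_sessions[max_per_user:]]
    return excess


def enforce_session_limit(ssm_client, max_per_user=2):
    """Terminate over-limit Session Manager sessions; return the IDs that were ended."""
    sessions, token = [], None
    while True:  # follow NextToken until every active session has been listed
        kwargs = {"State": "Active"}
        if token:
            kwargs["NextToken"] = token
        page = ssm_client.describe_sessions(**kwargs)
        sessions += page.get("Sessions", [])
        token = page.get("NextToken")
        if not token:
            break
    excess = sessions_to_terminate(sessions, max_per_user)
    for session_id in excess:
        ssm_client.terminate_session(SessionId=session_id)
    return excess
```

In a Lambda handler, this could be invoked as `enforce_session_limit(boto3.client("ssm"))`, for example on an Amazon EventBridge schedule. Keeping the oldest sessions means a user's established work survives and only the newest connection over the cap is closed.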

Configure federated access and user mapping

To provide secure and seamless access for researchers, the institution integrated AWS IAM Identity Center with their on-premises Active Directory (AD) using AWS Directory Service. This allowed unified control and management of user identities and access privileges across SageMaker HyperPod accounts. The implementation consisted of the following key components:

- Federated user integration – We mapped AD users to POSIX user names using Session Manager run-as tags, allowing fine-grained control over compute node access

- Secure session management – We configured Systems Manager so that users access compute nodes with their own accounts, not the default ssm-user

- Identity-based tagging – Federated user names were automatically mapped to user directories, workloads, and budgets through resource tags
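To make the user mapping concrete, the following Python sketch normalizes an AD identity to a POSIX user name and produces the `SSMSessionRunAs` tag that Session Manager reads to choose the OS user when Run As support is enabled. How the tag is attached (an IAM role tag, or an attribute passed through IAM Identity Center) depends on your setup. The normalization rules (strip the domain, lowercase, replace invalid characters, 32-character cap) are assumptions chosen to match common Linux username conventions, not the institution's exact rules.

```python
import re

MAX_POSIX_NAME = 32  # common Linux user name length limit


def ad_to_posix(ad_name):
    """Map 'UNIV\\JDoe' or 'jdoe@univ.edu' to a POSIX-safe user name such as 'jdoe'."""
    name = ad_name.split("\\")[-1].split("@")[0].lower()  # drop NetBIOS domain or UPN suffix
    name = re.sub(r"[^a-z0-9_-]", "_", name)  # replace characters invalid in user names
    if not re.match(r"[a-z_]", name):  # POSIX names should not start with a digit or dash
        name = "_" + name
    return name[:MAX_POSIX_NAME]


def run_as_tag(ad_name):
    """Tag telling Session Manager which OS user a federated principal runs as."""
    return {"Key": "SSMSessionRunAs", "Value": ad_to_posix(ad_name)}
```

Because the same function produces both the Linux account name and the tag value, the directory, home folder, and Slurm user for a researcher stay aligned with a single deterministic rule.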

For complete step-by-step guidance, refer to the Amazon SageMaker HyperPod workshop.

This approach streamlined user provisioning and access control while keeping them closely aligned with institutional policies and compliance requirements.

Post-deployment optimizations

To help prevent idle sessions from needlessly consuming compute resources, the university configured SLURM with Pluggable Authentication Modules (PAM). This setup automatically logs users out after their SLURM jobs complete or are canceled, so compute nodes become available promptly for queued jobs.

The configuration improved job scheduling throughput by freeing idle nodes immediately and reduced the administrative overhead of managing inactive sessions.
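In Slurm, this behavior is typically provided by the `pam_slurm_adopt` module, enabled with a line such as `account required pam_slurm_adopt.so` in the SSH PAM stack on compute nodes. It rejects SSH logins from users without a job on the node and places allowed sessions in the job's cgroup so they end with the job. The following Python sketch only illustrates that rule: given running jobs, for example from `squeue -h -t RUNNING -o "%u %N"`, it finds sessions whose user no longer has a job on that node. The `Session` type and helper names are hypothetical, and the parser assumes single-node jobs (multi-node node lists would first need expanding with `scontrol show hostnames`).

```python
from typing import Iterable, List, NamedTuple, Set, Tuple


class Session(NamedTuple):
    user: str
    node: str
    pid: int


def parse_running_jobs(squeue_output: str) -> Set[Tuple[str, str]]:
    """Parse lines of "<user> <node>" into (user, node) pairs with a running job."""
    pairs = set()
    for line in squeue_output.splitlines():
        parts = line.split()
        if len(parts) == 2:  # skip blank or malformed lines
            pairs.add((parts[0], parts[1]))
    return pairs


def sessions_without_job(
    sessions: Iterable[Session], running: Set[Tuple[str, str]]
) -> List[Session]:
    """Sessions whose user has no running job on that node, so they should be ended."""
    return [s for s in sessions if (s.user, s.node) not in running]
```

Ending sessions as soon as the owning job finishes is what frees the node for the next queued job without an administrator having to find and clean up stray logins.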

Additionally, QoS policies were configured to control resource consumption, limit job durations, and enforce fair GPU access across users and departments. For example:

- MaxTRESPerUser – Keeps GPU or CPU usage per user within defined limits

- MaxWallDurationPerJob – Helps prevent excessively long jobs from monopolizing nodes

- Priority weights – Adjusts scheduling priority based on research group or project
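The following Python sketch renders these settings as `sacctmgr` commands and slurm.conf priority lines. The QoS name, GPU cap, wall-time limit, account name, and weight values are hypothetical; `MaxTRESPerUser`, `MaxWallDurationPerJob`, `Priority`, `QOS+=`, and the `PriorityWeight*` parameters are standard Slurm, but the right values depend on each institution's fairness policy.

```python
def qos_commands(name, max_gpus_per_user, max_wall, priority):
    """sacctmgr commands creating a QoS with per-user GPU, wall-time, and priority limits."""
    return [
        f"sacctmgr -i add qos {name}",
        f"sacctmgr -i modify qos {name} set "
        f"MaxTRESPerUser=gres/gpu={max_gpus_per_user} "
        f"MaxWallDurationPerJob={max_wall} Priority={priority}",
    ]


def attach_qos(account, qos_name):
    """Add the QoS to the allowed list of a department account's association."""
    return f"sacctmgr -i modify account where name={account} set QOS+={qos_name}"


# slurm.conf: multifactor priority so QoS priority and fair share influence job order.
PRIORITY_LINES = [
    "PriorityType=priority/multifactor",
    "PriorityWeightQOS=10000",
    "PriorityWeightFairshare=5000",
    "PriorityWeightAge=1000",
]


if __name__ == "__main__":
    for cmd in qos_commands("gpu_normal", 4, "24:00:00", 100) + [attach_qos("nlp_dept", "gpu_normal")]:
        print(cmd)
    print("\n".join(PRIORITY_LINES))
```

Weighting QoS above fair share lets a project's assigned QoS dominate ordering, while fair share still breaks ties in favor of groups that have used less of the cluster recently.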

These enhancements produced an optimized, balanced HPC environment that fits the shared infrastructure model of academic research institutions.

Clean up

To delete the resources and avoid incurring ongoing charges, complete the following steps:

- Delete the SageMaker HyperPod cluster:

- Delete the CloudFormation stack used for the SageMaker HyperPod infrastructure:

This automatically removes the associated resources, such as the VPC and subnets, FSx for Lustre file system, S3 bucket, and IAM roles. If you created these resources outside of CloudFormation, you must delete them manually.
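The same two steps can be scripted. The following is a minimal Boto3-based sketch, assuming placeholder cluster and stack names. It deletes the cluster first and polls ListClusters until the cluster disappears, because the cluster's instances depend on the VPC, subnets, and FSx file system that the stack owns. It then deletes the stack and waits with CloudFormation's `stack_delete_complete` waiter. The function name, polling interval, and timeout are hypothetical choices.

```python
import time


def delete_hyperpod_resources(sagemaker, cloudformation, cluster_name, stack_name,
                              poll_seconds=30, max_polls=120, sleep=time.sleep):
    """Delete the HyperPod cluster, wait until it is gone, then delete the CloudFormation stack."""
    sagemaker.delete_cluster(ClusterName=cluster_name)

    # NameContains is a substring filter, so match the exact name before deciding.
    for _ in range(max_polls):
        summaries = sagemaker.list_clusters(NameContains=cluster_name)["ClusterSummaries"]
        if not any(s["ClusterName"] == cluster_name for s in summaries):
            break
        sleep(poll_seconds)
    else:
        raise TimeoutError(f"cluster {cluster_name} still present after deletion request")

    cloudformation.delete_stack(StackName=stack_name)
    cloudformation.get_waiter("stack_delete_complete").wait(StackName=stack_name)
```

Usage: `delete_hyperpod_resources(boto3.client("sagemaker"), boto3.client("cloudformation"), "research-hyperpod", "hyperpod-stack")`, where both names are placeholders for your own cluster and stack.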

Conclusion

SageMaker HyperPod provides research universities with a powerful, fully managed HPC solution tailored to the unique demands of AI workloads. By automating infrastructure provisioning, scaling, and resource optimization, institutions can accelerate innovation while maintaining budget control and operational efficiency. Through customized SLURM configurations, GPU sharing using GRES, federated access, and robust login node balancing, this solution highlights the potential of SageMaker HyperPod to transform research computing, so researchers can focus on science, not infrastructure.

For more details on getting the most out of SageMaker HyperPod, check out the SageMaker HyperPod workshop and explore other blog posts about SageMaker HyperPod.

About the authors

Tasneem Fathima is a Senior Solutions Architect at AWS. She helps Higher Education and Research customers in the United Arab Emirates adopt cloud technologies, improve their time to science, and innovate on AWS.

Mohamed Hossam is a Senior HPC Cloud Solutions Architect at Brightskies, specializing in high-performance computing (HPC) and AI infrastructure on AWS. He helps universities and research institutions across the Gulf and Middle East harness GPU clusters, accelerate AI adoption, and migrate HPC/AI/ML workloads to the AWS Cloud. In his free time, Mohamed enjoys playing video games.
