This post was co-written with Renato Nascimento, Felipe Viana, and Andre Von Zuben from Articul8.

Generative AI is reshaping industries, offering new efficiencies, automation, and innovation. However, generative AI requires powerful, scalable, and resilient infrastructure that optimizes large-scale model training, providing rapid iteration and efficient compute utilization through purpose-built infrastructure and automated cluster management.

In this post, we share how Articul8 is accelerating their training and deployment of domain-specific models (DSMs) by using Amazon SageMaker HyperPod, achieving over 95% cluster utilization and a 35% improvement in productivity.

What is SageMaker HyperPod?

SageMaker HyperPod is an advanced distributed training solution designed to accelerate scalable, reliable, and secure generative AI model development. Articul8 uses SageMaker HyperPod to efficiently train large language models (LLMs) on diverse, representative data and uses its observability and resiliency features to keep the training environment stable over the long duration of training jobs. SageMaker HyperPod provides the following features:

- Fault-tolerant compute clusters with automated faulty node replacement during model training

- Efficient cluster utilization through observability and performance monitoring

- Seamless model experimentation with streamlined infrastructure orchestration using Slurm and Amazon Elastic Kubernetes Service (Amazon EKS)

Who is Articul8?

Articul8 was established to address the gaps in enterprise generative AI adoption by developing autonomous, production-ready products. For instance, they found that most general-purpose LLMs often fall short in delivering the accuracy, efficiency, and domain-specific knowledge needed for real-world business challenges. They are pioneering a set of DSMs that offer twofold better accuracy and completeness, compared to general-purpose models, at a fraction of the cost. (See their recent blog post for more details.)

The company’s proprietary ModelMesh™ technology serves as an autonomous layer that decides, selects, executes, and evaluates the right models at runtime. Think of it as a reasoning system that determines what to run, when to run it, and in what sequence, based on the task and context. It evaluates responses at every step to refine its decision-making, enabling more reliable and interpretable AI solutions while dramatically improving performance.

Articul8’s ModelMesh™ supports:

- LLMs for general tasks

- Domain-specific models optimized for industry-specific applications

- Non-LLMs for specialized reasoning tasks or established domain-specific tasks (for example, scientific simulation)

Articul8’s domain-specific models are setting new industry standards across supply chain, energy, and semiconductor sectors. The A8-SupplyChain model, built for complex workflows, achieves 92% accuracy and threefold performance gains over general-purpose LLMs in sequential reasoning. In energy, A8-Energy models were developed with EPRI and NVIDIA as part of the Open Power AI Consortium, enabling advanced grid optimization, predictive maintenance, and equipment reliability. The A8-Semicon model has set a new benchmark, outperforming top open-source (DeepSeek-R1, Meta Llama 3.3/4, Qwen 2.5) and proprietary models (GPT-4o, Anthropic’s Claude) by twofold in Verilog code accuracy, all while running at 50–100 times smaller model sizes for real-time AI deployment.

Articul8 develops some of their domain-specific models using Meta’s Llama family as a flexible, open-weight foundation for expert-level reasoning. Through a rigorous fine-tuning pipeline with reasoning trajectories and curated benchmarks, general Llama models are transformed into domain specialists. To tailor models for areas like hardware description languages, Articul8 applies Reinforcement Learning with Verifiable Rewards (RLVR), using automated reward pipelines to specialize the model’s policy. In one case, a dataset of 50,000 documents was automatically processed into 1.2 million images, 360,000 tables, and 250,000 summaries, clustered into a knowledge graph of over 11 million entities. These structured insights fuel A8-DSMs across research, product design, development, and operations.
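
To make the idea of a verifiable reward concrete, here is a minimal sketch in Python. It is not Articul8's reward pipeline, which the post does not describe. It assumes a toy setting where a generated piece of combinational logic is represented as a Python callable and is checked exhaustively against a reference function. In a real hardware description language workflow the check would more likely compile and simulate the generated design; the function and variable names below are hypothetical.

```python
from itertools import product
from typing import Callable


def verifiable_reward(candidate: Callable[..., int],
                      reference: Callable[..., int],
                      n_inputs: int) -> float:
    """Return 1.0 only if the candidate matches the reference on every
    possible input vector of 0/1 bits; otherwise return 0.0.

    The reward is 'verifiable' because it comes from an automatic check,
    not from a learned preference model or a human rating.
    """
    for bits in product((0, 1), repeat=n_inputs):
        try:
            if candidate(*bits) != reference(*bits):
                return 0.0
        except Exception:
            # A candidate that crashes on any input earns no reward.
            return 0.0
    return 1.0


if __name__ == "__main__":
    # Reference behavior: 2-input XOR (toy stand-in for a design spec).
    xor_reference = lambda a, b: a ^ b

    good_candidate = lambda a, b: (a + b) % 2   # equivalent to XOR on bits
    bad_candidate = lambda a, b: a | b          # differs when a = b = 1

    print(verifiable_reward(good_candidate, xor_reference, n_inputs=2))
    print(verifiable_reward(bad_candidate, xor_reference, n_inputs=2))
```

A binary pass/fail reward like this is the simplest choice; a pipeline could instead return the fraction of checks passed to give a denser training signal.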

How SageMaker HyperPod accelerated the development of Articul8’s DSMs

Cost and time to train DSMs are critical to Articul8’s success in a rapidly evolving ecosystem. Training high-performance DSMs requires extensive experimentation, rapid iteration, and scalable compute infrastructure. With SageMaker HyperPod, Articul8 was able to:

- Rapidly iterate on DSM training – SageMaker HyperPod resiliency features enabled Articul8 to train and fine-tune its DSMs in a fraction of the time required by traditional infrastructure

- Optimize model training performance – By using the automated failure recovery feature in SageMaker HyperPod, Articul8 provided stable and resilient training processes

- Reduce AI deployment time by 4 times and lower total cost of ownership by 5 times – The orchestration capabilities of SageMaker HyperPod alleviated the manual overhead of cluster management, allowing Articul8’s research teams to focus on model optimization rather than infrastructure upkeep

These advantages contributed to record-setting benchmark results by Articul8, proving that domain-specific models deliver superior real-world performance compared to general-purpose models.

Distributed training challenges and the role of SageMaker HyperPod

Distributed training across hundreds of nodes faces several critical challenges beyond basic resource constraints. Managing massive training clusters requires robust infrastructure orchestration and careful resource allocation for operational efficiency. SageMaker HyperPod offers both managed Slurm and Amazon EKS orchestration experiences that streamline cluster creation, infrastructure resilience, job submission, and observability. The following details focus on the Slurm implementation for reference:

- Cluster setup – Although setting up a cluster is a one-time effort, the process is streamlined with a setup script that walks the administrator through each step of cluster creation. This post shows how this can be done in discrete steps.

- Resiliency – Fault tolerance becomes paramount when operating at scale. SageMaker HyperPod handles node failures and network interruptions by replacing faulty nodes automatically. You can add the flag `--auto-resume=1` with the Slurm `srun` command, and the distributed training job will recover from the last checkpoint.

- Job submission – SageMaker HyperPod managed Slurm orchestration is a powerful way for data scientists to submit and manage distributed training jobs. Refer to the following example in the AWS-samples distributed training repo for reference. For instance, a distributed training job can be submitted with a Slurm sbatch command: `sbatch 1.distributed-training-llama2.sbatch`. You can use `squeue` and `scancel` to view and cancel jobs, respectively.

- Observability – SageMaker HyperPod uses Amazon CloudWatch and open source managed Prometheus and Grafana services for monitoring and logging. Cluster administrators can view the health of the infrastructure (network, storage, compute) and utilization.
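
The resiliency and job submission points above can be sketched in Python. This is a minimal illustration that only assembles command lines and picks a restart checkpoint; it does not call Slurm. The `--auto-resume=1` flag and the sbatch file name come from the text above. The training script name `train.py`, the `step_<N>.pt` checkpoint naming scheme, and both helper functions are hypothetical and stand in for whatever a real training job uses.

```python
import re
import shlex
from typing import Iterable, List, Optional


def build_srun_command(train_cmd: List[str], auto_resume: bool = True) -> List[str]:
    """Assemble an srun command line, optionally adding --auto-resume=1."""
    cmd = ["srun"]
    if auto_resume:
        cmd.append("--auto-resume=1")
    return cmd + list(train_cmd)


def pick_latest_checkpoint(filenames: Iterable[str]) -> Optional[str]:
    """Return the file with the highest step number, or None if there is none.

    Assumes the hypothetical naming scheme step_<N>.pt; other files are ignored.
    """
    pattern = re.compile(r"^step_(\d+)\.pt$")
    best_name, best_step = None, -1
    for name in filenames:
        match = pattern.match(name)
        if match and int(match.group(1)) > best_step:
            best_name, best_step = name, int(match.group(1))
    return best_name


if __name__ == "__main__":
    # Command a job script might run on each node.
    print(shlex.join(build_srun_command(["python", "train.py"])))
    # Submission command for the sbatch file named in the text.
    print(shlex.join(["sbatch", "1.distributed-training-llama2.sbatch"]))
    # Checkpoint a resumed job would load under the assumed naming scheme.
    print(pick_latest_checkpoint(["step_100.pt", "step_2000.pt", "notes.txt"]))
```

Automatic resume restarts the job, but the training script still has to save checkpoints periodically and load the most recent one on startup, which is what the second helper illustrates.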

Solution overview

The SageMaker HyperPod platform enables Articul8 to efficiently manage high-performance compute clusters without requiring a dedicated infrastructure team. The service automatically monitors cluster health and replaces faulty nodes, making the deployment process frictionless for researchers.

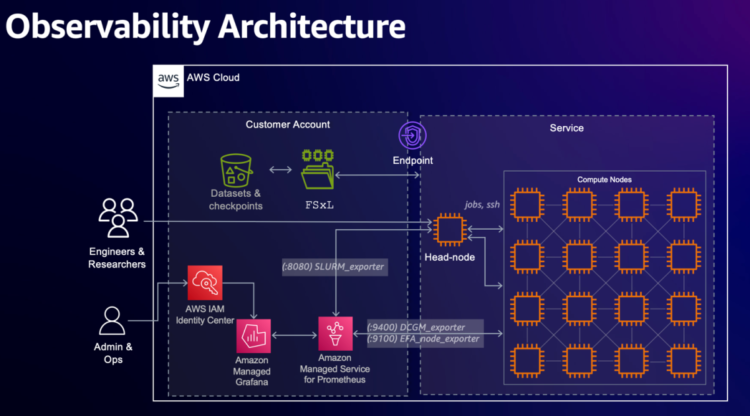

To enhance their experimental capabilities, Articul8 integrated SageMaker HyperPod with Amazon Managed Grafana, providing real-time observability of GPU resources through a single-pane-of-glass dashboard. They also used SageMaker HyperPod lifecycle scripts to customize their cluster environment and install required libraries and packages. This comprehensive setup empowers Articul8 to conduct rapid experimentation while maintaining high performance and reliability. They reduced their customers’ AI deployment time by 4 times and lowered their total cost of ownership by 5 times.

The following diagram illustrates the observability architecture.

The platform’s efficiency in managing computational resources with minimal downtime has been particularly valuable for Articul8’s research and development efforts, empowering them to quickly iterate on their generative AI solutions while maintaining enterprise-grade performance standards. The following sections describe the setup and results in detail.

For the setup in this post, we begin with the AWS published workshop for SageMaker HyperPod and adjust it to suit our workload.

Prerequisites

The following two AWS CloudFormation templates address the prerequisites of the solution setup.

For SageMaker HyperPod

This CloudFormation stack addresses the prerequisites for SageMaker HyperPod:

- VPC and two subnets – A public subnet and a private subnet are created in an Availability Zone (provided as a parameter). The virtual private cloud (VPC) contains two CIDR blocks with 10.0.0.0/16 (for the public subnet) and 10.1.0.0/16 (for the private subnet). An internet gateway and NAT gateway are deployed in the public subnet.

- Amazon FSx for Lustre file system – An Amazon FSx for Lustre volume is created in the specified Availability Zone, with a default of 1.2 TB storage, which can be overridden by a parameter. For this case study, we increased the storage size to 7.2 TB.

- Amazon S3 bucket – The stack deploys endpoints for Amazon Simple Storage Service (Amazon S3) to store lifecycle scripts.

- IAM role – An AWS Identity and Access Management (IAM) role is also created to help execute SageMaker HyperPod cluster operations.

- Security group – The script creates a security group to enable EFA communication for multi-node parallel batch jobs.
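
As a quick sanity check on the network layout above, the following Python sketch uses the standard library `ipaddress` module to confirm that the two CIDR blocks named in the text do not overlap and to show how many addresses each one spans. The variable and function names are illustrative only; the CloudFormation template itself is not reproduced here.

```python
import ipaddress

# CIDR blocks taken from the prerequisites list above.
PUBLIC_CIDR = ipaddress.ip_network("10.0.0.0/16")
PRIVATE_CIDR = ipaddress.ip_network("10.1.0.0/16")


def blocks_are_disjoint(a: ipaddress.IPv4Network, b: ipaddress.IPv4Network) -> bool:
    """Return True when the two networks share no addresses."""
    return not a.overlaps(b)


if __name__ == "__main__":
    print("disjoint:", blocks_are_disjoint(PUBLIC_CIDR, PRIVATE_CIDR))
    for label, net in (("public", PUBLIC_CIDR), ("private", PRIVATE_CIDR)):
        # num_addresses counts every address in the block, including
        # any addresses that a cloud provider reserves for its own use.
        print(label, net, net.num_addresses)
```

A /16 block spans 65,536 addresses, which leaves ample room for the private subnet that hosts the compute nodes.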

For cluster observability

To get visibility into cluster operations and make sure workloads are running as expected, an optional CloudFormation stack has been used for this case study. This stack includes:

- Node exporter – Supports visualization of CPU load averages, memory and disk usage, network traffic, file system, and disk I/O metrics

- NVIDIA DCGM – Supports visualization of GPU utilization, temperatures, power usage, and memory usage

- EFA metrics – Supports visualization of EFA network and error metrics, EFA RDMA performance, and so on

- FSx for Lustre – Supports visualization of file system read/write operations, free capacity, and metadata operations

Observability can be configured through YAML scripts to monitor SageMaker HyperPod clusters on AWS. Amazon Managed Service for Prometheus and Amazon Managed Grafana workspaces with associated IAM roles are deployed in the AWS account. Prometheus and exporter services are also set up on the cluster nodes.

Using Amazon Managed Grafana with SageMaker HyperPod helps you create dashboards to monitor GPU clusters and make sure they operate efficiently with minimal downtime. In addition, dashboards have become a critical tool to give you a holistic view of how specialized workloads consume different resources of the cluster, helping developers optimize their implementation.
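
To show the kind of data these dashboards are built on, here is a minimal Python sketch that averages GPU utilization from exporter output in the Prometheus text format. It assumes the NVIDIA DCGM exporter's `DCGM_FI_DEV_GPU_UTIL` gauge; the sample lines, label set, host names, and values are made up for illustration and are not measurements from Articul8's cluster. In the setup described above, Prometheus scrapes these metrics and Grafana does the aggregation, so this is only a stand-in for that pipeline.

```python
import re
from statistics import mean

# Made-up sample in Prometheus text exposition format (not real measurements).
SAMPLE_METRICS = """\
# HELP DCGM_FI_DEV_GPU_UTIL GPU utilization (in %).
# TYPE DCGM_FI_DEV_GPU_UTIL gauge
DCGM_FI_DEV_GPU_UTIL{gpu="0",Hostname="node-1"} 97
DCGM_FI_DEV_GPU_UTIL{gpu="1",Hostname="node-1"} 95
DCGM_FI_DEV_GPU_UTIL{gpu="0",Hostname="node-2"} 96
DCGM_FI_DEV_GPU_UTIL{gpu="1",Hostname="node-2"} 98
"""

# Matches a sample line for the metric, with or without a label set.
_GPU_UTIL_LINE = re.compile(
    r"^DCGM_FI_DEV_GPU_UTIL(?:\{[^}]*\})?\s+([0-9.eE+-]+)\s*$",
    re.MULTILINE,
)


def mean_gpu_utilization(metrics_text: str) -> float:
    """Average the GPU utilization samples found in the given text."""
    values = [float(v) for v in _GPU_UTIL_LINE.findall(metrics_text)]
    if not values:
        raise ValueError("no DCGM_FI_DEV_GPU_UTIL samples found")
    return mean(values)


if __name__ == "__main__":
    print(f"mean GPU utilization: {mean_gpu_utilization(SAMPLE_METRICS):.1f}%")
```

Note that per-GPU utilization is only one ingredient of a cluster utilization figure, which may also account for idle or unallocated nodes.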

Cluster setup

The cluster is set up with the following components (results might vary based on customer use case and deployment setup):

- Head node and compute nodes – For this case study, we use a head node and SageMaker HyperPod compute nodes. The head node has an ml.m5.12xlarge instance, and the compute queue consists of ml.p4de.24xlarge instances.

- Shared volume – The cluster has an FSx for Lustre file system mounted at /fsx on both the head and compute nodes.

- Local storage – Each node has an 8 TB local NVMe volume attached for local storage.

- Scheduler – Slurm is used as an orchestrator. Slurm is an open source and highly scalable cluster management tool and job scheduling system for high-performance computing (HPC) clusters.

- Accounting – As part of cluster configuration, a local MariaDB is deployed that keeps track of job runtime information.

Results

During this project, Articul8 was able to confirm the expected performance of A100 with the added benefit of creating a cluster using Slurm and providing observability metrics to monitor the health of various components (storage, GPU nodes, fiber). The primary validation was on the ease of use and rapid ramp-up of data science experiments. Furthermore, they were able to demonstrate near linear scaling with distributed training, achieving a 3.78 times reduction in time to train for Meta Llama-2 13B with 4x nodes. Having the flexibility to run multiple experiments without losing development time to infrastructure overhead was an important accomplishment for the Articul8 data science team.
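
The "near linear" claim can be made concrete with a short calculation. The sketch below divides the reported 3.78 times speedup by the 4x increase in nodes to get a scaling efficiency. The two figures come from the text above; the function names and the 100-hour baseline in the usage example are hypothetical, since the post does not give absolute training times.

```python
def scaling_efficiency(speedup: float, node_factor: float) -> float:
    """Fraction of ideal (linear) speedup actually achieved."""
    if node_factor <= 0:
        raise ValueError("node_factor must be positive")
    return speedup / node_factor


def scaled_time(baseline_time: float, speedup: float) -> float:
    """Training time after scaling out, given the baseline time and speedup."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return baseline_time / speedup


if __name__ == "__main__":
    efficiency = scaling_efficiency(speedup=3.78, node_factor=4)
    print(f"scaling efficiency: {efficiency:.1%}")

    # Hypothetical 100-hour baseline, used only to illustrate the ratio.
    print(f"scaled training time: {scaled_time(100.0, 3.78):.1f} hours")
```

With these inputs the efficiency works out to 94.5% of ideal linear scaling, which is what "near linear" means here: quadrupling the nodes cut training time to roughly 26% of the baseline instead of the ideal 25%.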

Clean up

If you run the cluster as part of the workshop, you can follow the cleanup steps to delete the CloudFormation resources after deleting the cluster.

Conclusion

This post demonstrated how Articul8 AI used SageMaker HyperPod to overcome the scalability and efficiency challenges of training multiple high-performing DSMs across key industries. By alleviating infrastructure complexity, SageMaker HyperPod empowered Articul8 to focus on building AI systems with measurable business outcomes. From semiconductor and energy to supply chain, Articul8’s DSMs are proving that the future of enterprise AI is not general; it is purpose-built. Key takeaways include:

- DSMs significantly outperform general-purpose LLMs in critical domains

- SageMaker HyperPod accelerated the development of Articul8’s A8-Semicon, A8-SupplyChain, and Energy DSM models

- Articul8 reduced AI deployment time by 4 times and lowered total cost of ownership by 5 times using the scalable, automated training infrastructure of SageMaker HyperPod

Learn more about SageMaker HyperPod by following this workshop. Reach out to your account team on how you can use this service to accelerate your own training workloads.

About the Authors

Yashesh A. Shroff, PhD. is a Sr. GTM Specialist in the GenAI Frameworks group, responsible for scaling customer foundational model training and inference on AWS using self-managed or specialized services to meet cost and performance requirements. He holds a PhD in Computer Science from UC Berkeley and an MBA from Columbia Graduate School of Business.

Amit Bhatnagar is a Sr Technical Account Manager with AWS, in the Enterprise Support group, with a focus on generative AI startups. He is responsible for helping key AWS customers with their strategic initiatives and operational excellence in the cloud. When he is not chasing technology, Amit loves to cook vegan delicacies and hit the road with his family to chase the horizon.

Renato Nascimento is the Head of Technology at Articul8, where he leads the development and execution of the company’s technology strategy. With a focus on innovation and scalability, he ensures the seamless integration of cutting-edge solutions into Articul8’s products, enabling industry-leading performance and enterprise adoption.

Felipe Viana is the Head of Applied Research at Articul8, where he leads the design, development, and deployment of innovative generative AI technologies, including domain-specific models, new model architectures, and multi-agent autonomous systems.

Andre Von Zuben is the Head of Architecture at Articul8, where he is responsible for designing and implementing scalable generative AI platform elements, novel generative AI model architectures, and distributed model training and deployment pipelines.
