This publish is the second a part of the DeepSeek collection specializing in mannequin customization with Amazon SageMaker HyperPod recipes (or recipes for brevity). In Half 1, we demonstrated the efficiency and ease of fine-tuning DeepSeek-R1 distilled fashions utilizing these recipes. On this publish, we use the recipes to fine-tune the unique DeepSeek-R1 671b parameter mannequin. We reveal this by way of the step-by-step implementation of those recipes utilizing each SageMaker coaching jobs and SageMaker HyperPod.

Enterprise use case

After its public launch, DeepSeek-R1 mannequin, developed by DeepSeek AI, confirmed spectacular outcomes throughout a number of analysis benchmarks. The mannequin follows the Combination of Specialists (MoE) structure and has 671 billion parameters. Historically, massive fashions are nicely tailored for a large spectrum of generalized duties by the advantage of being educated on the massive quantity of knowledge. The DeepSeek-R1 mannequin was educated on 14.8 trillion tokens. The unique R1 mannequin demonstrates robust few-shot or zero-shot studying capabilities, permitting it to generalize to new duties and eventualities that weren’t a part of its authentic coaching.

Nonetheless, many shoppers favor to both fine-tune or run steady pre-training of those fashions to adapt it to their particular enterprise functions or to optimize it for particular duties. A monetary group would possibly need to customise the mannequin with their customized knowledge to help with their knowledge processing duties. Or a hospital community can fine-tune it with their affected person data to behave as a medical assistant for his or her medical doctors. High quality-tuning may also prolong the mannequin’s generalization capacity. Prospects can fine-tune it with a corpus of textual content in particular languages that aren’t totally represented within the authentic coaching knowledge. For instance, a mannequin fine-tuned with an extra trillion tokens of Hindi language will be capable to develop the identical generalization capabilities to Hindi.

The choice on which mannequin to fine-tune relies on the top utility in addition to the accessible dataset. Based mostly on the quantity of proprietary knowledge, clients can determine to fine-tune the bigger DeepSeek-R1 mannequin as an alternative of doing it for one of many distilled variations. As well as, the R1 fashions have their very own set of guardrails. Prospects would possibly need to fine-tune to replace these guardrails or develop on them.

High quality-tuning bigger fashions like DeepSeek-R1 requires cautious optimization to steadiness value, deployment necessities, and efficiency effectiveness. To realize optimum outcomes, organizations should meticulously choose an applicable setting, decide the most effective hyperparameters, and implement environment friendly mannequin sharding methods.

Resolution structure

SageMaker HyperPod recipes successfully deal with these necessities by offering a rigorously curated mixture of distributed coaching strategies, optimizations, and configurations for state-of-the-art (SOTA) open supply fashions. These recipes have undergone intensive benchmarking, testing, and validation to supply seamless integration with the SageMaker coaching and fine-tuning processes.

On this publish, we discover options that reveal find out how to fine-tune the DeepSeek-R1 mannequin utilizing these recipes on both SageMaker HyperPod or SageMaker coaching jobs. Your alternative between these companies will rely in your particular necessities and preferences. In case you require granular management over coaching infrastructure and intensive customization choices, SageMaker HyperPod is the perfect alternative. SageMaker coaching jobs, then again, is tailor-made for organizations that desire a totally managed expertise for his or her coaching workflows. To be taught extra particulars about these service options, confer with Generative AI basis mannequin coaching on Amazon SageMaker.

The next diagram illustrates the answer structure for coaching utilizing SageMaker HyperPod. With HyperPod, customers can start the method by connecting to the login/head node of the Slurm cluster. Every step is run as a Slurm job and makes use of Amazon FSx for Lustre for storing mannequin checkpoints. For DeepSeek-R1, the method consists of the next steps:

- Obtain the DeepSeek-R1 mannequin and convert weights from FP8 to BF16 format

- Load the mannequin into reminiscence and carry out fine-tuning utilizing Quantized Low-Rank Adaptation (QLoRA)

- Merge QLoRA adapters with the bottom mannequin

- Convert and cargo the mannequin for batch analysis

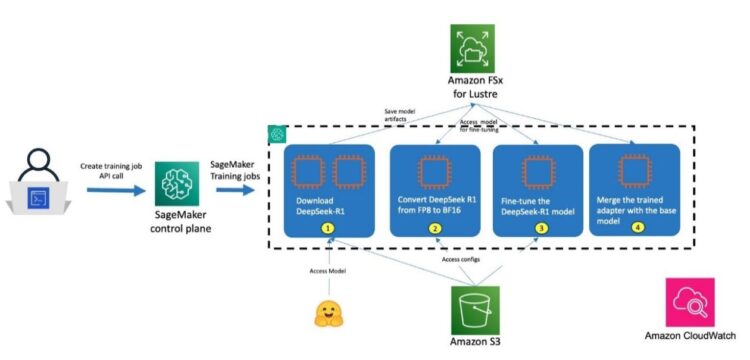

The next diagram illustrates the answer structure for SageMaker coaching jobs. You may execute every step within the coaching pipeline by initiating the method by way of the SageMaker management airplane utilizing APIs, AWS Command Line Interface (AWS CLI), or the SageMaker ModelTrainer SDK. In response, SageMaker launches coaching jobs with the requested quantity and kind of compute situations to run particular duties. For DeepSeek-R1, the method consists of three predominant steps:

- Obtain and convert R1 to BF16 datatype format

- Load the mannequin into reminiscence and carry out fine-tuning

- Consolidate and cargo the checkpoints into reminiscence, then run inference and metrics to guage efficiency enhancements

Conditions

Full the next stipulations earlier than operating the DeepSeek-R1 671B mannequin fine-tuning pocket book:

- Make the next quota improve requests for SageMaker. You must request a minimal of two

ml.p5.48xlargesituations (with 8 x NVIDIA H100 GPUs) ranging to a most of 4ml.p5.48xlargesituations (relying on time-to-train and cost-to-train trade-offs on your use case). On the Service Quotas console, request the next SageMaker quotas. It could actually take as much as 24 hours for the quota improve to be authorised:- P5 situations (

ml.p5.48xlarge) for coaching job utilization: 2–4 - P5 situations (

ml.p5.48xlarge) for HyperPod clusters (ml.p5.48xlarge for cluster utilization): 2–4

- P5 situations (

- In case you select to make use of HyperPod clusters to run your coaching, arrange a HyperPod Slurm cluster, referring to Amazon SageMaker HyperPod Developer Information. Alternatively, you can too use the AWS CloudFormation template supplied within the Personal Account workshop and observe the directions to arrange a cluster and a improvement setting to entry and submit jobs to the cluster.

- (Optionally available) In case you select to make use of SageMaker coaching jobs, you may create an Amazon SageMaker Studio area (confer with Use fast setup for Amazon SageMaker AI) to entry Jupyter notebooks with the previous position (You should utilize JupyterLab in your native setup too).

- Create an AWS Identification and Entry Administration (IAM) position with managed insurance policies

AmazonSageMakerFullAccess,AmazonFSxFullAccess, andAmazonS3FullAccessto offer the required entry to SageMaker to run the examples.

- Create an AWS Identification and Entry Administration (IAM) position with managed insurance policies

- Clone the GitHub repository with the property for this deployment. This repository consists of a pocket book that references coaching property:

Resolution walkthrough

To carry out the answer, observe the steps within the subsequent sections.

Technical concerns

The default weights supplied by the DeepSeek crew on their official R1 repository are of kind FP8. Nonetheless, we selected to disable FP8 in our recipes as a result of we empirically discovered that coaching with BF16 enhances generalization throughout various datasets with minimal adjustments to the recipe hyperparameters. Subsequently, to attain steady fine-tuning for a mannequin of 671b parameter measurement, we suggest first changing the mannequin from FP8 to BF16 utilizing the fp8_cast_bf16.py command-line script supplied by DeepSeek. Executing this script will copy over the transformed BF16 weights in Safetensor format to the required output listing. Keep in mind to repeat over the mannequin’s config.yaml to the output listing so the weights are loaded precisely. These steps are encapsulated in a prologue script and are documented step-by-step below the High quality-tuning part.

Prospects can use a sequence size of 8K for coaching, as examined on a p5.48xlarge occasion, every geared up with eight NVIDIA H100 GPUs. It’s also possible to select a smaller sequence size if wanted. Coaching with a sequence size larger than 8K would possibly result in out-of-memory points with GPUs. Additionally, changing mannequin weights from FP8 to BF16 requires a p5.48xlarge occasion, which can be advisable for coaching because of the mannequin’s excessive host reminiscence necessities throughout initialization.

Prospects should improve their transformers model to transformers==4.48.2 to run the coaching.

High quality-tuning

Run the finetune_deepseek_r1_671_qlora.ipynb pocket book to fine-tune the DeepSeek-R1 mannequin utilizing QLoRA on SageMaker.

Put together the dataset

This part covers loading the FreedomIntelligence/medical-o1-reasoning-SFT dataset, tokenizing and chunking the dataset, and configuring the information channels for SageMaker coaching on Amazon Easy Storage Service (Amazon S3). Full the next steps:

- Format the dataset by making use of the immediate format for DeepSeek-R1:

- Load the FreedomIntelligence/medical-o1-reasoning-SFT dataset and cut up it into coaching and validation datasets:

- Load the DeepSeek-R1 tokenizer from the Hugging Face Transformers library and generate tokens for the prepare and validation datasets. We use the unique sequence size of 8K:

- Put together the coaching and validation datasets for SageMaker coaching by saving them as

arrowrecordsdata, required by SageMaker HyperPod recipes, and developing the S3 paths the place these recordsdata shall be uploaded. This dataset shall be utilized in each SageMaker coaching jobs and SageMaker HyperPod examples:

The following part describes find out how to run a fine-tuning instance with SageMaker coaching jobs.

Possibility A: High quality-tune utilizing SageMaker coaching jobs

Observe these high-level steps:

- Obtain DeepSeek-R1 to the FSx for Lustre mounted listing

- Convert DeepSeek-R1 from FP8 to BF16

- High quality-tune the DeepSeek-R1 mannequin

- Merge the educated adapter with the bottom mannequin

Outline a utility perform to create the ModelTrainer class for each step of the SageMaker coaching jobs pipeline:

Obtain DeepSeek-R1 to the FSx for Lustre mounted listing

Observe these steps:

- Choose the occasion kind, Amazon FSx knowledge channel, community configuration for the coaching job, and supply code, then outline the ModelTrainer class to run the coaching job on the

ml.c5.18xlargeoccasion to obtain DeepSeek-R1 from the Hugging Face DeepSeek-R1 hub:

- Provoke the coaching calling prepare perform of the ModelTrainer class:

Convert DeepSeek R1 from FP8 to BF16

Use ModelTrainer to transform the DeepSeek-R1 downloaded mannequin weights from FP8 to BF16 format for optimum PEFT coaching. We use script convert.sh to run the execution utilizing the ml.c5.18xlarge occasion.

Use SageMaker coaching heat pool configuration to retain and reuse provisioned infrastructure after the completion of a mannequin obtain coaching job within the earlier step:

High quality-tune the DeepSeek-R1 mannequin

The following section includes fine-tuning the DeepSeek-R1 mannequin utilizing two ml.p5.48xlarge situations, utilizing distributed coaching. You implement this by way of the SageMaker recipe hf_deepseek_r1_671b_seq8k_gpu_qlora, which contains the QLoRA methodology. QLoRA makes the massive language mannequin (LLM) trainable on restricted compute by quantizing the bottom mannequin to 4-bit precision whereas utilizing small, trainable low-rank adapters for fine-tuning, dramatically decreasing reminiscence necessities with out sacrificing mannequin high quality:

Provoke the coaching job to fine-tune the mannequin. SageMaker coaching jobs will provision two P5 situations, orchestrate the SageMaker mannequin parallel container smdistributed-modelparallel:2.4.1-gpu-py311-cu121, and execute the recipe to fine-tune DeepSeek-R1 with the QLoRA technique on an ephemeral cluster:

Merge the educated adapter with the bottom mannequin

Merge the educated adapters with the bottom mannequin so it may be used for inference:

The following part exhibits how one can run comparable steps on HyperPod to run your generative AI workloads.

Possibility B: High quality-tune utilizing SageMaker HyperPod with Slurm

To fine-tune the mannequin utilizing HyperPod, ensure that your cluster is up and prepared by following the stipulations talked about earlier. To entry the login/head node of the HyperPod Slurm cluster out of your improvement setting, observe the login directions at SSH into Cluster within the workshop.

Alternatively, you can too use AWS Techniques Supervisor and run a command corresponding to the next to start out the session. You will discover the cluster ID, occasion group identify, and occasion ID on the Amazon SageMaker console.

- Whenever you’re within the cluster’s login/head node, run the next instructions to arrange the setting. Run

sudo su - ubuntuto run the remaining instructions as the foundation consumer, until you could have a selected consumer ID to entry the cluster and your POSIX consumer is created by way of a lifecycle script on the cluster. Discuss with the multi-user setup for extra particulars.

- Create a squash file utilizing Enroot to run the job on the cluster. Enroot runtime presents GPU acceleration, rootless container help, and seamless integration with HPC environments, making it perfect for operating workflows securely.

- After you’ve created the squash file, replace the

recipes_collection/config.yamlfile with absolutely the path to the squash file (created within the previous step), and replace theinstance_typeif wanted. The ultimate config file ought to have the next parameters:

Additionally replace the file recipes_collection/cluster/slurm.yaml so as to add container_mounts pointing to the FSx for Lustre file system utilized in your cluster.

Observe these high-level steps to arrange, fine-tune, and consider the mannequin utilizing HyperPod recipes:

- Obtain the mannequin and convert weights to BF16

- High quality-tune the mannequin utilizing QLoRA

- Merge the educated mannequin adapter

- Consider the fine-tuned mannequin

Obtain the mannequin and convert weights to BF16

Obtain the DeepSeek-R1 mannequin from the HuggingFace hub and convert the mannequin weights from FP8 to BF16. You must convert this to make use of QLoRA for fine-tuning. Copy and execute the next bash script:

High quality-tune the mannequin utilizing QLoRA

Obtain the ready dataset that you simply uploaded to Amazon S3 into your FSx for Lustre quantity connected to the cluster.

- Enter the next instructions to obtain the recordsdata from Amazon S3:

- Replace the launcher script to fine-tune the DeepSeek-R1 671B mannequin. The launcher scripts function handy wrappers for executing the coaching script, predominant.py file, simplifying the method of fine-tuning and parameter adjustment. For fine-tuning the DeepSeek R1 671B mannequin, you’ll find the precise script at:

Earlier than operating the script, it is advisable to modify the placement of the coaching and validation recordsdata, replace the HuggingFace mannequin ID, and optionally the entry token for personal fashions and datasets. The script ought to seem like the next (replace recipes.coach.num_nodes when you’re utilizing a multi-node cluster):

You may view the recipe for this fine-tuning activity below recipes_collection/recipes/fine-tuning/deepseek/hf_deepseek_r1_671b_seq8k_gpu_qlora.yaml and override extra parameters as wanted.

- Submit the job by operating the launcher script:

Monitor the job utilizing Slurm instructions corresponding to squeue and scontrol present to view the standing of the job and the corresponding logs. The logs will be discovered within the outcomes folder within the launch listing. When the job is full, the mannequin adapters are saved within the EXP_DIR that you simply outlined within the launch. The construction of the listing ought to seem like this:

You may see the educated adapter weights are saved as a part of the checkpointing below ./checkpoints/peft_sharded/step_N. We’ll later use this to merge with the bottom mannequin.

Merge the educated mannequin adapter

Observe these steps:

- Run a job utilizing the

smdistributed-modelparallelenroot picture to merge the adapter with the bottom mannequin.

- Obtain the

merge_peft_checkpoint.pycode from sagemaker-hyperpod-training-adapter-for-nemo repository and retailer it in Amazon FSx. Modify the export variables within the following scripts accordingly to replicate the paths forSOURCE_DIR,ADAPTER_PATH,BASE_MODEL_BF16andMERGE_MODEL_PATH.

Consider the fine-tuned mannequin

Use the fundamental testing scripts supplied by DeekSeek to deploy the merged mannequin.

- Begin by cloning their repo:

- You must convert the merged mannequin to a selected format for operating inference. On this case, you want 4*P5 situations to deploy the mannequin as a result of the merged mannequin is in BF16. Enter the next command to transform the mannequin:

- When the conversion is full, use the next sbatch script to run the batch inference, making the next changes:

- Replace the

ckpt-pathto the transformed mannequin path from the earlier step. - Create a brand new

prompts.txtfile with every line containing a immediate. The job will use the prompts from this file and generate output.

- Replace the

Cleanup

To wash up your sources to keep away from incurring extra fees, observe these steps:

- Delete any unused SageMaker Studio sources.

- (Optionally available) Delete the SageMaker Studio area.

- Confirm that your coaching job isn’t operating anymore. To take action, in your SageMaker console, select Coaching and examine Coaching jobs.

- In case you created a HyperPod cluster, delete the cluster to cease incurring prices. In case you created the networking stack from the HyperPod workshop, delete the stack as nicely to scrub up the digital non-public cloud (VPC) sources and the FSx for Lustre quantity.

Conclusion

On this publish, we demonstrated find out how to fine-tune massive fashions corresponding to DeepSeek-R1 671B utilizing both SageMaker coaching jobs or SageMaker HyperPod with HyperPod recipes in just a few steps. This method minimizes the complexity of figuring out optimum distributed coaching configurations and offers a easy technique to correctly measurement your workloads with the most effective price-performance structure on AWS.

To start out utilizing SageMaker HyperPod recipes, go to our sagemaker-hyperpod-recipes GitHub repository for complete documentation and instance implementations. Our crew regularly expands our recipes primarily based on buyer suggestions and rising machine studying (ML) traits, ensuring you could have the required instruments for profitable AI mannequin coaching.

Concerning the Authors

Kanwaljit Khurmi is a Principal Worldwide Generative AI Options Architect at AWS. He collaborates with AWS product groups, engineering departments, and clients to supply steering and technical help, serving to them improve the worth of their hybrid machine studying options on AWS. Kanwaljit focuses on aiding clients with containerized functions and high-performance computing options.

Kanwaljit Khurmi is a Principal Worldwide Generative AI Options Architect at AWS. He collaborates with AWS product groups, engineering departments, and clients to supply steering and technical help, serving to them improve the worth of their hybrid machine studying options on AWS. Kanwaljit focuses on aiding clients with containerized functions and high-performance computing options.

Arun Kumar Lokanatha is a Senior ML Options Architect with the Amazon SageMaker crew. He focuses on massive language mannequin coaching workloads, serving to clients construct LLM workloads utilizing SageMaker HyperPod, SageMaker coaching jobs, and SageMaker distributed coaching. Outdoors of labor, he enjoys operating, mountain climbing, and cooking.

Arun Kumar Lokanatha is a Senior ML Options Architect with the Amazon SageMaker crew. He focuses on massive language mannequin coaching workloads, serving to clients construct LLM workloads utilizing SageMaker HyperPod, SageMaker coaching jobs, and SageMaker distributed coaching. Outdoors of labor, he enjoys operating, mountain climbing, and cooking.

Anoop Saha is a Sr GTM Specialist at Amazon Internet Companies (AWS) specializing in generative AI mannequin coaching and inference. He companions with high frontier mannequin builders, strategic clients, and AWS service groups to allow distributed coaching and inference at scale on AWS and lead joint GTM motions. Earlier than AWS, Anoop held a number of management roles at startups and enormous firms, primarily specializing in silicon and system structure of AI infrastructure.

Anoop Saha is a Sr GTM Specialist at Amazon Internet Companies (AWS) specializing in generative AI mannequin coaching and inference. He companions with high frontier mannequin builders, strategic clients, and AWS service groups to allow distributed coaching and inference at scale on AWS and lead joint GTM motions. Earlier than AWS, Anoop held a number of management roles at startups and enormous firms, primarily specializing in silicon and system structure of AI infrastructure.

Rohith Nadimpally is a Software program Growth Engineer engaged on AWS SageMaker, the place he accelerates large-scale AI/ML workflows. Earlier than becoming a member of Amazon, he graduated with Honors from Purdue College with a level in Laptop Science. Outdoors of labor, he enjoys enjoying tennis and watching motion pictures.

Rohith Nadimpally is a Software program Growth Engineer engaged on AWS SageMaker, the place he accelerates large-scale AI/ML workflows. Earlier than becoming a member of Amazon, he graduated with Honors from Purdue College with a level in Laptop Science. Outdoors of labor, he enjoys enjoying tennis and watching motion pictures.