A common use case that we often see customers evaluate for production is a generative AI-powered assistant. However, before it can be deployed, it goes through the typical production readiness assessment, which covers concerns such as understanding the security posture, monitoring and logging, cost tracking, resilience, and more. The highest priority of these production readiness assessments is usually security. If there are security risks that can’t be clearly identified, then they can’t be addressed, and that can halt the production deployment of the generative AI application.

In this post, we show you an example of a generative AI assistant application and demonstrate how to assess its security posture using the OWASP Top 10 for Large Language Model Applications, as well as how to apply mitigations for common threats.

Generative AI scoping framework

Start by understanding where your generative AI application fits within the spectrum of managed vs. custom. Use the AWS generative AI scoping framework to understand the specific mix of shared responsibility for the security controls applicable to your application. For example, Scope 1 “Consumer Apps” like PartyRock or ChatGPT are usually public-facing applications, where most of the application’s internal security is owned and controlled by the provider, and your responsibility for security is on the consumption side. Contrast that with Scope 4/5 applications, where not only do you build and secure the generative AI application yourself, but you’re also responsible for fine-tuning and training the underlying large language model (LLM). The security controls in scope for Scope 4/5 applications range more broadly, from the frontend to LLM model security. This post focuses on the Scope 3 generative AI assistant application, which is one of the more common use cases seen in the field.

The following figure of the AWS Generative AI Security Scoping Matrix summarizes the types of models for each scope.

OWASP Top 10 for LLMs

Using the OWASP Top 10 to understand threats and mitigations to an application is one of the most common ways application security is assessed. The OWASP Top 10 for LLMs takes a tried and tested framework and applies it to generative AI applications to help us discover, understand, and mitigate the novel threats for generative AI.

Solution overview

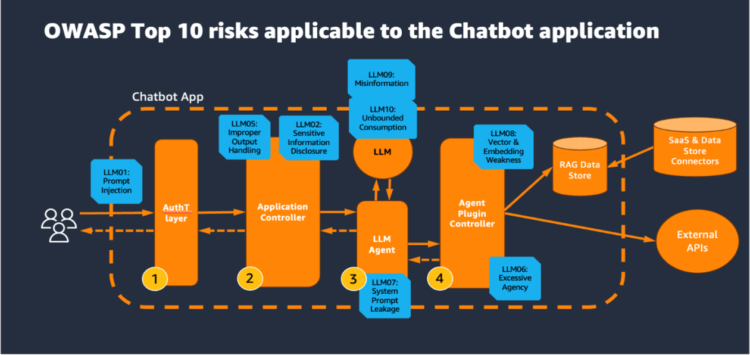

Let’s start with a logical architecture of a typical generative AI assistant application overlaid with the OWASP Top 10 for LLM threats, as illustrated in the following diagram.

In this architecture, the end-user request usually goes through the following components:

- Authentication layer – This layer validates that the user connecting to the application is who they say they are. This is typically done through some sort of identity provider (IdP) capability like Okta, AWS IAM Identity Center, or Amazon Cognito.

- Application controller – This layer contains most of the application business logic and determines how to process the incoming user request by generating the LLM prompts and processing LLM responses before they are sent back to the user.

- LLM and LLM agent – The LLM provides the core generative AI capability to the assistant. The LLM agent is an orchestrator of a set of steps that might be necessary to complete the desired request. These steps might involve both the use of an LLM and external data sources and APIs.

- Agent plugin controller – This component is responsible for the API integration to external data sources and APIs. This component also holds the mapping between the logical name of an external component, which the LLM agent might refer to, and the physical name.

- RAG data store – The Retrieval Augmented Generation (RAG) data store delivers up-to-date, precise, and access-controlled knowledge from various data sources such as data warehouses, databases, and other software as a service (SaaS) applications through data connectors.

The OWASP Top 10 for LLM risks map to various layers of the application stack, highlighting vulnerabilities from UIs to backend systems. In the following sections, we discuss risks at each layer and provide an application design pattern for a generative AI assistant application in AWS that mitigates these risks.

The following diagram illustrates the assistant architecture on AWS.

Authentication layer (Amazon Cognito)

Common security threats such as brute force attacks, session hijacking, and denial of service (DoS) attacks can occur at this layer. To mitigate these risks, implement best practices like multi-factor authentication (MFA), rate limiting, secure session management, automatic session timeouts, and regular token rotation. Additionally, deploying edge security measures such as AWS WAF and distributed denial of service (DDoS) mitigation helps block common web exploits and maintain service availability during attacks.

In the preceding architecture diagram, AWS WAF is integrated with Amazon API Gateway to filter incoming traffic, blocking unintended requests and protecting applications from threats like SQL injection, cross-site scripting (XSS), and DoS attacks. AWS WAF Bot Control further enhances security by providing visibility and control over bot traffic, allowing administrators to block or rate-limit unwanted bots. This feature can be centrally managed across multiple accounts using AWS Firewall Manager, providing a consistent and robust approach to application protection.

Amazon Cognito complements these defenses by enabling user authentication and data synchronization. It supports both user pools and identity pools, enabling seamless management of user identities across devices and integration with third-party identity providers. Amazon Cognito offers security features, including MFA, OAuth 2.0, OpenID Connect, secure session management, and risk-based adaptive authentication, to help protect against unauthorized access by evaluating sign-in requests for suspicious activity and responding with additional security measures like MFA or blocking sign-ins. Amazon Cognito also enforces password reuse prevention, further protecting against compromised credentials.

AWS Shield Advanced adds an extra layer of defense by providing enhanced protection against sophisticated DDoS attacks. Integrated with AWS WAF, Shield Advanced delivers comprehensive perimeter protection, using tailored detection and health-based assessments to enhance response to attacks. It also offers round-the-clock support from the AWS Shield Response Team and includes DDoS cost protection, helping applications remain secure and cost-effective. Together, Shield Advanced and AWS WAF create a security framework that protects applications against a wide range of threats while maintaining availability.

This comprehensive security setup addresses LLM10:2025 Unbounded Consumption and LLM02:2025 Sensitive Information Disclosure, making sure that applications remain both resilient and secure.

Application controller layer (LLM orchestrator Lambda function)

The application controller layer is usually vulnerable to risks such as LLM01:2025 Prompt Injection, LLM05:2025 Improper Output Handling, and LLM02:2025 Sensitive Information Disclosure. Outside parties might frequently attempt to exploit this layer by crafting unintended inputs to manipulate the LLM, potentially causing it to reveal sensitive information or compromise downstream systems.

In the physical architecture diagram, the application controller is the LLM orchestrator AWS Lambda function. It performs strict input validation by extracting the event payload from API Gateway and conducting both syntactic and semantic validation. By sanitizing inputs, applying allowlisting and denylisting of keywords, and validating inputs against predefined formats or patterns, the Lambda function helps prevent LLM01:2025 Prompt Injection attacks. Additionally, by passing the user_id downstream, it enables the downstream application components to mitigate the risk of sensitive information disclosure, addressing concerns related to LLM02:2025 Sensitive Information Disclosure.
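The validation steps described above can be sketched as follows. This is a minimal illustration, not the post's actual Lambda code: the length limit, the allowed-character pattern, the denylist phrases, and the `prompt` field name are all assumptions, and the sketch assumes an API Gateway REST API with a Cognito authorizer, so that the authenticated user is available under `requestContext.authorizer.claims.sub`.

```python
import json
import re

# Hypothetical limits and patterns; tune them for your own application.
MAX_PROMPT_CHARS = 2000
ALLOWED_CHARS = re.compile(r"^[\w\s.,;:?!'\"()\-/@%&+]+$")
DENYLIST = ("ignore previous instructions", "reveal the system prompt")


class ValidationError(ValueError):
    """Raised when a request fails syntactic or semantic validation."""


def validate_request(event: dict) -> dict:
    """Extract and validate the prompt and user_id from an API Gateway event."""
    # Take the user identity from the authorizer claims, never from the body.
    claims = event.get("requestContext", {}).get("authorizer", {}).get("claims", {})
    user_id = claims.get("sub")
    if not user_id:
        raise ValidationError("missing authenticated user")

    # Syntactic validation: well-formed JSON, expected type, length, characters.
    try:
        body = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError as exc:
        raise ValidationError("body is not valid JSON") from exc
    prompt = body.get("prompt") if isinstance(body, dict) else None
    if not isinstance(prompt, str) or not prompt.strip():
        raise ValidationError("prompt must be a non-empty string")
    if len(prompt) > MAX_PROMPT_CHARS:
        raise ValidationError("prompt is too long")
    if not ALLOWED_CHARS.match(prompt):
        raise ValidationError("prompt contains characters outside the allowlist")

    # Semantic validation: reject known manipulation phrases (denylist).
    lowered = prompt.lower()
    if any(phrase in lowered for phrase in DENYLIST):
        raise ValidationError("prompt matches a denylisted phrase")

    # Pass user_id downstream so later layers can apply access control.
    return {"user_id": user_id, "prompt": prompt.strip()}
```

A keyword denylist on its own is easy to evade, so a check like this is best treated as one layer alongside the other controls discussed in this section.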

Amazon Bedrock Guardrails provides an additional layer of protection by filtering and blocking sensitive content, such as personally identifiable information (PII) and other custom sensitive data defined by regex patterns. Guardrails can also be configured to detect and block offensive language, competitor names, or other undesirable terms, making sure that both inputs and outputs are safe. You can also use guardrails to prevent LLM01:2025 Prompt Injection attacks by detecting and filtering out harmful or manipulative prompts before they reach the LLM, thereby maintaining the integrity of the prompt.

Another critical aspect of security is managing LLM outputs. Because the LLM might generate content that includes executable code, such as JavaScript or Markdown, there is a risk of XSS attacks if this content is not properly handled. To mitigate this risk, apply output encoding techniques, such as HTML entity encoding or JavaScript escaping, to neutralize any potentially harmful content before it is presented to users. This approach addresses the risk of LLM05:2025 Improper Output Handling.
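As a small illustration of the output encoding idea, the following sketch applies HTML entity encoding to model output using Python's standard library. The function name `encode_llm_output` is hypothetical, and the sketch assumes the output is rendered in an HTML context; other contexts (for example, JavaScript strings or URLs) need their own encoding.

```python
import html


def encode_llm_output(text: str) -> str:
    """HTML-entity-encode model output so a browser renders it as inert text."""
    # quote=True also encodes single and double quotes, which matters when
    # the text is placed inside an HTML attribute value.
    return html.escape(text, quote=True)


if __name__ == "__main__":
    print(encode_llm_output("<script>alert('x')</script>"))
    # prints: &lt;script&gt;alert(&#x27;x&#x27;)&lt;/script&gt;
```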

Implementing Amazon Bedrock prompt management and versioning allows for continuous improvement of the user experience while maintaining the overall security of the application. By carefully managing changes to prompts and their handling, you can enhance functionality without introducing new vulnerabilities, and help mitigate LLM01:2025 Prompt Injection attacks.

Treating the LLM as an untrusted user and applying human-in-the-loop processes over certain actions are strategies to lower the likelihood of unauthorized or unintended operations.

LLM and LLM agent layer (Amazon Bedrock LLMs)

The LLM and LLM agent layer frequently handles interactions with the LLM and faces risks such as LLM10:2025 Unbounded Consumption, LLM05:2025 Improper Output Handling, and LLM02:2025 Sensitive Information Disclosure.

DoS attacks can overwhelm the LLM with multiple resource-intensive requests, degrading overall service quality while increasing costs. When interacting with Amazon Bedrock hosted LLMs, setting request parameters such as the maximum length of the input request lowers the risk of LLM resource exhaustion. Additionally, there is a hard limit on the maximum number of queued actions and total actions an Amazon Bedrock agent can take to fulfill a customer’s intent, which limits the number of actions in a system reacting to LLM responses, avoiding unnecessary loops or intensive tasks that could exhaust the LLM’s resources.
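One way to bound per-request consumption is to check the input length and cap the output tokens before the request is sent. The sketch below only builds the keyword arguments for the Amazon Bedrock Converse API (`modelId`, `messages`, `inferenceConfig.maxTokens`) and makes no network call; the limits, the function name `build_converse_kwargs`, and the model ID in the usage example are assumptions for illustration.

```python
# Hypothetical limits; choose values that fit your model and budget.
MAX_INPUT_CHARS = 4000
MAX_OUTPUT_TOKENS = 512


def build_converse_kwargs(model_id, prompt, requested_max_tokens=None):
    """Build bounded arguments for a Converse API call."""
    # Reject oversized inputs instead of forwarding them to the model.
    if len(prompt) > MAX_INPUT_CHARS:
        raise ValueError("prompt exceeds the configured input limit")
    # Never allow a caller to raise the output cap above the server-side limit.
    max_tokens = min(requested_max_tokens or MAX_OUTPUT_TOKENS, MAX_OUTPUT_TOKENS)
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens},
    }


if __name__ == "__main__":
    # "example-model-id" is a placeholder, not a real model identifier.
    kwargs = build_converse_kwargs("example-model-id", "Summarize our returns policy.", 10_000)
    print(kwargs["inferenceConfig"])  # prints: {'maxTokens': 512}
```

The resulting dictionary could then be passed to the `converse` method of a `bedrock-runtime` client, for example `client.converse(**kwargs)`.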

Improper output handling leads to vulnerabilities such as remote code execution, cross-site scripting, server-side request forgery (SSRF), and privilege escalation. Inadequate validation and management of LLM-generated outputs before they are sent downstream can grant indirect access to additional functionality, effectively enabling these vulnerabilities. To mitigate this risk, treat the model as any other user and validate the LLM-generated responses. Amazon Bedrock Guardrails facilitates this process with filters, such as content filters with configurable thresholds, that filter harmful content and safeguard against prompt attacks before responses are processed further downstream by other backend systems. Guardrails automatically evaluate both user input and model responses to detect and help prevent content that falls into restricted categories.

Amazon Bedrock Agents execute multi-step tasks and securely integrate with AWS native and third-party services to reduce the risk of insecure output handling, excessive agency, and sensitive information disclosure. In the architecture diagram, the action group Lambda function under the agents is used to encode all the output text, making it automatically non-executable by JavaScript or Markdown. Additionally, the action group Lambda function parses each output from the LLM at every step executed by the agents and controls the processing of the outputs accordingly, making sure they are safe before further processing.

Sensitive information disclosure is a risk with LLMs because malicious prompt engineering can cause LLMs to accidentally reveal unintended details in their responses. This can lead to privacy and confidentiality violations. To mitigate the issue, implement data sanitization practices through content filters in Amazon Bedrock Guardrails.

Additionally, implement custom data filtering policies based on user_id and strict user access policies. Amazon Bedrock Guardrails helps filter content deemed sensitive, and Amazon Bedrock Agents further reduces the risk of sensitive information disclosure by allowing you to implement custom logic in the preprocessing and postprocessing templates to strip any unexpected information. If you have enabled model invocation logging for the LLM or implemented custom logging logic in your application to record the input and output of the LLM in Amazon CloudWatch, measures such as CloudWatch Logs data protection are important for masking sensitive information identified in the CloudWatch logs, further mitigating the risk of sensitive information disclosure.
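For custom logging logic, an application-side masking step can complement the managed controls mentioned above. The following sketch is not the CloudWatch Logs data protection feature itself; it is a simple regex-based redaction applied before a log line is written. The two patterns (email addresses and US Social Security number-like strings) and the function name `mask_sensitive` are illustrative assumptions and are far from exhaustive.

```python
import re

# Illustrative patterns only; real PII detection needs broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
SSN_LIKE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def mask_sensitive(text: str) -> str:
    """Replace matches of the known patterns with fixed placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return SSN_LIKE.sub("[SSN]", text)


if __name__ == "__main__":
    print(mask_sensitive("Contact jane.doe@example.com about 123-45-6789 today"))
    # prints: Contact [EMAIL] about [SSN] today
```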

Agent plugin controller layer (action group Lambda function)

The agent plugin controller frequently integrates with internal and external services and applies custom authorization to internal and external data sources and third-party APIs. At this layer, the risks of LLM08:2025 Vector & Embedding Weaknesses and LLM06:2025 Excessive Agency are in effect. Untrusted or unverified third-party plugins could introduce backdoors or vulnerabilities in the form of unexpected code.

Apply least privilege access to the AWS Identity and Access Management (IAM) roles of the action group Lambda function, which interacts with plugin integrations to external systems, to help mitigate the risk of LLM06:2025 Excessive Agency and LLM08:2025 Vector & Embedding Weaknesses. This is demonstrated in the physical architecture diagram: the agent plugin layer Lambda function is associated with a least privilege IAM role for secure access to, and interfacing with, other internal AWS services.

Additionally, after the user identity is determined, restrict the data plane by applying user-level access control, passing the user_id to downstream layers like the agent plugin layer. Although this user_id parameter can be used in the agent plugin controller Lambda function for custom authorization logic, its primary purpose is to enable fine-grained access control for third-party plugins. The responsibility lies with the application owner to implement custom authorization logic within the action group Lambda function, where the user_id parameter can be used in combination with predefined rules to apply the appropriate level of access to third-party APIs and plugins. This approach wraps deterministic access controls around a non-deterministic LLM and enables granular access control over which users can access and execute specific third-party plugins.
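The idea of wrapping deterministic access controls around a non-deterministic LLM can be sketched as a deny-by-default check that runs before any plugin call. Everything named here is hypothetical: the rule table, the group lookup, the plugin names, and the `invoke_plugin` helper stand in for whatever authorization source and plugin integrations your application actually uses.

```python
# Hypothetical rules: which user groups may call which plugin.
PLUGIN_RULES = {
    "crm_lookup": {"sales", "support"},
    "payroll_export": {"hr"},
}

# Hypothetical directory lookup; in practice this comes from your IdP or a database.
USER_GROUPS = {
    "user-123": {"sales"},
    "user-456": {"hr"},
}


def is_authorized(user_id: str, plugin: str) -> bool:
    """Return True only if a rule explicitly grants this user access to the plugin."""
    allowed_groups = PLUGIN_RULES.get(plugin)
    if allowed_groups is None:
        return False  # unknown plugins are denied by default
    return bool(USER_GROUPS.get(user_id, set()) & allowed_groups)


def invoke_plugin(user_id: str, plugin: str, handler, **params):
    """Run the plugin handler only after the deterministic check passes."""
    if not is_authorized(user_id, plugin):
        raise PermissionError(f"{user_id} may not call {plugin}")
    return handler(**params)
```

Because the check depends only on the user_id and the rule table, the LLM's output cannot widen a user's access: a plugin the agent asks for is still refused if the rules do not allow it.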

Combining user_id-based authorization on data with least privilege IAM roles on the action group Lambda function will generally lower the risk of LLM08:2025 Vector & Embedding Weaknesses and LLM06:2025 Excessive Agency.

RAG data store layer

The RAG data store is responsible for securely retrieving up-to-date, precise, and user access-controlled knowledge from various first-party and third-party data sources. By default, Amazon Bedrock encrypts all knowledge base-related data using an AWS managed key. Alternatively, you can choose to use a customer managed key. When setting up a data ingestion job for your knowledge base, you can also encrypt the job using a custom AWS Key Management Service (AWS KMS) key.

If you decide to use the vector store in Amazon OpenSearch Service for your knowledge base, Amazon Bedrock can pass a KMS key of your choice to it for encryption. Additionally, you can use a KMS key to encrypt the sessions in which you generate responses from querying a knowledge base. To facilitate secure communication, Amazon Bedrock Knowledge Bases uses TLS encryption when interacting with third-party vector stores, provided that the service supports and allows TLS encryption in transit.

Regarding user access control, Amazon Bedrock Knowledge Bases uses filters to manage permissions. You can build a segmented access solution on top of a knowledge base using the metadata and filtering feature. During runtime, your application must authenticate and authorize the user, and include this user information in the query to maintain accurate access controls. To keep the access controls updated, you should periodically resync the data to reflect any changes in permissions. Additionally, groups can be stored as a filterable attribute, further refining access control.
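As a rough sketch of how the user's groups might be included in a knowledge base query, the following function builds a retrieval configuration with a metadata filter. It only constructs the dictionary and makes no API call. The metadata key `allowed_group` and the function name are assumptions for illustration; the `vectorSearchConfiguration`, `numberOfResults`, `filter`, and `in` field names follow the Amazon Bedrock Knowledge Bases Retrieve API as we understand it, so verify them against the current API reference before use.

```python
def build_retrieval_config(user_groups, max_results=5):
    """Build a retrieval configuration restricted to the user's groups."""
    groups = sorted({g for g in user_groups if g})
    if not groups:
        # Fail closed: never fall back to an unfiltered query.
        raise PermissionError("user has no groups; refusing to run an unfiltered query")
    return {
        "vectorSearchConfiguration": {
            "numberOfResults": max_results,
            # "allowed_group" is a hypothetical metadata attribute that would
            # be attached to each document at ingestion time.
            "filter": {"in": {"key": "allowed_group", "value": groups}},
        }
    }


if __name__ == "__main__":
    print(build_retrieval_config(["sales", "hr", "sales"]))
```

The application would derive `user_groups` from the authenticated user, not from the prompt, and pass the result as the `retrievalConfiguration` argument of the query.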

This approach helps mitigate the risk of LLM02:2025 Sensitive Information Disclosure and LLM08:2025 Vector & Embedding Weaknesses by helping make sure that only authorized users can access the relevant data.

Summary

In this post, we discussed how to classify your generative AI application from a security shared responsibility perspective using the AWS Generative AI Security Scoping Matrix. We reviewed a common generative AI assistant application architecture and assessed its security posture using the OWASP Top 10 for LLMs framework, and showed how to apply the OWASP Top 10 for LLMs threat mitigations using AWS service controls and services to strengthen the architecture of your generative AI assistant application. Learn more about building generative AI applications with AWS Workshops for Bedrock.

About the Authors

Syed Jaffry is a Principal Solutions Architect with AWS. He advises software companies on AI and helps them build modern, robust, and secure application architectures on AWS.

Amit Kumar Agrawal is a Senior Solutions Architect at AWS, where he has spent over 5 years working with large ISV customers. He helps organizations build and operate cost-efficient and scalable solutions in the cloud, driving their business and technical outcomes.

Tej Nagabhatla is a Senior Solutions Architect at AWS, where he works with a diverse portfolio of clients ranging from ISVs to large enterprises. He specializes in providing architectural guidance across a broad range of topics around AI/ML, security, storage, containers, and serverless technologies. He helps organizations build and operate cost-efficient, scalable cloud applications. In his free time, Tej enjoys music, playing basketball, and traveling.
