Amazon SageMaker HyperPod is designed to support large-scale machine learning (ML) operations, providing a robust environment for training foundation models (FMs) over extended periods. Multiple users, such as ML researchers, software engineers, data scientists, and cluster administrators, can work simultaneously on the same cluster, each managing their own jobs and files without interfering with others.

When using HyperPod, you can use familiar orchestration options such as Slurm or Amazon Elastic Kubernetes Service (Amazon EKS). This blog post specifically applies to HyperPod clusters that use Slurm as the orchestrator. In these clusters, cluster administrators can add login nodes to facilitate user access. These login nodes serve as the entry point through which users interact with the cluster’s computational resources. By using login nodes, users can separate their interactive activities, such as browsing files, submitting jobs, and compiling code, from the cluster’s head node. This separation helps prevent any single user’s activities from affecting the overall performance of the cluster.

However, although HyperPod provides the capability to use login nodes, it doesn’t provide an integrated mechanism for load balancing user activity across these nodes. As a result, users manually choose a login node, which can lead to imbalances where some nodes are overutilized while others remain underutilized. This not only affects the efficiency of resource utilization but can also lead to uneven performance for different users.

In this post, we explore a solution for implementing load balancing across login nodes in Slurm-based HyperPod clusters. By distributing user connections across all available login nodes, this approach provides more consistent performance, better resource utilization, and a smoother experience for all users. We guide you through the setup process, providing practical steps to achieve effective load balancing in your HyperPod clusters.

Solution overview

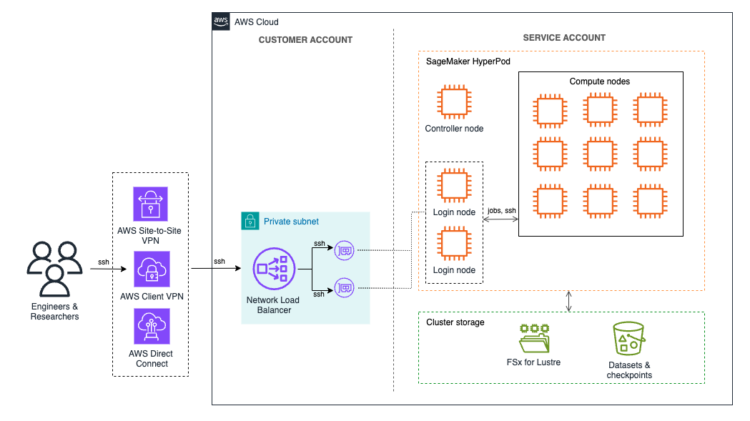

In HyperPod, login nodes serve as access points for users to interact with the cluster’s computational resources, so they can manage their tasks without impacting the head node. Although the default method for accessing these login nodes is through AWS Systems Manager, there are cases where direct Secure Shell (SSH) access is more suitable. SSH provides a more traditional and flexible way of managing interactions, especially for users who require specific networking configurations or need features such as TCP load balancing, which Systems Manager doesn’t support.

Because HyperPod is typically deployed in a virtual private cloud (VPC) using private subnets, direct SSH access to the login nodes requires secure network connectivity into the private subnet. There are several options to achieve this:

- AWS Site-to-Site VPN – Establishes a secure connection between your on-premises network and your VPC, suitable for enterprise environments

- AWS Direct Connect – Offers a dedicated network connection for high-throughput and low-latency needs

- AWS Client VPN – A software-based solution that remote users can use to securely connect to the VPC, providing flexible and easy access to the login nodes

This post demonstrates how to use AWS Client VPN to establish a secure connection to the VPC. We set up a Network Load Balancer (NLB) within the private subnet to distribute SSH connections across the available login nodes, and use the VPN connection to reach the NLB in the VPC. Because the NLB spreads new connections across all registered login nodes, no single node has to absorb every user session, which helps prevent bottlenecks and improves overall performance and resource utilization.
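
Note that the NLB does not look at how busy each login node is. For TCP listeners, the Elastic Load Balancing documentation describes target selection as a flow hash over the protocol, source and destination IP addresses and ports, and the TCP sequence number, so each new SSH connection is assigned to one node and stays there for its lifetime. The following toy model illustrates only that per-connection behavior; it is not the NLB’s actual algorithm, and the login node IPs and client flows are hypothetical.

```python
import hashlib
from collections import Counter


def pick_target(flow, targets):
    """Deterministically map one TCP flow to one target by hashing its tuple.

    Toy model only: the real NLB flow hash is internal to AWS.
    """
    key = "|".join(str(part) for part in flow).encode()
    digest = hashlib.sha256(key).digest()
    return targets[int.from_bytes(digest[:8], "big") % len(targets)]


# Hypothetical login node IPs registered as NLB targets.
login_nodes = ["10.0.1.10", "10.0.1.11", "10.0.1.12"]

# Hypothetical SSH flows: (protocol, source IP, source port, destination IP, destination port).
flows = [("tcp", "10.100.0.5", 50000 + i, "10.0.1.200", 22) for i in range(300)]

# Count how many connections land on each login node.
connections_per_node = Counter(pick_target(flow, login_nodes) for flow in flows)
```

Because the assignment is per connection, one user who opens several sessions can land on different login nodes, and a long-running session is never moved. This is also why the login nodes need identical SSH host keys.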

For environments where VPN connectivity might not be feasible, an alternative is to deploy the NLB in a public subnet to allow direct SSH access from the internet. In this configuration, you can secure the NLB by restricting access through a security group that allows SSH traffic only from specified, trusted IP addresses. As a result, authorized users can connect directly to the login nodes while you maintain some level of control over access to the cluster. However, this public-facing method is outside the scope of this post and isn’t recommended for production environments, because exposing SSH access to the internet can introduce additional security risks.

The following diagram provides an overview of the solution architecture.

Prerequisites

Before following the steps in this post, make sure you have the foundational components of a HyperPod cluster setup in place. This includes the core infrastructure for the HyperPod cluster and the network configuration required for secure access. Specifically, you need:

- HyperPod cluster – This post assumes you already have a HyperPod cluster deployed. If not, refer to Getting started with SageMaker HyperPod and the HyperPod workshop for guidance on creating and configuring your cluster.

- VPC, subnets, and security group – Your HyperPod cluster should reside within a VPC with associated subnets. To deploy a new VPC and subnets, follow the instructions in the Own Account section of the HyperPod workshop. This process includes deploying an AWS CloudFormation stack to create essential resources such as the VPC, subnets, security group, and an Amazon FSx for Lustre volume for shared storage.

Setting up login nodes for cluster access

Login nodes are dedicated access points that users can use to interact with the HyperPod cluster’s computational resources without impacting the head node. By connecting through login nodes, users can browse files, submit jobs, and compile code independently, promoting a more organized and efficient use of the cluster’s resources.

If you haven’t set up login nodes yet, refer to the Login Node section of the HyperPod workshop, which provides detailed instructions on adding these nodes to your cluster configuration.

Each login node in a HyperPod cluster has an associated network interface within your VPC. A network interface, also known as an elastic network interface, represents a virtual network card that connects each login node to your VPC, allowing it to communicate over the network. These interfaces have assigned IPv4 addresses, which are essential for routing traffic from the NLB to the login nodes.

To proceed with the load balancer setup, you need to obtain the IPv4 addresses of each login node. You can obtain these addresses from the AWS Management Console or by running a command on your HyperPod cluster’s head node.

Using the AWS Management Console

To retrieve the IPv4 addresses of the login nodes using the AWS Management Console, follow these steps:

- On the Amazon EC2 console, choose Network interfaces in the navigation pane

- In the Search bar, select VPC ID = (Equals) and choose the ID of the VPC containing the HyperPod cluster

- In the Search bar, select Description : (Contains) and enter the name of the instance group that includes your login nodes (typically, this is login-group)

For each login node, you can find an entry in the list, as shown in the following screenshot. Note down the IPv4 addresses of all login nodes in your cluster.
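
The same lookup can be scripted. The following Python sketch calls `aws ec2 describe-network-interfaces` with a `vpc-id` filter and then keeps the interfaces whose description contains the instance group name, mirroring the two console filters above. It assumes the AWS CLI is installed and configured with permission to describe network interfaces; `login-group` is only the typical group name, and the function names are illustrative.

```python
import ipaddress
import json
import subprocess


def login_node_ips(response, vpc_id, group_name="login-group"):
    """Extract login node private IPv4 addresses from a
    describe-network-interfaces JSON response."""
    ips = []
    for eni in response.get("NetworkInterfaces", []):
        # Same two filters as in the console: VPC ID equals, Description contains.
        if eni.get("VpcId") == vpc_id and group_name in eni.get("Description", ""):
            ips.append(eni["PrivateIpAddress"])
    # Sort numerically rather than as strings (10.0.1.9 before 10.0.1.10).
    return sorted(ips, key=ipaddress.ip_address)


def describe_network_interfaces(vpc_id):
    """Run the AWS CLI for one VPC and return its parsed JSON output."""
    cmd = [
        "aws", "ec2", "describe-network-interfaces",
        "--filters", f"Name=vpc-id,Values={vpc_id}",
        "--output", "json",
    ]
    result = subprocess.run(cmd, check=True, capture_output=True, text=True)
    return json.loads(result.stdout)
```

For example, with a placeholder VPC ID: `login_node_ips(describe_network_interfaces("vpc-0123456789abcdef0"), "vpc-0123456789abcdef0")`.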

Using the HyperPod head node

Alternatively, you can retrieve the IPv4 addresses by running a command on your HyperPod cluster’s head node.
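
As a sketch of this approach, the following Python reads a cluster layout file on the head node and returns the IP addresses of the login instance group. The file path `/opt/ml/config/resource_config.json` and the keys `InstanceGroups`, `Name`, `Instances`, and `CustomerIpAddress` are assumptions about the HyperPod lifecycle configuration, not something this post documents, so inspect the file on your own cluster before relying on it. Reading it may require `sudo`.

```python
import json
from pathlib import Path

# Assumption: location of the HyperPod cluster layout file on Slurm nodes.
RESOURCE_CONFIG = Path("/opt/ml/config/resource_config.json")


def ips_from_resource_config(config, group_name="login-group"):
    """Return the IP addresses of all instances in the named instance group.

    Assumed layout:
    {"InstanceGroups": [{"Name": ..., "Instances": [{"CustomerIpAddress": ...}]}]}
    """
    ips = []
    for group in config.get("InstanceGroups", []):
        if group.get("Name") != group_name:
            continue
        for instance in group.get("Instances", []):
            address = instance.get("CustomerIpAddress")
            if address:
                ips.append(address)
    return ips


def load_resource_config(path=RESOURCE_CONFIG):
    """Parse the JSON layout file (may need elevated permissions to read)."""
    with open(path) as handle:
        return json.load(handle)
```

On the head node, `ips_from_resource_config(load_resource_config())` would then list the login node addresses, if the file matches the assumed layout.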

Create a Network Load Balancer

The next step is to create an NLB to manage traffic across your cluster’s login nodes.

For the NLB deployment, you need the IPv4 addresses of the login nodes collected earlier and the appropriate security group configuration. If you deployed your cluster using the HyperPod workshop instructions, a security group that enables communication between all cluster nodes should already be in place.

This security group can be applied to the load balancer, as demonstrated in the following instructions. Alternatively, you can create a dedicated security group that grants access specifically to the login nodes.
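
If you create a dedicated security group, the rule that matters for this setup is inbound TCP on port 22. A minimal sketch that builds the corresponding `aws ec2 authorize-security-group-ingress` call, either from a CIDR range (for example, the HyperPod VPC range) or from another security group. The group IDs in the tests are placeholders, and which sources to allow is your decision. When the NLB has a security group, the login nodes’ security group also needs to accept port 22 from it, which the `source_group` form expresses.

```python
import ipaddress


def ssh_ingress_command(group_id, source_cidr=None, source_group=None):
    """Build an argv for `aws ec2 authorize-security-group-ingress` opening TCP port 22.

    Exactly one source must be given: a CIDR block or another security group ID.
    """
    if (source_cidr is None) == (source_group is None):
        raise ValueError("pass exactly one of source_cidr or source_group")
    cmd = [
        "aws", "ec2", "authorize-security-group-ingress",
        "--group-id", group_id,
        "--protocol", "tcp",
        "--port", "22",
    ]
    if source_cidr is not None:
        # ip_network raises ValueError for malformed CIDRs or set host bits.
        cmd += ["--cidr", str(ipaddress.ip_network(source_cidr))]
    else:
        cmd += ["--source-group", source_group]
    return cmd
```

Run the result with `subprocess.run(cmd, check=True)` once the IDs are filled in.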

Create target group

First, we create the target group that the NLB will use.

- On the Amazon EC2 console, choose Target groups in the navigation pane

- Choose Create target group

- Create a target group with the following parameters:

- For Choose a target type, choose IP addresses

- For Target group name, enter smhp-login-node-tg

- For Protocol : Port, choose TCP and enter 22

- For IP address type, choose IPv4

- For VPC, choose SageMaker HyperPod VPC (which was created with the CloudFormation template)

- For Health check protocol, choose TCP

- Choose Next, as shown in the following screenshot

- In the Register targets section, register the login node IP addresses as the targets

- For Ports, enter 22 and choose Include as pending below, as shown in the following screenshot

- The login node IPs will appear as targets with Pending health status. Choose Create target group, as shown in the following screenshot
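
For repeatable setups, the console steps above map onto two AWS CLI calls: `aws elbv2 create-target-group` and `aws elbv2 register-targets`. The sketch below builds their argument lists with the same settings (IP targets, TCP port 22, IPv4, TCP health checks); the VPC ID, ARN, and IP addresses in the usage note are placeholders.

```python
import subprocess


def create_target_group_command(vpc_id, name="smhp-login-node-tg"):
    """argv mirroring the console settings: IP targets, TCP/22, IPv4, TCP health checks."""
    return [
        "aws", "elbv2", "create-target-group",
        "--name", name,
        "--target-type", "ip",
        "--protocol", "TCP",
        "--port", "22",
        "--ip-address-type", "ipv4",
        "--vpc-id", vpc_id,
        "--health-check-protocol", "TCP",
        "--query", "TargetGroups[0].TargetGroupArn",
        "--output", "text",
    ]


def register_targets_command(target_group_arn, login_node_ips):
    """argv registering each login node IP on port 22."""
    targets = [f"Id={ip},Port=22" for ip in login_node_ips]
    return [
        "aws", "elbv2", "register-targets",
        "--target-group-arn", target_group_arn,
        "--targets", *targets,
    ]


def run(cmd):
    """Run an AWS CLI argv and return its trimmed standard output."""
    return subprocess.run(cmd, check=True, capture_output=True, text=True).stdout.strip()
```

Usage: `tg_arn = run(create_target_group_command("vpc-0123456789abcdef0"))`, then `run(register_targets_command(tg_arn, ["10.0.1.10", "10.0.1.11"]))` with the login node addresses you collected.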

Create load balancer

To create the load balancer, follow these steps:

- On the Amazon EC2 console, choose Load Balancers in the navigation pane

- Choose Create load balancer

- Choose Network Load Balancer and choose Create, as shown in the following screenshot

- Provide a name (for example, smhp-login-node-lb) and choose Internal as Scheme

- For network mapping, select the VPC that contains your HyperPod cluster and an associated private subnet, as shown in the following screenshot

- Select a security group that allows access on port 22 to the login nodes. If you deployed your cluster using the HyperPod workshop instructions, you can use the security group from that deployment.

- Select the target group that you created earlier and choose TCP as Protocol and 22 as Port, as shown in the following screenshot

- Choose Create load balancer

After the load balancer has been created, you can find its DNS name on the load balancer’s details page, as shown in the following screenshot.
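
The console steps above correspond to `aws elbv2 create-load-balancer`, `aws elbv2 create-listener`, and `aws elbv2 describe-load-balancers` for the DNS name. This sketch only builds the argument lists; subnet, security group, and ARN values are placeholders. Security groups are attached at creation time because, as we understand the NLB documentation, an NLB created without security groups cannot have them added later.

```python
def create_nlb_command(subnet_ids, security_group_ids, name="smhp-login-node-lb"):
    """argv for an internal NLB in the given private subnet(s) with security groups attached."""
    return [
        "aws", "elbv2", "create-load-balancer",
        "--name", name,
        "--type", "network",
        "--scheme", "internal",
        "--subnets", *subnet_ids,
        "--security-groups", *security_group_ids,
        "--query", "LoadBalancers[0].LoadBalancerArn",
        "--output", "text",
    ]


def create_listener_command(load_balancer_arn, target_group_arn):
    """argv for a TCP/22 listener that forwards to the login node target group."""
    return [
        "aws", "elbv2", "create-listener",
        "--load-balancer-arn", load_balancer_arn,
        "--protocol", "TCP",
        "--port", "22",
        "--default-actions", f"Type=forward,TargetGroupArn={target_group_arn}",
    ]


def dns_name_command(name="smhp-login-node-lb"):
    """argv that prints the NLB's DNS name, which users pass to ssh."""
    return [
        "aws", "elbv2", "describe-load-balancers",
        "--names", name,
        "--query", "LoadBalancers[0].DNSName",
        "--output", "text",
    ]
```

Run each list with `subprocess.run(cmd, check=True, capture_output=True, text=True)` and feed the ARN printed by the first call into the second.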

Making sure host keys are consistent across login nodes

When using multiple login nodes in a load-balanced environment, it’s crucial to maintain consistent SSH host keys across all nodes. SSH host keys are unique identifiers that each server uses to prove its identity to connecting clients. Because users always connect to the same NLB DNS name, their SSH client records a single host key for that name in its known_hosts file. If each login node has a different host key, users will encounter “WARNING: REMOTE HOST IDENTIFICATION HAS CHANGED!” messages whenever the load balancer routes them to a different node, causing confusion and potentially leading users to question the security of the connection.
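
To check whether the login nodes currently present the same host key, you can compare fingerprints. The SHA256 fingerprint that OpenSSH prints is the SHA-256 digest of the base64-decoded key blob, re-encoded as base64 without padding. This minimal sketch computes it from the contents of a `.pub` file such as `/etc/ssh/ssh_host_ed25519_key.pub` (format `<key-type> <base64-blob> [comment]`); how you collect those lines from each node is up to you, and the function names are illustrative.

```python
import base64
import hashlib


def host_key_fingerprint(pub_line):
    """Return the OpenSSH-style SHA256 fingerprint of a public key line."""
    fields = pub_line.split()
    if len(fields) < 2:
        raise ValueError("expected '<key-type> <base64-blob> [comment]'")
    blob = base64.b64decode(fields[1], validate=True)
    digest = hashlib.sha256(blob).digest()
    return "SHA256:" + base64.b64encode(digest).decode().rstrip("=")


def keys_consistent(pub_lines_by_node):
    """True if every node reports the same fingerprint for one key type.

    Comments (the third field) are ignored, so 'root@login-1' vs 'root@login-2' is fine.
    """
    fingerprints = {host_key_fingerprint(line) for line in pub_lines_by_node.values()}
    return len(fingerprints) == 1
```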

To avoid these warnings, configure the same SSH host keys on all login nodes in the load balancing rotation. This setup makes sure that users won’t receive host key mismatch alerts when the load balancer routes them to a different node.

You can run a script on the cluster’s head node that copies the SSH host keys from the first login node to the other login nodes in your HyperPod cluster.
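
A minimal sketch of such a script, under several assumptions: the head node can SSH non-interactively into every login node as a user with passwordless `sudo`, the host keys live at `/etc/ssh/ssh_host_*`, and the SSH daemon’s systemd unit is named `ssh` (the Ubuntu name; pass a different `service` otherwise). It streams a tar archive of the first node’s keys into each other node over SSH and restarts the daemon there. The function names and node addresses are illustrative. After it runs, the head node’s own known_hosts entries for the target nodes will no longer match.

```python
import subprocess

# Assumption: standard OpenSSH layout; root's shell on the source node expands the glob.
READ_KEYS = "sudo sh -c 'cd /etc/ssh && tar -cf - ssh_host_*'"


def sync_plan(login_nodes, service="ssh"):
    """Use the first login node as the source; return (target, read_cmd, write_cmd) per other node."""
    if len(login_nodes) < 2:
        raise ValueError("need at least two login nodes")
    source, *targets = login_nodes
    write_keys = f"sudo tar -C /etc/ssh -xpf - && sudo systemctl restart {service}"
    return [(target, ["ssh", source, READ_KEYS], ["ssh", target, write_keys]) for target in targets]


def sync_host_keys(login_nodes, service="ssh"):
    """Pipe the source node's host keys into each target node over SSH."""
    for target, read_cmd, write_cmd in sync_plan(login_nodes, service):
        reader = subprocess.Popen(read_cmd, stdin=subprocess.DEVNULL, stdout=subprocess.PIPE)
        writer = subprocess.Popen(write_cmd, stdin=reader.stdout)
        reader.stdout.close()  # so the reader gets SIGPIPE if the writer exits early
        writer_rc, reader_rc = writer.wait(), reader.wait()
        if writer_rc != 0 or reader_rc != 0:
            raise RuntimeError(f"copying host keys to {target} failed")
```

For example, `sync_host_keys(["10.0.1.10", "10.0.1.11", "10.0.1.12"])` would copy the keys of `10.0.1.10` to the other two nodes.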

Create AWS Client VPN endpoint

Because the NLB has been created with the Internal scheme, it’s only accessible from within the HyperPod VPC. To access the VPC and send requests to the NLB, we use AWS Client VPN in this post.

AWS Client VPN is a managed client-based VPN service that enables secure access to your AWS resources and to resources in your on-premises network.

We set up an AWS Client VPN endpoint that provides clients with access to the HyperPod VPC and uses mutual authentication. With mutual authentication, Client VPN uses certificates to perform authentication between clients and the Client VPN endpoint.

To deploy a Client VPN endpoint with mutual authentication, you can follow the steps outlined in Get started with AWS Client VPN. When configuring Client VPN to access the HyperPod VPC and the login nodes, keep the following adaptations to those steps in mind:

- Step 2 (create a Client VPN endpoint) – By default, all client traffic is routed through the Client VPN tunnel. To allow internet access without routing that traffic through the VPN, you can enable the option Enable split-tunnel when creating the endpoint. When this option is enabled, only traffic destined for networks matching a route in the Client VPN endpoint route table is routed through the VPN tunnel. For more details, refer to Split-tunnel on Client VPN endpoints.

- Step 3 (target network associations) – Select the VPC and private subnet used by your HyperPod cluster, which contains the cluster login nodes.

- Step 4 (authorization rules) – Choose the Classless Inter-Domain Routing (CIDR) range associated with the HyperPod VPC. If you followed the HyperPod workshop instructions, the CIDR range is 10.0.0.0/16.

- Step 6 (security groups) – Select the security group that you previously used when creating the NLB.
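
These adaptations can also be expressed as AWS CLI calls. This sketch is an outline, not a replacement for the Get started guide: it assumes the server and client root certificates are already imported into AWS Certificate Manager as that guide describes, and all IDs, ARNs, and the client range are placeholders. It also checks two constraints on the client CIDR that, as we understand the Client VPN documentation, apply: a prefix length between /12 and /22, and no overlap with the VPC range.

```python
import ipaddress


def validate_client_cidr(client_cidr, vpc_cidr):
    """Return the normalized client CIDR, or raise ValueError if it is unusable."""
    client = ipaddress.ip_network(client_cidr)
    vpc = ipaddress.ip_network(vpc_cidr)
    if not 12 <= client.prefixlen <= 22:
        raise ValueError("client CIDR prefix must be between /12 and /22")
    if client.overlaps(vpc):
        raise ValueError("client CIDR must not overlap the VPC CIDR")
    return str(client)


def create_endpoint_command(client_cidr, vpc_cidr, server_cert_arn,
                            client_root_cert_arn, vpc_id, security_group_id):
    """argv for a mutual-TLS, split-tunnel Client VPN endpoint (step 2 and step 6)."""
    auth = ("Type=certificate-authentication,"
            f"MutualAuthentication={{ClientRootCertificateChainArn={client_root_cert_arn}}}")
    return [
        "aws", "ec2", "create-client-vpn-endpoint",
        "--client-cidr-block", validate_client_cidr(client_cidr, vpc_cidr),
        "--server-certificate-arn", server_cert_arn,
        "--authentication-options", auth,
        "--connection-log-options", "Enabled=false",
        "--split-tunnel",
        "--vpc-id", vpc_id,
        "--security-group-ids", security_group_id,
        "--query", "ClientVpnEndpointId",
        "--output", "text",
    ]


def associate_and_authorize_commands(endpoint_id, subnet_id, vpc_cidr="10.0.0.0/16"):
    """argv lists for step 3 (associate the private subnet) and step 4 (authorize the VPC CIDR)."""
    return [
        ["aws", "ec2", "associate-client-vpn-target-network",
         "--client-vpn-endpoint-id", endpoint_id, "--subnet-id", subnet_id],
        ["aws", "ec2", "authorize-client-vpn-ingress",
         "--client-vpn-endpoint-id", endpoint_id,
         "--target-network-cidr", vpc_cidr, "--authorize-all-groups"],
    ]
```

Connection logging is disabled here only to keep the sketch short; enabling it with a CloudWatch Logs group is usually preferable.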

Connecting to the login nodes

After AWS Client VPN is configured, clients can establish a VPN connection to the HyperPod VPC. With the VPN connection in place, clients can use SSH to connect to the NLB, which routes them to one of the login nodes.

ssh -i /path/to/your/private-key.pem user@<nlb-dns-name>

To allow SSH access to the login nodes, you must create user accounts on the cluster and add their public keys to the authorized_keys file on each login node (or on all nodes, if necessary). For detailed instructions on managing multi-user access, refer to the Multi-User section of the HyperPod workshop.
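
Adding a key is easy to get subtly wrong: duplicate lines, a missing trailing newline that merges two keys, or permissions that OpenSSH’s `StrictModes` check rejects. A minimal sketch of the per-user step, assuming it runs as the user who owns the home directory (if you run it as root for another user, you also need to change ownership, which this sketch does not do). The function name and arguments are illustrative.

```python
import os
from pathlib import Path


def add_authorized_key(home, public_key):
    """Append public_key to <home>/.ssh/authorized_keys unless it is already there.

    Returns True if the key was added, False if it was already present.
    """
    key = public_key.strip()
    if len(key.split()) < 2:
        raise ValueError("expected '<key-type> <base64-blob> [comment]'")
    ssh_dir = Path(home) / ".ssh"
    ssh_dir.mkdir(mode=0o700, parents=True, exist_ok=True)
    os.chmod(ssh_dir, 0o700)  # mkdir's mode is filtered by the umask, so set it explicitly
    auth_file = ssh_dir / "authorized_keys"
    content = auth_file.read_text() if auth_file.exists() else ""
    if key in (line.strip() for line in content.splitlines()):
        return False
    # Keep one key per line even if the existing file lacks a trailing newline.
    separator = "" if not content or content.endswith("\n") else "\n"
    with open(auth_file, "a") as handle:
        handle.write(separator + key + "\n")
    os.chmod(auth_file, 0o600)
    return True
```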

In addition to using AWS Client VPN, you can also access the NLB from other AWS services, such as Amazon Elastic Compute Cloud (Amazon EC2) instances, if they meet the following requirements:

- VPC connectivity – The EC2 instances must be either in the same VPC as the NLB or able to access the HyperPod VPC through a peering connection or a similar network setup.

- Security group configuration – The EC2 instance’s security group must allow outbound connections on port 22 to the NLB security group. Likewise, the NLB security group should be configured to accept inbound SSH traffic on port 22 from the EC2 instance’s security group.
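
Before debugging user accounts, it can help to confirm plain TCP reachability of the NLB on port 22 from wherever users connect, whether that is a VPN-connected laptop or an EC2 instance meeting the requirements above. This small sketch opens a TCP connection and reads the identification line (`SSH-protoversion-softwareversion`) that, per RFC 4253, an SSH server sends; the server may send other lines first, so those are skipped. A returned banner shows that the NLB forwarded the connection to a responding login node; it does not test authentication. The function name is illustrative.

```python
import socket


def read_ssh_banner(host, port=22, timeout=5.0):
    """Connect to host:port and return the server's SSH identification line."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        buffer = b""
        while True:
            chunk = sock.recv(256)
            if not chunk:
                raise ConnectionError("connection closed before an SSH banner arrived")
            buffer += chunk
            # Examine every complete line received so far.
            while b"\n" in buffer:
                line, buffer = buffer.split(b"\n", 1)
                text = line.rstrip(b"\r").decode("utf-8", "replace")
                if text.startswith("SSH-"):
                    return text
            if len(buffer) > 8192:
                raise ValueError("no SSH banner found in the first 8 KiB")
```

For example, `read_ssh_banner("<nlb-dns-name>")`, substituting the DNS name from the load balancer’s details page.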

Clean up

To remove the deployed resources, clean them up in the following order:

- Delete the Client VPN endpoint

- Delete the Network Load Balancer

- Delete the target group associated with the load balancer

If you also want to delete the HyperPod cluster, follow these additional steps:

- Delete the HyperPod cluster

- Delete the CloudFormation stack, which includes the VPC, subnets, security group, and FSx for Lustre volume
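
The same order can be scripted. This sketch builds the AWS CLI calls without running them; IDs, ARNs, the cluster name, and the stack name are placeholders. Two details it encodes are worth checking against the service documentation: Client VPN target networks are disassociated before the endpoint is deleted, and the script waits for the NLB to be deleted before removing the target group, which cannot be deleted while a listener still uses it. Several of these deletions are asynchronous, so in practice you may need to wait or retry between steps, especially before deleting the CloudFormation stack while the cluster is still shutting down.

```python
def cleanup_commands(endpoint_id, association_ids, load_balancer_arn, target_group_arn,
                     cluster_name=None, stack_name=None):
    """Return AWS CLI argv lists in the clean-up order described above."""
    cmds = []
    # 1. Client VPN: disassociate target networks, then delete the endpoint.
    for association_id in association_ids:
        cmds.append(["aws", "ec2", "disassociate-client-vpn-target-network",
                     "--client-vpn-endpoint-id", endpoint_id,
                     "--association-id", association_id])
    cmds.append(["aws", "ec2", "delete-client-vpn-endpoint",
                 "--client-vpn-endpoint-id", endpoint_id])
    # 2. NLB, then block until it is gone so its listener no longer uses the target group.
    cmds.append(["aws", "elbv2", "delete-load-balancer",
                 "--load-balancer-arn", load_balancer_arn])
    cmds.append(["aws", "elbv2", "wait", "load-balancers-deleted",
                 "--load-balancer-arns", load_balancer_arn])
    # 3. Target group.
    cmds.append(["aws", "elbv2", "delete-target-group",
                 "--target-group-arn", target_group_arn])
    # Optional: the cluster, then the stack holding its VPC, subnets, and FSx volume.
    if cluster_name:
        cmds.append(["aws", "sagemaker", "delete-cluster", "--cluster-name", cluster_name])
    if stack_name:
        cmds.append(["aws", "cloudformation", "delete-stack", "--stack-name", stack_name])
    return cmds
```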

Conclusion

In this post, we explored how to implement login node load balancing for SageMaker HyperPod clusters. By using a Network Load Balancer to distribute user connections across login nodes, you can optimize resource utilization and improve the overall multi-user experience, providing seamless access to cluster resources for every user.

This approach represents just one way to customize your HyperPod cluster. Thanks to the flexibility of SageMaker HyperPod, you can adapt configurations to your unique needs while benefiting from a managed, resilient environment. Whether you need to scale foundation model workloads, share compute resources across different tasks, or support long-running training jobs, SageMaker HyperPod offers a versatile solution that can evolve with your requirements.

For more details on making the most of SageMaker HyperPod, dive into the HyperPod workshop and explore further blog posts covering HyperPod.

About the Authors

Janosch Woschitz is a Senior Solutions Architect at AWS, specializing in AI/ML. With over 15 years of experience, he supports customers globally in using AI and ML for innovative solutions and in building ML platforms on AWS. His expertise spans machine learning, data engineering, and scalable distributed systems, augmented by a strong background in software engineering and industry expertise in domains such as autonomous driving.

Giuseppe Angelo Porcelli is a Principal Machine Learning Specialist Solutions Architect for Amazon Web Services. With several years of software engineering experience and an ML background, he works with customers of any size to understand their business and technical needs and design AI and ML solutions that make the best use of the AWS Cloud and the Amazon Machine Learning stack. He has worked on projects in different domains, including MLOps, computer vision, and NLP, involving a broad set of AWS services. In his free time, Giuseppe enjoys playing football.
