As generative AI fashions advance in creating multimedia content material, the distinction between good and nice output typically lies within the particulars that solely human suggestions can seize. Audio and video segmentation offers a structured solution to collect this detailed suggestions, permitting fashions to study by way of reinforcement studying from human suggestions (RLHF) and supervised fine-tuning (SFT). Annotators can exactly mark and consider particular moments in audio or video content material, serving to fashions perceive what makes content material really feel genuine to human viewers and listeners.

Take, for example, text-to-video era, the place fashions must study not simply what to generate however how one can preserve consistency and pure stream throughout time. When making a scene of an individual performing a sequence of actions, elements just like the timing of actions, visible consistency, and smoothness of transitions contribute to the standard. By means of exact segmentation and annotation, human annotators can present detailed suggestions on every of those elements, serving to fashions study what makes a generated video sequence really feel pure quite than synthetic. Equally, in text-to-speech purposes, understanding the delicate nuances of human speech—from the size of pauses between phrases to modifications in emotional tone—requires detailed human suggestions at a section stage. This granular enter helps fashions learn to produce speech that sounds pure, with applicable pacing and emotional consistency. As massive language fashions (LLMs) more and more combine extra multimedia capabilities, human suggestions turns into much more essential in coaching them to generate wealthy, multi-modal content material that aligns with human high quality requirements.

The trail to creating efficient AI fashions for audio and video era presents a number of distinct challenges. Annotators must establish exact moments the place generated content material matches or deviates from pure human expectations. For speech era, this implies marking actual factors the place intonation modifications, the place pauses really feel unnatural, or the place emotional tone shifts unexpectedly. In video era, annotators should pinpoint frames the place movement turns into jerky, the place object consistency breaks, or the place lighting modifications seem synthetic. Conventional annotation instruments, with primary playback and marking capabilities, typically fall brief in capturing these nuanced particulars.

Amazon SageMaker Floor Reality allows RLHF by permitting groups to combine detailed human suggestions instantly into mannequin coaching. By means of {custom} human annotation workflows, organizations can equip annotators with instruments for high-precision segmentation. This setup allows the mannequin to study from human-labeled information, refining its capability to supply content material that aligns with pure human expectations.

On this submit, we present you how one can implement an audio and video segmentation resolution within the accompanying GitHub repository utilizing SageMaker Floor Reality. We information you thru deploying the mandatory infrastructure utilizing AWS CloudFormation, creating an inner labeling workforce, and establishing your first labeling job. We reveal how one can use Wavesurfer.js for exact audio visualization and segmentation, configure each segment-level and full-content annotations, and construct the interface in your particular wants. We cowl each console-based and programmatic approaches to creating labeling jobs, and supply steerage on extending the answer with your personal annotation wants. By the top of this submit, you’ll have a completely purposeful audio/video segmentation workflow that you may adapt for numerous use circumstances, from coaching speech synthesis fashions to bettering video era capabilities.

Characteristic Overview

The combination of Wavesurfer.js in our UI offers an in depth waveform visualization the place annotators can immediately see patterns in speech, silence, and audio depth. As an example, when engaged on speech synthesis, annotators can visually establish unnatural gaps between phrases or abrupt modifications in quantity that may make generated speech sound robotic. The power to zoom into these waveform patterns means they’ll work with millisecond precision—marking precisely the place a pause is simply too lengthy or the place an emotional transition occurs too abruptly.

On this snapshot of audio segmentation, we’re capturing a customer-representative dialog, annotating speaker segments, feelings, and transcribing the dialogue. The UI permits for playback pace adjustment and zoom performance for exact audio evaluation.

The multi-track characteristic lets annotators create separate tracks for evaluating completely different elements of the content material. In a text-to-speech job, one observe would possibly concentrate on pronunciation accuracy, one other on emotional consistency, and a 3rd on pure pacing. For video era duties, annotators can mark segments the place movement flows naturally, the place object consistency is maintained, and the place scene transitions work nicely. They will alter playback pace to catch delicate particulars, and the visible timeline for exact begin and finish factors for every marked section.

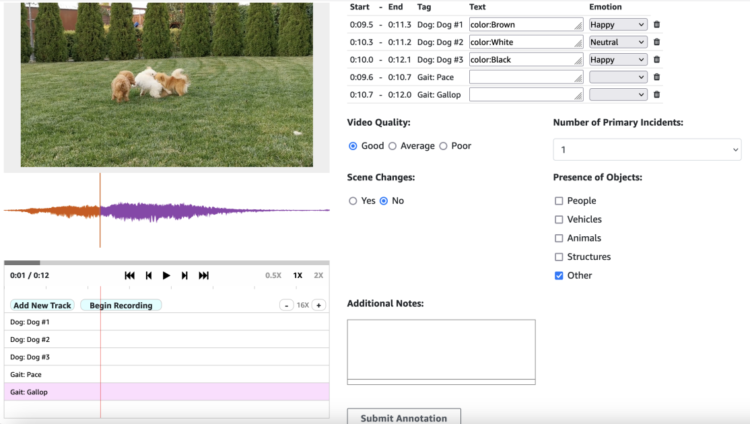

On this snapshot of video segmentation, we’re annotating a scene with canines, monitoring particular person animals, their colours, feelings, and gaits. The UI additionally allows general video high quality evaluation, scene change detection, and object presence classification.

Annotation course of

Annotators start by selecting Add New Monitor and deciding on applicable classes and tags for his or her annotation job. After you create the observe, you’ll be able to select Start Recording on the level the place you need to begin a section. Because the content material performs, you’ll be able to monitor the audio waveform or video frames till you attain the specified finish level, then select Cease Recording. The newly created section seems in the proper pane, the place you’ll be able to add classifications, transcriptions, or different related labels. This course of will be repeated for as many segments as wanted, with the flexibility to regulate section boundaries, delete incorrect segments, or create new tracks for various annotation functions.

Significance of high-quality information and decreasing labeling errors

Excessive-quality information is crucial for coaching generative AI fashions that may produce pure, human-like audio and video content material. The efficiency of those fashions relies upon instantly on the accuracy and element of human suggestions, which stems from the precision and completeness of the annotation course of. For audio and video content material, this implies capturing not simply what sounds or seems to be unnatural, however precisely when and the way these points happen.

Our goal constructed UI in SageMaker Floor Reality addresses frequent challenges in audio and video annotation that usually result in inconsistent or imprecise suggestions. When annotators work with lengthy audio or video recordsdata, they should mark exact moments the place generated content material deviates from pure human expectations. For instance, in speech era, an unnatural pause would possibly final solely a fraction of a second, however its affect on perceived high quality is critical. The instrument’s zoom performance permits annotators to increase these temporary moments throughout their display screen, making it potential to mark the precise begin and finish factors of those delicate points. This precision helps fashions study the tremendous particulars that separate pure from artificial-sounding speech.

Resolution overview

This audio/video segmentation resolution combines a number of AWS providers to create a sturdy annotation workflow. At its core, Amazon Easy Storage Service (Amazon S3) serves because the safe storage for enter recordsdata, manifest recordsdata, annotation outputs, and the online UI elements. SageMaker Floor Reality offers annotators with an online portal to entry their labeling jobs and manages the general annotation workflow. The next diagram illustrates the answer structure.

The UI template, which incorporates our specialised audio/video segmentation interface constructed with Wavesurfer.js, requires particular JavaScript and CSS recordsdata. These recordsdata are hosted by way of Amazon CloudFront distribution, offering dependable and environment friendly supply to annotators’ browsers. By utilizing CloudFront with an origin entry identification and applicable bucket insurance policies, we permit the UI elements to be served to annotators. This setup follows AWS greatest practices for least-privilege entry, ensuring CloudFront can solely entry the precise UI recordsdata wanted for the annotation interface.

Pre-annotation and post-annotation AWS Lambda features are elective elements that may improve the workflow. The pre-annotation Lambda perform can course of the enter manifest file earlier than information is offered to annotators, enabling any crucial formatting or modifications. Equally, the post-annotation Lambda perform can rework the annotation outputs into particular codecs required for mannequin coaching. These features present flexibility to adapt the workflow to particular wants with out requiring modifications to the core annotation course of.

The answer makes use of AWS Id and Entry Administration (IAM) roles to handle permissions:

- A SageMaker Floor Reality IAM position allows entry to Amazon S3 for studying enter recordsdata and writing annotation outputs

- If used, Lambda perform roles present the mandatory permissions for preprocessing and postprocessing duties

Let’s stroll by way of the method of establishing your annotation workflow. We begin with a easy state of affairs: you might have an audio file saved in Amazon S3, together with some metadata like a name ID and its transcription. By the top of this walkthrough, you’ll have a completely purposeful annotation system the place your staff can section and classify this audio content material.

Conditions

For this walkthrough, ensure you have the next:

Create your inner workforce

Earlier than we dive into the technical setup, let’s create a personal workforce in SageMaker Floor Reality. This lets you check the annotation workflow along with your inner staff earlier than scaling to a bigger operation.

- On the SageMaker console, select Labeling workforces.

- Select Non-public for the workforce kind and create a brand new non-public staff.

- Add staff members utilizing their e mail addresses—they are going to obtain directions to arrange their accounts.

Deploy the infrastructure

Though this demonstrates utilizing a CloudFormation template for fast deployment, you may as well arrange the elements manually. The belongings (JavaScript and CSS recordsdata) can be found in our GitHub repository. Full the next steps for handbook deployment:

- Obtain these belongings instantly from the GitHub repository.

- Host them in your personal S3 bucket.

- Arrange your personal CloudFront distribution to serve these recordsdata.

- Configure the mandatory permissions and CORS settings.

This handbook method provides you extra management over infrastructure setup and may be most popular you probably have present CloudFront distributions or a must customise safety controls and belongings.

The remainder of this submit will concentrate on the CloudFormation deployment method, however the labeling job configuration steps stay the identical no matter the way you select to host the UI belongings.

This CloudFormation template creates and configures the next AWS sources:

- S3 bucket for UI elements:

- Shops the UI JavaScript and CSS recordsdata

- Configured with CORS settings required for SageMaker Floor Reality

- Accessible solely by way of CloudFront, circuitously public

- Permissions are set utilizing a bucket coverage that grants learn entry solely to the CloudFront Origin Entry Id (OAI)

- CloudFront distribution:

- Offers safe and environment friendly supply of UI elements

- Makes use of an OAI to securely entry the S3 bucket

- Is configured with applicable cache settings for optimum efficiency

- Entry logging is enabled, with logs being saved in a devoted S3 bucket

- S3 bucket for CloudFront logs:

- Shops entry logs generated by CloudFront

- Is configured with the required bucket insurance policies and ACLs to permit CloudFront to put in writing logs

- Object possession is ready to ObjectWriter to allow ACL utilization for CloudFront logging

- Lifecycle configuration is ready to mechanically delete logs older than 90 days to handle storage

- Lambda perform:

- Downloads UI recordsdata from our GitHub repository

- Shops them within the S3 bucket for UI elements

- Runs solely throughout preliminary setup and makes use of least privilege permissions

- Permissions embrace Amazon CloudWatch Logs for monitoring and particular S3 actions (learn/write) restricted to the created bucket

After the CloudFormation stack deployment is full, you’ll find the CloudFront URLs for accessing the JavaScript and CSS recordsdata on the AWS CloudFormation console. You want these CloudFront URLs to replace your UI template earlier than creating the labeling job. Word these values—you’ll use them when creating the labeling job.

Put together your enter manifest

Earlier than you create the labeling job, you might want to put together an enter manifest file that tells SageMaker Floor Reality what information to current to annotators. The manifest construction is versatile and will be custom-made primarily based in your wants. For this submit, we use a easy construction:

You’ll be able to adapt this construction to incorporate further metadata that your annotation workflow requires. For instance, you would possibly need to add speaker data, timestamps, or different contextual information. The secret is ensuring your UI template is designed to course of and show these attributes appropriately.

Create your labeling job

With the infrastructure deployed, let’s create the labeling job in SageMaker Floor Reality. For full directions, consult with Speed up {custom} labeling workflows in Amazon SageMaker Floor Reality with out utilizing AWS Lambda.

- On the SageMaker console, select Create labeling job.

- Give your job a reputation.

- Specify your enter information location in Amazon S3.

- Specify an output bucket the place annotations shall be saved.

- For the duty kind, choose Customized labeling job.

- Within the UI template discipline, find the placeholder values for the JavaScript and CSS recordsdata and replace as follows:

- Substitute

audiovideo-wavesufer.jsalong with your CloudFront JavaScript URL from the CloudFormation stack outputs. - Substitute

audiovideo-stylesheet.cssalong with your CloudFront CSS URL from the CloudFormation stack outputs.

- Substitute

- Earlier than you launch the job, use the Preview characteristic to confirm your interface.

You must see the Wavesurfer.js interface load accurately with all controls working correctly. This preview step is essential—it confirms that your CloudFront URLs are accurately specified and the interface is correctly configured.

Programmatic setup

Alternatively, you’ll be able to create your labeling job programmatically utilizing the CreateLabelingJob API. That is notably helpful for automation or when you might want to create a number of jobs. See the next code:

The API method provides the identical performance because the SageMaker console, however permits for automation and integration with present workflows. Whether or not you select the SageMaker console or API method, the outcome is identical: a completely configured labeling job prepared in your annotation staff.

Understanding the output

After your annotators full their work, SageMaker Floor Reality will generate an output manifest in your specified S3 bucket. This manifest comprises wealthy data at two ranges:

- Phase-level classifications – Particulars about every marked section, together with begin and finish instances and assigned classes

- Full-content classifications – General scores and classifications for your complete file

Let’s have a look at a pattern output to grasp its construction:

This two-level annotation construction offers beneficial coaching information in your AI fashions, capturing each fine-grained particulars and general content material evaluation.

Customizing the answer

Our audio/video segmentation resolution is designed to be extremely customizable. Let’s stroll by way of how one can adapt the interface to match your particular annotation necessities.

Customise segment-level annotations

The segment-level annotations are managed within the report() perform of the JavaScript code. The next code snippet reveals how one can modify the annotation choices for every section: