Organizations building custom machine learning (ML) models often have specialized requirements that standard platforms can't accommodate. For example, healthcare companies need specific environments to protect patient data while meeting HIPAA compliance, financial institutions require specific hardware configurations to optimize proprietary trading algorithms, and research teams need flexibility to experiment with cutting-edge techniques using custom frameworks. These specialized needs drive organizations to build custom training environments that give them control over hardware selection, software versions, and security configurations.

These custom environments provide the necessary flexibility, but they create significant challenges for ML lifecycle management. Organizations typically try to solve these problems by building additional custom tools, and some teams piece together various open source solutions. These approaches further increase operational costs and consume engineering resources that could be better used elsewhere.

AWS Deep Learning Containers (DLCs) and managed MLflow on Amazon SageMaker AI offer a powerful solution that addresses both needs. DLCs provide preconfigured Docker containers with frameworks like TensorFlow and PyTorch, including NVIDIA CUDA drivers for GPU support. DLCs are optimized for performance on AWS, regularly maintained to include the latest framework versions and patches, and designed to integrate seamlessly with AWS services for training and inference. AWS Deep Learning AMIs (DLAMIs) are preconfigured Amazon Machine Images (AMIs) for Amazon Elastic Compute Cloud (Amazon EC2) instances. DLAMIs come with popular deep learning frameworks like PyTorch and TensorFlow, and are available for CPU-based instances and high-powered GPU-accelerated instances. They include NVIDIA CUDA, cuDNN, and other necessary tools, and AWS manages their updates. Together, DLAMIs and DLCs provide ML practitioners with the infrastructure and tools to accelerate deep learning in the cloud at scale.

SageMaker managed MLflow delivers comprehensive lifecycle management with one-line automatic logging, enhanced comparison capabilities, and complete lineage tracking. As a fully managed service on SageMaker AI, it removes the operational burden of maintaining tracking infrastructure.
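To illustrate what one-line automatic logging can look like from a training script, here is a minimal sketch. It assumes the `mlflow` and `sagemaker-mlflow` packages are installed. With the `sagemaker-mlflow` plugin, a tracking server ARN can be passed directly as the tracking URI. The ARN-parsing helper is hypothetical and is not part of either library, and the ARN in the usage note is a placeholder.

```python
import re

# Hypothetical helper: checks the shape of a SageMaker MLflow tracking server ARN,
# e.g. arn:aws:sagemaker:<region>:<account-id>:mlflow-tracking-server/<name>
_TRACKING_SERVER_ARN = re.compile(
    r"^arn:aws[a-z-]*:sagemaker:(?P<region>[a-z0-9-]+):(?P<account>\d{12}):"
    r"mlflow-tracking-server/(?P<name>[A-Za-z0-9-]+)$"
)


def parse_tracking_server_arn(arn: str) -> dict:
    """Return the region, account ID, and server name encoded in the ARN."""
    match = _TRACKING_SERVER_ARN.match(arn)
    if match is None:
        raise ValueError(f"not a SageMaker MLflow tracking server ARN: {arn!r}")
    return match.groupdict()


def configure_mlflow(tracking_server_arn: str, experiment_name: str) -> None:
    """Point MLflow at the managed tracking server and enable TensorFlow autologging."""
    parse_tracking_server_arn(tracking_server_arn)  # fail fast on a malformed ARN
    # Imported lazily so the ARN helper works without MLflow installed.
    # Assumes `pip install mlflow sagemaker-mlflow` has already been run.
    import mlflow
    import mlflow.tensorflow

    mlflow.set_tracking_uri(tracking_server_arn)  # resolved by the sagemaker-mlflow plugin
    mlflow.set_experiment(experiment_name)
    mlflow.tensorflow.autolog()  # the "one line" that logs params, metrics, and the model
```

A typical call would be `configure_mlflow("arn:aws:sagemaker:us-east-1:111122223333:mlflow-tracking-server/my-tracking-server", "abalone-tensorflow-experiment")`, made before `model.fit()`.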

In this post, we show how to integrate AWS DLCs with MLflow to create a solution that balances infrastructure control with robust ML governance. We walk through a functional setup that your team can use to meet your specialized requirements while significantly reducing the time and resources needed for ML lifecycle management.

Solution overview

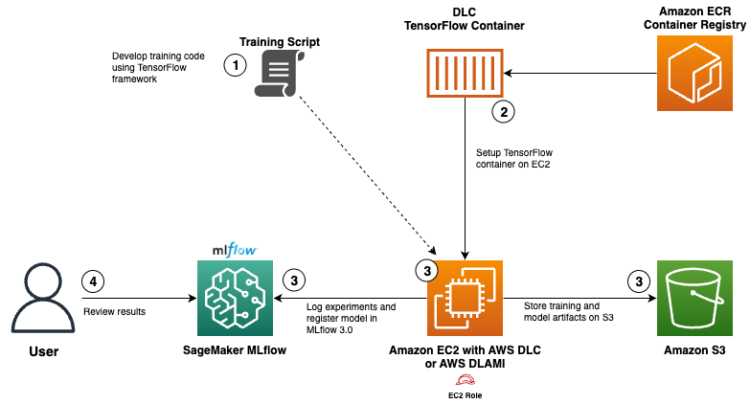

In this section, we describe the architecture and the AWS services used to integrate AWS DLCs with SageMaker managed MLflow. The solution combines several AWS services to create a scalable environment for ML development:

- AWS DLCs provide preconfigured Docker images with optimized ML frameworks

- SageMaker managed MLflow introduces enhanced model registry capabilities with fine-grained access controls and adds generative AI support through specialized tracking for LLM experiments and prompt management

- Amazon Elastic Container Registry (Amazon ECR) stores and manages container images

- Amazon Simple Storage Service (Amazon S3) stores input and output artifacts

- Amazon EC2 runs the AWS DLCs

For this use case, you'll develop a TensorFlow neural network model for abalone age prediction with integrated SageMaker managed MLflow tracking code. Next, you'll pull an optimized TensorFlow training container from the AWS public ECR repository and configure an EC2 instance with access to the MLflow tracking server. You'll then run the training process inside the DLC, storing model artifacts in Amazon S3 and logging experiment results to MLflow. Finally, you'll view and compare experiment results in the MLflow UI to evaluate model performance.
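The repository's exact preprocessing isn't reproduced in this post. The following standard-library sketch assumes the raw UCI abalone file layout: no header row, and each row holds sex, seven physical measurements, and the ring count. It also uses the UCI convention that age in years equals the ring count plus 1.5. The function and constant names are hypothetical.

```python
import csv
import io
from typing import List, Tuple

# Sex is categorical: M (male), F (female), I (infant); it is one-hot encoded.
SEX_CATEGORIES = ("M", "F", "I")
NUM_MEASUREMENTS = 7  # length, diameter, height, and four weight columns


def parse_abalone_row(row: List[str]) -> Tuple[List[float], float]:
    """Convert one CSV row into (feature vector, age in years)."""
    if len(row) != 1 + NUM_MEASUREMENTS + 1:
        raise ValueError(f"expected 9 fields, got {len(row)}")
    sex, *measurements, rings = row
    if sex not in SEX_CATEGORIES:
        raise ValueError(f"unknown sex category: {sex!r}")
    one_hot = [1.0 if sex == category else 0.0 for category in SEX_CATEGORIES]
    features = one_hot + [float(value) for value in measurements]
    age = int(rings) + 1.5  # UCI convention: age = rings + 1.5
    return features, age


def load_abalone(text: str) -> Tuple[List[List[float]], List[float]]:
    """Parse the whole file into a feature matrix and a target vector."""
    features, targets = [], []
    for row in csv.reader(io.StringIO(text)):
        if not row:  # skip blank lines
            continue
        x, y = parse_abalone_row(row)
        features.append(x)
        targets.append(y)
    return features, targets
```

Keeping parsing separate from the model code makes it easy to check the feature layout (three one-hot columns followed by seven measurements) before any training starts.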

The following diagram shows the interaction between the AWS services, AWS DLCs, and SageMaker managed MLflow in this solution.

The workflow consists of the following steps:

- Develop a TensorFlow neural network model for abalone age prediction. Integrate SageMaker managed MLflow tracking into the model code to log parameters, metrics, and artifacts.

- Pull an optimized TensorFlow training container from the AWS public ECR repository. Configure Amazon EC2 and the DLAMI with access to the MLflow tracking server using an AWS Identity and Access Management (IAM) role for EC2.

- Run the training process inside the DLC on Amazon EC2, store model artifacts in Amazon S3, log all experiment results, and register the model in MLflow.

- Compare experiment results in the MLflow UI.
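The following sketch shows one way the training step could combine Keras with explicit MLflow logging. It is not the repository's training script. The network shape, the default hyperparameters, and both function names are hypothetical. It assumes `tensorflow`, `numpy`, `mlflow`, and `sagemaker-mlflow` are available inside the container, and that the tracking URI and experiment are already set. It uses the MLflow 2.x-style `artifact_path` argument to `mlflow.tensorflow.log_model()`.

```python
from typing import Optional

# Hypothetical defaults; the repository's actual values may differ.
DEFAULT_HYPERPARAMS = {
    "epochs": 50,
    "batch_size": 32,
    "hidden_units": 64,
    "learning_rate": 0.001,
}


def merge_hyperparameters(overrides: Optional[dict] = None) -> dict:
    """Apply string or numeric overrides, keeping each default's type."""
    params = dict(DEFAULT_HYPERPARAMS)
    for key, value in (overrides or {}).items():
        if key not in params:
            raise KeyError(f"unknown hyperparameter: {key}")
        params[key] = type(params[key])(value)  # e.g. "0.01" -> 0.01, "64" -> 64
    return params


def train_and_log(features, targets, params: dict, registered_model_name: str):
    """Train a small regression network and record the run in MLflow."""
    # Heavy imports are deferred so merge_hyperparameters works on its own.
    import mlflow
    import mlflow.tensorflow
    import numpy as np
    import tensorflow as tf

    x = np.asarray(features, dtype="float32")
    y = np.asarray(targets, dtype="float32")
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(x.shape[1],)),
        tf.keras.layers.Dense(params["hidden_units"], activation="relu"),
        tf.keras.layers.Dense(params["hidden_units"], activation="relu"),
        tf.keras.layers.Dense(1),  # single output: predicted age
    ])
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=params["learning_rate"]),
        loss="mse",
        metrics=["mae"],
    )
    with mlflow.start_run():
        mlflow.log_params(params)
        history = model.fit(
            x, y,
            epochs=params["epochs"],
            batch_size=params["batch_size"],
            validation_split=0.2,
            verbose=0,
        )
        mlflow.log_metric("final_val_mae", float(history.history["val_mae"][-1]))
        # Passing registered_model_name also creates a new registered model version.
        mlflow.tensorflow.log_model(
            model,
            artifact_path="model",
            registered_model_name=registered_model_name,
        )
    return model
```

Typed overrides make it straightforward to pass hyperparameters as command-line strings into the container without scattering casts through the training code.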

Prerequisites

To follow along with this walkthrough, make sure you have the following prerequisites:

- An AWS account with billing enabled.

- An EC2 instance (t3.large or larger) running Ubuntu 20.04 or later with at least 20 GB of available disk space for Docker images and containers.

- Docker (latest) installed on the EC2 instance.

- The AWS Command Line Interface (AWS CLI) version 2.0 or later.

- An IAM role with permissions for the following:

  - Amazon EC2 to communicate with SageMaker managed MLflow.

  - Amazon ECR to pull the TensorFlow container.

  - SageMaker managed MLflow to track experiments and register models.

- An Amazon SageMaker Studio domain. To create a domain, refer to Guide to getting set up with Amazon SageMaker AI. Add the sagemaker-mlflow:AccessUI permission to the SageMaker execution role that was created. This permission makes it possible to navigate to MLflow from the SageMaker Studio console.

- A SageMaker managed MLflow tracking server set up in SageMaker AI.

- Internet access from the EC2 instance to download the abalone dataset.

- The GitHub repository cloned to your EC2 instance.
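To make the IAM prerequisite concrete, the following Python builds a hypothetical identity-based policy for the EC2 instance role. It is a starting-point sketch, not the policy from the GitHub repository. It grants three things:

- `sagemaker-mlflow:*` on a single tracking server
- The ECR actions needed to pull from a private ECR registry (anonymous pulls from the ECR Public Gallery typically don't need them)
- Read/write access to one S3 bucket

The ARN and bucket name are placeholders. Narrow the action lists to what your training script actually calls.

```python
import json


def ec2_training_role_policy(tracking_server_arn: str, artifact_bucket: str) -> dict:
    """Return an IAM policy document (as a dict) for the EC2 training role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Log experiments, metrics, and model versions to one tracking server.
                "Sid": "MlflowTracking",
                "Effect": "Allow",
                "Action": ["sagemaker-mlflow:*"],
                "Resource": [tracking_server_arn],
            },
            {
                # Authenticate Docker to ECR and pull image layers.
                # GetAuthorizationToken does not support resource-level scoping.
                "Sid": "EcrPull",
                "Effect": "Allow",
                "Action": [
                    "ecr:GetAuthorizationToken",
                    "ecr:BatchCheckLayerAvailability",
                    "ecr:GetDownloadUrlForLayer",
                    "ecr:BatchGetImage",
                ],
                "Resource": ["*"],
            },
            {
                # Read input data and write model artifacts.
                "Sid": "ArtifactBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:GetObject", "s3:PutObject"],
                "Resource": [
                    f"arn:aws:s3:::{artifact_bucket}",
                    f"arn:aws:s3:::{artifact_bucket}/*",
                ],
            },
        ],
    }


if __name__ == "__main__":
    policy = ec2_training_role_policy(
        "arn:aws:sagemaker:us-east-1:111122223333:mlflow-tracking-server/my-tracking-server",
        "my-artifact-bucket",
    )
    print(json.dumps(policy, indent=2))  # paste into the IAM console or save for the CLI
```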

Deploy the solution

Detailed step-by-step instructions are available in the README file of the accompanying GitHub repository. The walkthrough covers the entire workflow, from provisioning infrastructure and setting up permissions to running your first training job with comprehensive experiment tracking.
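The README remains the authoritative reference for deployment. As a rough sketch of the container portion, the following Python assembles the Docker commands that pull a TensorFlow training DLC and run a training script inside it on the EC2 instance. Note these assumptions:

- The image URI and tag, the mount path, and the `train.py` entry point are hypothetical placeholders. Check the list of available DLC images for a current tag.
- Host networking is used so that code in the container can reach the instance metadata service and pick up the EC2 instance role's credentials.
- MLflow and the `sagemaker-mlflow` plugin may not be preinstalled in the image, so they are installed when the container starts.

```python
import shlex
from typing import List

# Placeholder image; pick a real tag from the AWS Deep Learning Containers image list.
DEFAULT_IMAGE = (
    "public.ecr.aws/deep-learning-containers/"
    "tensorflow-training:2.18.0-cpu-py310-ubuntu22.04-ec2"
)


def docker_pull_command(image: str = DEFAULT_IMAGE) -> List[str]:
    """Build the command that pulls the training image."""
    return ["docker", "pull", image]


def docker_run_command(
    tracking_server_arn: str,
    region: str,
    code_dir: str,
    image: str = DEFAULT_IMAGE,
    entry_point: str = "train.py",
) -> List[str]:
    """Build a `docker run` command that trains inside the DLC and logs to MLflow."""
    setup_and_train = (
        f"pip install --quiet mlflow sagemaker-mlflow && python {shlex.quote(entry_point)}"
    )
    return [
        "docker", "run", "--rm",
        # Host networking lets the container reach the instance metadata service,
        # so AWS SDKs inside it can use the EC2 instance role's credentials.
        "--network", "host",
        "-v", f"{code_dir}:/opt/ml/code",  # mount the cloned repository
        "-w", "/opt/ml/code",
        "-e", f"MLFLOW_TRACKING_URI={tracking_server_arn}",  # read by MLflow at startup
        "-e", f"AWS_DEFAULT_REGION={region}",
        image,
        "bash", "-c", setup_and_train,
    ]


if __name__ == "__main__":
    print(shlex.join(docker_pull_command()))
    print(shlex.join(docker_run_command(
        "arn:aws:sagemaker:us-east-1:111122223333:mlflow-tracking-server/my-tracking-server",
        "us-east-1",
        "/home/ubuntu/repo",
    )))
```

Building the commands as argument lists rather than one string avoids most quoting mistakes. `shlex.join` renders them for review or copy-paste.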

Analyze experiment results

After you've implemented the solution by following the steps in the README file, you can access and analyze your experiment results. The following screenshots demonstrate how SageMaker managed MLflow provides comprehensive experiment tracking, model governance, and auditability for your deep learning workloads. When training is complete, all experiment metrics, parameters, and artifacts are automatically captured in MLflow, giving you a central location to track and compare your model development.

The following screenshot shows the experiment abalone-tensorflow-experiment with a run named unique-cod-104. This dashboard gives you a complete overview of all experiment runs, so you can compare different approaches and model iterations at a glance.
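Runs can also be compared programmatically. In this minimal sketch, the metric name `val_loss` is an assumption, so use whatever metric your script logs. The code also assumes the tracking URI already points at your tracking server. The helper names are hypothetical, and the ranking logic is kept separate from the MLflow call so that it can be checked offline.

```python
from typing import Dict, List, Optional


def best_run(runs: List[Dict], metric: str, higher_is_better: bool = False) -> Optional[Dict]:
    """Return the run with the best value for `metric`, ignoring runs that lack it."""
    scored = [run for run in runs if metric in run["metrics"]]
    if not scored:
        return None
    pick = max if higher_is_better else min
    return pick(scored, key=lambda run: run["metrics"][metric])


def fetch_runs(experiment_name: str) -> List[Dict]:
    """Read every run in the experiment from the configured MLflow tracking server."""
    import mlflow  # assumes mlflow + sagemaker-mlflow and a configured tracking URI

    runs = mlflow.search_runs(experiment_names=[experiment_name], output_format="list")
    return [
        {"run_id": r.info.run_id, "run_name": r.info.run_name, "metrics": dict(r.data.metrics)}
        for r in runs
    ]
```

For example, `best_run(fetch_runs("abalone-tensorflow-experiment"), "val_loss")` returns the run with the lowest validation loss, or `None` if no run logged that metric.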

The following screenshot shows the details for run unique-cod-104, including the registered model abalone-tensorflow-custom-callback-model (version v2). This view provides critical information about model provenance, showing exactly which experiment run produced which model version, a key component of model governance.

The following visualization tracks the training loss across epochs, captured using a custom callback. Such metrics help you understand model convergence patterns and evaluate training performance, and can reveal potential optimization opportunities.
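The repository's custom callback may differ from this sketch. To illustrate the technique, a Keras callback can forward the `logs` dictionary that Keras passes to `on_epoch_end` to `mlflow.log_metric`. Using the epoch index as the step lets the MLflow UI plot each metric across epochs. The helper and class names and the `epoch_` prefix are hypothetical. The pure helper is separated out so it can be checked without TensorFlow installed.

```python
from typing import List, Mapping, Optional, Tuple


def epoch_metrics(logs: Optional[Mapping], prefix: str = "epoch_") -> List[Tuple[str, float]]:
    """Convert a Keras `logs` dict into (metric name, value) pairs, skipping non-numeric entries."""
    pairs = []
    for key, value in sorted((logs or {}).items()):
        try:
            pairs.append((prefix + key, float(value)))
        except (TypeError, ValueError):
            continue  # e.g. None or a non-numeric string
    return pairs


def make_mlflow_epoch_logger(prefix: str = "epoch_"):
    """Create a Keras callback that logs every numeric epoch metric to the active MLflow run."""
    import mlflow
    import tensorflow as tf

    class MlflowEpochLogger(tf.keras.callbacks.Callback):
        def on_epoch_end(self, epoch, logs=None):
            for name, value in epoch_metrics(logs, prefix):
                mlflow.log_metric(name, value, step=epoch)

    return MlflowEpochLogger()
```

Inside `with mlflow.start_run():`, you would pass `callbacks=[make_mlflow_epoch_logger()]` to `model.fit()`.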

The registered models view in the following screenshot shows how abalone-tensorflow-custom-callback-model is tracked in the model registry. This integration enables versioning, model lifecycle management, and deployment tracking.

The following screenshot illustrates one of the solution's most powerful governance features. When you log a model with mlflow.tensorflow.log_model() and set the registered_model_name parameter, the model is automatically registered in the Amazon SageMaker Model Registry. This creates full traceability from experiment to deployed model and establishes an audit trail that connects your training runs directly to production models.
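To follow that audit trail from code rather than the console, you can use MLflow's client API to list a registered model's versions together with the run that produced each one. This minimal sketch assumes a configured tracking URI, and the helper names are hypothetical. MLflow may report version numbers as strings, so the sketch converts them with `int()` to make version 10 sort after version 9.

```python
from typing import Dict, Iterable, List, Tuple


def lineage_by_version(versions: Iterable[Tuple[object, str]]) -> Dict[int, str]:
    """Map each model version number to the MLflow run ID that produced it."""
    return {int(version): run_id for version, run_id in versions}


def latest_lineage(versions: Iterable[Tuple[object, str]]) -> Tuple[int, str]:
    """Return (latest version, source run ID); raises ValueError if there are no versions."""
    lineage = lineage_by_version(versions)
    latest = max(lineage)
    return latest, lineage[latest]


def registered_versions(model_name: str) -> List[Tuple[object, str]]:
    """Fetch (version, run_id) pairs for a registered model from the tracking server."""
    from mlflow.tracking import MlflowClient  # assumes a configured tracking URI

    client = MlflowClient()
    return [(mv.version, mv.run_id) for mv in client.search_model_versions(f"name='{model_name}'")]
```

For example, `latest_lineage(registered_versions("abalone-tensorflow-custom-callback-model"))` returns the newest version and the ID of the run that produced it.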

This integration between your custom training environments and SageMaker governance tools helps you maintain visibility and compliance throughout your ML lifecycle.

The model artifacts are automatically uploaded to Amazon S3 when training completes, as shown in the following screenshot. This organized storage structure makes sure that all model components, including weights, configurations, and associated metadata, are securely preserved and accessible through a standardized path.
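To inspect those artifacts outside the console, you can list the objects under a run's artifact location with boto3. In this minimal sketch, the artifact URI is something you would read from the run itself, such as its `artifact_uri`. The bucket and prefix in the example are hypothetical placeholders.

```python
from typing import List, Tuple
from urllib.parse import urlparse


def parse_s3_uri(uri: str) -> Tuple[str, str]:
    """Split s3://bucket/some/prefix into (bucket, prefix)."""
    parsed = urlparse(uri)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"not an S3 URI: {uri!r}")
    return parsed.netloc, parsed.path.lstrip("/")


def list_artifact_keys(artifact_uri: str) -> List[str]:
    """List every object key stored under an MLflow run's S3 artifact location."""
    import boto3  # picks up the EC2 instance role's credentials

    bucket, prefix = parse_s3_uri(artifact_uri)
    paginator = boto3.client("s3").get_paginator("list_objects_v2")
    keys = []
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        keys.extend(obj["Key"] for obj in page.get("Contents", []))
    return keys
```

The paginator handles runs with more than 1,000 artifact objects, which a single `list_objects_v2` call would truncate.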

Cost implications

The following resources incur costs. Refer to the respective pricing page to estimate costs.

- Amazon EC2 On-Demand – Pricing depends on the instance size and the AWS Region where the instance is provisioned. Storage on Amazon Elastic Block Store (Amazon EBS) is billed separately.

- SageMaker managed MLflow – You can find the details for tracking and storage under On-demand pricing for SageMaker MLflow.

- Amazon S3 – Refer to the pricing for storage and requests.

- SageMaker Studio – There are no additional charges for using the SageMaker Studio UI. However, any attached Amazon EFS or EBS volumes, jobs or resources launched from SageMaker Studio applications, and JupyterLab applications will incur costs.

Clean up

Complete the following steps to clean up your resources:

- Delete the MLflow tracking server, because it continues to incur costs as long as it's running.

- Stop the EC2 instance to avoid incurring additional costs.

- Remove training data, model artifacts, and MLflow experiment data from your S3 buckets.

- Review and clean up any temporary IAM roles created for the EC2 instance.

- Delete your SageMaker Studio domain.
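You can script the cleanup steps with the AWS CLI. The following Python only assembles the commands and does not run them, so you can review them first. All resource names and IDs are placeholders. Keep these cautions in mind:

- Deleting a Studio domain generally requires deleting its apps, spaces, and user profiles first.
- IAM role cleanup is left out because role names vary by setup.
- An empty prefix would make the `s3 rm` command empty the whole bucket.

```python
import shlex
from typing import List


def cleanup_commands(
    tracking_server_name: str,
    instance_id: str,
    bucket: str,
    prefix: str,
    domain_id: str,
) -> List[List[str]]:
    """Return AWS CLI commands, in order, for tearing down the walkthrough's resources."""
    return [
        # The tracking server is billed while it runs, so remove it first.
        ["aws", "sagemaker", "delete-mlflow-tracking-server",
         "--tracking-server-name", tracking_server_name],
        # Stop (not terminate) the instance; terminate-instances would remove it entirely.
        ["aws", "ec2", "stop-instances", "--instance-ids", instance_id],
        # Recursively delete training data and artifacts under the prefix.
        ["aws", "s3", "rm", f"s3://{bucket}/{prefix}", "--recursive"],
        # Apps, spaces, and user profiles must be deleted before the domain.
        ["aws", "sagemaker", "delete-domain", "--domain-id", domain_id,
         "--retention-policy", "HomeEfsFileSystem=Delete"],
    ]


if __name__ == "__main__":
    for command in cleanup_commands("my-tracking-server", "i-0123456789abcdef0",
                                    "my-artifact-bucket", "abalone", "d-xxxxxxxxxxxx"):
        print(shlex.join(command))  # review before running each one
```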

Conclusion

AWS DLCs and SageMaker managed MLflow give ML teams a solution that balances governance and flexibility. This integration helps data scientists seamlessly track experiments and deploy models for inference, and helps administrators establish secure, scalable SageMaker managed MLflow environments. Organizations can now standardize their ML workflows using either AWS DLCs or DLAMIs while accommodating specialized requirements, ultimately accelerating the journey from model experimentation to business impact with greater control and efficiency.

In this post, we explored how to integrate custom training environments with SageMaker managed MLflow to achieve comprehensive experiment tracking and model governance. This approach keeps the flexibility of your preferred development environment while adding centralized tracking, model registration, and lineage tracking. The integration balances customization and standardization, so teams can innovate while following governance best practices.

Now that you understand how to track training in DLCs with SageMaker managed MLflow, you can implement this solution in your own environment. All code examples and implementation details from this post are available in our GitHub repository.

For more information, refer to the following resources:

About the authors

Gunjan Jain, an AWS Solutions Architect based in Southern California, specializes in guiding large financial services companies through their cloud transformation journeys. He facilitates cloud adoption, optimization, and implementation of Well-Architected best practices. Gunjan's professional focus extends to machine learning and cloud resilience, areas he is particularly enthusiastic about. Outside of work, he finds balance by spending time in nature.


Rahul Easwar is a Senior Product Manager at AWS, leading managed MLflow and Partner AI Apps within the SageMaker AIOps team. With over 15 years of experience spanning startups to enterprise technology, he draws on his entrepreneurial background and an MBA from Chicago Booth to build scalable ML platforms that simplify AI adoption for organizations worldwide. Connect with Rahul on LinkedIn to learn more about his work on ML platforms and enterprise AI solutions.
