We’ve witnessed remarkable advances in model capabilities as generative AI companies have invested in developing their offerings. Language models such as Anthropic’s Claude Opus 4 & Sonnet 4 and Amazon Nova on Amazon Bedrock can reason, write, and generate responses with increasing sophistication. But even as these models grow more powerful, they can only work with the information available to them.

No matter how impressive a model might be, it’s confined to the data it was trained on or what’s manually provided in its context window. It’s like having the world’s best analyst locked in a room with incomplete files: brilliant, but isolated from your organization’s most current and relevant information.

This isolation creates three critical challenges for enterprises using generative AI:

- Information silos trap valuable data behind custom APIs and proprietary interfaces

- Integration complexity requires building and maintaining bespoke connectors and glue code for every data source or tool provided to the language model

- Scalability bottlenecks appear as organizations attempt to connect more models to more systems and tools

Sound familiar? If you’re an AI-focused developer, technical decision-maker, or solutions architect working with Amazon Web Services (AWS) and language models, you’ve likely encountered these obstacles firsthand. Let’s explore how the Model Context Protocol (MCP) offers a path forward.

What is MCP?

MCP is an open standard that creates a universal language for AI systems to communicate with external data sources, tools, and services. Conceptually, MCP functions as a universal translator, enabling seamless dialogue between language models and the diverse systems where your valuable information resides.

Developed by Anthropic and released as an open source project, MCP addresses a fundamental challenge: how to provide AI models with consistent, secure access to the information they need, when they need it, regardless of where that information lives.

At its core, MCP implements a client-server architecture:

- MCP clients are AI applications like Anthropic’s Claude Desktop or custom solutions built on Amazon Bedrock that need access to external data

- MCP servers provide standardized access to specific data sources, whether that’s a GitHub repository, Slack workspace, or AWS service

- Communication between clients and servers follows a well-defined protocol, built on JSON-RPC 2.0 messages, that can run locally or remotely
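To make “well-defined protocol” concrete, here is a minimal sketch of the JSON-RPC 2.0 request envelopes an MCP client sends. It uses only the standard library. The `tools/list` and `tools/call` method names and the `name`/`arguments` parameters follow the MCP specification. The tool name `get_sales` and its arguments are hypothetical. The sketch also omits the `initialize` handshake that a real session starts with.

```python
import json
from itertools import count
from typing import Optional

# JSON-RPC 2.0 matches each response to its request by "id", so IDs must be unique
# per connection; a simple counter is enough for a sketch.
_request_ids = count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object, the message envelope MCP uses."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def tools_call(name: str, arguments: dict) -> dict:
    """Build an MCP "tools/call" request for one tool and its JSON arguments."""
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})


def encode_for_stdio(message: dict) -> bytes:
    """Frame a message for the local stdio transport: one JSON object per line."""
    # json.dumps without indent emits no raw newlines, as newline framing requires.
    return (json.dumps(message) + "\n").encode("utf-8")


# A client typically discovers tools first, then calls one of them.
print(encode_for_stdio(jsonrpc_request("tools/list")))
print(encode_for_stdio(tools_call("get_sales", {"region": "Northwest", "quarter": "Q1"})))
```

The same request objects travel unchanged over a remote HTTP transport. Only the framing differs, which is why the protocol can run locally or remotely.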

This architecture supports three essential primitives that form the foundation of MCP:

- Tools – Functions that models can call to retrieve information or perform actions

- Resources – Data that can be included in the model’s context, such as database records, images, or file contents

- Prompts – Templates that guide how models interact with specific tools or resources
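As a rough illustration, the three primitives can be modeled as plain data descriptors. The field names (`name`, `description`, `inputSchema`, `uri`, `mimeType`, `arguments`) follow the shapes the MCP specification uses in its `tools/list`, `resources/list`, and `prompts/list` results, but only a subset of optional fields is shown. The specific tool, resource, and prompt are hypothetical examples.

```python
from dataclasses import dataclass, field, asdict


@dataclass
class Tool:
    """A function the model can call; inputSchema is a JSON Schema for its arguments."""
    name: str
    description: str
    inputSchema: dict


@dataclass
class Resource:
    """Data the client can place in the model's context, addressed by URI."""
    uri: str
    name: str
    mimeType: str = "text/plain"


@dataclass
class PromptArgument:
    name: str
    required: bool = False


@dataclass
class Prompt:
    """A reusable template that steers how the model uses tools or resources."""
    name: str
    description: str
    arguments: list = field(default_factory=list)


# Hypothetical examples of each primitive.
sales_tool = Tool(
    name="get_sales",
    description="Return sales figures for a region and quarter",
    inputSchema={
        "type": "object",
        "properties": {"region": {"type": "string"}, "quarter": {"type": "string"}},
        "required": ["region", "quarter"],
    },
)
policy_doc = Resource(uri="file:///policies/travel.md", name="Travel policy", mimeType="text/markdown")
summary_prompt = Prompt(
    name="summarize_sales",
    description="Summarize quarterly sales for a region",
    arguments=[PromptArgument("region", required=True)],
)

# asdict() recursively converts nested dataclasses into the JSON-ready dicts a server
# would return when a client lists its tools, resources, and prompts.
catalog = {
    "tools": [asdict(sales_tool)],
    "resources": [asdict(policy_doc)],
    "prompts": [asdict(summary_prompt)],
}
```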

What makes MCP especially powerful is its ability to work across both local and remote implementations. You can run MCP servers directly on your development machine for testing, or deploy them as distributed services across your AWS infrastructure for enterprise-scale applications.

Solving the M×N integration problem

Before diving deeper into the AWS-specific implementation details, it’s worth understanding the fundamental integration challenge MCP solves.

Imagine you’re building AI applications that need to access multiple data sources in your organization. Without a standardized protocol, you face what we call the “M×N problem”: for M different AI applications connecting to N different data sources, you need to build and maintain M×N custom integrations.

This creates an integration matrix that quickly becomes unmanageable as your organization adds more AI applications and data sources. Each new system requires multiple custom integrations, and development teams duplicate efforts across projects. MCP transforms this M×N problem into a simpler M+N equation: with MCP, you build M clients and N servers, requiring only M+N implementations. Both approaches are shown in the following diagram.
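The counting argument is easy to check with a few numbers. This small sketch simply evaluates M×N against M+N for some example organization sizes. The sizes are arbitrary illustrations, not measurements.

```python
def integrations_without_mcp(m_apps: int, n_sources: int) -> int:
    """Point-to-point: every application needs its own connector to every source."""
    return m_apps * n_sources


def integrations_with_mcp(m_apps: int, n_sources: int) -> int:
    """Shared protocol: one MCP client per application plus one MCP server per source."""
    return m_apps + n_sources


for m, n in [(3, 4), (5, 10), (20, 50)]:
    print(f"M={m:>2}, N={n:>2}: {integrations_without_mcp(m, n):>4} custom integrations "
          f"vs {integrations_with_mcp(m, n):>3} MCP components")
```

The marginal cost is where the difference shows most clearly. In the 5×10 case, adding a sixth application means building 10 new connectors without MCP. With MCP, it means building one new client.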

This approach draws inspiration from other successful protocols that solved similar challenges:

- APIs standardized how web applications interact with the backend

- Language Server Protocol (LSP) standardizes how integrated development environments (IDEs) interact with language-specific tools for coding

In the same way that these protocols revolutionized their domains, MCP is poised to transform how AI applications interact with the diverse landscape of data sources in modern enterprises.

Why MCP matters for AWS users

For AWS customers, MCP represents a particularly compelling opportunity. AWS offers hundreds of services, each with its own APIs and data formats. By adopting MCP as a standardized protocol for AI interactions, you can:

- Streamline integration between Amazon Bedrock language models and AWS data services

- Use existing AWS security mechanisms such as AWS Identity and Access Management (IAM) for consistent access control

- Build composable, scalable AI solutions that align with AWS architectural best practices

MCP and the AWS service landscape

What makes MCP particularly powerful in the AWS context is how it can interface with the broader AWS service landscape. Imagine AI applications that can seamlessly access information from across the AWS services your organization already uses.

MCP servers act as consistent interfaces to these diverse data sources, providing language models with a unified access pattern regardless of the underlying AWS service architecture. This removes the need for custom integration code for each service. It also enables AI systems to work with your AWS resources in a way that respects your existing security boundaries and access controls.

In the remaining sections of this post, we explore how MCP works with AWS services, examine specific implementation examples, and provide guidance for technical decision-makers considering how to adopt MCP in their organizations.

How MCP works with AWS services, particularly Amazon Bedrock

Now that we’ve shown the fundamental value proposition of MCP, we dive into how it integrates with AWS services, with a special focus on Amazon Bedrock. This integration creates a powerful foundation for building context-aware AI applications that can securely access your organization’s data and tools.

Amazon Bedrock and language models

Amazon Bedrock represents the AWS strategic commitment to making foundation models (FMs) accessible, secure, and enterprise-ready. It’s a fully managed service that provides a unified API across multiple leading language models, including:

- Anthropic’s Claude

- Meta’s Llama

- Amazon Titan and Amazon Nova

What makes Amazon Bedrock particularly compelling for enterprise deployments is its integration with the broader AWS landscape. You can run FMs with the same security, compliance, and operational tools you already use for your AWS workloads. These include IAM for access control and Amazon CloudWatch for monitoring.

At the heart of Amazon Bedrock’s versatility is the Converse API, the interface that enables multi-turn conversations with language models. The Converse API includes built-in support for what AWS calls “tool use,” allowing models to:

- Recognize when they need information outside their training data

- Request that information from external systems using well-defined function calls

- Incorporate the returned data into their responses

This tool use capability in the Amazon Bedrock Converse API dovetails with MCP’s design, creating a natural integration point.
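One concrete piece of that integration point is schema translation. MCP tools and Converse API tools both describe their inputs with JSON Schema. As a result, a client can convert the tools an MCP server lists into the Converse `toolConfig` shape (`tools` → `toolSpec` with `name`, `description`, and `inputSchema.json`). The following is a minimal sketch under that assumption. It does not rename tools, although the Converse API restricts the characters allowed in tool names, so check the API reference before relying on MCP names as-is.

```python
def mcp_tool_to_bedrock_spec(tool: dict) -> dict:
    """Convert one MCP tool descriptor (from tools/list) into a Converse API toolSpec.

    Both sides use JSON Schema for inputs, so the schema passes through unchanged;
    Converse expects it wrapped under a "json" key.
    """
    spec = {"name": tool["name"], "inputSchema": {"json": tool["inputSchema"]}}
    # Only include a description when the MCP tool provides a non-empty one.
    if tool.get("description"):
        spec["description"] = tool["description"]
    return {"toolSpec": spec}


def build_tool_config(mcp_tools: list) -> dict:
    """Build the toolConfig argument for a Converse request from MCP tool descriptors."""
    return {"tools": [mcp_tool_to_bedrock_spec(t) for t in mcp_tools]}
```

With boto3, the result would be passed as `boto3.client("bedrock-runtime").converse(modelId=..., messages=..., toolConfig=build_tool_config(tools))`.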

MCP and Amazon Bedrock integration architecture

Integrating MCP with Amazon Bedrock involves creating a bridge between the model’s ability to request information (through the Converse API) and MCP’s standardized protocol for accessing external systems.

Integration flow walkthrough

To help you understand how MCP and Amazon Bedrock work together in practice, we walk through a typical interaction flow, step by step:

- The user initiates a query through your application interface, such as “What were our Q1 sales figures for the Northwest region?”

- Your application forwards the query to Amazon Bedrock through the Converse API.

- Amazon Bedrock processes the query and determines that it needs financial data that isn’t in its training data.

- Amazon Bedrock returns a toolUse message, requesting access to a specific tool.

- Your MCP client application receives this toolUse message and translates it into an MCP protocol tool call.

- The MCP client routes the request to the appropriate MCP server (in this case, a server connected to your financial database).

- The MCP server executes the tool, retrieving the requested data from your systems.

- The tool results are returned through the MCP protocol to your client application.

- Your application sends the results back to Amazon Bedrock as a toolResult message.

- Amazon Bedrock generates a final response incorporating the tool results.

- Your application returns the final response to the user.
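The steps above form a loop: call Converse, execute any requested tools, return the results, and repeat until the model stops asking for tools. The following is a minimal sketch of that loop. It assumes the Converse response shape (`output.message`, `stopReason == "tool_use"`, `toolUse` blocks with `toolUseId`/`name`/`input`, and `toolResult` blocks sent back in a user-role message). `converse` is injected so the loop can be tested offline; in production, it would be the boto3 `bedrock-runtime` client’s `converse` method. `call_mcp_tool` is a hypothetical helper that sends an MCP `tools/call` request and returns the tool’s text output.

```python
from typing import Callable


def run_conversation(
    converse: Callable[..., dict],
    call_mcp_tool: Callable[[str, dict], str],
    model_id: str,
    tool_config: dict,
    question: str,
    max_turns: int = 5,
) -> str:
    """Drive a Converse tool-use loop, executing each toolUse request through MCP."""
    messages = [{"role": "user", "content": [{"text": question}]}]
    for _ in range(max_turns):
        response = converse(modelId=model_id, messages=messages, toolConfig=tool_config)
        assistant_message = response["output"]["message"]
        messages.append(assistant_message)

        if response["stopReason"] != "tool_use":
            # Final answer: concatenate the text blocks the model returned.
            return "".join(block.get("text", "") for block in assistant_message["content"])

        # The model may request several tools in one turn; answer every toolUse block.
        results = []
        for block in assistant_message["content"]:
            if "toolUse" not in block:
                continue
            tool_use = block["toolUse"]
            try:
                output = call_mcp_tool(tool_use["name"], tool_use["input"])
                status = "success"
            except Exception as exc:  # report tool failures to the model instead of crashing
                output, status = f"Tool error: {exc}", "error"
            results.append({
                "toolResult": {
                    "toolUseId": tool_use["toolUseId"],
                    "content": [{"text": output}],
                    "status": status,
                }
            })
        # Tool results go back to the model as a user-role message.
        messages.append({"role": "user", "content": results})
    raise RuntimeError("Model did not produce a final answer within max_turns")
```

The `max_turns` cap is a design choice that keeps a model from looping on tool calls indefinitely. Returning errors as `toolResult` blocks with status `error` lets the model recover or explain the failure to the user.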

This entire process, illustrated in the following diagram, happens in seconds, giving users the impression of a seamless conversation with an AI that has direct access to their organization’s data. Behind the scenes, MCP is handling the complex work of securely routing requests to the right tools and data sources.
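When a client is connected to several MCP servers, the routing step comes down to knowing which server advertised which tool. Here is a minimal sketch of such a routing table. Each server is represented by a hypothetical `call(name, arguments)` callable that, in a real client, would wrap an MCP session connected to that server.

```python
class ToolRouter:
    """Route tool calls to the MCP server that advertised the tool."""

    def __init__(self):
        self._routes = {}  # tool name -> (server name, call function)

    def register_server(self, server_name, tool_names, call):
        """Record every tool a server listed, rejecting ambiguous duplicates."""
        for tool in tool_names:
            if tool in self._routes:
                # Two servers exposing the same tool name would make routing ambiguous.
                raise ValueError(f"Tool {tool!r} already provided by {self._routes[tool][0]!r}")
            self._routes[tool] = (server_name, call)

    def call_tool(self, name, arguments):
        """Forward a tool call to the server that owns the tool."""
        if name not in self._routes:
            raise KeyError(f"No MCP server provides tool {name!r}")
        _server, call = self._routes[name]
        return call(name, arguments)
```

The `call_tool` method has the `(name, arguments)` shape that a tool-use loop needs. Rejecting duplicate tool names at registration time is one simple policy. Another is to prefix tool names with the server name.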

In the next section, we explore a practical implementation example that shows how to connect an MCP server to Amazon Bedrock Knowledge Bases, providing a blueprint for your own implementations.

Practical implementation example: Amazon Bedrock Knowledge Bases integration

Enterprise knowledge bases are one of the most valuable applications of MCP on AWS. Now, we explore a concrete implementation of MCP that connects language models to Amazon Bedrock Knowledge Bases. The code for the MCP server can be found in the AWS Labs MCP code repository, and the client code is in the samples directory of the same AWS Labs MCP repository on GitHub. This example brings to life the “universal translator” concept we introduced earlier, demonstrating how MCP can transform the way AI systems interact with enterprise knowledge repositories.

Understanding the challenge

Enterprise knowledge bases contain vast repositories of information, from documentation and policies to technical guides and product specifications. Traditional search approaches are often inadequate when users ask natural language questions, because they fail to understand context or identify the most relevant content.

Amazon Bedrock Knowledge Bases provide vector search capabilities that improve upon traditional keyword search, but even this approach has limitations:

- Manual filter configuration requires predefined knowledge of metadata structures

- Query-result mismatch occurs when users don’t use the exact terminology in the knowledge base

- Relevance challenges arise when similar documents compete for attention

- Context switching between searching and reasoning disrupts the user experience

The MCP server we explore addresses these challenges by creating an intelligent layer between language models and knowledge bases.

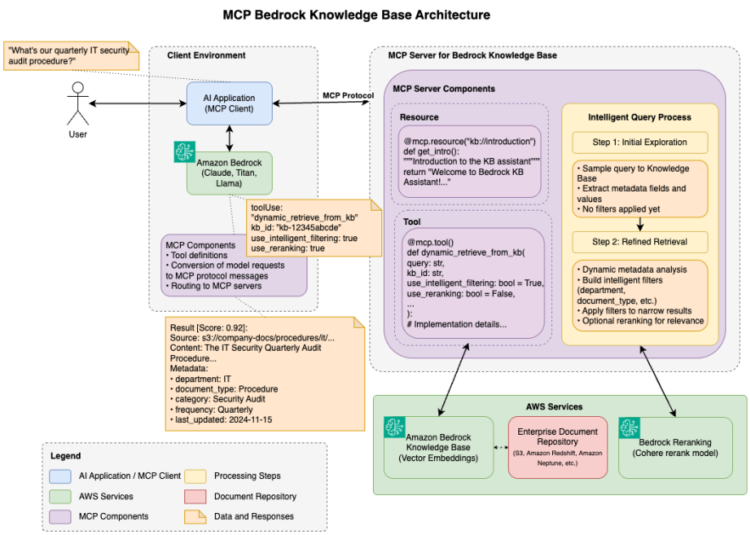

Architecture overview

At a high level, our MCP server for Amazon Bedrock Knowledge Bases follows a clean, well-organized architecture that builds upon the client-server pattern we outlined previously. The server exposes two key interfaces to language models:

- A knowledge bases resource that provides discovery capabilities for available knowledge bases

- A query tool that enables dynamic searching across these knowledge bases

Remember the M×N integration problem we discussed earlier? This implementation provides a tangible example of how MCP solves it, creating a standardized interface between a large language model and your Amazon Bedrock Knowledge Base repositories.

Knowledge base discovery resource

The server starts with a resource that enables language models to discover available knowledge bases.

This resource serves as both documentation and a discovery mechanism that language models can use to identify available knowledge bases before querying them.
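The following sketch shows what a discovery resource might assemble. It is not the AWS Labs server’s code. It builds a JSON catalog of knowledge bases and their data sources from two injected listing functions. These are assumed to return dicts shaped like the bedrock-agent `ListKnowledgeBases` (`knowledgeBaseSummaries`) and `ListDataSources` (`dataSourceSummaries`) responses. Pagination is omitted.

```python
import json
from typing import Callable


def build_knowledge_base_catalog(
    list_knowledge_bases: Callable[[], dict],
    list_data_sources: Callable[[str], dict],
) -> str:
    """Assemble a JSON catalog of knowledge bases and their data sources.

    The callables stand in for bedrock-agent ListKnowledgeBases / ListDataSources
    calls; only the first page of each response is read in this sketch.
    """
    catalog = {}
    for kb in list_knowledge_bases()["knowledgeBaseSummaries"]:
        sources = list_data_sources(kb["knowledgeBaseId"])["dataSourceSummaries"]
        catalog[kb["knowledgeBaseId"]] = {
            "name": kb["name"],
            "description": kb.get("description", ""),
            "data_sources": [
                {"id": ds["dataSourceId"], "name": ds["name"]} for ds in sources
            ],
        }
    return json.dumps(catalog, indent=2)
```

With boto3, the listing functions could be `lambda: agent.list_knowledge_bases()` and `lambda kb_id: agent.list_data_sources(knowledgeBaseId=kb_id)`, where `agent = boto3.client("bedrock-agent")`. An MCP server would then return this JSON as the contents of a resource URI of its choosing. The model can read the data source IDs it needs for filtered queries directly from the catalog.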

Querying knowledge bases with the MCP tool

The core functionality of this MCP server resides in its QueryKnowledgeBases tool.

What makes this tool powerful is its flexibility in querying knowledge bases with natural language. It supports several key features:

- Configurable result sizes – Adjust the number of results based on whether you need focused or comprehensive information

- Optional reranking – Improve relevance using reranking models available through Amazon Bedrock (such as those from Amazon or Cohere)

- Data source filtering – Target specific sections of the knowledge base when needed

Reranking is disabled by default in this implementation but can be quickly enabled through environment variables or direct parameter configuration.
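To show how those three features might map onto a single retrieval call, here is a sketch that translates tool-style arguments into keyword arguments for the bedrock-agent-runtime `Retrieve` API. The parameter names are modeled loosely on the features listed above, not copied from the AWS Labs server. The environment variable name `KB_RERANKING_ENABLED` is hypothetical. The `x-amz-bedrock-kb-data-source-id` filter key and the `rerankingConfiguration` shape are assumptions to verify against the Retrieve API reference.

```python
import os
from typing import Optional


def build_retrieve_request(
    query: str,
    knowledge_base_id: str,
    number_of_results: int = 10,
    reranking: Optional[bool] = None,
    reranking_model_arn: Optional[str] = None,
    data_source_ids: Optional[list] = None,
) -> dict:
    """Translate query-tool arguments into Retrieve API keyword arguments (sketch)."""
    if reranking is None:
        # Off by default; this environment variable name is a hypothetical example.
        reranking = os.environ.get("KB_RERANKING_ENABLED", "false").lower() == "true"

    vector_config = {"numberOfResults": number_of_results}

    if data_source_ids:
        # Restrict results to specific data sources via the built-in metadata key (assumed).
        vector_config["filter"] = {
            "in": {"key": "x-amz-bedrock-kb-data-source-id", "value": data_source_ids}
        }

    if reranking:
        if not reranking_model_arn:
            raise ValueError("reranking requires a reranking model ARN")
        vector_config["rerankingConfiguration"] = {
            "type": "BEDROCK_RERANKING_MODEL",
            "bedrockRerankingConfiguration": {
                "modelConfiguration": {"modelArn": reranking_model_arn},
            },
        }

    return {
        "knowledgeBaseId": knowledge_base_id,
        "retrievalQuery": {"text": query},
        "retrievalConfiguration": {"vectorSearchConfiguration": vector_config},
    }
```

The request would be sent with `boto3.client("bedrock-agent-runtime").retrieve(**build_retrieve_request(...))`. With this design, an explicit parameter always overrides the environment default, mirroring the “environment variables or direct parameter configuration” choice described above.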

Enhanced relevance with reranking

A notable feature of this implementation is the ability to rerank search results using reranking models available through Amazon Bedrock. This capability allows the system to rescore search results based on deeper semantic understanding.

Reranking is particularly valuable for queries where semantic similarity might not be enough to determine the most relevant content. For example, when answering a specific question, the most relevant document isn’t necessarily the one with the most keyword matches, but the one that directly addresses the question being asked.
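Reranking can be thought of as a second pass over retrieved passages. This generic sketch rescores each passage with a query-aware scorer and keeps the best `top_k`. The `score(query, passage)` callable stands in for a reranking model. The toy scorer in the test below is only a placeholder and is not a real model.

```python
from typing import Callable


def rerank(
    query: str,
    results: list,
    score: Callable[[str, str], float],
    top_k: int = 5,
) -> list:
    """Rescore retrieved passages with a query-aware scorer and keep the best top_k.

    Each result is a dict with "text" and a vector-search "score"; the original
    score is preserved as "vector_score" so the two rankings can be compared.
    """
    rescored = [
        {**r, "vector_score": r["score"], "score": score(query, r["text"])}
        for r in results
    ]
    # sorted() is stable, so passages with equal rerank scores keep their retrieval order.
    return sorted(rescored, key=lambda r: r["score"], reverse=True)[:top_k]
```

Keeping `vector_score` alongside the new score makes it easy to see when reranking promoted a passage. For example, reranking can lift a passage that answers the question above one that merely repeats its keywords.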

Complete interaction flow

This section walks through a complete interaction flow to show how all these components work together:

- The user asks a question to a language model such as Anthropic’s Claude through an application.

- The language model recognizes that it needs to access the knowledge base and calls the MCP tool.

- The MCP server processes the request by querying the knowledge base with the specified parameters.

- The MCP server returns formatted results to the language model, including content, location, and relevance scores.

- The language model incorporates these results into its response to the user.
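The formatting step in this flow might look something like the following sketch. It condenses a `Retrieve` response into the three fields the model needs: content, location, and score. The response is assumed to follow the bedrock-agent-runtime shape, with a `retrievalResults` list whose items have `content.text`, `location`, and `score`. Only S3 locations are simplified to a URI here.

```python
import json


def _source_uri(location: dict):
    # S3-backed sources report {"type": "S3", "s3Location": {"uri": ...}}; other
    # source types use different keys, so fall back to the raw location object.
    return location.get("s3Location", {}).get("uri", location)


def format_retrieval_results(response: dict) -> str:
    """Turn a Retrieve API response into compact JSON for the model."""
    formatted = [
        {
            "content": result["content"]["text"],
            "location": _source_uri(result.get("location", {})),
            "score": result.get("score"),
        }
        for result in response.get("retrievalResults", [])
    ]
    return json.dumps(formatted)
```

Returning compact JSON rather than the raw API response keeps the tool result small. As a result, more of the model’s context window is available for the passages themselves.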

This interaction, illustrated in the following diagram, demonstrates the seamless fusion of language model capabilities with enterprise knowledge, enabled by the MCP protocol. The user doesn’t need to specify complex search parameters or know the structure of the knowledge base; the integration layer handles these details automatically.

Looking ahead: The MCP journey continues

As we’ve explored throughout this post, the Model Context Protocol provides a powerful framework for connecting language models to your enterprise data and tools on AWS. However, this is just the beginning of the journey.

The MCP landscape is evolving rapidly, with new capabilities and implementations emerging regularly. In future posts in this series, we’ll dive deeper into advanced MCP architectures and use cases, with a particular focus on remote MCP implementation.

The introduction of the new Streamable HTTP transport layer represents a significant advancement for MCP, enabling truly enterprise-scale deployments with features such as:

- Stateless server options for simplified scaling

- Session ID management for request routing

- Robust authentication and authorization mechanisms for secure access control

- Horizontal scaling across server nodes

- Enhanced resilience and fault tolerance
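To illustrate session ID management for request routing: with the Streamable HTTP transport, a server can assign a session ID in an `Mcp-Session-Id` response header during initialization, and clients include it on later requests. One way a load balancer can use that header is session affinity, which means hashing the ID to pick a node. The sketch below assumes that approach. The hashing scheme is an illustration, not something the MCP specification prescribes. It also assumes header names have already been normalized to the casing shown.

```python
import hashlib
import uuid
from typing import Optional

SESSION_HEADER = "Mcp-Session-Id"


def new_session_id() -> str:
    """Server side: mint an opaque session ID to return in the Mcp-Session-Id header."""
    return uuid.uuid4().hex


def pick_node(headers: dict, nodes: list) -> str:
    """Load balancer side: send every request in a session to the same node.

    Requests without a session header (such as the initial initialize request)
    can go anywhere; here they go to the first node for simplicity.
    """
    session_id: Optional[str] = headers.get(SESSION_HEADER)
    if session_id is None:
        return nodes[0]
    digest = hashlib.sha256(session_id.encode("utf-8")).digest()
    return nodes[int.from_bytes(digest[:8], "big") % len(nodes)]
```

Simple modulo hashing reassigns most sessions whenever the node count changes. Consistent hashing, or stateless servers that keep session state in a shared store, avoid that reshuffle when scaling horizontally.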

These capabilities will be essential as organizations move from proof-of-concept implementations to production-grade MCP deployments that serve multiple teams and use cases.

We invite you to follow this blog post series as we continue to explore how MCP and AWS services can work together to create more powerful, context-aware AI applications for your organization.

Conclusion

As language models continue to transform how we interact with technology, the ability to connect these models to enterprise data and systems becomes increasingly critical. The Model Context Protocol (MCP) offers a standardized, secure, and scalable approach to integration.

Through MCP, AWS customers can:

- Establish a standardized protocol for AI-data connections

- Reduce development overhead and maintenance costs

- Enforce consistent security and governance policies

- Create more powerful, context-aware AI experiences

The Amazon Bedrock Knowledge Bases implementation we explored demonstrates how MCP can transform simple retrieval into intelligent discovery, adding value far beyond what either component could deliver independently.

Getting started

Ready to begin your MCP journey on AWS? Here are some resources to help you get started:

Learning resources:

Implementation steps:

- Identify a high-value use case where AI needs access to enterprise data

- Select the appropriate MCP servers for your data sources

- Set up a development environment with local MCP implementations

- Integrate with Amazon Bedrock using the patterns described in this post

- Deploy to production with appropriate security and scaling considerations

Remember that MCP supports a “start small, scale incrementally” approach. You can begin with a single server connecting to one data source, then expand your implementation as you validate the value and establish patterns for your organization.

We encourage you to try MCP with AWS services today. Start with a simple implementation, perhaps connecting a language model to your documentation or code repositories, and experience firsthand the power of context-aware AI.

Share your experiences, challenges, and successes with the community. The open source nature of MCP means that your contributions, whether code, use cases, or feedback, can help shape the future of this important protocol.

In a world where AI capabilities are advancing rapidly, the difference between good and great implementations often comes down to context. With MCP and AWS, you have the tools to make sure your AI systems have the right context at the right time, unlocking their full potential for your organization.

This blog post is part of a series exploring the Model Context Protocol (MCP) on AWS. In our next installment, we’ll explore the world of agentic AI, demonstrating how to build autonomous agents using the open source Strands Agents SDK with MCP to create intelligent systems that can reason, plan, and execute complex multi-step workflows. We’ll also explore advanced implementation patterns and remote MCP architectures, and uncover additional use cases for MCP.

About the authors

Aditya Addepalli is a Delivery Consultant at AWS, where he works to lead, architect, and build applications directly with customers. With a strong passion for Applied AI, he builds bespoke solutions and contributes to the ecosystem while consistently keeping himself at the edge of technology. Outside of work, you can find him meeting new people, working out, playing video games and basketball, or feeding his curiosity through personal projects.

Elie Schoppik leads live education at Anthropic as their Head of Technical Training. He has spent over a decade in technical education, working with multiple coding schools and starting one of his own. With a background in consulting, education, and software engineering, Elie brings a practical approach to teaching Software Engineering and AI. He has shared his insights at a variety of technical conferences as well as universities including MIT, Columbia, Wharton, and UC Berkeley.

Jawhny Cooke is a Senior Anthropic Specialist Solutions Architect for Generative AI at AWS. He specializes in integrating and deploying Anthropic models on AWS infrastructure. He partners with customers and AI providers to implement production-grade generative AI solutions through Amazon Bedrock, offering expert guidance on architecture design and system implementation to maximize the potential of these advanced models.

Kenton Blacutt is an AI Consultant within the GenAI Innovation Center. He works hands-on with customers, helping them solve real-world business problems with cutting-edge AWS technologies, especially Amazon Q and Amazon Bedrock. In his free time, he likes to travel, experiment with new AI techniques, and run an occasional marathon.

Mani Khanuja is a Principal Generative AI Specialist Solutions Architect, author of the book Applied Machine Learning and High-Performance Computing on AWS, and a member of the Board of Directors of the Women in Manufacturing Education Foundation. She leads machine learning projects in various domains such as computer vision, natural language processing, and generative AI. She speaks at internal and external conferences such as AWS re:Invent, Women in Manufacturing West, YouTube webinars, and GHC 23. In her free time, she likes to go for long runs along the beach.

Nicolai van der Smagt is a Senior Specialist Solutions Architect for Generative AI at AWS, focusing on third-party model integration and deployment. He collaborates with AWS’ biggest AI partners to bring their models to Amazon Bedrock, while helping customers architect and implement production-ready generative AI solutions with these models.