OpenAI recently introduced support for Structured Outputs in its latest gpt-4o-2024-08-06 models. Structured outputs in relation to large language models (LLMs) are nothing new: developers have either used various prompt engineering techniques, or third party tools.

In this article we’ll explain what structured outputs are, how they work, and how you can apply them in your own LLM-based applications. Although OpenAI’s announcement makes it quite easy to implement using their APIs (as we’ll demonstrate here), you may want to instead opt for the open source Outlines package (maintained by the lovely folks over at dottxt), since it can be applied to both the self-hosted open-weight models (e.g. Mistral and LLaMA), as well as the proprietary APIs (Disclaimer: due to this issue Outlines does not as of this writing support structured JSON generation via OpenAI APIs; but that will change soon!).

If the RedPajama dataset is any indication, the overwhelming majority of pre-training data is human text. Therefore “natural language” is the native domain of LLMs, both in the input and in the output. When we build applications, however, we would like to use machine-readable formal structures or schemas to encapsulate our data input/output. This way we build robustness and determinism into our applications.

Structured Outputs is a mechanism by which we enforce a pre-defined schema on the LLM output. This typically means that we enforce a JSON schema, however it is not limited to JSON only: we could in principle enforce XML, Markdown, or a completely custom-made schema. The benefits of Structured Outputs are two-fold:

- Simpler prompt design: we need not be overly verbose when specifying what the output should look like

- Deterministic names and types: we can guarantee to obtain, for example, an attribute age with a Number JSON type in the LLM response
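
To make the second benefit concrete, here is a minimal sketch of the kind of defensive check that application code tends to need when the output format is not enforced. The helper has_numeric_age and the three sample responses are hypothetical and only serve as an illustration; with an enforced schema, only the last shape should ever reach the application.

```python
import json

def has_numeric_age(raw: str) -> bool:
    """Return True if raw is a JSON object whose "age" is a JSON number."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return False  # free-form text is not even valid JSON
    if not isinstance(data, dict):
        return False
    age = data.get("age")
    # bool is a subclass of int in Python, but true/false are not JSON numbers
    return isinstance(age, (int, float)) and not isinstance(age, bool)

# Hypothetical model responses, for illustration only
free_form = "Sure! The person is 39 years old."
wrong_type = '{"age": "thirty-nine"}'
structured = '{"age": 39}'

for raw in (free_form, wrong_type, structured):
    print(has_numeric_age(raw))
```

Only the third response passes the check; the first two are the failure modes that prompt engineering alone cannot rule out.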

For this example, we’ll use the first sentence from Sam Altman’s Wikipedia entry…

Samuel Harris Altman (born April 22, 1985) is an American entrepreneur and investor best known as the CEO of OpenAI since 2019 (he was briefly fired and reinstated in November 2023).

…and we are going to use the latest GPT-4o checkpoint as a named-entity recognition (NER) system. We will enforce the following JSON schema:

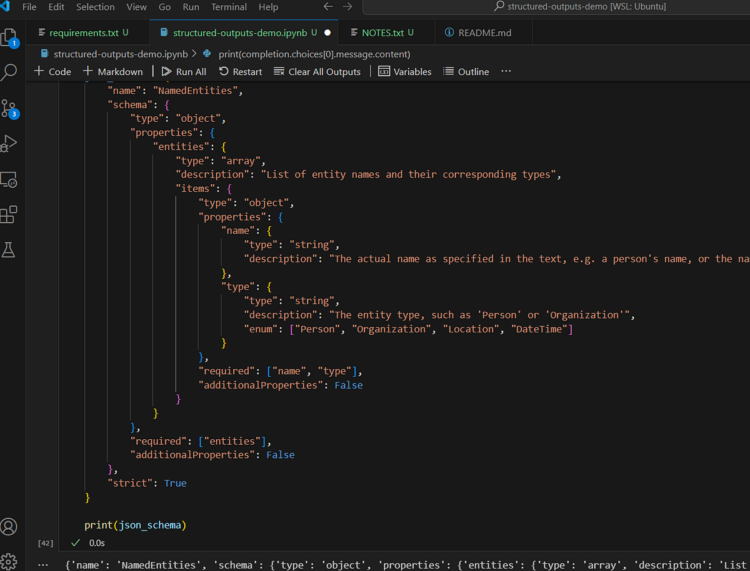

    json_schema = {
        "name": "NamedEntities",
        "schema": {
            "type": "object",
            "properties": {
                "entities": {
                    "type": "array",
                    "description": "List of entity names and their corresponding types",
                    "items": {
                        "type": "object",
                        "properties": {
                            "name": {
                                "type": "string",
                                "description": "The actual name as specified in the text, e.g. a person's name, or the name of the country"
                            },
                            "type": {
                                "type": "string",
                                "description": "The entity type, such as 'Person' or 'Organization'",
                                "enum": ["Person", "Organization", "Location", "DateTime"]
                            }
                        },
                        "required": ["name", "type"],
                        "additionalProperties": False
                    }
                }
            },
            "required": ["entities"],
            "additionalProperties": False
        },
        "strict": True
    }

In essence, our LLM response should contain a NamedEntities object, which consists of an array of entities, each one containing a name and type. There are a few things to note here. We can for example enforce the Enum type, which is very useful in NER since we can constrain the output to a fixed set of entity types. We must specify all the fields in the required array; however, we can also emulate “optional” fields by setting the type to e.g. ["string", "null"].
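
As a rough sketch of the “optional” field trick, the item schema below adds a nullable alias field next to name. The alias field, the is_valid_entity helper and the sample objects are hypothetical and not part of the schema used in this article; the small validator only covers the handful of JSON Schema keywords that appear here.

```python
# Hypothetical item schema: "alias" must always be present, but may be null.
entity_item = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "alias": {"type": ["string", "null"]},
    },
    "required": ["name", "alias"],
    "additionalProperties": False,
}

# Mapping from the JSON type names used above to Python types
JSON_TYPES = {"string": str, "null": type(None)}

def matches(value, schema_type):
    """Check a value against a single type name or a list of type names."""
    allowed = schema_type if isinstance(schema_type, list) else [schema_type]
    return any(isinstance(value, JSON_TYPES[t]) for t in allowed)

def is_valid_entity(obj, schema=entity_item):
    """Toy validator: required keys, no extra keys, and matching types."""
    if not isinstance(obj, dict):
        return False
    props = schema["properties"]
    if set(schema["required"]) - set(obj):
        return False  # a required key is missing
    if set(obj) - set(props):
        return False  # additionalProperties is False
    return all(matches(obj[key], props[key]["type"]) for key in obj)

print(is_valid_entity({"name": "OpenAI", "alias": None}))
print(is_valid_entity({"name": "OpenAI"}))
```

The first object is accepted because null is an allowed value for alias; the second is rejected because the key itself is missing, which is the difference between a nullable field and a truly optional one.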

We can now pass our schema, together with the data and the instructions, to the API. We need to populate the response_format argument with a dict where we set type to "json_schema" and then supply the corresponding schema.

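    from openai import OpenAI

    client = OpenAI()  # reads the OPENAI_API_KEY environment variable

    # The input text: the Wikipedia sentence quoted above
    s = (
        "Samuel Harris Altman (born April 22, 1985) is an American entrepreneur "
        "and investor best known as the CEO of OpenAI since 2019 (he was briefly "
        "fired and reinstated in November 2023)."
    )

    completion = client.beta.chat.completions.parse(
        model="gpt-4o-2024-08-06",
        messages=[
            {
                "role": "system",
                "content": """You are a Named Entity Recognition (NER) assistant.
                    Your job is to identify and return all entity names and their
                    types for a given piece of text. You are to strictly conform
                    only to the following entity types: Person, Location, Organization
                    and DateTime. If uncertain about entity type, please ignore it.
                    Be careful of certain acronyms, such as role titles "CEO", "CTO",
                    "VP", etc - these are to be ignored.""",
            },
            {
                "role": "user",
                "content": s
            }
        ],
        # Enforce the schema defined earlier on the model output
        response_format={
            "type": "json_schema",
            "json_schema": json_schema,
        }
    )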

The output should look something like this:

    {'entities': [{'name': 'Samuel Harris Altman', 'type': 'Person'},
                  {'name': 'April 22, 1985', 'type': 'DateTime'},
                  {'name': 'American', 'type': 'Location'},
                  {'name': 'OpenAI', 'type': 'Organization'},
                  {'name': '2019', 'type': 'DateTime'},
                  {'name': 'November 2023', 'type': 'DateTime'}]}
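
Because the shape of the response is guaranteed, downstream code can index straight into it. The sketch below decodes the JSON text and groups the entity names by type. To stay self-contained it uses a hard-coded content string that mirrors the output above; in a real run that string would presumably come from completion.choices[0].message.content. The group_by_type helper is a hypothetical name.

```python
import json
from collections import defaultdict

# Stand-in for the JSON text returned by the API (mirrors the output above)
content = """
{"entities": [
    {"name": "Samuel Harris Altman", "type": "Person"},
    {"name": "April 22, 1985", "type": "DateTime"},
    {"name": "American", "type": "Location"},
    {"name": "OpenAI", "type": "Organization"},
    {"name": "2019", "type": "DateTime"},
    {"name": "November 2023", "type": "DateTime"}
]}
"""

def group_by_type(raw: str) -> dict:
    """Decode the response and collect entity names under their type."""
    grouped = defaultdict(list)
    for entity in json.loads(raw)["entities"]:
        # "name" and "type" are both required by the schema, so no .get() needed
        grouped[entity["type"]].append(entity["name"])
    return dict(grouped)

grouped = group_by_type(content)
print(grouped["DateTime"])  # ['April 22, 1985', '2019', 'November 2023']
```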

The full source code used in this article is available here.

The magic is in the combination of constrained sampling and context-free grammar (CFG). We mentioned previously that the overwhelming majority of pre-training data is “natural language”. Statistically this means that for every decoding/sampling step, there is a non-negligible probability of sampling some arbitrary token from the learned vocabulary (and in modern LLMs, vocabularies typically stretch across 40 000+ tokens). However, when dealing with formal schemas, we would really like to rapidly eliminate all improbable tokens.

In the previous example, if we have already generated…

{ 'entities': [ {'name': 'Samuel Harris Altman',

…then ideally we would like to place a very high logit bias on the 'typ token in the next decoding step, and very low probability on all the other tokens in the vocabulary.
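
A toy version of this step can be sketched in a few lines. The example below applies a hard mask, i.e. the extreme case of a logit bias, to a four-token vocabulary before the softmax. The vocabulary, the logit values and the constrained_probs helper are all made up for illustration, and this is not a description of how OpenAI implements the feature internally.

```python
import math

def constrained_probs(logits, allowed):
    """Softmax over logits after masking every token outside `allowed`."""
    usable = [tok for tok in logits if tok in allowed]
    if not usable:
        raise ValueError("no allowed token is present in the vocabulary")
    # Disallowed tokens get a logit of -inf, so their probability becomes 0
    masked = {tok: (score if tok in allowed else -math.inf)
              for tok, score in logits.items()}
    top = max(masked[tok] for tok in usable)  # subtract max for stability
    weights = {tok: math.exp(score - top) for tok, score in masked.items()}
    total = sum(weights.values())
    return {tok: w / total for tok, w in weights.items()}

# Made-up logits: unconstrained, the model would prefer "the" or "Sam"
logits = {"'typ": 1.2, "Sam": 2.5, "the": 3.1, "}": 0.4}

probs = constrained_probs(logits, allowed={"'typ"})
print(probs["'typ"], probs["the"])  # 1.0 0.0
```

Even though the raw logits favour other tokens, the mask leaves the schema-conforming token as the only one that can be sampled.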

This is in essence what happens. When we supply the schema, it gets converted into a formal grammar, or CFG, which serves to guide the logit bias values during the decoding step. CFG is one of those old-school computer science and natural language processing (NLP) mechanisms that is making a comeback. A very nice introduction to CFG was actually presented in this StackOverflow answer, but essentially it is a way of describing transformation rules for a collection of symbols.
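
To give a feel for how a grammar can drive that decision, here is a deliberately tiny sketch. The grammar below describes a single entity object, with the STRING rule reduced to two fixed alternatives so that the language stays finite; the rule names and the expand and allowed_next helpers are hypothetical. A real implementation would track the parser state incrementally rather than enumerating every sentence.

```python
from itertools import product

# Toy grammar: upper-case keys are non-terminals, everything else is a token
GRAMMAR = {
    "ENTITY": [["{", "NAME_FIELD", ",", "TYPE_FIELD", "}"]],
    "NAME_FIELD": [['"name"', ":", "STRING"]],
    "TYPE_FIELD": [['"type"', ":", "TYPE"]],
    "TYPE": [['"Person"'], ['"Organization"'], ['"Location"'], ['"DateTime"']],
    "STRING": [['"OpenAI"'], ['"Sam Altman"']],  # simplification: fixed strings
}

def expand(symbol):
    """Return every token sequence that `symbol` can be rewritten into."""
    if symbol not in GRAMMAR:
        return [[symbol]]  # terminal token
    sentences = []
    for rule in GRAMMAR[symbol]:
        parts = [expand(s) for s in rule]
        for combo in product(*parts):
            sentences.append([tok for part in combo for tok in part])
    return sentences

def allowed_next(prefix, start="ENTITY"):
    """Tokens that can legally follow `prefix` according to the grammar."""
    n = len(prefix)
    return {s[n] for s in expand(start) if s[:n] == prefix and len(s) > n}

prefix = ["{", '"name"', ":", '"OpenAI"', ","]
print(allowed_next(prefix))  # {'"type"'}
```

The set returned by allowed_next is exactly what a constrained sampler would keep unmasked at that step; once the prefix also contains the "type" key and the colon, the allowed set becomes the four enum values.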

Structured Outputs are nothing new, but are certainly becoming top-of-mind with proprietary APIs and LLM services. They provide a bridge between the erratic and unpredictable “natural language” domain of LLMs, and the deterministic and structured domain of software engineering. Structured Outputs are essentially a must for anyone designing complex LLM applications where LLM outputs must be shared or “presented” in various components. While API-native support has finally arrived, developers should also consider using libraries such as Outlines, as they provide an LLM/API-agnostic way of dealing with structured output.