Generative AI is revolutionizing industries by streamlining operations and enabling innovation. While textual chat interactions with GenAI remain popular, real-world applications often depend on structured data for APIs, databases, data-driven workloads, and rich user interfaces. Structured data can also enhance conversational AI, enabling more reliable and actionable outputs. A key challenge is that LLMs (Large Language Models) are inherently unpredictable, which makes it difficult for them to produce consistently structured outputs like JSON. This challenge arises because their training data mainly consists of unstructured text, such as articles, books, and websites, with relatively few examples of structured formats. As a result, LLMs can struggle with precision when generating JSON outputs, which is crucial for seamless integration into existing APIs and databases. Models vary in their ability to support structured responses, including recognizing data types and managing complex hierarchies effectively. These capabilities can make a difference when choosing the right model.

This blog demonstrates how Amazon Bedrock, a managed service for securely accessing top AI models, can help address these challenges by showcasing two alternative options:

- Prompt Engineering: A straightforward approach to shaping structured outputs using well-crafted prompts.

- Tool Use with the Bedrock Converse API: An advanced method that enables better control, consistency, and native JSON schema integration.

We will use a customer review analysis example to demonstrate how Bedrock generates structured outputs, such as sentiment scores, with simplified Python code.

Building a prompt engineering solution

This section demonstrates how to use prompt engineering effectively to generate structured outputs using Amazon Bedrock. Prompt engineering involves crafting precise input prompts to guide large language models (LLMs) in producing consistent and structured responses. It’s a fundamental technique for developing Generative AI applications, particularly when structured outputs are required. Here are the five key steps we will follow:

- Configure the Bedrock client and runtime parameters.

- Create a JSON schema for structured outputs.

- Craft a prompt and guide the model with clear instructions and examples.

- Add a customer review as input data to analyze.

- Invoke Bedrock, call the model, and process the response.

While we demonstrate customer review analysis to generate a JSON output, these methods can also be used with other formats like XML or CSV.

Step 1: Configure Bedrock

To begin, we’ll set up some constants and initialize a Python Bedrock client connection object using the Python Boto3 SDK for Bedrock runtime, which facilitates interaction with Bedrock:
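A minimal sketch of this setup, assuming Boto3 is installed and AWS credentials are configured. The region, model ID, and parameter values are illustrative assumptions; substitute ones that are enabled in your account. `make_client` is a hypothetical helper name.

```python
# Illustrative values (assumptions): pick a region and model enabled in your account.
REGION = "us-east-1"
MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"
TEMPERATURE = 0.0  # low randomness suits structured output
MAX_TOKENS = 1024  # upper bound on the number of generated tokens


def make_client(region: str = REGION):
    """Create a Bedrock runtime client (hypothetical helper)."""
    import boto3  # imported here so the constants can be reused without the SDK

    return boto3.client("bedrock-runtime", region_name=region)
```

Calling `make_client()` once and reusing the returned client for all requests avoids repeated connection setup.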

The REGION specifies the AWS Region for model execution, while the MODEL_ID identifies the specific Bedrock model. The TEMPERATURE constant controls the output randomness: higher values increase creativity, and lower values maintain precision, which is what structured output needs. MAX_TOKENS caps the output length, balancing cost-efficiency and data completeness.

Step 2: Define the Schema

Defining a schema is essential for facilitating structured and predictable model outputs, maintaining data integrity, and enabling seamless API integration. Without a well-defined schema, models may generate inconsistent or incomplete responses, leading to errors in downstream applications. The standard JSON schema used in the code below serves as a blueprint for structured data generation, guiding the model on how to format its output with explicit instructions.

Let’s create a JSON schema for customer reviews with three required fields: reviewId (string, max 50 chars), sentiment (number, -1 to 1), and summary (string, max 200 chars).
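Expressed as a Python dictionary, such a schema might look like the sketch below. The field names and limits come from the description above; the variable name `REVIEW_SCHEMA` is an assumption.

```python
import json

# JSON Schema for one analyzed review; field names and limits follow the text above.
REVIEW_SCHEMA = {
    "type": "object",
    "properties": {
        "reviewId": {"type": "string", "maxLength": 50},
        "sentiment": {"type": "number", "minimum": -1, "maximum": 1},
        "summary": {"type": "string", "maxLength": 200},
    },
    "required": ["reviewId", "sentiment", "summary"],
}

# Serialize it the way it would be embedded in a prompt or a tool definition.
print(json.dumps(REVIEW_SCHEMA, indent=2))
```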

Step 3: Craft the Prompt text

To generate consistent, structured, and accurate responses, prompts must be clear and well-structured, as LLMs rely on precise input to produce reliable outputs. Poorly designed prompts can lead to ambiguity, errors, or formatting issues, disrupting structured workflows, so we follow these best practices:

- Clearly outline the AI’s role and objectives to avoid ambiguity.

- Divide tasks into smaller, manageable numbered steps for clarity.

- Mention that a JSON schema will be provided (see Step 5 below) to maintain a consistent and valid structure.

- Use one-shot prompting with a sample output to guide the model; add more examples if needed for consistency, but avoid too many, as they may limit the model’s ability to handle new inputs.

- Define how to handle missing or invalid data.
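A prompt that applies these practices might read as follows. The wording, the `<input>` and `<schema>` tag names, and the fallback object are all illustrative assumptions, not a prescribed template.

```python
# Hypothetical prompt wording that applies the practices listed above.
PROMPT = """You are an AI assistant that analyzes customer reviews.
Your goal is to return one JSON object per review and nothing else.

Follow these steps:
1. Read the review inside the <input> tags.
2. Take the review ID from the input, if one is present.
3. Rate the sentiment from -1 (very negative) to 1 (very positive).
4. Summarize the review in at most 200 characters.
5. Return only JSON that is valid against the schema inside the <schema> tags.

Example output:
{"reviewId": "R123", "sentiment": 0.8, "summary": "Fast delivery and good quality."}

If the review is missing or cannot be analyzed, return:
{"reviewId": "unknown", "sentiment": 0, "summary": "No valid review provided."}
"""
```

The role statement, numbered steps, schema mention, single example, and fallback rule each map to one of the bullets above.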

Step 4: Integrate Input Data

For demonstration purposes, we’ll include a review text in the prompt as a Python variable:
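A small sketch of such a variable, using a made-up review and an assumed `<input>` tag name:

```python
# Hypothetical review; in production this could come from an API or a user form.
REVIEW_ID = "R-1001"
REVIEW_TEXT = "The headphones arrived quickly and sound great, but the case feels cheap."

# Wrap the data in tags (the tag name is an assumption) so the model can
# tell the review apart from the instructions.
INPUT_DATA = f"<input>\nReview ID: {REVIEW_ID}\n{REVIEW_TEXT}\n</input>"

print(INPUT_DATA)
```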

Separating the input data with tags improves readability and clarity, making it easy to identify and reference. This hardcoded input simulates real-world data integration. For production use, you might dynamically populate input data from APIs or user submissions.

Step 5: Call Bedrock

In this section, we construct a Bedrock request by defining a body object that includes the JSON schema, prompt, and input review data from the previous steps. This structured request makes sure the model receives clear instructions, adheres to a predefined schema, and processes the sample input data correctly. Once the request is prepared, we invoke Amazon Bedrock to generate a structured JSON response.

We reuse the MAX_TOKENS, TEMPERATURE, and MODEL_ID constants defined in Step 1. The body object has essential inference configurations like anthropic_version for model compatibility and the messages array, which includes a single message to provide the model with task instructions, the schema, and the input data. The role defines the “speaker” in the interaction context, with the user value representing the program sending the request. Alternatively, we could simplify the input by combining instructions, schema, and data into one text prompt, which is easy to manage but less modular.

Finally, we use the client.invoke_model method to send the request. After invoking, the model processes the request, and the JSON data must be properly (not explained here) extracted from the Bedrock response. For example:
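The sketch below shows one way to build the body, send it, and pull the JSON out of the reply. It assumes an Anthropic Claude model and a Boto3 `bedrock-runtime` client passed in by the caller. The helper names (`build_body`, `extract_json`, `analyze`), the `<schema>` tag, and the brace-slicing extraction are illustrative assumptions.

```python
import json

MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"  # illustrative model ID (assumption)
MAX_TOKENS = 1024
TEMPERATURE = 0.0


def build_body(prompt: str, schema: dict, input_data: str) -> dict:
    """Assemble an Anthropic Messages request body for invoke_model."""
    return {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": MAX_TOKENS,
        "temperature": TEMPERATURE,
        "messages": [
            {
                "role": "user",
                # One message carrying instructions, schema, and input data.
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "text", "text": f"<schema>{json.dumps(schema)}</schema>"},
                    {"type": "text", "text": input_data},
                ],
            }
        ],
    }


def extract_json(response: dict) -> dict:
    """Read the streamed body and parse the first text block as JSON."""
    payload = json.loads(response["body"].read())
    text = payload["content"][0]["text"]
    # Tolerate prose around the object: slice from the first "{" to the last "}".
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model output")
    return json.loads(text[start : end + 1])


def analyze(client, prompt: str, schema: dict, input_data: str) -> dict:
    """Send the request with a bedrock-runtime client and parse the reply."""
    response = client.invoke_model(
        modelId=MODEL_ID,
        body=json.dumps(build_body(prompt, schema, input_data)),
    )
    return extract_json(response)
```

Slicing between the outer braces is a pragmatic choice for a single flat object; it does not validate the result against the schema, so a separate validation step is still advisable.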

Tool Use with the Amazon Bedrock Converse API

In the previous section, we explored a solution using Bedrock Prompt Engineering. Now, let’s look at an alternative approach for generating structured responses with Bedrock.

We will extend the previous solution by using the Amazon Bedrock Converse API, a consistent interface designed to facilitate multi-turn conversations with Generative AI models. The API abstracts model-specific configurations, including inference parameters, simplifying integration.

A key feature of the Converse API is Tool Use (also known as Function Calling), which enables the model to invoke external tools, such as calling an external API. This method supports standard JSON schema integration directly into tool definitions, facilitating output alignment with predefined formats. Not all Bedrock models support Tool Use, so make sure you check which models are compatible with this feature.

Building on the previously defined data, the following code provides a straightforward example of Tool Use tailored to our customer review use case:
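A minimal sketch of such a call, assuming a Boto3 `bedrock-runtime` client and a model that supports Tool Use and a forced tool choice. The tool name, its description, and the helper names are hypothetical.

```python
MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"  # illustrative; must support Tool Use
TOOL_NAME = "analyze_review"  # hypothetical tool name


def build_tool_list(schema: dict) -> list:
    """Describe one tool whose input must match the review JSON schema."""
    return [
        {
            "toolSpec": {
                "name": TOOL_NAME,
                "description": "Record the sentiment analysis of a customer review.",
                "inputSchema": {"json": schema},
            }
        }
    ]


def analyze_with_tool(client, prompt: str, schema: dict, input_data: str) -> dict:
    """Call Converse, force the tool, and return the tool input as a dict."""
    tool_list = build_tool_list(schema)
    messages = [{"role": "user", "content": [{"text": prompt}, {"text": input_data}]}]
    response = client.converse(
        modelId=MODEL_ID,
        messages=messages,
        inferenceConfig={"maxTokens": 1024, "temperature": 0.0},
        toolConfig={
            "tools": tool_list,
            # Require the model to answer through this specific tool.
            "toolChoice": {"tool": {"name": TOOL_NAME}},
        },
    )
    for block in response["output"]["message"]["content"]:
        if "toolUse" in block:
            return block["toolUse"]["input"]  # already a parsed dict, not a string
    raise ValueError("model did not return a toolUse block")
```

Because the structured data arrives as the tool input, no text parsing is needed; the tool itself is never executed in this sketch.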

In this code, the tool_list defines a custom customer review analysis tool with its input schema and purpose, while the messages provide the earlier defined instructions and input data. Unlike in the previous prompt engineering example, we used the earlier defined JSON schema in the definition of a tool. Finally, the client.converse call combines these components, specifying the tool to use and inference configurations, resulting in outputs tailored to the given schema and task.

After exploring Prompt Engineering and Tool Use in Bedrock solutions for structured response generation, let’s now evaluate how different foundation models perform across these approaches.

Test Results: Claude Models on Amazon Bedrock

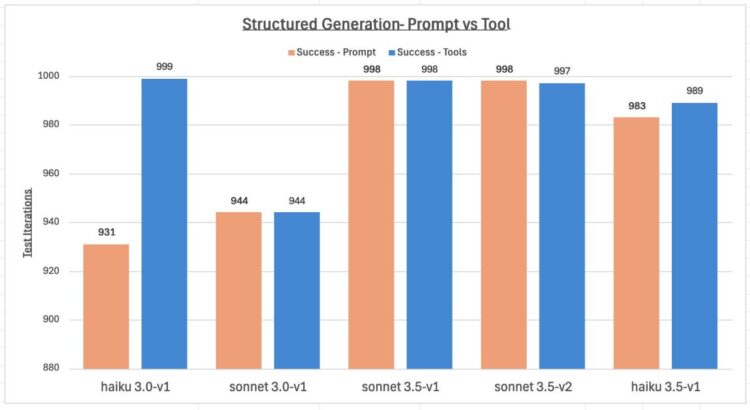

Understanding the capabilities of foundation models in structured response generation is essential for maintaining reliability, optimizing performance, and building scalable, future-proof Generative AI applications with Amazon Bedrock. To evaluate how well models handle structured outputs, we conducted extensive testing of Anthropic’s Claude models, comparing prompt-based and tool-based approaches across 1,000 iterations per model. Each iteration processed 100 randomly generated items, providing broad test coverage across different input variations.

The examples shown earlier in this blog are intentionally simplified for demonstration purposes, where Bedrock performed seamlessly with no issues. To better assess the models under real-world challenges, we used a more complex schema that featured nested structures, arrays, and diverse data types to identify edge cases and potential issues. The outputs were validated for adherence to the JSON format and schema, maintaining consistency and accuracy. The diagram summarizes the results, showing the number of successful, valid JSON responses for each model across the two demonstrated approaches: Prompt Engineering and Tool Use.

The results demonstrated that all models achieved over 93% success across both approaches, with Tool Use methods consistently outperforming prompt-based ones. While the evaluation was conducted using a highly complex JSON schema, simpler schemas result in significantly fewer issues, often nearly none. Future updates to the models are expected to further improve performance.
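To illustrate what this kind of validation involves, here is a minimal standard-library sketch. It is not the harness used for the tests above, and it checks only the simple three-field review schema from Step 2 rather than the complex test schema. `is_valid_review` and `success_rate` are hypothetical helper names, and the sample outputs are made up.

```python
import json


def is_valid_review(raw: str) -> bool:
    """Return True if raw is JSON that satisfies the three-field review schema."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return False
    if not isinstance(data, dict):
        return False
    review_id, sentiment, summary = (
        data.get(key) for key in ("reviewId", "sentiment", "summary")
    )
    return (
        isinstance(review_id, str) and len(review_id) <= 50
        # bool is a subclass of int in Python, so exclude it explicitly.
        and isinstance(sentiment, (int, float)) and not isinstance(sentiment, bool)
        and -1 <= sentiment <= 1
        and isinstance(summary, str) and len(summary) <= 200
    )


def success_rate(outputs: list[str]) -> float:
    """Share of outputs that pass validation, between 0 and 1."""
    if not outputs:
        return 0.0
    return sum(is_valid_review(output) for output in outputs) / len(outputs)


samples = [
    '{"reviewId": "R1", "sentiment": 0.7, "summary": "Great product."}',
    '{"reviewId": "R2", "sentiment": 3, "summary": "Out of range."}',
    "Sure! Here is the JSON you asked for...",
]
print(f"{success_rate(samples):.0%} valid")
```

For nested schemas like the one used in the tests, a full JSON Schema validator would be a better fit than hand-written checks.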

Final Thoughts

In conclusion, we demonstrated two methods for generating structured responses with Amazon Bedrock: Prompt Engineering and Tool Use with the Converse API. Prompt Engineering is flexible, works with Bedrock models (including those without Tool Use support), and handles various schema types (e.g., Open API schemas), making it a great starting point. However, it can be fragile, requiring exact prompts and struggling with complex needs. On the other hand, Tool Use offers greater reliability, consistent results, seamless API integration, and runtime validation of JSON schema for enhanced control.

For simplicity, we did not cover several areas in this blog. Other techniques for generating structured responses include using models with built-in support for configurable response formats, such as JSON, when invoking models, or leveraging constrained decoding techniques with third-party libraries like LMQL. Additionally, generating structured data with GenAI can be challenging due to issues like invalid JSON, missing fields, or formatting errors. To maintain data integrity and handle unexpected outputs or API failures, effective error handling, thorough testing, and validation are essential.

To try the Bedrock techniques demonstrated in this blog, follow the steps to Run example Amazon Bedrock API requests through the AWS SDK for Python (Boto3). With pay-as-you-go pricing, you’re only charged for API calls, so little to no cleanup is required after testing. For more details on best practices, refer to the Bedrock prompt engineering guidelines and model-specific documentation, such as Anthropic’s best practices.

Structured data is key to leveraging Generative AI in real-world scenarios like APIs, data-driven workloads, and rich user interfaces beyond text-based chat. Start using Amazon Bedrock today to unlock its potential for reliable structured responses.

About the authors

Adam Nemeth is a Senior Solutions Architect at AWS, where he helps global financial customers embrace cloud computing through architectural guidance and technical support. With over 24 years of IT expertise, Adam previously worked at UBS before joining AWS. He lives in Switzerland with his wife and their three children.

Dominic Searle is a Senior Solutions Architect at Amazon Web Services, where he has had the pleasure of working with Global Financial Services customers as they explore how Generative AI can be integrated into their technology strategies. Providing technical guidance, he enjoys helping customers effectively leverage AWS Services to solve real business problems.
