Customers are increasingly looking to use the power of large language models (LLMs) to solve real-world problems. However, bridging the gap between these LLMs and practical applications has been a challenge. AI agents have emerged as an innovative technology that bridges this gap.

The foundation models (FMs) available through Amazon Bedrock serve as the cognitive engine for AI agents, providing the reasoning and natural language understanding capabilities essential for interpreting user requests and generating appropriate responses. You can integrate these models with various agent frameworks and orchestration layers to create AI applications that can understand context, make decisions, and take actions. You can build with Amazon Bedrock Agents or other frameworks like LangGraph and the recently launched Strands Agent SDK.

This blog post explores how to create powerful agentic applications using the Amazon Bedrock FMs, LangGraph, and the Model Context Protocol (MCP), with a practical scenario of handling a GitHub workflow of issue analysis, code fixes, and pull request generation.

For teams seeking a managed solution to streamline GitHub workflows, Amazon Q Developer in GitHub offers native integration with GitHub repositories. It provides built-in capabilities for code generation, review, and code transformation without requiring custom agent development. While Amazon Q Developer provides out-of-the-box functionality for common development workflows, organizations with specific requirements or unique use cases may benefit from building custom solutions using Amazon Bedrock and agent frameworks. This flexibility allows teams to choose between a ready-to-use solution with Amazon Q Developer or a customized approach using Amazon Bedrock, depending on their specific needs, technical requirements, and desired level of control over the implementation.

Challenges with the current state of AI agents

Despite the remarkable advancements in AI agent technology, the current state of agent development and deployment faces significant challenges that limit their effectiveness, reliability, and broader adoption. These challenges span technical, operational, and conceptual domains, creating barriers that developers and organizations must navigate when implementing agentic solutions.

One of the significant challenges is tool integration. Although frameworks like Amazon Bedrock Agents, LangGraph, and the Strands Agent SDK provide mechanisms for agents to interact with external tools and services, the current approaches often lack standardization and flexibility. Developers must create custom integrations for each tool, define precise schemas, and handle a multitude of edge cases in tool invocation and response processing. Furthermore, the rigid nature of many tool integration frameworks means that agents struggle to adapt to changes in tool interfaces or to discover and use new capabilities dynamically.

How MCP helps in creating agents

Emerging as a response to the limitations and challenges of current agent architectures, MCP provides a standardized framework that fundamentally redefines the relationship between FMs, context management, and tool integration. This protocol addresses many of the core challenges that have hindered the broader adoption and effectiveness of AI agents, particularly in enterprise environments and complex use cases.

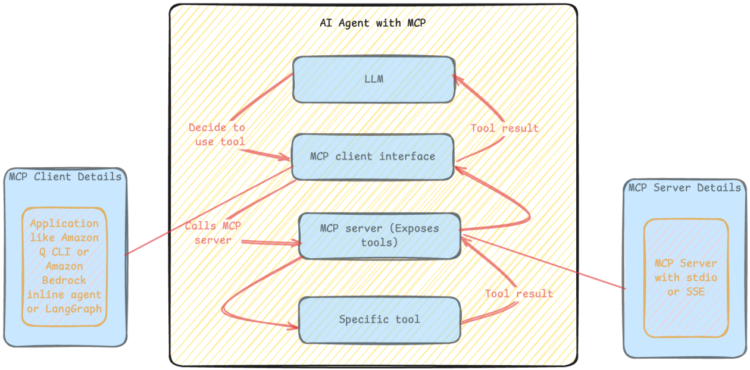

The next diagram illustrates an instance structure.

Tool integration is dramatically simplified by MCP’s Tool Registry and standardized invocation patterns. Developers can register tools with the registry using a consistent format, and the protocol manages the complexities of tool selection, parameter preparation, and response processing. This not only reduces the development effort required to integrate new tools but also enables more sophisticated tool usage patterns, such as tool chaining and parallel tool invocation, that are challenging to implement in current frameworks.

This combination takes advantage of the strengths of each technology (high-quality FMs in Amazon Bedrock, MCP’s context management capabilities, and LangGraph’s orchestration framework) to create agents that can tackle increasingly complex tasks with greater reliability and effectiveness.

Imagine your development team wakes up to find yesterday’s GitHub issues already analyzed, fixed, and waiting as pull requests, all handled autonomously overnight.

Recent advances in AI, particularly LLMs with code generation capabilities, have resulted in an impactful approach to development workflows. By using agents, development teams can automate simple changes, such as dependency updates or straightforward bug fixes.

Solution overview

Amazon Bedrock is a fully managed service that makes high-performing FMs from leading AI companies and Amazon available through a unified API. Amazon Bedrock also offers a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI.

LangGraph orchestrates agentic workflows through a graph-based architecture that handles complex processes and maintains context across agent interactions. It uses supervisory control patterns and memory systems for coordination. For more details, refer to Build multi-agent systems with LangGraph and Amazon Bedrock.

The Model Context Protocol (MCP) is an open standard that empowers developers to build secure, two-way connections between their data sources and AI-powered tools. The GitHub MCP Server is an MCP server that provides seamless integration with GitHub APIs. It offers a standard way for AI tools to work with GitHub’s repositories. Developers can use it to automate tasks, analyze code, and improve workflows without handling complex API calls.

This post uses these three technologies in a complementary fashion. Amazon Bedrock supplies the AI capabilities for understanding issues and generating code fixes. LangGraph orchestrates the end-to-end workflow, managing the state and decision-making throughout the process. The GitHub MCP Server interfaces with GitHub repositories, providing context to the FM and implementing the generated changes. Together, these technologies enable an automation system that can understand and analyze GitHub issues, extract relevant code context, generate code fixes, create well-documented pull requests, and integrate seamlessly with existing GitHub workflows.

The figure below shows a high-level view of how LangGraph integrates with GitHub through MCP while leveraging LLMs from Amazon Bedrock.

In the following sections, we explore the technical approach for building an AI-powered automation system using Amazon Bedrock, LangGraph, and the GitHub MCP Server. We discuss the core concepts of building the solution; we don’t focus on deploying the agent or running the MCP server in the AWS environment. For a detailed explanation, refer to the GitHub repository.

Prerequisites

You must have the following prerequisites before you can deploy this solution. For this post, we use the us-west-2 AWS Region. For details on available Regions, see Amazon Bedrock endpoints and quotas.

Environment configuration and setup

The MCP server acts as a bridge between our LangGraph agent and GitHub’s API. Instead of directly calling GitHub APIs, we use the containerized GitHub MCP Server, which provides standardized tool interfaces.

You need to define the MCP configuration using the personal access token that you defined in the prerequisites. This configuration starts the GitHub MCP Server using Docker or Finch.
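As a minimal sketch of what such a configuration could look like, the following builds the launch settings as a plain dictionary. It assumes the server runs over stdio from the `ghcr.io/github/github-mcp-server` container image and reads the token from the `GITHUB_PERSONAL_ACCESS_TOKEN` environment variable; the function name `build_github_mcp_config`, the `runtime` parameter, and the `GITHUB_TOKEN` variable read at the end are illustrative and not taken from the accompanying repository.

```python
import os


def build_github_mcp_config(token: str, runtime: str = "docker") -> dict:
    """Return launch settings for a containerized GitHub MCP Server.

    `runtime` is the container CLI to call: "docker" or "finch".
    """
    if runtime not in ("docker", "finch"):
        raise ValueError(f"unsupported container runtime: {runtime}")
    if not token:
        raise ValueError("a GitHub personal access token is required")
    return {
        "command": runtime,
        "args": [
            "run", "-i", "--rm",
            # Pass the variable by name only, so the token value
            # never appears in the argument list.
            "-e", "GITHUB_PERSONAL_ACCESS_TOKEN",
            "ghcr.io/github/github-mcp-server",  # assumed image name
        ],
        # The token value travels through the child process environment.
        "env": {"GITHUB_PERSONAL_ACCESS_TOKEN": token},
        "transport": "stdio",
    }


# Read the token from the environment instead of hard-coding it.
config = build_github_mcp_config(os.environ.get("GITHUB_TOKEN", "placeholder-token"))
```

Keeping the token out of `args` means it is less likely to leak through process listings or logs.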

Agent state

LangGraph needs a shared state object that flows between the nodes in the workflow. This state acts as memory, allowing each step to access data from previous steps and pass results to later ones.
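The idea can be sketched with a `TypedDict` and one node that returns only the keys it changes. The field names and both helper functions are hypothetical; `apply_update` merely imitates a last-write-wins merge of a node's partial update so that the sketch runs without LangGraph installed.

```python
from typing import List, Optional, TypedDict


class AgentState(TypedDict, total=False):
    """Shared state passed between workflow nodes (illustrative fields)."""
    repo: str
    issues: List[dict]
    current_issue: Optional[dict]
    analysis: Optional[dict]
    pr_url: Optional[str]


def select_next_issue(state: AgentState) -> AgentState:
    """Node: take the next open issue and return only the changed keys."""
    remaining = list(state.get("issues", []))  # copy, so the input is not mutated
    current = remaining.pop(0) if remaining else None
    return {"issues": remaining, "current_issue": current}


def apply_update(state: AgentState, update: AgentState) -> AgentState:
    """Merge a node's partial update into the state (last write wins)."""
    return {**state, **update}


state: AgentState = {"repo": "example-org/example-repo", "issues": [{"number": 1}, {"number": 2}]}
state = apply_update(state, select_next_issue(state))
```

Because each node reads the state and returns a small update, later nodes see the selected issue without any node holding data of its own.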

Structured output

Instead of parsing free-form LLM responses, we use Pydantic models to enforce consistent, machine-readable outputs. This reduces parsing errors and ensures downstream nodes receive data in expected formats. The Field descriptions guide the LLM to provide exactly what we need.
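A small sketch of such a model follows. The `IssueAnalysis` schema and its field names are assumptions made for illustration; the actual models live in the accompanying repository.

```python
from typing import List

from pydantic import BaseModel, Field, ValidationError


class IssueAnalysis(BaseModel):
    """Structured result of analyzing one GitHub issue (hypothetical schema)."""
    requires_code_change: bool = Field(
        description="True if resolving the issue needs a code change"
    )
    summary: str = Field(description="One-sentence summary of the problem")
    files_to_modify: List[str] = Field(
        default_factory=list,
        description="Repository paths that likely need edits",
    )


def parse_analysis(raw: dict) -> IssueAnalysis:
    """Validate raw model output; raises ValidationError if fields are missing or mistyped."""
    return IssueAnalysis(**raw)


analysis = parse_analysis(
    {"requires_code_change": True, "summary": "Pagination skips the last page"}
)
```

With LangChain chat models, a schema like this is typically bound through `with_structured_output`, so the node receives an `IssueAnalysis` instance rather than raw text.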

MCP tools integration

The load_mcp_tools function from LangChain’s MCP adapter automatically converts the MCP server capabilities into LangChain-compatible tools. This abstraction makes it possible to use the GitHub operations (list issues, create branches, update files) as if they were built-in LangChain tools.
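The following sketch shows one way to call `load_mcp_tools` against a server started over stdio, assuming the `langchain-mcp-adapters` and `mcp` packages are installed. The function name `list_github_tool_names` and its parameters are illustrative, and actually running it requires a container runtime and a valid token.

```python
async def list_github_tool_names(command: str, args: list, env: dict) -> list:
    """Start the MCP server over stdio and return the names of its tools."""
    # Imported here so this module still loads when the optional
    # packages are not installed.
    from langchain_mcp_adapters.tools import load_mcp_tools
    from mcp import ClientSession, StdioServerParameters
    from mcp.client.stdio import stdio_client

    server = StdioServerParameters(command=command, args=args, env=env)
    async with stdio_client(server) as (read, write):
        async with ClientSession(read, write) as session:
            await session.initialize()
            # Each MCP capability comes back as a LangChain tool object.
            tools = await load_mcp_tools(session)
            # The tools depend on the open session, so read them here.
            return [tool.name for tool in tools]
```

The coroutine can be run with `asyncio.run(list_github_tool_names(...))`. Because the tools are bound to the live session, an agent that calls them has to do so while the session is still open.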

Workflow structure

Each node is stateless: it takes the current state, performs one specific task, and returns state updates. This makes the workflow predictable, testable, and straightforward to debug. These nodes are connected using edges or conditional edges. Not every GitHub issue requires code changes. Some might be documentation requests, duplicates, or need clarification. The routing functions use the structured LLM output to dynamically decide the next step, making the workflow adaptive rather than rigid.
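A routing function of this kind can be sketched as a pure function from state to the name of the next node. The node names and state keys below are hypothetical; in LangGraph, a function like this would typically be passed to `add_conditional_edges` on the graph builder.

```python
# Hypothetical node names used as routing targets.
GENERATE_FIX = "generate_fix"
SKIP_ISSUE = "skip_issue"
FINISH = "finish"


def route_after_analysis(state: dict) -> str:
    """Choose the next node from the structured analysis in the state."""
    if state.get("current_issue") is None:
        return FINISH  # nothing left to process
    analysis = state.get("analysis") or {}
    if analysis.get("requires_code_change"):
        return GENERATE_FIX
    # Documentation requests, duplicates, and unclear issues are skipped.
    return SKIP_ISSUE


next_node = route_after_analysis(
    {"current_issue": {"number": 7}, "analysis": {"requires_code_change": True}}
)
```

Because the function only inspects state and returns a string, each branch can be unit tested without calling a model or GitHub.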

Finally, we start the agent by invoking the compiled graph with an initial state. The agent then follows the steps and decisions defined in the graph. The following diagram illustrates the workflow.

Agent execution and result

We can invoke the compiled graph with the initial_state and recursion_limit. It fetches open issues from the given GitHub repository, analyzes them one at a time, makes the code changes if needed, and then creates the pull request in GitHub.

Considerations

To enable automated workflows, Amazon EventBridge offers an integration with GitHub through its SaaS partner event sources. After it’s configured, EventBridge receives these GitHub events in near real time. You can create rules that match specific issue patterns and route them to various AWS services like AWS Lambda functions, AWS Step Functions state machines, or Amazon Simple Notification Service (Amazon SNS) topics for further processing. This integration enables automated workflows that can trigger your analysis pipelines or code generation processes when relevant GitHub issue activities occur.

When deploying the system, consider a phased rollout strategy. Start with a pilot phase in two or three non-critical repositories to confirm effectiveness and find issues. During this pilot phase, it’s important to thoroughly evaluate the solution across a diverse set of code files. This test should cover different programming languages, frameworks, formats (such as Jupyter notebooks), and varying levels of complexity in the number and size of code files. Gradually expand to more repositories, prioritizing those with high maintenance burdens or standardized code patterns.

Infrastructure best practices include containerization, designing for scalability, providing high availability, and implementing comprehensive monitoring for application, system, and business metrics. Security considerations are paramount, including operating with least privilege access, proper secrets management, input validation, and vulnerability management through regular updates and security scanning.

It’s important to align with your company’s generative AI operations and governance frameworks. Prior to deployment, verify alignment with your organization’s AI safety protocols, data handling policies, and model deployment guidelines. Although this architectural pattern offers significant benefits, you should adapt the implementation to fit within your organization’s specific AI governance structure and risk management frameworks.

Clean up

Clean up your environment by completing the following steps:

- Delete IAM roles and policies created specifically for this post.

- Delete the local copy of this post’s code.

- If you no longer need access to an Amazon Bedrock FM, you can remove access to it. For instructions, see Add or remove access to Amazon Bedrock foundation models.

- Delete the personal access token. For instructions, see Deleting a personal access token.

Conclusion

The integration of Amazon Bedrock FMs with MCP and LangGraph is a significant advancement in the field of AI agents. By addressing the fundamental challenges of context management and tool integration, this combination enables the development of more sophisticated, reliable, and powerful agentic applications.

The GitHub issues workflow scenario demonstrates benefits that include productivity enhancement, consistency improvement, faster response times, scalable maintenance, and knowledge amplification. Important insights include the role of FMs as development partners, the necessity of workflow orchestration, the importance of repository context, the need for confidence assessment, and the value of feedback loops for continuous improvement.

The future of AI-powered development automation will see trends like multi-agent collaboration systems, proactive code maintenance, context-aware code generation, enhanced developer collaboration, and ethical AI development. Challenges include skill evolution, governance complexity, quality assurance, and integration complexity, while opportunities include developer experience transformation, accelerated innovation, knowledge democratization, and accessibility improvements. Organizations can prepare by starting small, investing in knowledge capture, building feedback loops, developing AI literacy, and experimenting with new capabilities. The goal is to enhance developer capabilities, not replace them, fostering a collaborative future where AI and human developers work together to build better software.

For the example code and demonstration discussed in this post, refer to the accompanying GitHub repository.

Refer to the following resources for additional guidance to get started:

About the authors

Jagdeep Singh Soni is a Senior Partner Solutions Architect at AWS based in the Netherlands. He uses his passion for generative AI to help customers and partners build generative AI applications using AWS services. Jagdeep has 15 years of experience in innovation, experience engineering, digital transformation, cloud architecture, and ML applications.

Ajeet Tewari is a Senior Solutions Architect for Amazon Web Services. He works with enterprise customers to help them navigate their journey to AWS. His specialties include architecting and implementing scalable OLTP systems and leading strategic AWS initiatives.

Mani Khanuja is a Tech Lead – Generative AI Specialists, author of the book Applied Machine Learning and High-Performance Computing on AWS, and a member of the Board of Directors for Women in Manufacturing Education Foundation. She leads machine learning projects in various domains such as computer vision, natural language processing, and generative AI. She speaks at internal and external conferences such as AWS re:Invent, Women in Manufacturing West, YouTube webinars, and GHC 23. In her free time, she likes to go for long runs along the beach.
