Data science teams working with artificial intelligence and machine learning (AI/ML) face a growing challenge as models become more complex. Although AWS Deep Learning Containers (DLCs) offer robust baseline environments out of the box, customizing them for specific projects often requires significant time and expertise.

In this post, we explore how to use Amazon Q Developer and Model Context Protocol (MCP) servers to streamline DLC workflows and automate the creation, execution, and customization of DLC containers.

AWS DLCs

AWS DLCs provide generative AI practitioners with optimized Docker environments to train and deploy large language models (LLMs) in their pipelines and workflows across Amazon Elastic Compute Cloud (Amazon EC2), Amazon Elastic Kubernetes Service (Amazon EKS), and Amazon Elastic Container Service (Amazon ECS). AWS DLCs are targeted at self-managed machine learning (ML) customers who prefer to build and maintain their AI/ML environments on their own, want instance-level control over their infrastructure, and manage their own training and inference workloads. Provided at no additional cost, the DLCs come pre-packaged with CUDA libraries, popular ML frameworks, and the Elastic Fabric Adapter (EFA) plug-in for distributed training and inference on AWS. They automatically configure a stable connected environment, which eliminates the need for customers to troubleshoot common issues such as version incompatibilities. DLCs are available as Docker images for training and inference with PyTorch and TensorFlow on Amazon Elastic Container Registry (Amazon ECR).

The following figure illustrates the ML software stack on AWS.

DLCs are kept current with the latest versions of frameworks and drivers, tested for compatibility and security, and offered at no additional cost. They’re also straightforward to customize by following our recipe guides. Using AWS DLCs as a building block for generative AI environments reduces the burden on operations and infrastructure teams, lowers TCO for AI/ML infrastructure, accelerates the development of generative AI products, and helps generative AI teams focus on the value-added work of deriving generative AI-powered insights from the organization’s data.

Challenges with DLC customization

Organizations often encounter a common challenge: they have a DLC that serves as an excellent foundation, but it requires customization with specific libraries, patches, or proprietary toolkits. The traditional approach to this customization involves the following steps:

- Rebuilding containers manually

- Installing and configuring additional libraries

- Executing extensive testing cycles

- Creating automation scripts for updates

- Managing version control across multiple environments

- Repeating this process several times each year

This process often requires days of work from specialized teams, with each iteration introducing potential errors and inconsistencies. For organizations managing multiple AI projects, these challenges compound quickly, leading to significant operational overhead and potential delays in development cycles.

Using the Amazon Q CLI with a DLC MCP server

Amazon Q acts as your AI-powered AWS expert, offering real-time assistance to help you build, extend, and operate AWS applications through natural conversations. It combines deep AWS knowledge with contextual understanding to provide actionable guidance when you need it. This tool can help you navigate AWS architecture, manage resources, implement best practices, and access documentation, all through natural language interactions.

The Model Context Protocol (MCP) is an open standard that enables AI assistants to interact with external tools and services. Amazon Q Developer CLI now supports MCP, allowing you to extend Q’s capabilities by connecting it to custom tools and services.

By taking advantage of the benefits of both Amazon Q and MCP, we have implemented a DLC MCP server that transforms container management from complex command line operations into simple conversational instructions. Developers can securely create, customize, and deploy DLCs using natural language prompts. This solution potentially reduces the technical overhead associated with DLC workflows.

Resolution overview

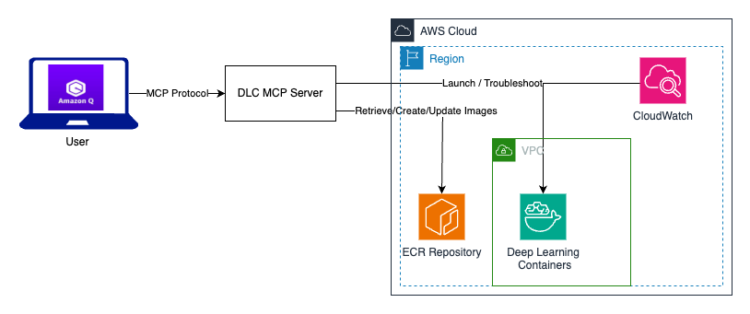

The next diagram reveals the interplay between customers utilizing Amazon Q with a DLC MCP server.

The DLC MCP server offers six core instruments:

- Container management service – This service helps with core container operations and DLC image management:

  - Image discovery – List and filter available DLC images by framework, Python version, CUDA version, and repository type

  - Container runtime – Run DLC containers locally with GPU support

  - Distributed training setup – Configure multi-node distributed training environments

  - AWS integration – Automatic Amazon ECR authentication and AWS configuration validation

  - Environment setup – Check GPU availability and Docker configuration

- Image building service – This service helps create and customize DLC images for specific ML workloads:

  - Base image selection – Browse available DLC base images by framework and use case

  - Custom Dockerfile generation – Create optimized Dockerfiles with custom packages and configurations

  - Image building – Build custom DLC images locally or push to Amazon ECR

  - Package management – Install system packages, Python packages, and custom dependencies

  - Environment configuration – Set environment variables and custom commands

- Deployment service – This service helps with deploying DLC images across AWS compute services:

  - Multi-service deployment – Support for Amazon EC2, Amazon SageMaker, Amazon ECS, and Amazon EKS

  - SageMaker integration – Create models and endpoints for inference

  - Container orchestration – Deploy to ECS clusters and EKS clusters

  - Amazon EC2 deployment – Launch EC2 instances with DLC images

  - Status monitoring – Check deployment status and endpoint health

- Upgrade service – This service helps upgrade or migrate DLC images to newer framework versions:

  - Upgrade path analysis – Analyze compatibility between current and target framework versions

  - Migration planning – Generate upgrade strategies with compatibility warnings

  - Dockerfile generation – Create upgrade Dockerfiles that preserve customizations

  - Version migration – Upgrade PyTorch, TensorFlow, and other frameworks

  - Custom file preservation – Maintain custom files and configurations during upgrades

- Troubleshooting service – This service helps diagnose and resolve DLC-related issues:

  - Error diagnosis – Analyze error messages and provide specific solutions

  - Framework compatibility – Check version compatibility and requirements

  - Performance optimization – Get framework-specific performance tuning tips

  - Common issues – Maintain a database of solutions for frequent DLC problems

  - Environment validation – Verify system requirements and configurations

- Best practices service – This service provides best practices on the following:

  - Security guidelines – Comprehensive security best practices for DLC deployments

  - Cost optimization – Strategies to reduce costs while maintaining performance

  - Deployment patterns – System-specific deployment recommendations

  - Framework guidance – Framework-specific best practices and optimizations

  - Custom image guidelines – Best practices for creating maintainable custom images
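
To make the image discovery capability more concrete, here is a minimal sketch of filtering a catalog of image records by framework, Python version, CUDA version, and repository type. The `DlcImage` record, its field names, and the sample entries are hypothetical illustrations; they are not the DLC MCP server's data model or a list of published image tags.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class DlcImage:
    """Hypothetical record describing one DLC image (illustration only)."""
    framework: str                # e.g. "pytorch" or "tensorflow"
    python_version: str           # e.g. "py312"
    cuda_version: Optional[str]   # e.g. "cu128"; None for CPU images
    repository_type: str          # e.g. "training" or "inference"


def filter_images(
    images: Iterable[DlcImage],
    framework: Optional[str] = None,
    python_version: Optional[str] = None,
    cuda_version: Optional[str] = None,
    repository_type: Optional[str] = None,
) -> List[DlcImage]:
    """Return the images that match every filter that was supplied.

    A filter left as None is ignored, so calling with no filters
    returns the whole catalog.
    """
    wanted = {
        "framework": framework,
        "python_version": python_version,
        "cuda_version": cuda_version,
        "repository_type": repository_type,
    }
    return [
        image for image in images
        if all(value is None or getattr(image, field) == value
               for field, value in wanted.items())
    ]


# Made-up sample catalog; real tags and versions will differ.
CATALOG = [
    DlcImage("pytorch", "py312", "cu128", "training"),
    DlcImage("pytorch", "py312", None, "training"),
    DlcImage("tensorflow", "py310", "cu122", "inference"),
]

gpu_pytorch = filter_images(CATALOG, framework="pytorch", cuda_version="cu128")
print(gpu_pytorch)
```

In the conversational workflow you would not call anything like this yourself; the point is only that "list PyTorch GPU images" reduces to a filter over structured image metadata.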

Prerequisites

Follow the installation steps in the GitHub repo to set up the DLC MCP server and Amazon Q CLI on your workstation.
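
As a rough illustration of what registering an MCP server with a client involves, the sketch below renders a JSON configuration with a single server entry under an `mcpServers` map, which is the layout commonly used by MCP clients. The server name, launch command, module name, and environment variable shown here are placeholders and assumptions; use the exact values and file location given in the repo's installation steps.

```python
import json


def build_mcp_config(server_name: str, command: str, args: list, env: dict) -> str:
    """Render an MCP client configuration with a single server entry."""
    config = {
        "mcpServers": {
            server_name: {
                "command": command,   # executable that starts the server
                "args": args,         # arguments passed to that executable
                "env": env,           # environment variables for the server
            }
        }
    }
    return json.dumps(config, indent=2)


# Placeholder values only: replace them with the ones from the install guide.
config_text = build_mcp_config(
    server_name="dlc-mcp-server",       # hypothetical server name
    command="python",                   # hypothetical launcher
    args=["-m", "dlc_mcp_server"],      # hypothetical module name
    env={"AWS_REGION": "us-east-1"},    # hypothetical setting
)
print(config_text)
```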

Interact with the DLC MCP server

You’re now ready to start using the Amazon Q CLI with the DLC MCP server. Let’s start with the CLI, as shown in the following screenshot. You can also check the default tools and loaded server tools in the CLI with the /tools command.

In the following sections, we demonstrate three separate use cases using the DLC MCP server.

Run a DLC training container

In this scenario, our goal is to identify a PyTorch base image, launch the image in a local Docker container, and run a simple test script to verify the container.

We start with the prompt “Run Pytorch container for training.”

The MCP server automatically handles the entire workflow: it authenticates with Amazon ECR and pulls the appropriate PyTorch DLC image.
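
For readers who want to see what this step amounts to when done by hand, the following sketch assembles the usual command sequence: obtain a temporary ECR password with the AWS CLI, pipe it into `docker login`, and then pull the image. It only builds and prints the commands; nothing is executed. The account ID, repository name, and tag are placeholders, and this is not the DLC MCP server's implementation.

```python
from typing import List


def ecr_registry(account_id: str, region: str) -> str:
    """Build the private ECR registry hostname for an account and Region."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com"


def login_and_pull_commands(account_id: str, region: str,
                            repository: str, tag: str) -> List[List[str]]:
    """Return the CLI commands for ECR login and image pull, in order.

    The output of the first command (a temporary password) is meant to be
    piped into the standard input of the second command.
    """
    registry = ecr_registry(account_id, region)
    image_uri = f"{registry}/{repository}:{tag}"
    return [
        ["aws", "ecr", "get-login-password", "--region", region],
        ["docker", "login", "--username", "AWS", "--password-stdin", registry],
        ["docker", "pull", image_uri],
    ]


# Placeholder account ID, repository, and tag for illustration only.
commands = login_and_pull_commands(
    account_id="123456789012",
    region="us-west-2",
    repository="pytorch-training",
    tag="<image-tag>",
)
for command in commands:
    print(" ".join(command))
```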

Amazon Q used the GPU image because we didn’t specify the device type. Let’s try asking for a CPU image and see its response. After identifying the image, the server pulls the image from the ECR repository successfully and runs the container in your environment. Amazon Q has built-in tools that handle bash scripting and file operations, and a few other standard tools that speed up the runtime.

After the image is identified, the run_the_container tool from the DLC MCP server is used to start the container locally, and Amazon Q tests it with simple scripts to make sure the container is loading and running the scripts as expected. In our example, our test script checks the PyTorch version.

We further prompt the server to perform a training task on the PyTorch CPU training container using a popular dataset. Amazon Q autonomously selects the CIFAR-10 dataset for this example. Amazon Q gathers the dataset and model information based on its pretrained knowledge without human intervention. Amazon Q prompts the user about the choices it’s making on your behalf. If needed, you can specify the required model or dataset directly in the prompt.

When the scripts are ready for execution, the server runs the training job on the container. After successfully training, it summarizes the training job results along with the model path.

Create a custom DLC with NVIDIA’s NeMo Toolkit

In this scenario, we walk through the process of enhancing an existing DLC with NVIDIA’s NeMo toolkit. NeMo, a powerful framework for conversational AI, is built on PyTorch Lightning and is designed for efficient development of AI models. Our goal is to create a custom Docker image that integrates NeMo into the existing PyTorch GPU training container. This section demonstrates how to create a custom DLC image that combines the PyTorch GPU environment with the advanced capabilities of the NeMo toolkit.

The server invokes the create_custom_dockerfile tool from our MCP server’s image building module. We can use this tool to specify our base image from Amazon ECR and add custom commands to install NeMo.
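
The idea of layering packages and settings on a base image can be sketched as a small Dockerfile renderer. This is an illustration of the pattern under stated assumptions, not the output or logic of create_custom_dockerfile: the `render_dockerfile` helper is hypothetical, the base image URI is a placeholder, and `nemo_toolkit[all]` is assumed to be the package you want (verify the name and extras before use).

```python
import shlex
from typing import Dict, List, Optional


def render_dockerfile(base_image: str,
                      pip_packages: List[str],
                      env: Optional[Dict[str, str]] = None,
                      extra_commands: Optional[List[str]] = None) -> str:
    """Render a Dockerfile that layers packages and settings on a base image."""
    lines = [f"FROM {base_image}"]
    for key, value in (env or {}).items():
        lines.append(f'ENV {key}="{value}"')
    if pip_packages:
        # Quote each requirement so extras such as [all] reach pip unchanged.
        quoted = " ".join(shlex.quote(package) for package in pip_packages)
        lines.append(f"RUN pip install --no-cache-dir {quoted}")
    for command in extra_commands or []:
        lines.append(f"RUN {command}")
    return "\n".join(lines) + "\n"


dockerfile_text = render_dockerfile(
    base_image="<account-id>.dkr.ecr.<region>.amazonaws.com/pytorch-training:<image-tag>",
    pip_packages=["nemo_toolkit[all]"],   # assumed package name and extras
    env={"PYTHONUNBUFFERED": "1"},
)
print(dockerfile_text)
```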

This Dockerfile serves as a blueprint for our custom DLC image, making sure the necessary components are in place. Refer to the Dockerfile in the GitHub repo.

After the custom Dockerfile is created, the server starts building our custom DLC image. To achieve this, Amazon Q uses the build_custom_dlc_image tool in the image building module. This tool streamlines the process by setting up the build environment with specified arguments. This step transforms our base image into a specialized container tailored for NeMo-based AI development.
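
A build with specified arguments ultimately comes down to a `docker build` invocation. The sketch below assembles such a command with optional `--build-arg` values and prints it without running it. The image name and the `BASE_IMAGE` build argument are hypothetical, and the helper is not part of the DLC MCP server.

```python
from typing import Dict, List, Optional


def docker_build_command(tag: str,
                         dockerfile: str = "Dockerfile",
                         context: str = ".",
                         build_args: Optional[Dict[str, str]] = None) -> List[str]:
    """Assemble a `docker build` command with optional build arguments."""
    command = ["docker", "build", "--tag", tag, "--file", dockerfile]
    # Sort the arguments so the generated command is deterministic.
    for key, value in sorted((build_args or {}).items()):
        command += ["--build-arg", f"{key}={value}"]
    command.append(context)  # the build context comes last
    return command


build_command = docker_build_command(
    tag="custom-dlc-nemo:latest",                     # hypothetical image name
    build_args={"BASE_IMAGE": "<base-image-uri>"},    # hypothetical build argument
)
print(" ".join(build_command))
```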

The build command pulls from a specified ECR repository, making sure we’re working with the most up-to-date base image. The image also comes with related packages and libraries to test NeMo; you can specify the requirements in the prompt if required.

NeMo is now ready to use. A quick environment check confirms that our tools are in the toolbox before we begin. You can run a simple Python script in the Docker container that shows you everything you need to know. In the following screenshot, you can see the PyTorch version 2.7.1+cu128 and PyTorch Lightning version 2.5.2. The NeMo modules are loaded and ready for use.
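
An environment check of this kind can be as small as the sketch below, which reports the installed version of each package or notes that it is missing. The distribution names `torch`, `pytorch-lightning`, and `nemo_toolkit` are assumptions about how the packages were installed in the image; adjust them to match your container. This is not the script Amazon Q generated.

```python
from importlib import metadata
from typing import Dict, Iterable, Optional


def installed_versions(distributions: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if absent."""
    versions: Dict[str, Optional[str]] = {}
    for name in distributions:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


# Assumed distribution names; adjust to what the image actually installs.
report = installed_versions(["torch", "pytorch-lightning", "nemo_toolkit"])
for name, version in report.items():
    print(f"{name}: {version or 'not installed'}")
```

Because it reads package metadata instead of importing the modules, the check is quick and does not initialize CUDA; importing the packages afterward is still a useful second step.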

The DLC MCP server has transformed the way we create custom DLC images. Traditionally, setting up environments, managing dependencies, and writing Dockerfiles for AI development was a time-consuming and error-prone process. It often took hours, if not days, to get everything just right. But now, with Amazon Q and the DLC MCP server, you can accomplish this in just a few minutes.

For NeMo-based AI applications, you can focus more on model development and less on infrastructure setup. The standardized process makes it straightforward to move from development to production, and you can be confident that your container will work the same way each time it’s built.

Add the latest version of the DeepSeek model to a DLC

In this scenario, we explore how to enhance an existing PyTorch GPU DLC by adding the DeepSeek model. Unlike our previous example where we added the NeMo toolkit, here we integrate a powerful language model using the latest PyTorch GPU container as our base. Let’s start with the prompt shown in the following screenshot.

Amazon Q interacts with the DLC MCP server to list the DLC images and check for available PyTorch GPU images. After the base image is picked, multiple tools from the DLC MCP server, such as create_custom_dockerfile and build_custom_dlc_image, are used to create and build the Dockerfile.

The Dockerfile for this example sets up our working directories, handles the PyTorch upgrade to 2.7.1 (latest), and sets essential environment variables for DeepSeek integration. The server also includes important Python packages like transformers, accelerate, and Flask for a production-ready setup.

Before diving into the build process, let’s understand how the MCP server prepares the groundwork. When you initiate the process, the server automatically generates several scripts and configuration files. These include:

- A custom Dockerfile tailored to your requirements

- Build scripts for container creation and pushing to Amazon ECR

- Test scripts for post-build verification

- Inference server setup scripts

- Requirement files listing necessary dependencies

The build process first handles authentication with Amazon ECR, establishing a secure connection to the AWS container registry. Then, it either locates your existing repository or creates a new one if needed. In the image building phase, the base PyTorch 2.6.0 image gets transformed with an upgrade to version 2.7.1, complete with CUDA 12.8 support. The DeepSeek Coder 6.7B Instruct model integration happens seamlessly.
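
The locate-or-create step for the repository can be sketched as follows. This illustrates the pattern only and is not the DLC MCP server's code. It assumes a client object that behaves like `boto3.client("ecr")`; the `InMemoryEcr` stand-in and the repository name are hypothetical and exist only so the example runs without AWS access.

```python
def ensure_repository(ecr_client, name: str) -> str:
    """Return the URI of an ECR repository, creating it if it is missing.

    `ecr_client` is expected to behave like `boto3.client("ecr")`.
    """
    try:
        response = ecr_client.describe_repositories(repositoryNames=[name])
        return response["repositories"][0]["repositoryUri"]
    except ecr_client.exceptions.RepositoryNotFoundException:
        response = ecr_client.create_repository(repositoryName=name)
        return response["repository"]["repositoryUri"]


class RepositoryNotFound(Exception):
    """Stand-in for the client's RepositoryNotFoundException."""


class InMemoryEcr:
    """Hypothetical test double that mimics the two ECR calls used above."""

    class exceptions:
        RepositoryNotFoundException = RepositoryNotFound

    def __init__(self) -> None:
        self.repositories = {}

    def describe_repositories(self, repositoryNames):
        name = repositoryNames[0]
        if name not in self.repositories:
            raise RepositoryNotFound(name)
        return {"repositories": [{"repositoryUri": self.repositories[name]}]}

    def create_repository(self, repositoryName):
        uri = f"registry.example/{repositoryName}"
        self.repositories[repositoryName] = uri
        return {"repository": {"repositoryUri": uri}}


client = InMemoryEcr()
first = ensure_repository(client, "custom-dlc")    # creates the repository
second = ensure_repository(client, "custom-dlc")   # finds the existing one
print(first, second)
```

With boto3 installed and credentials configured, the same function could be called with a real client in place of the stand-in.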

After the build is successful, we move to the testing phase using the automatically generated test scripts. These scripts help verify both the basic functionality and production readiness of the DeepSeek container. To make sure our container is ready for deployment, we spin it up using the code shown in the following screenshot.

The container initialization takes about 3 seconds, a remarkably quick startup time that’s crucial for production environments. The server performs a simple inference check using a curl command that sends a POST request to our local endpoint. This test is particularly important because it verifies not just the model’s functionality, but also the entire infrastructure we’ve set up.
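
The same kind of check can be written in Python with only the standard library. The sketch below builds a JSON POST request and, separately, provides a helper that would send it. The URL, route, and payload shape are hypothetical, because the actual endpoint depends on the generated inference server; the example only constructs the request and does not send it.

```python
import json
import urllib.request


def build_inference_request(url: str, prompt: str) -> urllib.request.Request:
    """Build a JSON POST request for a local inference endpoint."""
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def check_inference(url: str, prompt: str, timeout: float = 30.0) -> dict:
    """Send the request and return the decoded JSON response."""
    request = build_inference_request(url, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Hypothetical endpoint and payload; the request is built here but not sent.
request = build_inference_request("http://localhost:8080/generate", "def add(a, b):")
print(request.get_method(), request.full_url)
```

Calling `check_inference` with the container running would exercise the full path from HTTP server to model, which is what makes this kind of test useful as a deployment gate.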

We have successfully created a powerful inference image that uses the DLC PyTorch container’s performance optimizations and GPU acceleration while seamlessly integrating DeepSeek’s advanced language model capabilities. The result is more than just a development tool: it’s a production-ready solution complete with health checks, error handling, and optimized inference performance. This makes it ideal for deployment in environments where reliability and performance are critical. This integration creates new opportunities for developers and organizations looking to implement advanced language models in their applications.

Conclusion

The combination of DLC MCP and Amazon Q transforms what used to be weeks of DevOps work into a conversation with your tools. This not only saves time and reduces errors, but also helps teams focus on their core ML tasks rather than infrastructure management.

For more information about Amazon Q Developer, refer to the Amazon Q Developer product page to find video resources and blog posts. You can share your thoughts with us in the comments section or in the issues section of the project’s GitHub repository.

About the authors

Sathya Balakrishnan is a Sr. Cloud Architect in the Professional Services team at AWS, specializing in data and ML solutions. He works with US federal financial clients. He is passionate about building pragmatic solutions to solve customers’ business problems. In his spare time, he enjoys watching movies and hiking with his family.

Jyothirmai Kottu is a Software Development Engineer in the Deep Learning Containers team at AWS, specializing in building and maintaining robust AI and ML infrastructure. Her work focuses on enhancing the performance, reliability, and usability of DLCs, which are crucial tools for AI/ML practitioners working with AI frameworks. She is passionate about making AI/ML tools more accessible and efficient for developers around the world. Outside of her professional life, she enjoys a good coffee, yoga, and exploring new places with family and friends.

Arindam Paul is a Sr. Product Manager in the SageMaker AI team at AWS responsible for Deep Learning workloads on SageMaker, EC2, EKS, and ECS. He is passionate about using AI to solve customer problems. In his spare time, he enjoys working out and gardening.
