Many enterprises are burdened with mission-critical systems built on outdated technologies that have become increasingly difficult to maintain and extend.

This post demonstrates how you can use the Amazon Bedrock Converse API with Amazon Nova Premier within an agentic workflow to systematically migrate legacy C code to modern Java/Spring framework applications. By breaking down the migration process into specialized agent roles and implementing robust feedback loops, organizations can accomplish the following:

- Reduce migration time and cost – Automation handles repetitive conversion tasks while human engineers focus on high-value work.

- Improve code quality – Specialized validation agents make sure that the migrated code follows modern best practices.

- Minimize risk – The systematic approach prevents critical business logic from being lost during migration.

- Enable cloud integration – The resulting Java/Spring code can seamlessly integrate with AWS services.

Challenges

Code migration from legacy systems to modern frameworks presents several significant challenges that require a balanced approach combining AI capabilities with human expertise:

- Language paradigm differences – Converting C code to Java involves navigating fundamental differences in memory management, error handling, and programming paradigms. C's procedural nature and direct memory manipulation contrast sharply with Java's object-oriented approach and automatic memory management. Although AI can handle many syntactic transformations automatically, developers must review and validate the semantic correctness of these conversions.

- Architectural complexity – Legacy systems often feature complex interdependencies between components that require human analysis and planning. In our case, the C code base contained intricate relationships between modules, with some TPs (Transaction Programs) connected to as many as 12 other modules. Human developers must create dependency mappings and determine the migration order, typically starting from leaf nodes with minimal dependencies. AI can assist in identifying these relationships, but strategic decisions about migration sequencing require human judgment.

- Maintaining business logic – Making sure critical business logic is accurately preserved during translation requires continuous human oversight. Our analysis showed that although automatic migration is highly successful for simple, well-structured code, complex business logic embedded in larger files (over 700 lines) requires careful human review and often manual refinement to prevent errors or omissions.

- Inconsistent naming and structures – Legacy code often contains inconsistent naming conventions and structures that must be standardized during migration. AI can handle many routine transformations (converting alphanumeric IDs in function names, transforming C-style error codes into Java exceptions, and converting C structs into Java classes), but human developers must establish naming standards and review edge cases where automated conversion might be ambiguous.

- Integration complexity – After individual files are converted, human-guided integration is essential for creating a cohesive application. Variable names that were consistent across the original C files often become inconsistent during individual file conversion, so developers must perform reconciliation work and make sure modules communicate correctly with each other.

- Quality assurance – Validating that converted code maintains functional equivalence with the original requires a combination of automated testing and human verification. This is particularly critical for complex business logic, where subtle differences can lead to significant issues. Developers must design comprehensive test suites and perform thorough code reviews to confirm migration accuracy.

These challenges call for a systematic approach that combines the pattern recognition capabilities of large language models (LLMs) with structured workflows and essential human oversight. The key is using AI to handle routine transformations while keeping humans in the loop for strategic decisions, validation of complex logic, and quality assurance.
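The following minimal Python sketch illustrates the leaf-first migration ordering described under architectural complexity. It groups modules into conversion waves, and each wave depends only on modules converted in earlier waves. It is not the authors' implementation. It assumes that a dependency map already exists, produced either by the code analysis agent or by developers, as a dictionary from each module to the modules it depends on. The function and module names are hypothetical. The sketch relies on the standard library's graphlib.TopologicalSorter (Python 3.9+).

```python
from graphlib import CycleError, TopologicalSorter


def migration_waves(dependencies: dict[str, set[str]]) -> list[list[str]]:
    """Group modules into waves; every module's dependencies sit in earlier waves."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises CycleError if modules depend on each other circularly
    waves: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # modules whose dependencies are all scheduled
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Hypothetical modules: a transaction program, its DBIO file, and two utilities.
example = {
    "tp_order": {"util_date", "dbio_order"},
    "dbio_order": {"util_db"},
}
print(migration_waves(example))
# → [['util_date', 'util_db'], ['dbio_order'], ['tp_order']]
```

Circular dependencies raise CycleError. These cycles are the cases where the sequencing decision needs human judgment.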

Resolution overview

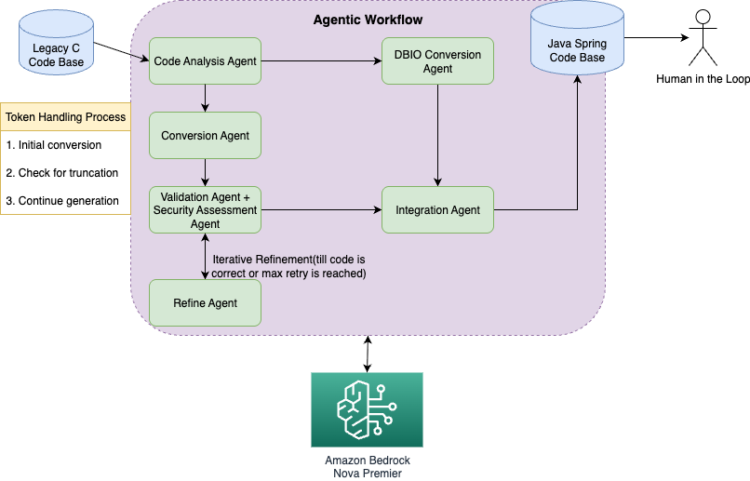

The solution uses the Amazon Bedrock Converse API with Amazon Nova Premier to convert legacy C code to modern Java/Spring framework code through a systematic agentic workflow. This approach breaks down the complex migration process into manageable steps, allowing for iterative refinement and handling of token limitations. The solution architecture consists of several key components:

- Code analysis agent – Analyzes C code structure and dependencies

- Conversion agent – Transforms C code to Java/Spring code

- Security assessment agent – Identifies vulnerabilities in legacy and migrated code

- Validation agent – Verifies conversion completeness and accuracy

- Refine agent – Rewrites the code based on feedback from the validation agent

- Integration agent – Combines individually converted files

Our agentic workflow is implemented using the Strands Agents framework combined with the Amazon Bedrock Converse API for robust agent orchestration and LLM inference. The architecture (as shown in the following diagram) uses a hybrid approach that combines the session management capabilities of Strands with custom BedrockInference handling for token continuation.

The solution uses the following core technologies:

- Strands Agents framework (v1.1.0+) – Provides agent lifecycle management, session handling, and structured agent communication

- Amazon Bedrock Converse API – Powers LLM inference with the Amazon Nova Premier model

- Custom BedrockInference class – Handles token limitations through text prefilling and response continuation

- Asyncio-based orchestration – Enables concurrent processing and non-blocking agent execution

The workflow consists of the following steps:

1. Code analysis:

- Code analysis agent – Performs input code analysis to understand the conversion requirements. Examines the C code base structure, identifies dependencies, and assesses complexity.

- Framework integration – Uses Strands for session management and BedrockInference for analysis.

- Output – JSON-structured analysis with dependency mapping and conversion recommendations.

2. File categorization and metadata creation:

- Implementation – FileMetadata data class with complexity assessment.

- Categories – Simple (0–300 lines), Medium (300–700 lines), Complex (over 700 lines).

- File types – Standard C files, header files, and database I/O (DBIO) files.

3. Individual file conversion:

- Conversion agent – Performs code migration on individual files based on the information from the code analysis agent.

- Token handling – Uses the stitch_output() method to handle large files that exceed token limits.

4. Security assessment phase:

- Security assessment agent – Performs comprehensive vulnerability analysis on both the legacy C code and the converted Java code.

- Risk categorization – Classifies security issues by severity (Critical, High, Medium, Low).

- Mitigation recommendations – Provides specific code fixes and security best practices.

- Output – Detailed security report with actionable remediation steps.

5. Validation and feedback loop:

- Validation agent – Analyzes conversion completeness and accuracy.

- Refine agent – Applies iterative improvements based on validation results.

- Iteration control – A maximum of 5 feedback iterations, with early termination when results are satisfactory.

- Session persistence – The Strands framework maintains conversation context across iterations.

6. Integration and finalization:

- Integration agent – Attempts to combine individually converted files.

- Consistency resolution – Standardizes variable naming and provides proper dependencies.

- Output generation – Creates a cohesive Java/Spring application structure.

7. DBIO conversion (specialized):

- Purpose – Converts SQL DBIO C source code to MyBatis XML mapper files.

- Framework – Uses the same Strands and BedrockInference hybrid approach for consistency.
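Step 2 can be sketched as a small data class plus two classification helpers. This is a hypothetical illustration rather than the repository's FileMetadata class. The field names are assumptions. Files of exactly 300 or 700 lines go into the lower category, because the post's ranges share their endpoints. DBIO files are detected with an assumed heuristic that looks for embedded EXEC SQL statements.

```python
from dataclasses import dataclass
from pathlib import Path


@dataclass
class FileMetadata:
    path: str
    file_type: str   # "header", "dbio", or "c"
    line_count: int
    complexity: str  # "simple", "medium", or "complex"


def categorize_complexity(line_count: int) -> str:
    """Map a line count onto the Simple/Medium/Complex buckets."""
    if line_count <= 300:
        return "simple"
    if line_count <= 700:
        return "medium"
    return "complex"


def detect_file_type(path: str, source: str) -> str:
    if Path(path).suffix == ".h":
        return "header"
    if "EXEC SQL" in source.upper():  # assumed heuristic for DBIO files
        return "dbio"
    return "c"


def build_metadata(path: str, source: str) -> FileMetadata:
    line_count = len(source.splitlines())
    return FileMetadata(
        path=path,
        file_type=detect_file_type(path, source),
        line_count=line_count,
        complexity=categorize_complexity(line_count),
    )
```

Keeping the metadata in a plain data class makes it easy to group files by type and complexity before any model call is made.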

The solution includes the following key orchestration features:

- Session persistence – Each conversion maintains session state across agent interactions.

- Error recovery – Comprehensive error handling with graceful degradation.

- Performance monitoring – Built-in metrics for processing time, iteration counts, and success rates.

- Token continuation – Seamless handling of large files through response stitching.

This framework-specific implementation provides reliable, scalable code conversion while maintaining the flexibility to handle diverse C code base structures and complexities.

Prerequisites

Before implementing this code conversion solution, make sure you have the following components configured:

- AWS environment:

- AWS account with appropriate permissions for Amazon Bedrock and access to the Amazon Nova Premier model

- Amazon Elastic Compute Cloud (Amazon EC2) instance (t3.medium or larger) for development and testing, or a development environment on your local machine

- Development setup:

- Python 3.10+ installed with the Boto3 SDK and Strands Agents

- AWS Command Line Interface (AWS CLI) configured with appropriate credentials and AWS Region

- Git for version control of the legacy code base and converted code

- Text editor or integrated development environment (IDE) capable of handling both C and Java code bases

- Source and target code base requirements:

- C source code organized in a structured directory format

- Java 11+ and Maven/Gradle build tools

- Spring Framework 5.x or Spring Boot 2.x+ dependencies

The source code and prompts used in this post are available in the GitHub repo.

Agent-based conversion process

The solution uses a sophisticated multi-agent system implemented with the Strands framework, where each agent specializes in a specific aspect of the code conversion process. This distributed approach provides thorough analysis, accurate conversion, and comprehensive validation while maintaining the flexibility to handle diverse code structures and complexities.

Strands framework integration

Each agent extends the BaseStrandsConversionAgent class, which provides a hybrid architecture combining Strands session management with custom BedrockInference capabilities.
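The repository's BaseStrandsConversionAgent is not reproduced here. The following hypothetical base class is a rough, framework-free sketch of the same idea. It keeps a per-agent message history, which stands in for Strands session management, and sends that history using the Converse API request shape (modelId, system, messages, inferenceConfig). The converse callable is injected so the class can be exercised without AWS credentials. In practice, it would be the converse method of a boto3 bedrock-runtime client.

```python
from typing import Any, Callable

ConverseFn = Callable[..., dict[str, Any]]


class BaseConversionAgent:
    """Hypothetical base class: one system prompt plus a running conversation."""

    def __init__(self, converse: ConverseFn, model_id: str, system_prompt: str,
                 max_tokens: int = 4096, temperature: float = 0.0) -> None:
        self.converse = converse
        self.model_id = model_id
        self.system_prompt = system_prompt
        self.inference_config = {"maxTokens": max_tokens, "temperature": temperature}
        self.history: list[dict[str, Any]] = []  # stands in for session state

    def ask(self, prompt: str) -> str:
        """Send a user turn with the full history and record the assistant reply."""
        self.history.append({"role": "user", "content": [{"text": prompt}]})
        response = self.converse(
            modelId=self.model_id,
            system=[{"text": self.system_prompt}],
            messages=self.history,
            inferenceConfig=self.inference_config,
        )
        message = response["output"]["message"]
        self.history.append(message)
        return "".join(block.get("text", "") for block in message["content"])

    def reset(self) -> None:
        """Start a fresh session, for example before converting the next file."""
        self.history.clear()
```

Each specialized agent could then subclass this class with its own system prompt. For example, a conversion agent's system prompt would assign the senior software developer role.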

Code analysis agent

The code analysis agent examines the structure of the C code base, identifying dependencies between files and determining the optimal conversion strategy. This agent helps prioritize which files to convert first and identifies potential challenges. The prompt template for the code analysis agent is included in the GitHub repo.
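The code analysis agent returns a JSON-structured analysis (step 1 of the workflow). Model replies sometimes wrap JSON in a markdown code fence or surround it with prose. A parsing step like the following hypothetical sketch makes the output safer to consume. The required keys dependencies and recommendations are an assumed schema, not the one used in the repository.

```python
import json
import re

FENCE = "`" * 3  # built at runtime so this snippet can sit inside a markdown fence
FENCED_JSON = re.compile(FENCE + r"(?:json)?\s*(\{.*?\})\s*" + FENCE, re.DOTALL)
REQUIRED_KEYS = {"dependencies", "recommendations"}  # assumed schema


def parse_analysis(reply: str) -> dict:
    """Extract and check the JSON object in a code analysis agent reply."""
    match = FENCED_JSON.search(reply)
    if match:
        payload = match.group(1)
    else:
        start, end = reply.find("{"), reply.rfind("}")
        if start == -1 or end < start:
            raise ValueError("no JSON object found in reply")
        payload = reply[start:end + 1]
    analysis = json.loads(payload)  # raises json.JSONDecodeError (a ValueError)
    if not isinstance(analysis, dict):
        raise ValueError("analysis must be a JSON object")
    missing = REQUIRED_KEYS - set(analysis)
    if missing:
        raise ValueError(f"analysis is missing keys: {sorted(missing)}")
    return analysis
```

Failing loudly on a malformed analysis is usually preferable to passing an incomplete dependency map to the later agents.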

Conversion agent

The conversion agent handles the actual transformation of C code to Java/Spring code. This agent is assigned the role of a senior software developer with expertise in both C and Java/Spring frameworks. The prompt template for the conversion agent is included in the GitHub repo.
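The following hypothetical helper shows one way to combine role assignment with explicit transformation rules when building a conversion request. The rules paraphrase patterns mentioned in this post: structs to classes, functions to methods, and error codes to exceptions. They are not the actual prompt template. The c_code tags are an assumed delimiter convention.

```python
ROLE = ("You are a senior software developer with expertise in both C "
        "and Java/Spring frameworks.")

# Illustrative rules paraphrased from this post, not the repository's prompt.
DEFAULT_RULES: tuple[str, ...] = (
    "Convert each C struct into a Java class with private fields and accessors.",
    "Convert C functions into methods of an appropriate Java class.",
    "Replace C-style integer error codes with Java exceptions.",
    "Preserve all business logic, including every branch and edge case.",
)


def build_conversion_prompt(c_source: str, analysis_summary: str,
                            rules: tuple[str, ...] = DEFAULT_RULES) -> tuple[str, str]:
    """Return (system_prompt, user_prompt) for converting one C file."""
    numbered = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, start=1))
    user_prompt = (
        f"Code analysis summary:\n{analysis_summary}\n\n"
        f"Transformation rules:\n{numbered}\n\n"
        "Convert the following C code to Java/Spring and return only Java code.\n"
        f"<c_code>\n{c_source}\n</c_code>"
    )
    return ROLE, user_prompt
```

The returned pair maps directly onto the system and messages fields of a Converse request.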

Security assessment agent

The security assessment agent performs comprehensive vulnerability analysis on the original C code and the converted Java code, identifying potential security risks and providing specific mitigation strategies. This agent is crucial for making sure that security vulnerabilities are not carried forward during migration and that the new code follows security best practices. The prompt template for the security assessment agent is included in the GitHub repo.
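The following sketch makes the severity categorization concrete. It parses findings into a small data model, orders the report from Critical to Low, and reports whether any finding at or above a chosen severity remains. The JSON field names (severity, location, issue, mitigation) and the needs_attention threshold are assumptions for illustration. In the described workflow, development teams review the security report rather than gating on it automatically.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


@dataclass
class Finding:
    severity: Severity
    location: str
    issue: str
    mitigation: str


def parse_findings(raw: list[dict]) -> list[Finding]:
    """Convert agent output (assumed to be a list of JSON objects) into Findings."""
    return [
        Finding(Severity[item["severity"].upper()], item["location"],
                item["issue"], item["mitigation"])
        for item in raw
    ]


def security_report(findings: list[Finding]) -> str:
    """One line per finding, most severe first."""
    ordered = sorted(findings, key=lambda finding: finding.severity, reverse=True)
    return "\n".join(
        f"[{f.severity.name}] {f.location}: {f.issue} -> {f.mitigation}" for f in ordered
    )


def needs_attention(findings: list[Finding], threshold: Severity = Severity.HIGH) -> bool:
    return any(finding.severity >= threshold for finding in findings)
```

Using an IntEnum lets severities be compared and sorted directly, which keeps the ordering and threshold logic short.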

Validation agent

The validation agent reviews the converted code to identify missing or incorrectly converted components. This agent provides detailed feedback that is used in subsequent conversion iterations. The prompt template for the validation agent is included in the GitHub repo.

Feedback loop implementation with refine agent

The feedback loop is a critical component that enables iterative refinement of the converted code. This process involves the following steps:

- Initial conversion by the conversion agent.

- Security assessment by the security assessment agent.

- Validation by the validation agent.

- Feedback incorporation by the refine agent (incorporating both validation and security feedback).

- Repeat until satisfactory results are achieved.

The refine agent incorporates security vulnerability fixes alongside functional improvements. Security assessment results are provided to development teams for final review and approval before production deployment. The prompt template for code refinement is included in the GitHub repo.
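The loop described above can be sketched with each agent represented by a plain callable, so the control flow is visible. The code is converted once. Then security assessment, validation, and refinement alternate for at most five iterations, stopping early when validation passes. The callable signatures and the LoopResult type are hypothetical. In the actual solution, these calls go to Strands-managed agents.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class LoopResult:
    code: str
    iterations: int
    passed: bool
    feedback_history: list[str] = field(default_factory=list)


def run_feedback_loop(
    c_source: str,
    convert: Callable[[str], str],
    assess_security: Callable[[str, str], str],
    validate: Callable[[str, str], tuple[bool, str]],
    refine: Callable[[str, str, str], str],
    max_iterations: int = 5,
) -> LoopResult:
    """Convert once, then validate and refine until validation passes or the limit is hit."""
    if max_iterations < 1:
        raise ValueError("max_iterations must be at least 1")
    java_code = convert(c_source)
    history: list[str] = []
    for iteration in range(1, max_iterations + 1):
        security_feedback = assess_security(c_source, java_code)
        passed, validation_feedback = validate(c_source, java_code)
        history.append(validation_feedback)
        if passed or iteration == max_iterations:
            break  # early termination, or out of iterations
        java_code = refine(java_code, validation_feedback, security_feedback)
    return LoopResult(java_code, iteration, passed, history)
```

Refinement is skipped on the final iteration, so the returned code is always the version that was last validated.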

Integration agent

The integration agent combines individually converted Java files into a cohesive application, resolving inconsistencies in variable naming and providing proper dependencies. The prompt template for the integration agent is included in the GitHub repo.
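A simple deterministic pass, run before or alongside the integration agent, can surface naming drift across converted files, such as custId in one class and cust_id in another. This hypothetical sketch groups identifiers that differ only by case or underscores. It then suggests mapping each minority spelling to the most frequent one. It scans raw text, including strings and comments, so it can flag legitimate pairs such as a class name and a variable spelled with the same letters. Its output is a list for review, not a set of automatic renames.

```python
import re
from collections import Counter, defaultdict

IDENTIFIER = re.compile(r"\b[A-Za-z_][A-Za-z0-9_]*\b")


def naming_conflicts(sources: dict[str, str]) -> dict[str, Counter]:
    """Group identifiers across files that differ only by case or underscores."""
    groups: defaultdict[str, Counter] = defaultdict(Counter)
    for text in sources.values():
        for name in IDENTIFIER.findall(text):
            groups[name.replace("_", "").lower()][name] += 1
    return {key: spellings for key, spellings in groups.items() if len(spellings) > 1}


def suggest_renames(conflicts: dict[str, Counter]) -> dict[str, str]:
    """Map each minority spelling to the most frequent one.

    Ties prefer fewer underscores (Java camelCase style), then alphabetical order.
    """
    renames: dict[str, str] = {}
    for spellings in conflicts.values():
        canonical = min(spellings, key=lambda s: (-spellings[s], s.count("_"), s))
        renames.update({s: canonical for s in spellings if s != canonical})
    return renames
```

The suggested mapping can be passed to the integration agent as context, or reviewed by a developer who establishes the naming standard.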

DBIO conversion agent

This specialized agent handles the conversion of SQL DBIO C source code to XML files compatible with the persistence framework (MyBatis) used in the Java Spring application. The prompt template for the DBIO conversion agent is included in the GitHub repo.
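This agent's target is a MyBatis mapper file. The following sketch builds one with xml.etree.ElementTree from SQL statements that are assumed to have already been extracted from a DBIO C file. It rewrites embedded-SQL host variables such as :cust_id into MyBatis #{cust_id} placeholders. The namespace, statement IDs, and SqlStatement type are hypothetical. Real DBIO code with SELECT ... INTO clauses, cursors, or indicator variables needs handling that this sketch omits.

```python
import re
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import Optional

DOCTYPE = ('<!DOCTYPE mapper PUBLIC "-//mybatis.org//DTD Mapper 3.0//EN" '
           '"https://mybatis.org/dtd/mybatis-3-mapper.dtd">')
HOST_VARIABLE = re.compile(r":([A-Za-z_][A-Za-z0-9_]*)")


@dataclass
class SqlStatement:
    kind: str                          # "select", "insert", "update", or "delete"
    statement_id: str                  # becomes the mapper method name
    sql: str                           # embedded SQL using host variables like :cust_id
    result_type: Optional[str] = None  # Java type for select results


def to_mybatis_sql(sql: str) -> str:
    """Rewrite host variables (:name) as MyBatis parameter placeholders (#{name})."""
    return HOST_VARIABLE.sub(r"#{\1}", sql.strip())


def build_mapper(namespace: str, statements: list[SqlStatement]) -> str:
    mapper = ET.Element("mapper", namespace=namespace)
    for stmt in statements:
        element = ET.SubElement(mapper, stmt.kind, id=stmt.statement_id)
        if stmt.result_type:
            element.set("resultType", stmt.result_type)
        element.text = to_mybatis_sql(stmt.sql)
    ET.indent(mapper)  # pretty-print; requires Python 3.9+
    body = ET.tostring(mapper, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + DOCTYPE + "\n" + body + "\n"
```

ElementTree escapes characters such as < in the SQL text as &lt;, which MyBatis reads back correctly. A CDATA section is a common alternative when mappers are written by hand.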

Handling token limitations

To address token limitations in the Amazon Bedrock Converse API, we implemented a text prefilling approach that allows the model to continue generating code where it left off. This approach is particularly important for large files whose converted output exceeds the model's output token limit, and it represents a key technical innovation in our Strands-based implementation.

Technical implementation

The BedrockInference class with continuation support is included in the GitHub repo.
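The repository's BedrockInference class is not reproduced here. The following minimal sketch shows the general continuation technique. It calls the Converse API, and while stopReason is max_tokens, it sends the original prompt again with an assistant turn prefilled with the last few lines generated so far. The model then resumes from that point. The function name, parameters, and return shape are hypothetical. The converse callable is injected, for example boto3.client("bedrock-runtime").converse.

```python
from typing import Any, Callable

ConverseFn = Callable[..., dict[str, Any]]


def converse_with_continuation(
    converse: ConverseFn,
    model_id: str,
    prompt: str,
    system_prompt: str = "",
    max_tokens: int = 4096,
    context_lines: int = 20,
    max_continuations: int = 10,
) -> tuple[str, bool]:
    """Return (generated_text, finished); finished is False if the limit was reached."""
    generated = ""
    finished = False
    for _ in range(max_continuations + 1):
        messages: list[dict[str, Any]] = [{"role": "user", "content": [{"text": prompt}]}]
        if generated:
            # Prefill the assistant turn with the tail of the output so far,
            # so the model resumes from there instead of starting over.
            tail = "\n".join(generated.splitlines()[-context_lines:])
            messages.append({"role": "assistant", "content": [{"text": tail}]})
        request: dict[str, Any] = {
            "modelId": model_id,
            "messages": messages,
            "inferenceConfig": {"maxTokens": max_tokens},
        }
        if system_prompt:
            request["system"] = [{"text": system_prompt}]
        response = converse(**request)
        content = response["output"]["message"]["content"]
        generated += "".join(block.get("text", "") for block in content)
        finished = response["stopReason"] != "max_tokens"
        if finished:
            break
        # Trim trailing whitespace so the next prefill and the output end identically.
        generated = generated.rstrip()
    return generated, finished
```

The model ID for Amazon Nova Premier depends on your Region and access method, for example a cross-Region inference profile such as us.amazon.nova-premier-v1:0. Confirm the exact ID in the Amazon Bedrock console before use.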

Continuation strategy details

The continuation strategy consists of the following steps:

- Response monitoring – The system monitors the stopReason field in Amazon Bedrock responses. When stopReason equals max_tokens, continuation is triggered automatically. This makes sure that no generated code is lost because of token limitations.

- Context preservation – The system extracts the last few lines of generated code as continuation context. It uses text prefilling to maintain code structure and formatting, preserving variable names, function signatures, and code patterns across continuations.

- Response stitching – The partial responses from each continuation are combined into a single output by the stitch_output() method.
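The post does not show the repository's stitch_output() method. One plausible stitching strategy is sketched here under that assumption. It joins continuation chunks while removing any text that a continuation repeats from the end of the previous output, which can happen when the model is given the last few lines as context. The function name and the min_overlap and max_overlap parameters are hypothetical. The minimum overlap length keeps short fragments, such as a closing brace, that legitimately repeat.

```python
def stitch_chunks(chunks: list[str], min_overlap: int = 20, max_overlap: int = 2000) -> str:
    """Concatenate chunks, dropping any prefix of a chunk that repeats the end of the output."""
    stitched = ""
    for chunk in chunks:
        overlap = 0
        longest = min(max_overlap, len(stitched), len(chunk))
        # Try the longest candidate overlap first; ignore overlaps shorter than min_overlap.
        for size in range(longest, min_overlap - 1, -1):
            if stitched.endswith(chunk[:size]):
                overlap = size
                break
        stitched += chunk[overlap:]
    return stitched
```

Character-level matching also handles chunks that end mid-line. The stitched file should still be compiled and reviewed, because any overlap heuristic can misjudge repeated code.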

Optimizing conversion quality

Through our experiments, we identified several factors that significantly affect conversion quality:

- File size management – Files with more than 300 lines of code benefit from being broken into smaller logical units before conversion.

- Focused conversion – Converting different file types (C, header, DBIO) separately yields better results because each file type has distinct conversion patterns. During conversion, C functions are transformed into Java methods within classes, and C structs become Java classes. However, because files are converted individually without cross-file context, achieving optimal object-oriented design might require human intervention to consolidate related functionality, establish proper class hierarchies, and enforce appropriate encapsulation across the converted code base.

- Iterative refinement – Multiple feedback loops (4–5 iterations) produce more complete conversions.

- Role assignment – Assigning the model a specific role (senior software developer) improves output quality.

- Detailed instructions – Providing specific transformation rules for common patterns improves consistency.
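The file size guidance can be applied with a preprocessing step like the following sketch. It splits C source into chunks of at most a given number of lines, cutting only after a line that closes a top-level block, such as the end of a function or struct. This is a hypothetical helper, not part of the described solution. Its brace counting is naive and ignores braces inside strings, comments, and macros, so a parser-based approach would be more robust for production code bases. A single block longer than the limit is kept whole.

```python
def split_c_source(source: str, max_lines: int = 300) -> list[str]:
    """Split C source into chunks of at most max_lines lines at top-level block ends."""
    chunks: list[str] = []
    current: list[str] = []  # lines of the chunk being built
    pending: list[str] = []  # lines since the last top-level block ended
    depth = 0
    for line in source.splitlines(keepends=True):
        pending.append(line)
        depth += line.count("{") - line.count("}")  # naive: ignores strings and comments
        if depth == 0 and line.strip().endswith(("}", "};")):
            # A top-level block just closed; start a new chunk if this one would overflow.
            if current and len(current) + len(pending) > max_lines:
                chunks.append("".join(current))
                current = []
            current.extend(pending)
            pending = []
    current.extend(pending)  # trailing lines after the last block
    if current:
        chunks.append("".join(current))
    return chunks
```

Because only lines that close a block count as cut points, a function signature always stays in the same chunk as its body.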

Assumptions

This migration strategy makes the following key assumptions:

- Code quality – Legacy C code follows reasonable coding practices with discernible structure. Obfuscated or poorly structured code might require preprocessing before automated conversion.

- Scope limitations – This approach targets business logic conversion rather than low-level system code. C code with hardware interactions or platform-specific features might require manual intervention.

- Test coverage – Comprehensive test cases exist for the legacy application to validate functional equivalence after migration. Without adequate tests, additional validation steps are necessary.

- Domain knowledge – Although the agentic workflow reduces the need for expertise in both C and Java, access to subject matter experts who understand the business domain is required to validate that critical business logic is preserved.

- Phased migration – The approach assumes that an incremental migration strategy is acceptable, in which components can be converted and validated individually rather than through a full project-level migration.

Results and performance

To evaluate the effectiveness of our migration approach powered by Amazon Nova Premier, we measured performance across enterprise-grade code bases representing typical customer scenarios. Our assessment focused on two success factors: structural completeness (preservation of all business logic and functions) and framework compliance (adherence to Spring Boot best practices and conventions).

Migration accuracy by code base complexity

The agentic workflow's effectiveness varied with file complexity, and subject matter experts validated all results. The following table summarizes the results.

| File Size Category | Structural Completeness | Framework Compliance | Average Processing Time |
|---|---|---|---|
| Small (0–300 lines) | 93% | 100% | 30–40 seconds |
| Medium (300–700 lines) | 81%* | 91%* | 7 minutes |
| Large (more than 700 lines) | 62%* | 84%* | 21 minutes |

*After multiple feedback cycles

Key insights for enterprise adoption

These results reveal an important pattern: the agentic approach excels at handling the bulk of migration work (small to medium files) while still providing significant value for complex files that require human oversight. The result is a hybrid approach in which AI handles routine conversions and security assessments, and developers focus on integration and architectural decisions.

Conclusion

Our solution demonstrates that the Amazon Bedrock Converse API with Amazon Nova Premier, when implemented within an agentic workflow, can effectively convert legacy C code to modern Java/Spring framework code. The approach handles complex code structures, manages token limitations, and produces high-quality conversions with minimal human intervention. The solution breaks down the conversion process into specialized agent roles, implements robust feedback loops, and handles token limitations through continuation strategies. This approach accelerates the migration process, improves code quality, and reduces the potential for errors. Try out the solution for your own use case, and share your feedback and questions in the comments.

About the authors

Aditya Prakash is a Senior Data Scientist at the Amazon Generative AI Innovation Center. He helps customers use AWS AI/ML services to solve business challenges through generative AI solutions. Specializing in code transformation, RAG systems, and multimodal applications, Aditya enables organizations to implement practical AI solutions across diverse industries.

Jihye Seo is a Senior Deep Learning Architect who specializes in designing and implementing generative AI solutions. Her expertise spans model optimization, distributed training, RAG systems, AI agent development, and real-time data pipeline construction across the manufacturing, healthcare, gaming, and e-commerce sectors. As an AI/ML consultant, Jihye has delivered production-ready solutions for clients, including smart factory control systems, predictive maintenance platforms, demand forecasting models, recommendation engines, and MLOps frameworks.

Yash Shah is a Science Manager in the AWS Generative AI Innovation Center. He and his team of applied scientists, architects, and engineers work on a range of machine learning use cases in healthcare, sports, automotive, and manufacturing, helping customers realize the art of the possible with GenAI. Yash is a graduate of Purdue University, specializing in human factors and statistics. Outside of work, Yash enjoys photography, hiking, and cooking.
