As organizations increasingly adopt AI capabilities across their applications, centralized management, security, and cost control of AI model access become required steps in scaling AI solutions. The Generative AI Gateway on AWS guidance addresses these challenges by providing guidance for a unified gateway that supports multiple AI providers while offering comprehensive governance and monitoring capabilities.

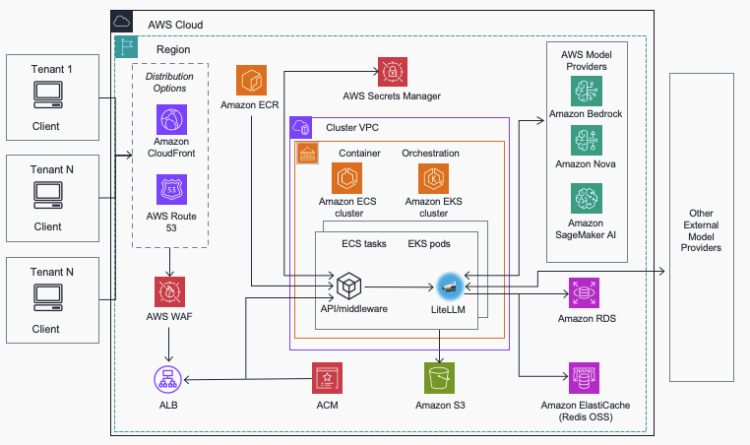

The Generative AI Gateway is a reference architecture for enterprises looking to implement end-to-end generative AI solutions featuring multiple models, data-enriched responses, and agent capabilities in a self-hosted way. This guidance combines the broad model access of Amazon Bedrock, the unified developer experience of Amazon SageMaker AI, and the robust management capabilities of LiteLLM, all while supporting customer access to models from external model providers in a more secure and reliable manner.

LiteLLM is an open source project that addresses common challenges faced by customers deploying generative AI workloads. LiteLLM simplifies multi-provider model access while standardizing production operational requirements, including cost tracking, observability, prompt management, and more. In this post, we introduce how the Multi-Provider Generative AI Gateway reference architecture provides guidance for deploying LiteLLM into an AWS environment for production generative AI workload management and governance.

The problem: Managing multi-provider AI infrastructure

Organizations building with generative AI face several complex challenges as they scale their AI initiatives:

- Provider fragmentation: Teams often need access to different AI models from various providers (Amazon Bedrock, Amazon SageMaker AI, OpenAI, Anthropic, and others), each with different APIs, authentication methods, and billing models.

- Decentralized governance model: Without a unified access point, organizations struggle to implement consistent security policies, usage monitoring, and cost controls across different AI services.

- Operational complexity: Managing multiple access paradigms, ranging from AWS Identity and Access Management (IAM) roles to API keys, along with model-specific rate limits and failover strategies across providers, creates operational overhead and increases the risk of service disruptions.

- Cost management: Understanding and controlling AI spending across multiple providers and teams becomes increasingly difficult, particularly as usage scales.

- Security and compliance: Maintaining consistent security policies and audit trails across different AI providers presents significant challenges for enterprise governance.

Multi-Provider Generative AI Gateway reference architecture

This guidance addresses these common customer challenges by providing a centralized gateway that abstracts the complexity of multiple AI providers behind a single, managed interface.

Built on AWS services and using the open source LiteLLM project, this solution lets organizations integrate with AI providers while maintaining centralized control, security, and observability.

Flexible deployment options on AWS

The Multi-Provider Generative AI Gateway supports multiple deployment patterns to meet diverse organizational needs:
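To make the idea of a single, managed interface concrete, the following minimal Python sketch shows a gateway front door that dispatches requests to per-provider adapters. It is an illustration under stated assumptions, not LiteLLM’s code: the `provider/model` naming convention, the `Gateway` and `ChatRequest` classes, and the stub adapter functions are all hypothetical. Real adapters would call each provider’s SDK (for example, Boto3 for Amazon Bedrock).

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class ChatRequest:
    model: str   # hypothetical "provider/model" identifier
    prompt: str


# Hypothetical stub adapters; real ones would call each provider's SDK.
def bedrock_adapter(model_id: str, prompt: str) -> str:
    return f"[bedrock:{model_id}] {prompt}"


def openai_adapter(model_id: str, prompt: str) -> str:
    return f"[openai:{model_id}] {prompt}"


def sagemaker_adapter(endpoint_name: str, prompt: str) -> str:
    return f"[sagemaker:{endpoint_name}] {prompt}"


class Gateway:
    """Single entry point that hides provider-specific APIs behind one method."""

    def __init__(self) -> None:
        self._adapters: Dict[str, Callable[[str, str], str]] = {}

    def register(self, provider: str, adapter: Callable[[str, str], str]) -> None:
        self._adapters[provider] = adapter

    def complete(self, request: ChatRequest) -> str:
        # Split only on the first "/" so model IDs may themselves contain slashes.
        provider, sep, model_id = request.model.partition("/")
        if not sep or not model_id:
            raise ValueError(f"expected 'provider/model', got {request.model!r}")
        adapter = self._adapters.get(provider)
        if adapter is None:
            raise KeyError(f"no adapter registered for provider {provider!r}")
        return adapter(model_id, request.prompt)


gateway = Gateway()
gateway.register("bedrock", bedrock_adapter)
gateway.register("openai", openai_adapter)
gateway.register("sagemaker", sagemaker_adapter)

print(gateway.complete(ChatRequest(model="openai/example-model", prompt="Hello")))
# prints: [openai:example-model] Hello
```

Because callers only ever see `Gateway.complete`, adding a provider in this sketch means registering one more adapter rather than changing client code.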

Amazon ECS deployment

For teams that prefer containerized applications with managed infrastructure, the Amazon ECS deployment provides serverless container orchestration with automatic scaling and integrated load balancing.

Amazon EKS deployment

Organizations with existing Kubernetes expertise can use the Amazon EKS deployment option, which provides full control over container orchestration while benefiting from a managed Kubernetes control plane. Customers can deploy a new cluster or use existing clusters for deployment.

The reference architecture provided for these deployment options is subject to additional security testing based on your organization’s specific security requirements. Conduct additional security testing and review as necessary before deploying anything into production.

Network architecture options

The Multi-Provider Generative AI Gateway supports multiple network architecture options:

Global public-facing deployment

For AI services with global user bases, combine the gateway with Amazon CloudFront and Amazon Route 53. This configuration provides:

- Enhanced security with AWS Shield DDoS protection

- Simplified HTTPS management with the Amazon CloudFront default certificate

- Global edge caching for improved latency

- Intelligent traffic routing across Regions

Regional direct access

For single-Region deployments prioritizing low latency and cost optimization, direct access to the Application Load Balancer (ALB) removes the CloudFront layer while maintaining security through properly configured security groups and network ACLs.

Private internal access

Organizations requiring complete isolation can deploy the gateway within a private VPC without internet exposure. This configuration makes sure that AI model access stays within your secure network perimeter, with ALB security groups restricting traffic to authorized private subnet CIDRs only.
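The CIDR restriction described above is enforced by ALB security group rules, not by application code, but the matching logic behind such a rule is easy to illustrate. The following Python sketch assumes two hypothetical private subnets (`10.0.1.0/24` and `10.0.2.0/24`) and shows which source addresses a rule allowing only those ranges would admit.

```python
import ipaddress
from typing import Iterable

# Hypothetical private subnet CIDRs authorized to reach the gateway.
AUTHORIZED_CIDRS = ["10.0.1.0/24", "10.0.2.0/24"]


def is_authorized(source_ip: str, cidrs: Iterable[str] = AUTHORIZED_CIDRS) -> bool:
    """Return True if source_ip falls inside any authorized CIDR block."""
    addr = ipaddress.ip_address(source_ip)
    # Membership tests between IPv4 and IPv6 objects simply return False.
    return any(addr in ipaddress.ip_network(cidr) for cidr in cidrs)


print(is_authorized("10.0.1.25"))     # True: inside 10.0.1.0/24
print(is_authorized("10.0.3.7"))      # False: not an authorized subnet
print(is_authorized("203.0.113.10"))  # False: public address
```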

Comprehensive AI governance and management

The Multi-Provider Generative AI Gateway is built to enable robust AI governance standards from a straightforward administrative interface. In addition to policy-based configuration and access management, users can configure advanced capabilities such as load balancing and prompt caching.

Centralized management interface

The Generative AI Gateway includes a web-based administrative interface in LiteLLM that supports comprehensive management of LLM usage across your organization.

Key capabilities include:

User and team management: Configure access controls at granular levels, from individual users to entire teams, with role-based permissions that align with your organizational structure.

API key management: Centrally manage and rotate API keys for the connected AI providers while maintaining audit trails of key usage and access patterns.

Budget controls and alerting: Set spending limits across providers, teams, and individual users, with automated alerts when thresholds are approached or exceeded.

Comprehensive cost controls: Costs are influenced by both AWS infrastructure and LLM providers. Although it is the customer’s responsibility to configure this solution to meet their cost requirements, customers can review the current cost settings for additional guidance.

Support for multiple model providers: Compatible with the Boto3, OpenAI, and LangGraph SDKs, allowing customers to use the best model for each workload regardless of the provider.

Support for Amazon Bedrock Guardrails: Customers can use guardrails created with Amazon Bedrock Guardrails for their generative AI workloads, regardless of the model provider.
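As an illustration of how layered budgets and alerts can interact, the following Python sketch charges each request against every scope it belongs to (for example, a user and that user’s team) and rejects it if any scope would go over its limit. It is a hypothetical model, not LiteLLM’s budget implementation: the model names, per-1,000-token prices, 80% alert threshold, and `BudgetTracker` class are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical prices in USD per 1,000 tokens: (input, output).
PRICES = {"model-a": (0.003, 0.015), "model-b": (0.0005, 0.0015)}


@dataclass
class Budget:
    limit_usd: float
    spent_usd: float = 0.0
    alert_ratio: float = 0.8  # warn once 80% of the limit has been spent
    alerted: bool = False


@dataclass
class BudgetTracker:
    budgets: Dict[str, Budget]  # keyed by scope, e.g. "user:alice" or "team:ml"
    alerts: List[str] = field(default_factory=list)

    def cost(self, model: str, input_tokens: int, output_tokens: int) -> float:
        in_price, out_price = PRICES[model]
        return input_tokens / 1000 * in_price + output_tokens / 1000 * out_price

    def charge(self, scopes: List[str], model: str,
               input_tokens: int, output_tokens: int) -> bool:
        """Charge every scope, or reject the request if any scope would exceed its limit."""
        amount = self.cost(model, input_tokens, output_tokens)
        # Check all scopes first so a rejected request charges nothing anywhere.
        for scope in scopes:
            budget = self.budgets[scope]
            if budget.spent_usd + amount > budget.limit_usd:
                self.alerts.append(f"BLOCKED {scope}: limit ${budget.limit_usd:.2f} exceeded")
                return False
        for scope in scopes:
            budget = self.budgets[scope]
            budget.spent_usd += amount
            if not budget.alerted and budget.spent_usd >= budget.alert_ratio * budget.limit_usd:
                budget.alerted = True
                self.alerts.append(
                    f"WARN {scope}: {budget.spent_usd / budget.limit_usd:.0%} of budget used")
        return True


tracker = BudgetTracker({"user:alice": Budget(limit_usd=1.00),
                         "team:ml": Budget(limit_usd=10.00)})
scopes = ["user:alice", "team:ml"]
ok1 = tracker.charge(scopes, "model-a", 100_000, 20_000)   # about $0.60
ok2 = tracker.charge(scopes, "model-a", 50_000, 10_000)    # about $0.30, crosses 80% for alice
ok3 = tracker.charge(scopes, "model-b", 400_000, 100_000)  # about $0.35, would exceed alice's $1.00
print(ok1, ok2, ok3)  # True True False
print(tracker.alerts)
```

Checking every scope before charging any of them keeps a rejected request from consuming part of a team budget that a user-level limit has already blocked.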

Intelligent routing and resilience

Common concerns around model deployment include model and prompt resiliency. These factors make it important to consider how failures are handled when responding to a prompt or accessing data stores.

Load balancing and failover: The gateway implements sophisticated routing logic that distributes requests across multiple model deployments and automatically fails over to backup providers when issues are detected.

Retry logic: Built-in retry mechanisms with exponential backoff help maintain reliable service even when individual providers experience transient issues.

Prompt caching: Intelligent caching helps reduce costs by avoiding duplicate requests to expensive AI models while maintaining response accuracy.
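The three behaviors described above can be combined in one request path. The following Python sketch is an assumption-laden illustration rather than LiteLLM’s router: it tries each deployment in a model group in order, retries transient failures with exponential backoff and jitter, fails over to the next deployment when retries are exhausted, and stores successful responses in an exact-match cache. `TransientError`, `ResilientRouter`, and the stub deployments are hypothetical, and the sleep function is injectable so the example runs instantly.

```python
import hashlib
import random
import time
from typing import Callable, Dict, List, Optional


class TransientError(Exception):
    """Hypothetical error type for retryable failures (throttling, 5xx)."""


class ResilientRouter:
    def __init__(self, deployments: Dict[str, List[Callable[[str], str]]],
                 max_retries: int = 2, base_delay: float = 0.1,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.deployments = deployments  # model group -> ordered deployments
        self.max_retries = max_retries
        self.base_delay = base_delay
        self.sleep = sleep  # injectable so examples and tests skip real waiting
        self.cache: Dict[str, str] = {}

    def _cache_key(self, group: str, prompt: str) -> str:
        return hashlib.sha256(f"{group}\x00{prompt}".encode()).hexdigest()

    def complete(self, group: str, prompt: str) -> str:
        key = self._cache_key(group, prompt)
        if key in self.cache:  # identical request seen before: skip the model call
            return self.cache[key]
        last_error: Optional[Exception] = None
        # Fail over to the next deployment only after retries are exhausted.
        for deployment in self.deployments[group]:
            for attempt in range(self.max_retries + 1):
                try:
                    result = deployment(prompt)
                    self.cache[key] = result
                    return result
                except TransientError as err:
                    last_error = err
                    if attempt < self.max_retries:
                        # Exponential backoff with full jitter: wait up to base * 2^attempt.
                        self.sleep(random.uniform(0, self.base_delay * 2 ** attempt))
        raise RuntimeError(f"all deployments failed for {group!r}") from last_error


calls = {"primary": 0, "backup": 0}


def flaky_primary(prompt: str) -> str:
    calls["primary"] += 1
    raise TransientError("throttled")


def backup(prompt: str) -> str:
    calls["backup"] += 1
    return f"backup answer to: {prompt}"


router = ResilientRouter({"chat": [flaky_primary, backup]}, sleep=lambda s: None)
print(router.complete("chat", "What is LiteLLM?"))  # served by backup after 3 primary attempts
print(router.complete("chat", "What is LiteLLM?"))  # served from the cache
print(calls)  # {'primary': 3, 'backup': 1}
```

Full jitter spreads retries from many clients over time, so a throttled provider is less likely to be hit by synchronized waves of retries.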

Advanced policy management

Model deployment architecture can range from simple to highly complex. The Multi-Provider Generative AI Gateway provides the advanced policy management tools needed to maintain a strong governance posture.

Rate limiting: Configure sophisticated rate limiting policies that can vary by user, API key, model type, or time of day to promote fair resource allocation and help prevent abuse.

Model access controls: Restrict access to specific AI models based on user roles, making sure that sensitive or expensive models are only accessible to authorized personnel.

Custom routing rules: Implement business logic that routes requests to specific providers based on criteria such as request type, user location, or cost optimization requirements.
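The three policy types described above can be sketched as small, composable checks. In this Python example, a token bucket enforces a per-API-key request rate, a role-to-model mapping enforces model access, and a Region-based rule stands in for custom routing. The roles, model names, Region choices, limits, and the `authorize` and `route` functions are hypothetical, and a production gateway would keep this state in a shared store rather than in memory.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, Set


@dataclass
class TokenBucket:
    capacity: float        # maximum burst size, in requests
    refill_per_sec: float  # sustained request rate
    clock: Callable[[], float] = time.monotonic
    tokens: float = field(init=False)
    updated: float = field(init=False)

    def __post_init__(self) -> None:
        self.tokens = self.capacity
        self.updated = self.clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.refill_per_sec)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Hypothetical role -> allowed models mapping.
ROLE_MODELS: Dict[str, Set[str]] = {
    "analyst": {"small-model"},
    "ml-engineer": {"small-model", "large-model"},
}


def route(model: str, user_region: str) -> str:
    """Hypothetical custom rule: keep EU users on an EU-hosted deployment."""
    return f"{model}@eu-west-1" if user_region.startswith("eu") else f"{model}@us-east-1"


def authorize(role: str, model: str, bucket: TokenBucket) -> str:
    if model not in ROLE_MODELS.get(role, set()):
        return "403: model not permitted for role"
    if not bucket.allow():
        return "429: rate limit exceeded"
    return "ok"


fake_now = [0.0]  # controllable clock so the example is deterministic
bucket = TokenBucket(capacity=2, refill_per_sec=1.0, clock=lambda: fake_now[0])
results = [authorize("ml-engineer", "large-model", bucket) for _ in range(3)]
print(results)  # ['ok', 'ok', '429: rate limit exceeded']
fake_now[0] = 1.0  # one second later, one token has been refilled
print(authorize("ml-engineer", "large-model", bucket))  # ok
print(authorize("analyst", "large-model", bucket))      # 403: model not permitted for role
print(route("large-model", "eu-central"))               # large-model@eu-west-1
```

A token bucket allows short bursts up to `capacity` while holding the long-run rate to `refill_per_sec`, and checking model access before the rate limit means that requests for forbidden models do not consume a key’s quota.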

Monitoring and observability

As AI workloads grow to include more components, so too do observability needs. The Multi-Provider Generative AI Gateway architecture integrates with Amazon CloudWatch. This integration lets users configure a wide range of monitoring and observability solutions, including open source tools such as Langfuse.

Comprehensive logging and analytics

Gateway interactions are automatically logged to CloudWatch, providing detailed insights into:

- Request patterns and usage trends across providers and teams

- Performance metrics, including latency, error rates, and throughput

- Cost allocation and spending patterns by user, team, and model type

- Security events and access patterns for compliance reporting
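Structured log lines make these dimensions easy to query. The following Python sketch emits one JSON object per request; it assumes that the gateway container writes to stdout and that its log driver forwards stdout to Amazon CloudWatch Logs. The field names and the `build_record` and `log_request` helpers are hypothetical.

```python
import json
import logging
import sys
import time
from typing import Any, Dict

logger = logging.getLogger("gateway.requests")
handler = logging.StreamHandler(sys.stdout)
handler.setFormatter(logging.Formatter("%(message)s"))  # emit the JSON line as-is
logger.addHandler(handler)
logger.setLevel(logging.INFO)


def build_record(user: str, team: str, provider: str, model: str,
                 latency_ms: float, status: int, cost_usd: float) -> Dict[str, Any]:
    """One record covers usage, performance, cost, and security dimensions."""
    return {
        "timestamp": time.strftime("%Y-%m-%dT%H:%M:%SZ", time.gmtime()),
        "user": user, "team": team,               # usage trends and cost allocation
        "provider": provider, "model": model,     # request patterns
        "latency_ms": round(latency_ms, 1),       # performance
        "status": status,                         # error rates
        "cost_usd": round(cost_usd, 6),           # spending patterns
        "event": "access_denied" if status in (401, 403) else "request",  # security events
    }


def log_request(**fields: Any) -> str:
    line = json.dumps(build_record(**fields), sort_keys=True)
    logger.info(line)
    return line


line = log_request(user="alice", team="ml", provider="bedrock", model="model-a",
                   latency_ms=412.37, status=200, cost_usd=0.0006)
denied = log_request(user="bob", team="ops", provider="openai", model="model-b",
                     latency_ms=3.2, status=403, cost_usd=0.0)
```

Because each line is JSON, CloudWatch Logs Insights can discover the fields automatically, so a query such as `stats avg(latency_ms), sum(cost_usd) by team, model` can summarize performance and spend.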

Built-in troubleshooting

The administrative interface provides real-time log viewing so administrators can quickly diagnose and resolve usage issues without needing to access CloudWatch directly.

Amazon SageMaker integration for expanded model access

Amazon SageMaker enhances the Multi-Provider Generative AI Gateway guidance by providing a comprehensive machine learning platform that integrates with the gateway’s architecture. By using the Amazon SageMaker managed infrastructure for model training, deployment, and hosting, organizations can develop custom foundation models or fine-tune existing ones and then access them through the gateway alongside models from other providers. This integration removes the need for separate infrastructure management while facilitating consistent governance across both custom and third-party models. SageMaker AI model hosting capabilities expand the gateway’s model access to include self-hosted models, in addition to those available on Amazon Bedrock, OpenAI, and other providers.

Our open source contributions

This reference architecture builds upon our contributions to the LiteLLM open source project, enhancing its capabilities for enterprise deployment on AWS. Our enhancements include improved error handling, enhanced security features, and optimized performance for cloud-native deployments.

Getting started

The Multi-Provider Generative AI Gateway reference architecture is available today through our GitHub repository, which describes several flexible deployment options to get started.

Public gateway with global CloudFront distribution

Use CloudFront to provide a globally distributed, low-latency access point for your generative AI services. CloudFront edge locations deliver content quickly to users around the world, while AWS Shield Standard helps protect against DDoS attacks. This is the recommended configuration for public-facing AI services with a global user base.

Custom domain with CloudFront

For a more branded experience, you can configure the gateway to use your own custom domain name while still benefiting from the performance and security features of CloudFront. This option is ideal if you want to maintain consistency with your company’s online presence.

Direct access through a public Application Load Balancer

Customers who prioritize low latency over global distribution can opt for a direct-to-ALB deployment without the CloudFront layer. This simplified architecture can offer cost savings, although it requires extra consideration for web application firewall protection.

Private VPC-only access

For a high level of security, you can deploy the gateway entirely within a private VPC, isolated from the public internet. This configuration is well suited for processing sensitive data or deploying internal-facing generative AI services. Access is restricted to trusted networks such as VPN, AWS Direct Connect, VPC peering, or AWS Transit Gateway.

Learn more and deploy today

Ready to simplify your multi-provider AI infrastructure? Access the complete solution package to explore an interactive learning experience with step-by-step guidance for each stage of the deployment and management process.

Conclusion

The Multi-Provider Generative AI Gateway is solution guidance intended to help customers get started on generative AI solutions in a well-architected manner, while taking advantage of the AWS ecosystem of services and complementary open source packages. Customers can work with models from Amazon Bedrock, Amazon SageMaker JumpStart, or third-party model providers. Workloads are operated and managed through the LiteLLM management interface, and customers can choose to host on Amazon ECS or Amazon EKS based on their preference.

In addition, we have published a sample that integrates the gateway into an agentic customer service application. The agentic system is orchestrated using LangGraph and deployed on Amazon Bedrock AgentCore. LLM calls are routed through the gateway, providing the flexibility to test agents with different models, whether hosted on AWS or with another provider.

This guidance is just one part of a mature generative AI foundation on AWS. For a deeper look at the components of a generative AI system on AWS, see Architect a mature generative AI foundation on AWS, which describes additional components of a generative AI system.

About the authors

Dan Ferguson is a Sr. Solutions Architect at AWS, based in New York, USA. As a machine learning services expert, Dan works to support customers on their journey to integrating ML workflows efficiently, effectively, and sustainably.

Bobby Lindsey is a Machine Learning Specialist at Amazon Web Services. He’s been in technology for over a decade, spanning various technologies and multiple roles. He is currently focused on combining his background in software engineering, DevOps, and machine learning to help customers deliver machine learning workflows at scale. In his spare time, he enjoys reading, research, hiking, biking, and trail running.

Nick McCarthy is a Generative AI Specialist at AWS. He has worked with AWS clients across various industries, including healthcare, finance, sports, telecoms, and energy, to accelerate their business outcomes through the use of AI/ML. Outside of work, he loves to spend time traveling, trying new cuisines, and reading about science and technology. Nick has a Bachelor’s degree in Astrophysics and a Master’s degree in Machine Learning.

Chaitra Mathur is a GenAI Specialist Solutions Architect at AWS. She works with customers across industries to build scalable generative AI platforms and operationalize them. Throughout her career, she has shared her expertise at numerous conferences and has authored several blogs in the Machine Learning and Generative AI domains.

Sreedevi Velagala is a Solutions Architect within the World-Wide Specialist Organization Technology Solutions team at Amazon Web Services, based in New Jersey. She has focused on delivering tailored solutions and guidance aligned with the unique needs of diverse clientele across the AI/ML, Compute, Storage, Networking, and Analytics domains. She has been instrumental in helping customers learn how AWS can lower the compute costs of machine learning workloads using Graviton, Inferentia, and Trainium. She uses her deep technical knowledge and industry expertise to deliver tailored solutions that align with each client’s unique business needs and requirements.
