This blog post is based on work co-developed with Flo Health.

Healthcare science is rapidly advancing. Maintaining accurate and up-to-date medical content directly impacts people’s lives, health decisions, and well-being. When someone searches for health information, they are often at their most vulnerable, which makes accuracy not just important but potentially life-saving.

Flo Health creates thousands of medical articles every year, providing millions of users worldwide with medically credible information on women’s health. Verifying the accuracy and relevance of this vast content library is a significant challenge. Medical knowledge evolves continuously, and manual review of each article is not only time-consuming but also prone to human error. This is why the team at Flo Health, the company behind the leading women’s health app Flo, is using generative AI to facilitate medical content accuracy at scale. Through a partnership with the AWS Generative AI Innovation Center, Flo Health is developing an innovative approach, hereafter referred to as the “Medical Automated Content Review and Revision Optimization Solution” (MACROS), to verify and maintain the accuracy of its extensive health information library. This AI-powered solution is capable of:

- Efficiently processing large volumes of medical content based on credible scientific sources.

- Identifying potential inaccuracies or outdated information based on credible scientific resources.

- Proposing updates based on the latest medical research and guidelines, as well as incorporating user feedback.

The system, powered by Amazon Bedrock, enables Flo Health to conduct medical content reviews and revision assessments at scale, ensuring up-to-date accuracy and supporting more informed healthcare decision-making. The system performs detailed content analysis, giving Flo’s medical experts comprehensive insights into adherence to medical standards and guidelines for their review. It is also designed for seamless integration with Flo’s existing tech infrastructure, facilitating automatic updates where appropriate.

This two-part series explores Flo Health’s journey with generative AI for medical content verification. Part 1 examines our proof of concept (PoC), including the initial solution, its capabilities, and early results. Part 2 focuses on scaling challenges and real-world implementation. Each article stands alone, while together they show how AI transforms medical content management at scale.

Proof of concept goals and success criteria

Before diving into the technical solution, we established clear objectives for our PoC medical content review system:

Key Objectives:

- Validate the feasibility of using generative AI for medical content verification

- Determine accuracy levels compared to manual review

- Assess processing time and cost improvements

Success Metrics:

- Accuracy: Content piece recall of 90%

- Efficiency: Reduce detection time from hours to minutes per guideline

- Cost Reduction: Reduce expert review workload

- Quality: Maintain Flo’s editorial standards and medical accuracy

- Speed: 10x faster than the manual review process

To verify that the solution meets Flo Health’s high standards for medical content, Flo Health’s medical experts and content teams worked closely with AWS technical specialists through regular review sessions, providing critical feedback and medical expertise to continuously improve the AI model’s performance and accuracy. The result is MACROS, our custom-built solution for AI-assisted medical content verification.

Solution overview

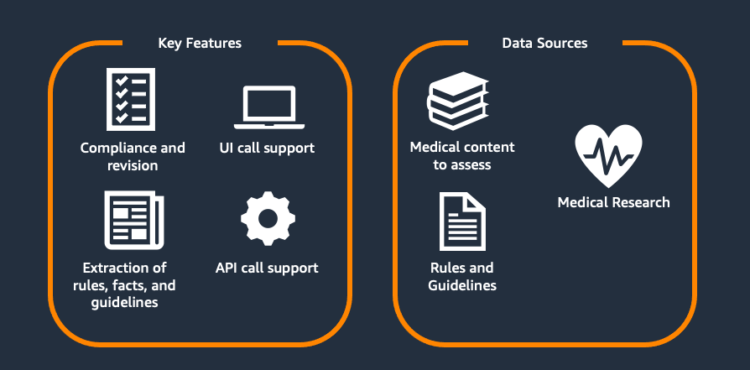

In this section, we outline how the MACROS solution uses Amazon Bedrock and other AWS services to automate medical content review and revision.

Figure 1. Medical Automated Content Review and Revision Optimization Solution overview

As shown in Figure 1, the solution supports two major processes:

- Content Review and Revision: Checks existing medical articles at scale for adherence to medical standards and style, given pre-specified custom rules and guidelines, and proposes a revision that conforms to the new medical standards as well as Flo’s style and tone guidelines.

- Rule Optimization: MACROS accelerates the process of extracting new (medical) guidelines from (medical) research, pre-processing them into the format needed for content review, and optimizing their quality.

Both processes can be run through the user interface (UI) as well as through direct API calls. UI support enables medical experts to see content review statistics directly, interact with changes, and make manual adjustments. API support is intended for integration into a pipeline for periodic assessment.

Architecture

Figure 2 depicts the architecture of MACROS. It consists of two major parts: backend and frontend.

Figure 2. MACROS architecture

The flow through the major app components is as follows:

1. Users begin by gathering and preparing content that must meet medical standards and rules.

2. In the second step, the data is provided as PDF or TXT files, or as plain text, through the Streamlit UI hosted in Amazon Elastic Container Service (Amazon ECS). Authentication for file upload happens through Amazon API Gateway.

3. Alternatively, custom Flo Health JSON files can be uploaded directly to the Amazon Simple Storage Service (Amazon S3) bucket of the solution stack.

4. The ECS-hosted frontend has AWS Identity and Access Management (IAM) permissions to orchestrate tasks using AWS Step Functions.

5. Further, the ECS container has access to Amazon S3 for listing, downloading, and uploading files, either through pre-signed URLs or boto3.

6. Optionally, if the input file is uploaded through the UI, the solution invokes AWS Step Functions, which starts the pre-processing functionality hosted in an AWS Lambda function. This Lambda function has access to Amazon Textract for extracting text from PDF files. The files are stored in Amazon S3 and also returned to the UI.

7-9. The Rule Optimizer, Content Review, and Revision functions are hosted on AWS Lambda and orchestrated through AWS Step Functions. They have access to Amazon Bedrock for generative AI capabilities to perform rule extraction from unstructured data, content review, and revision, respectively. Additionally, they have access to Amazon S3 through the boto3 SDK to store the results.

10. The Compute Stats AWS Lambda function has access to Amazon S3 and can read and combine the results of individual revision and review runs.

11. The solution uses Amazon CloudWatch for system monitoring and log management. For production deployments dealing with critical medical content, the monitoring capabilities could be extended with custom metrics and alarms to provide more granular insights into system performance and content processing patterns.
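Both the ECS frontend (step 4) and an external pipeline using the API path ultimately start a Step Functions execution. The following is a minimal sketch of that call with boto3’s `start_execution`. The state machine ARN and the input payload fields (`bucket`, `key`, `rule_set`) are hypothetical, since the post does not describe the actual MACROS input schema; the client is passed in so the function can be exercised without AWS credentials.

```python
import json
import uuid
from typing import Any, Dict


def start_content_review(sfn_client: Any, state_machine_arn: str,
                         bucket: str, key: str, rule_set: str) -> str:
    """Start a (hypothetical) MACROS review execution and return its ARN.

    sfn_client is expected to be a boto3 Step Functions client, e.g.
    boto3.client("stepfunctions"). The payload fields are assumptions.
    """
    payload: Dict[str, str] = {
        "bucket": bucket,      # S3 bucket holding the uploaded article
        "key": key,            # S3 object key of the article
        "rule_set": rule_set,  # name of the rule set to review against
    }
    response = sfn_client.start_execution(
        stateMachineArn=state_machine_arn,
        # Standard workflows reject reused execution names, so use a UUID.
        name=f"review-{uuid.uuid4()}",
        input=json.dumps(payload),
    )
    return response["executionArn"]
```

In a real deployment you would call it as `start_content_review(boto3.client("stepfunctions"), <your state machine ARN>, ...)`; the Lambda functions behind each state then read the payload from their event input.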

Future enhancements

While our current architecture uses AWS Step Functions for workflow orchestration, we’re exploring the potential of Amazon Bedrock Flows for future iterations. Bedrock Flows offers promising capabilities for streamlining AI-driven workflows, potentially simplifying our architecture and improving integration with other Bedrock services. This alternative could provide more seamless management of our AI processes, especially as we scale and evolve our solution.

Content review and revision

At the core of MACROS lies its Content Review and Revision functionality, built on Amazon Bedrock foundation models. The Content Review and Revision block consists of five major components, depicted in Figure 3: 1) the optional Filtering stage, 2) Chunking, 3) Review, 4) Revision, and 5) Post-processing.

Figure 3. Content review and revision pipeline

Here’s how MACROS processes the uploaded medical content:

- Filtering (Optional): The journey begins with an optional filtering step. This feature checks whether the rule set is relevant to the article, potentially saving time and resources on unnecessary processing.

- Chunking: The source text is then split into paragraphs. This crucial step supports high-quality assessment and helps prevent unintended revisions to unrelated text. Chunking can be performed using heuristics, such as punctuation-based or regular-expression-based splits, or using large language models (LLMs) to identify semantically complete chunks of text.

- Review: Each paragraph or section undergoes a thorough review against the relevant rules and guidelines.

- Revision: Only the paragraphs flagged as non-adherent move forward to the revision stage, which streamlines the process and preserves the integrity of adherent content. The AI suggests updates to bring non-adherent paragraphs in line with the latest guidelines and Flo’s style requirements.

- Post-processing: Finally, the revised paragraphs are integrated back into the original text, resulting in an updated, adherent document.
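The Chunking step mentions punctuation- or regular-expression-based splits. A minimal heuristic sketch follows, assuming paragraphs are separated by blank lines. The `min_chars` threshold for merging very short fragments (such as headings) into the next paragraph is a hypothetical parameter, and an LLM-based chunker would replace this function when semantically complete chunks are needed.

```python
import re
from typing import List


def chunk_paragraphs(text: str, min_chars: int = 40) -> List[str]:
    """Split text into paragraph chunks on blank lines.

    Fragments shorter than min_chars (for example headings) are merged into
    the following paragraph so each chunk carries enough context for review.
    """
    # Split on one or more blank lines (lines containing only whitespace).
    raw = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    chunks: List[str] = []
    pending = ""
    for part in raw:
        candidate = f"{pending}\n{part}" if pending else part
        if len(candidate) < min_chars:
            # Too short to stand alone: carry it forward to the next part.
            pending = candidate
        else:
            chunks.append(candidate)
            pending = ""
    if pending:
        # Attach a trailing short fragment to the last chunk, or keep it alone.
        if chunks:
            chunks[-1] = f"{chunks[-1]}\n{pending}"
        else:
            chunks.append(pending)
    return chunks
```

Keeping chunks at paragraph granularity is what later allows the Post-processing step to swap in revised paragraphs without touching adherent text.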

The Filtering step can be performed with an additional LLM call through Amazon Bedrock that assesses each section individually, using the following prompt structure:

Figure 4. Simplified LLM-based filtering step
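As an illustration of the LLM-based filter, the sketch below sends one section and the candidate rules to a model through the Amazon Bedrock Converse API and reads back a yes/no answer. The prompt text and the `<relevant>` answer tag are assumptions made for this example; the actual MACROS prompt is only shown in simplified form in Figure 4. The client is passed in (for example `boto3.client("bedrock-runtime")`) so the logic can be tested offline.

```python
from typing import Any, List

# Hypothetical filtering prompt; not the prompt used in MACROS.
FILTER_PROMPT = (
    "You are a medical content reviewer.\n"
    "Rules:\n{rules}\n\n"
    "Article section:\n{section}\n\n"
    "Is at least one rule relevant to this section? "
    "Answer only <relevant>yes</relevant> or <relevant>no</relevant>."
)


def is_relevant(bedrock_runtime: Any, model_id: str,
                section: str, rules: List[str]) -> bool:
    """Ask an LLM through the Bedrock Converse API whether the rules apply."""
    prompt = FILTER_PROMPT.format(
        rules="\n".join(f"- {rule}" for rule in rules), section=section
    )
    response = bedrock_runtime.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        # A short, deterministic answer is all the filter needs.
        inferenceConfig={"maxTokens": 20, "temperature": 0.0},
    )
    answer = response["output"]["message"]["content"][0]["text"]
    return "<relevant>yes</relevant>" in answer.lower()
```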

Non-LLM approaches can also support the Filtering step:

- Encoding the rules and the articles into dense embedding vectors and calculating the similarity between them. By setting a similarity threshold, we can identify which rule set is relevant to the input document.

- Similarly, direct keyword-level overlap between the document and the rule can be measured using BLEU or ROUGE metrics.
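Both non-LLM filters reduce to a score plus a threshold. The sketch below shows cosine similarity for embedding vectors (which in practice would come from an embedding model, for example one available through Amazon Bedrock; that choice is an assumption) and a simple ROUGE-1 recall computed in pure Python. The tokenizer and the threshold value are illustrative assumptions, not MACROS settings.

```python
import math
import re
from collections import Counter
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two dense embedding vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("Embedding vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def _tokens(text: str) -> List[str]:
    """Lowercase alphanumeric tokens; a deliberately simple tokenizer."""
    return re.findall(r"[a-z0-9]+", text.lower())


def rouge1_recall(rule: str, document: str) -> float:
    """ROUGE-1 recall: share of the rule's unigrams (clipped counts) found in the document."""
    rule_counts = Counter(_tokens(rule))
    doc_counts = Counter(_tokens(document))
    total = sum(rule_counts.values())
    if total == 0:
        return 0.0
    overlap = sum(min(count, doc_counts[tok]) for tok, count in rule_counts.items())
    return overlap / total


def passes_threshold(score: float, threshold: float) -> bool:
    """Keep a rule set for the document only if its score reaches the threshold."""
    return score >= threshold
```

Embedding similarity catches paraphrases that keyword overlap misses, while ROUGE-style overlap is cheaper and easier to explain; the threshold has to be tuned on labeled article/rule pairs either way.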

As already mentioned, content review is performed section by section against a group of rules and results in a response in XML format, such as:

Here, 1 indicates adherence and 0 indicates non-adherence of the text to the specified rules. Using XML format helps achieve reliable parsing of the output.
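To illustrate why XML output is convenient to parse, here is a minimal sketch using Python’s standard `xml.etree.ElementTree`. The `<review>`/`<rule>`/`<adherent>`/`<reason>` schema is an assumption for this example, not the exact MACROS format. The regex step tolerates any prose the model adds around the XML; note that a reason containing unescaped `<` or `&` would still make the XML invalid.

```python
import re
import xml.etree.ElementTree as ET
from typing import Dict, Tuple


def parse_review(llm_output: str) -> Dict[str, Tuple[int, str]]:
    """Map rule id -> (adherence flag, reason) from an assumed XML schema:

    <review>
      <rule id="r1"><adherent>1</adherent><reason>...</reason></rule>
    </review>
    """
    # LLMs may add text around the XML, so extract the <review> element first.
    match = re.search(r"<review>.*?</review>", llm_output, re.DOTALL)
    if match is None:
        raise ValueError("No <review> element found in model output")
    root = ET.fromstring(match.group(0))
    results: Dict[str, Tuple[int, str]] = {}
    for rule in root.findall("rule"):
        # 1 = adherent, 0 = non-adherent; int("") raises if the tag is missing.
        flag = int(rule.findtext("adherent", default="").strip())
        if flag not in (0, 1):
            raise ValueError(f"Unexpected adherence flag {flag} for rule {rule.get('id')}")
        reason = (rule.findtext("reason") or "").strip()
        results[rule.get("id", "")] = (flag, reason)
    return results
```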

The Review step iterates over the sections of the text so that the LLM pays attention to each section individually, which led to more robust results in our experiments. For higher non-adherent section detection accuracy, the user can also enable Multi-call mode: instead of one Amazon Bedrock call assessing the adherence of the article against all rules, MACROS makes one independent call per rule.
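The difference between the default mode and Multi-call mode can be sketched as follows. `call_llm` is a hypothetical `prompt -> text` callable (for example a thin wrapper around the Bedrock Converse API), and the prompt wording and `<adherent>` tag are assumptions. The trade-off is visible in the call count: for `n` sections and `k` rules, the default mode makes `n` calls while Multi-call mode makes `n * k`.

```python
import re
from typing import Callable, List

# Hypothetical prompt; the real MACROS review prompt is not published in this post.
PROMPT_TEMPLATE = (
    "Rules:\n{rules}\n\n"
    "Section:\n{section}\n\n"
    "Reply with <adherent>1</adherent> if the section follows every rule above, "
    "otherwise reply with <adherent>0</adherent>."
)


def build_prompt(section: str, rules: List[str]) -> str:
    numbered = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, start=1))
    return PROMPT_TEMPLATE.format(rules=numbered, section=section)


def parse_flag(text: str) -> bool:
    """Return True for <adherent>1</adherent>, False for <adherent>0</adherent>."""
    match = re.search(r"<adherent>\s*([01])\s*</adherent>", text)
    if match is None:
        raise ValueError(f"Unparseable review output: {text!r}")
    return match.group(1) == "1"


def find_non_adherent(sections: List[str], rules: List[str],
                      call_llm: Callable[[str], str],
                      multi_call: bool = False) -> List[int]:
    """Return indices of sections judged non-adherent."""
    flagged: List[int] = []
    for idx, section in enumerate(sections):  # review each section on its own
        if multi_call:
            # Multi-call mode: one independent call per rule; every rule must pass.
            verdicts = [parse_flag(call_llm(build_prompt(section, [rule]))) for rule in rules]
            adherent = all(verdicts)
        else:
            # Default mode: one call checks the section against all rules at once.
            adherent = parse_flag(call_llm(build_prompt(section, rules)))
        if not adherent:
            flagged.append(idx)
    return flagged
```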

The Revision step receives the output of the Review step (the non-adherent sections and the reasons for non-adherence), together with an instruction to write the revision in a similar tone. It then suggests revisions of the non-adherent sentences in a style similar to the original text. Finally, the Post-processing step combines the original text with the new revisions, making sure that no other sections are changed.
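The guarantee that “no other sections are changed” follows naturally if revision and merging are index-based. A minimal sketch, assuming sections were produced by a blank-line chunker and that `revise` is a hypothetical `(section, reason) -> revised text` callable (in MACROS this would be an LLM call instructed to keep the original tone):

```python
from typing import Callable, Dict, List


def revise_and_merge(sections: List[str], reasons: Dict[int, str],
                     revise: Callable[[str, str], str]) -> str:
    """Revise only the flagged sections and reassemble the document.

    reasons maps the index of each non-adherent section to the reviewer's
    reason for flagging it.
    """
    # Copy the list so adherent sections stay exactly as they were.
    merged = list(sections)
    for idx, reason in reasons.items():
        if not 0 <= idx < len(sections):
            raise IndexError(f"Section index {idx} out of range")
        merged[idx] = revise(sections[idx], reason)
    # Rejoin with blank lines, mirroring a blank-line-based chunker.
    return "\n\n".join(merged)
```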

Different steps of the flow require different levels of model capability. Simpler tasks such as chunking can be handled efficiently by a relatively small model from the Claude Haiku family, whereas more complex reasoning tasks such as content review and revision require larger models from the Claude Sonnet or Opus families to support accurate analysis and high-quality content generation. This tiered approach to model selection optimizes both the performance and the cost-efficiency of the solution.
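The tiered approach can be kept as a small piece of configuration. The model IDs below are example Amazon Bedrock identifiers for a Claude Haiku and a Claude Sonnet model, not necessarily the versions MACROS used; check which models (and whether a cross-Region inference profile is required) are available in your account and Region.

```python
from typing import Dict

# Example Bedrock model IDs (assumptions; substitute the versions you use).
SMALL_MODEL = "anthropic.claude-3-haiku-20240307-v1:0"
LARGE_MODEL = "anthropic.claude-3-5-sonnet-20240620-v1:0"

# Pipeline step -> model tier, following the split described above.
MODEL_FOR_TASK: Dict[str, str] = {
    "chunking": SMALL_MODEL,   # simpler task: small, cheap model
    "review": LARGE_MODEL,     # complex reasoning: larger model
    "revision": LARGE_MODEL,   # high-quality generation: larger model
}


def model_for(task: str) -> str:
    """Return the model ID configured for a pipeline step."""
    try:
        return MODEL_FOR_TASK[task]
    except KeyError:
        raise ValueError(f"Unknown pipeline task: {task!r}") from None
```

Keeping the mapping in one place means upgrading a tier to a newer model is a one-line change.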

Operating modes

The Content Review and Revision feature operates in two UI modes, Detailed Document Processing and Multi Document Processing, each catering to a different scale of content management. The Detailed Document Processing mode offers a granular approach to content analysis and is depicted in Figure 5. Users can upload documents in various formats (PDF, TXT, or JSON, or paste text directly) and specify the guidelines against which the content should be evaluated.

Figure 5. Detailed Document Processing example

Users can choose from predefined rule sets (here, Vitamin D, Breast Health, and Premenstrual Syndrome and Premenstrual Dysphoric Disorder (PMS and PMDD)) or enter custom guidelines. These custom guidelines can include rules such as “The title of the article must be medically accurate”, along with examples of content that does and does not adhere to the rule.

The rule sets make sure that the assessment aligns with specific medical standards and Flo’s unique style guide. The interface allows on-the-fly adjustments, making it ideal for thorough reviews of individual documents. For larger-scale operations, the Multi Document Processing mode should be used. This mode is designed to handle numerous custom JSON files simultaneously, mimicking how Flo would integrate MACROS into their content management system.
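Multi Document Processing is essentially a fan-out over many articles. The sketch below reviews JSON documents concurrently with a thread pool, which suits I/O-bound model calls. The `"id"` and `"body"` field names are hypothetical (Flo’s custom JSON format is not described here), `review_fn` stands in for the per-article review pipeline, and `max_workers` should be sized against your Amazon Bedrock throughput quotas.

```python
import json
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def process_documents(raw_docs: List[str],
                      review_fn: Callable[[str], List[int]],
                      max_workers: int = 4) -> Dict[str, List[int]]:
    """Review many JSON articles concurrently.

    Each raw document is assumed to be JSON with hypothetical "id" and "body"
    fields; review_fn returns the indices of non-adherent sections.
    """
    docs = [json.loads(raw) for raw in raw_docs]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so results line up with docs.
        results = list(pool.map(lambda doc: review_fn(doc["body"]), docs))
    return {doc["id"]: flagged for doc, flagged in zip(docs, results)}
```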

Extracting rules and guidelines from unstructured data

Actionable, well-prepared guidelines are not always readily available. Sometimes they are buried in unstructured files or have to be found first. Using the Rule Optimizer feature, we can extract and refine actionable guidelines from multiple complex documents.

Rule Optimizer processes raw PDF documents to extract text, which is then chunked into meaningful sections based on document headers. This segmented content is processed through Amazon Bedrock using specialized system prompts in one of two distinct modes: Style/tonality mode and Medical mode.
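Header-based chunking of extracted text can be approximated with a line-level pattern. The sketch below treats numbered headings (such as “2.1 Dosage”) and Markdown-style `#` lines as headers; this pattern is an assumption for illustration, and it will misfire on body lines that happen to start with a number followed by a capitalized word. Layout-aware output from Amazon Textract would be a more robust source of header information.

```python
import re
from typing import List, Tuple

# Heuristic header pattern (an assumption): "# Title" or "2.1 Title" / "1. Title".
HEADER_RE = re.compile(r"^(?:#{1,6}\s+\S.*|\d+(?:\.\d+)*\.?\s+[A-Z].*)$")


def split_by_headers(text: str) -> List[Tuple[str, str]]:
    """Split extracted document text into (header, body) sections."""
    sections: List[Tuple[str, str]] = []
    header, body = "", []
    for line in text.splitlines():
        stripped = line.strip()
        if HEADER_RE.match(stripped):
            # Close the previous section before starting a new one.
            if header or any(b.strip() for b in body):
                sections.append((header, "\n".join(body).strip()))
            header, body = stripped.lstrip("#").strip(), []
        else:
            body.append(line)
    if header or any(b.strip() for b in body):
        sections.append((header, "\n".join(body).strip()))
    return sections
```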

Style/tonality mode focuses on extracting guidelines on how the text should be written: its style, and which formats and words can or cannot be used.

Rule Optimizer assigns each rule a priority: high, medium, or low. The priority level indicates the rule’s importance, guiding the order of content review and focusing attention on critical areas first. Rule Optimizer includes a manual editing interface where users can refine rule text, adjust classifications, and manage priorities. When users update a rule, the changes are saved in Amazon S3 for future use.
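A rule with its priority, classification, and optional adherent and non-adherent examples can be modeled as a small record, ordered by priority for review, and persisted to Amazon S3 as JSON. This is a sketch under assumptions: the field names, the default values, and the S3 key layout are hypothetical; only the `put_object` call is standard boto3.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Any, List

PRIORITY_ORDER = {"high": 0, "medium": 1, "low": 2}


@dataclass
class Rule:
    text: str
    priority: str = "medium"   # one of "high", "medium", "low"
    category: str = "style"    # hypothetical classification label
    adherent_examples: List[str] = field(default_factory=list)
    non_adherent_examples: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.priority not in PRIORITY_ORDER:
            raise ValueError(f"Unknown priority: {self.priority!r}")


def sort_by_priority(rules: List[Rule]) -> List[Rule]:
    """High-priority rules first; sorted() is stable, so ties keep their order."""
    return sorted(rules, key=lambda rule: PRIORITY_ORDER[rule.priority])


def save_rules(s3_client: Any, bucket: str, key: str, rules: List[Rule]) -> None:
    """Persist edited rules as JSON (s3_client, e.g. boto3.client("s3"))."""
    body = json.dumps([asdict(rule) for rule in rules], indent=2).encode("utf-8")
    s3_client.put_object(Bucket=bucket, Key=key, Body=body,
                         ContentType="application/json")
```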

The Medical mode is designed to process medical documents and is adapted to more scientific language. It groups the extracted rules into three classes:

- Medical condition guidelines

- Treatment-specific guidelines

- Changes to advice and trends in health

Figure 6. Simplified medical rule optimization prompt

Figure 6 provides an example of a medical rule optimization prompt, consisting of three essential components: role setting (a medical AI expert), a description of what makes a good rule, and finally the expected output. We consider a rule to be of sufficiently good quality if it is:

- Clear, unambiguous, and actionable

- Relevant, consistent, and concise (max two sentences)

- Written in active voice

- Free of unnecessary jargon
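Some of these quality criteria can be pre-checked cheaply before a rule reaches a human editor. The sketch below is a crude heuristic, not the LLM-based assessment MACROS uses: it counts sentences by terminal punctuation (so abbreviations such as “e.g.” will inflate the count) and flags a form of “to be” followed by a word ending in “-ed” as possible passive voice (so irregular participles such as “written” are missed).

```python
import re
from typing import List

_SENTENCE_END = re.compile(r"[.!?]+(?:\s+|$)")
# Form of "to be", optional -ly adverb, then a regular past participle.
_PASSIVE = re.compile(
    r"\b(?:is|are|was|were|be|been|being)\s+(?:\w+ly\s+)?\w+ed\b", re.IGNORECASE
)


def count_sentences(text: str) -> int:
    """Count sentences by splitting on terminal punctuation."""
    return len([s for s in _SENTENCE_END.split(text.strip()) if s.strip()])


def rule_quality_issues(rule: str) -> List[str]:
    """Return heuristic warnings for a candidate rule (empty list if none)."""
    issues: List[str] = []
    if count_sentences(rule) > 2:
        issues.append("longer than two sentences")
    if _PASSIVE.search(rule):
        issues.append("possible passive voice")
    return issues
```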

Implementation considerations and challenges

During our PoC development, we identified several important considerations that can benefit others implementing similar solutions:

- Data preparation: This emerged as a fundamental challenge. We learned the importance of standardizing input formats for both medical content and guidelines while maintaining consistent document structures. Creating diverse test sets across different medical topics proved essential for comprehensive validation.

- Cost management: Monitoring and optimizing cost quickly became a key priority. We implemented token usage tracking and optimized prompt design and batch processing to balance performance and efficiency.

- Regulatory and ethical compliance: Given the sensitive nature of medical content, strict regulatory and ethical safeguards were critical. We established robust documentation practices for AI decisions, implemented strict version control for medical guidelines, and maintained continuous human medical expert oversight of the AI-generated suggestions. Regional healthcare regulations were carefully considered throughout implementation.

- Integration and scaling: We recommend starting with a standalone testing environment while planning for future content management system (CMS) integration through well-designed API endpoints. Building with modularity in mind proved valuable for future enhancements. Throughout the process, we faced common challenges such as maintaining context in long medical articles, balancing processing speed with accuracy, and keeping the tone consistent across AI-suggested revisions.

- Model optimization: The diverse model selection of Amazon Bedrock proved particularly valuable. It lets us choose the optimal model for each task, achieve cost efficiency without sacrificing accuracy, and smoothly upgrade to newer models, all while keeping our existing architecture.
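Token usage tracking, mentioned under cost management, can be built on the `usage` field that Bedrock Converse API responses include (`inputTokens`, `outputTokens`, `totalTokens`). The sketch below accumulates usage per pipeline step and estimates cost from a price table you supply; the prices in the test are made-up numbers, so look up current Amazon Bedrock pricing for the models you actually use.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Mapping


@dataclass
class TokenTracker:
    """Accumulate Bedrock Converse token usage per pipeline step."""
    usage: Dict[str, Dict[str, int]] = field(default_factory=dict)

    def record(self, step: str, response: Mapping[str, Any]) -> None:
        # Converse responses report usage.inputTokens and usage.outputTokens.
        tokens = response["usage"]
        totals = self.usage.setdefault(step, {"input": 0, "output": 0})
        totals["input"] += tokens["inputTokens"]
        totals["output"] += tokens["outputTokens"]

    def estimated_cost(self, price_per_1k: Mapping[str, Mapping[str, float]]) -> float:
        """price_per_1k[step] = {"input": price per 1K input tokens, "output": ...}."""
        return sum(
            totals["input"] / 1000 * price_per_1k[step]["input"]
            + totals["output"] / 1000 * price_per_1k[step]["output"]
            for step, totals in self.usage.items()
        )
```

Recording usage per step makes it easy to see whether, for example, switching the review step to a smaller model would be worth its accuracy cost; publishing the totals as CloudWatch custom metrics is a natural extension.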

Initial Results

Our proof of concept delivered strong results across the critical success metrics, demonstrating the potential of AI-assisted medical content review. The solution exceeded the target processing speed improvements while maintaining 80% accuracy and over 90% recall in identifying content requiring updates. Most notably, the AI-powered system applied medical guidelines more consistently than manual reviews and significantly reduced the time burden on medical experts.
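The post does not spell out exactly how accuracy and recall were computed. As a sketch, assuming each content piece carries a binary label where 1 means “requires an update” (note this is the opposite of the review output convention, where 1 means adherent), the two metrics relate as follows. The labels in the test are a toy example, not MACROS data.

```python
from typing import Sequence


def recall(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Share of items that truly need an update (label 1) that were flagged."""
    if len(y_true) != len(y_pred):
        raise ValueError("Label sequences must have the same length")
    true_pos = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    positives = sum(1 for t in y_true if t == 1)
    return true_pos / positives if positives else 0.0


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Share of items whose predicted label matches the expert label."""
    if len(y_true) != len(y_pred):
        raise ValueError("Label sequences must have the same length")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true) if y_true else 0.0
```

In a review-assist setting, high recall with lower accuracy means the system rarely misses content that needs updating, at the cost of some false alarms that medical experts then dismiss.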

Key Takeaways

During implementation, we uncovered several insights critical for optimizing AI performance in medical content analysis. Content chunking was essential for accurate assessment across long documents, and expert validation of parsing rules helped medical experts maintain clinical precision. Most importantly, the project showed that human-AI collaboration, not full automation, is key to a successful implementation. Regular expert feedback and clear performance metrics guided system refinements and incremental improvements. While the system significantly streamlines the review process, it works best as an augmentation tool, with medical experts remaining essential for final validation, creating a more efficient hybrid approach to medical content management.

Conclusion and next steps

This first part of our series demonstrates how generative AI can make the medical content review process faster, more efficient, and more scalable while maintaining high accuracy. Stay tuned for Part 2 of this series, where we cover the production journey, diving deep into challenges and scaling strategies. Are you ready to move your AI initiatives into production?

About the authors

Liza (Elizaveta) Zinovyeva, Ph.D., is an Applied Scientist at the AWS Generative AI Innovation Center and is based in Berlin. She helps customers across different industries integrate generative AI into their existing applications and workflows. She is passionate about AI/ML, finance, and software security topics. In her spare time, she enjoys spending time with her family, sports, learning new technologies, and table quizzes.

Callum Macpherson is a Data Scientist at the AWS Generative AI Innovation Center, where cutting-edge AI meets real-world business transformation. Callum partners directly with AWS customers to design, build, and scale generative AI solutions that unlock new opportunities, accelerate innovation, and deliver measurable impact across industries.

Arefeh Ghahvechi is a Senior AI Strategist at the AWS GenAI Innovation Center, specializing in helping customers realize rapid value from generative AI technologies by bridging innovation and implementation. She identifies high-impact AI opportunities while building the organizational capabilities needed for scaled adoption across enterprises and national initiatives.

Nuno Castro is a Sr. Applied Science Manager. He has 19 years of experience in the field in industries such as finance, manufacturing, and travel, and has led ML teams for 11 years.

Dmitrii Ryzhov is a Senior Account Manager at Amazon Web Services (AWS), helping digital-native companies unlock business potential through AI, generative AI, and cloud technologies. He works closely with customers to identify high-impact business initiatives and accelerate execution by orchestrating strategic AWS support, including access to the right expertise, resources, and innovation programs.

Nikita Kozodoi, PhD, is a Senior Applied Scientist at the AWS Generative AI Innovation Center, working on the frontier of AI research and business. Nikita builds and deploys generative AI and ML solutions that solve real-world problems and drive business impact for AWS customers across industries.

Aiham Taleb, PhD, is a Senior Applied Scientist at the Generative AI Innovation Center, working directly with AWS enterprise customers to apply generative AI across multiple high-impact use cases. Aiham has a PhD in unsupervised representation learning and industry experience spanning various machine learning applications, including computer vision, natural language processing, and medical imaging.
