News publishers want to provide a personalized and informative experience to their readers, but the short shelf life of news articles can make this quite difficult. In news publishing, articles typically have peak readership within the same day of publication. Additionally, news publishers frequently publish new articles and want to show these articles to interested readers as quickly as possible. This poses challenges for interaction-based recommender system methodologies such as collaborative filtering and the deep learning-based approaches used in Amazon Personalize, a managed service that can learn user preferences from their past behavior and quickly adjust recommendations to account for changing user behavior in near real time.

News publishers typically don’t have the budget or the staff to experiment with in-house algorithms, and need a fully managed solution. In this post, we demonstrate how to provide high-quality recommendations for articles with short shelf lives by using text embeddings in Amazon Bedrock. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading artificial intelligence (AI) companies like AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI.

Embeddings are a mathematical representation of a piece of information such as a text or an image. Specifically, they are a vector, or ordered list of numbers. This representation captures the meaning of the image or text. You can then determine how similar two images or texts are by measuring their distance from each other in the embedding space. For this post, we use the Amazon Titan Text Embeddings model.
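The post doesn’t name a specific distance measure for comparing embeddings. Cosine similarity is a common choice for text embeddings, so the following minimal plain-Python sketch uses it. The three-dimensional toy vectors are made up and stand in for real Titan embeddings, which have many more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors, in the range [-1, 1]."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)


def cosine_distance(a, b):
    """0 for vectors pointing the same way, up to 2 for opposite vectors."""
    return 1.0 - cosine_similarity(a, b)


# Toy vectors standing in for real article embeddings (values are made up)
sports_story_1 = [0.9, 0.1, 0.0]
sports_story_2 = [0.8, 0.2, 0.1]
finance_story = [0.0, 0.2, 0.9]

# The two sports stories are closer to each other than to the finance story
print(cosine_distance(sports_story_1, sports_story_2) < cosine_distance(sports_story_1, finance_story))  # True
```

The k-means model used later in this post groups vectors by Euclidean distance. For unit-length vectors, squared Euclidean distance equals 2 minus twice the cosine similarity, so the two measures rank neighbors in the same order.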

Solution overview

By combining the benefits of Amazon Titan Text Embeddings on Amazon Bedrock with the real-time nature of Amazon Personalize, we can recommend articles to interested users within seconds of the article being published. Although Amazon Personalize can recommend articles shortly after they’re published, it generally takes a few hours (and a filter to select items from the correct time frame) to surface items to the right users. For our use case, we want to recommend articles immediately after they’re published.

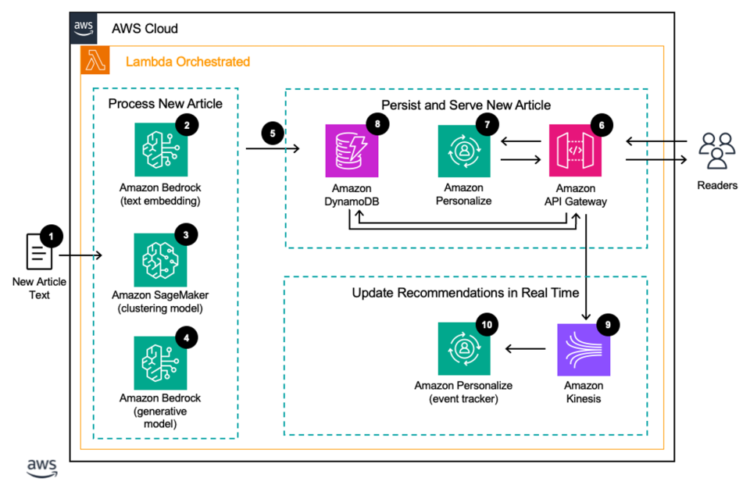

The following diagram shows the architecture of the solution and the high-level steps of the workflow. The architecture follows the AWS best practice of using managed and serverless services where possible.

The workflow consists of the following steps:

1. A trigger invokes an AWS Lambda function every time a new article is published, which runs Steps 2–5.

2. A text embedding model hosted on Amazon Bedrock creates an embedding of the text of the article.

3. An Amazon SageMaker hosted model assigns the article to a cluster of similar articles.

4. An Amazon Bedrock hosted model can also generate headlines and summaries of the new article if needed.

5. The new articles are added to Amazon DynamoDB with information on their type and when they were published, along with a Time-To-Live (TTL) representing when the articles are no longer considered breaking news.

6. When users arrive at the website, their requests are processed by Amazon API Gateway.

7. API Gateway makes a request to Amazon Personalize to learn which individual articles and article types a reader is most interested in, which can be shown directly to the reader.

8. To recommend breaking news articles, a call is made to DynamoDB to determine which articles of each type have been published recently. This allows newly published articles to be shown to interested readers within seconds.

9. As users read articles, their interactions are streamed using Amazon Kinesis Data Streams to an Amazon Personalize event tracker.

10. The Amazon Personalize event tracker updates the deployed personalization models within 1–2 seconds.

Prerequisites

To implement the proposed solution, you should have the following:

- An AWS account and familiarity with Amazon Personalize, SageMaker, DynamoDB, and Amazon Bedrock.

- The Amazon Titan Text Embeddings V2 model enabled on Amazon Bedrock. You can confirm it’s enabled on the Model access page of the Amazon Bedrock console. If Amazon Titan Text Embeddings is enabled, the access status will show as Access granted, as shown in the following screenshot. You can enable access to the model by choosing Manage model access, selecting Amazon Titan Text Embeddings V2, and then choosing Save Changes.

Create embeddings of the text of previously published articles

First, you need to load a set of historically published articles, so that you have a history of user interactions with those articles. Then create embeddings for them using Amazon Titan Text Embeddings. AWS also has machine learning (ML) services that can perform tasks such as translation, summarization, and the identification of an article’s tags, title, or genre, if required. The following code snippet shows how to generate embeddings using Amazon Titan Text Embeddings:

```python
import json

# bedrock_client is a Bedrock runtime client, for example:
#   bedrock_client = boto3.client("bedrock-runtime")


def titan_embeddings(text, bedrock_client):
    """Return the Amazon Titan Text Embeddings V2 vector for `text`."""
    body = json.dumps({
        "inputText": text,
    })

    model_id = "amazon.titan-embed-text-v2:0"
    accept = "application/json"
    content_type = "application/json"

    response = bedrock_client.invoke_model(
        body=body,
        modelId=model_id,
        accept=accept,
        contentType=content_type,
    )

    # The response body is a streaming object; read it and parse the JSON
    response_body = json.loads(response["body"].read())
    return response_body.get("embedding")
```
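The function above sends only `inputText`, so the model returns its default output size. Titan Text Embeddings V2 also accepts optional `dimensions` and `normalize` fields. Smaller vectors cost less to store and cluster, at some loss of fidelity. The following sketch builds that request body. It assumes the accepted sizes are 256, 512, and 1,024; confirm these against the Amazon Bedrock model parameters documentation before relying on them.

```python
import json

# Output sizes assumed to be accepted by amazon.titan-embed-text-v2:0
TITAN_V2_DIMENSIONS = (256, 512, 1024)


def titan_v2_request_body(text, dimensions=1024, normalize=True):
    """Build the JSON request body for Titan Text Embeddings V2."""
    if not text:
        raise ValueError("text must be a non-empty string")
    if dimensions not in TITAN_V2_DIMENSIONS:
        raise ValueError(f"dimensions must be one of {TITAN_V2_DIMENSIONS}")
    return json.dumps({
        "inputText": text,
        "dimensions": dimensions,  # length of the returned embedding vector
        "normalize": normalize,    # request a unit-length vector
    })


print(titan_v2_request_body("Markets rally after rate decision", dimensions=512))
# {"inputText": "Markets rally after rate decision", "dimensions": 512, "normalize": true}
```

You can pass the returned string as `body=` to `invoke_model` instead of the body built in `titan_embeddings`. If you change `dimensions`, use the same value for every article, because the clustering model expects vectors of one fixed length.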

Train and deploy a clustering model

Next, you deploy a clustering model for the historical articles. A clustering model identifies clusters of article embeddings and assigns each cluster an ID. In this case, we use a k-means model hosted on SageMaker, but you can use a different clustering approach if you prefer.

The following code snippet is an example of how to create a list of the text embeddings using the preceding Python function and then train a k-means clustering model on the article embeddings. In this case, the choice of 100 clusters is arbitrary. You should experiment to find the number that works best for your use case. The instance type represents the Amazon Elastic Compute Cloud (Amazon EC2) compute instance that runs the SageMaker k-means training job. For detailed information on which instance types fit your use case and their performance capabilities, see Amazon EC2 Instance types. For information about pricing for these instance types, see Amazon EC2 Pricing. For information about available SageMaker notebook instance types, see CreateNotebookInstance. For most experimentation, you should use an ml.t3.medium instance. This is the default instance type for CPU-based SageMaker images, and is available as part of the AWS Free Tier.

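```python
import numpy as np
from sagemaker import KMeans

# text_list (the historical article texts), bedrock_client, and role
# (a SageMaker execution role ARN) are assumed to be defined already
text_embeddings_list = []
for text in text_list:
    text_embeddings_list.append(titan_embeddings(text, bedrock_client))

num_clusters = 100

kmeans = KMeans(
    role=role,
    instance_count=1,
    instance_type="ml.t3.medium",
    output_path="s3://your_unique_s3bucket_name/",
    k=num_clusters,
    num_trials=num_clusters,
    epochs=10,
)

# The built-in k-means algorithm expects float32 data wrapped in a RecordSet
kmeans.fit(kmeans.record_set(np.asarray(text_embeddings_list, dtype=np.float32)))
```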

After you finish training and deploying the clustering model, you can assign a cluster ID to each of the historical articles by passing their embeddings through the k-means (or other) clustering model. Importantly, you also assign clusters to any articles you consider breaking news. Article shelf life can vary from a couple of days to a couple of hours, depending on the publication.
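Passing an embedding through a trained k-means model amounts to finding its nearest centroid. The following NumPy sketch reproduces that rule locally, which can help when backfilling cluster IDs for many historical articles or spot-checking the endpoint’s output. It assumes you’ve already extracted the trained centroids as a (k, dim) array, with the cluster ID being the centroid’s row index; loading them from the SageMaker model artifact isn’t shown. The two-dimensional data is made up.

```python
import numpy as np


def assign_clusters(embeddings, centroids):
    """Index of the nearest centroid (squared Euclidean distance) for each row.

    embeddings: shape (n_articles, dim); centroids: shape (k, dim).
    """
    x = np.asarray(embeddings, dtype=np.float32)
    c = np.asarray(centroids, dtype=np.float32)
    # ||x - c||^2 = ||x||^2 - 2 x.c + ||c||^2, computed as an (n, k) matrix
    # without materializing an (n, k, dim) array
    sq_dists = (
        (x ** 2).sum(axis=1)[:, None]
        - 2.0 * (x @ c.T)
        + (c ** 2).sum(axis=1)[None, :]
    )
    return sq_dists.argmin(axis=1)


centroids = [[0.0, 0.0], [10.0, 10.0]]
article_embeddings = [[0.5, -0.2], [9.0, 11.0], [1.0, 1.0]]
print(assign_clusters(article_embeddings, centroids))  # [0 1 0]
```

The expanded-distance form keeps memory proportional to articles × clusters. This matters with 1,024-dimensional embeddings and thousands of articles.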

Set up a DynamoDB table

The next step is to set up a DynamoDB table to contain the breaking news articles, their identifiers, and their clusters. Later, you query this table to map each article’s item ID to its cluster ID.

The breaking news table has the following attributes:

- Article cluster ID – An initial cluster ID

- Article ID – The ID of the article (numeric for this example)

- Article timestamp – The time when the article was created

- Article genre – The genre of the article, such as tech, design best practices, and so on

- Article language – A two-letter language code for the article

- Article text – The actual article text

The article cluster ID is the partition key and the article timestamp (in Unix epoch time) is the sort key for the breaking news table.
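The following helpers sketch that key schema. They build the arguments for the low-level boto3 DynamoDB client’s `create_table` and `update_time_to_live` calls, so you can inspect the definition before creating anything. `articleClusterId` matches the name used by the query function later in this post. The attribute names `articleTimestamp` and `expiresAt` and the on-demand billing mode are assumptions.

```python
def breaking_news_table_spec(table_name):
    """create_table arguments: cluster ID partition key, timestamp sort key."""
    return {
        "TableName": table_name,
        "AttributeDefinitions": [
            {"AttributeName": "articleClusterId", "AttributeType": "S"},
            {"AttributeName": "articleTimestamp", "AttributeType": "N"},  # Unix epoch
        ],
        "KeySchema": [
            {"AttributeName": "articleClusterId", "KeyType": "HASH"},   # partition key
            {"AttributeName": "articleTimestamp", "KeyType": "RANGE"},  # sort key
        ],
        "BillingMode": "PAY_PER_REQUEST",
    }


def breaking_news_ttl_spec(table_name, ttl_attribute="expiresAt"):
    """update_time_to_live arguments; the attribute must hold epoch seconds."""
    return {
        "TableName": table_name,
        "TimeToLiveSpecification": {"Enabled": True, "AttributeName": ttl_attribute},
    }


# Usage with a low-level client (not run here):
#   dynamodb = boto3.client("dynamodb")
#   dynamodb.create_table(**breaking_news_table_spec("breaking-news"))
#   dynamodb.get_waiter("table_exists").wait(TableName="breaking-news")
#   dynamodb.update_time_to_live(**breaking_news_ttl_spec("breaking-news"))
```

DynamoDB TTL deletes expired items in the background, some time after the stored timestamp passes. Expired articles can still appear in query results until they’re removed, so you may also want to filter on the TTL attribute when reading.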

Update the article interactions dataset with article clusters

When you create your Amazon Personalize user personalization campaign, the item interactions dataset represents the history of user interactions with your items. For our use case, we train our recommender on the article clusters instead of the individual articles. This lets the model recommend based on cluster-level interactions and learn user preferences for article types rather than for individual articles. That way, when a new article is published, we only have to identify what type of article it is, and we can immediately recommend it to interested users.

To do so, update the interactions dataset by replacing each individual article ID with the cluster ID of that article. Then store the item interactions dataset in an Amazon Simple Storage Service (Amazon S3) bucket, from which it can be imported into Amazon Personalize.
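The following standard-library sketch shows that replacement on a CSV in the Amazon Personalize interactions format, using the USER_ID, ITEM_ID, and TIMESTAMP columns. It assumes a dictionary mapping each article ID (as a string) to its cluster ID, such as one built from the cluster assignments in the previous step. Dropping interactions with unclustered articles is a design choice of this sketch; the sample rows are made up.

```python
import csv
import io


def remap_interactions_to_clusters(interactions_csv, article_to_cluster):
    """Replace each ITEM_ID (an article ID) with that article's cluster ID.

    interactions_csv: CSV text with a header row that includes ITEM_ID.
    article_to_cluster: dict mapping article ID (as a string) to cluster ID.
    Rows for articles without a known cluster are dropped.
    Returns (remapped_csv_text, number_of_dropped_rows).
    """
    reader = csv.DictReader(io.StringIO(interactions_csv))
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=reader.fieldnames, lineterminator="\n")
    writer.writeheader()
    dropped = 0
    for row in reader:
        cluster_id = article_to_cluster.get(row["ITEM_ID"])
        if cluster_id is None:
            dropped += 1
            continue
        row["ITEM_ID"] = str(cluster_id)
        writer.writerow(row)
    return out.getvalue(), dropped


raw = (
    "USER_ID,ITEM_ID,TIMESTAMP\n"
    "u1,1001,1700000000\n"
    "u2,1002,1700000050\n"
    "u1,9999,1700000100\n"  # article 9999 has no cluster assignment
)
remapped, dropped = remap_interactions_to_clusters(raw, {"1001": 7, "1002": 42})
print(remapped, end="")
# USER_ID,ITEM_ID,TIMESTAMP
# u1,7,1700000000
# u2,42,1700000050
print(dropped)  # 1
```

Many interactions now share the same ITEM_ID, which is the intent: Amazon Personalize learns which clusters each user engages with. You can then upload the remapped text to your S3 bucket, for example with the boto3 S3 client’s `put_object`.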

Create an Amazon Personalize user personalization campaign

The USER_PERSONALIZATION recipe generates a list of recommendations for a specific user, subject to the constraints of any filters applied to it. This is useful for populating website home pages and subsections that focus on specific article types, products, or other pieces of content. Refer to the following Amazon Personalize user personalization sample on GitHub for step-by-step instructions to create a user personalization model.

The steps in an Amazon Personalize workflow are as follows:

- Create a dataset group.

- Prepare and import data.

- Create recommenders or custom resources.

- Get recommendations.

To create and deploy a user personalization campaign, you first need to create a user personalization solution. A solution combines a dataset group with a recipe. The recipe is a set of instructions that tells Amazon Personalize how to prepare a model for a specific type of business use case. After this, you train a solution version, then deploy it as a campaign.

The following code snippet shows how to create a user personalization solution resource:

```python
import boto3

personalize = boto3.client("personalize")

create_solution_response = personalize.create_solution(
    name="personalized-articles-solution",
    datasetGroupArn=dataset_group_arn,  # ARN of the dataset group created earlier
    recipeArn="arn:aws:personalize:::recipe/aws-user-personalization-v2",
)

solution_arn = create_solution_response["solutionArn"]
```

The following code snippet shows how to create a user personalization solution version resource:

```python
# Starts training asynchronously; the call returns before training finishes
create_solution_version_response = personalize.create_solution_version(
    solutionArn=solution_arn
)

solution_version_arn = create_solution_version_response["solutionVersionArn"]
```
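Training a solution version runs in the background, and a campaign can only be created from a solution version that has reached ACTIVE status. The following minimal polling helper handles that wait. It takes any zero-argument function that returns a status string, so it can be tested without AWS. The timeout and polling interval are arbitrary placeholders.

```python
import time


def wait_until_active(get_status, timeout_s=4 * 60 * 60, poll_s=60, sleep=time.sleep):
    """Poll get_status() until it returns "ACTIVE".

    Raises RuntimeError if the resource fails or is stopped, and TimeoutError
    if it is still pending after timeout_s seconds.
    """
    waited = 0
    while True:
        status = get_status()
        if status == "ACTIVE":
            return status
        if status.endswith("FAILED") or status.endswith("STOPPED"):
            raise RuntimeError(f"resource entered status {status!r}")
        if waited >= timeout_s:
            raise TimeoutError(f"still {status!r} after {waited} seconds")
        sleep(poll_s)
        waited += poll_s


# Usage (not run here):
#   wait_until_active(lambda: personalize.describe_solution_version(
#       solutionVersionArn=solution_version_arn)["solutionVersion"]["status"])
```

The same helper can wait on the campaign by passing a function that reads `personalize.describe_campaign(campaignArn=campaign_arn)["campaign"]["status"]`.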

The following code snippet shows how to create a user personalization campaign resource:

```python
create_campaign_response = personalize.create_campaign(
    name="personalized-articles-campaign",
    solutionVersionArn=solution_version_arn,
)

campaign_arn = create_campaign_response["campaignArn"]
```

Deliver a curated and hyper-personalized breaking news experience

Articles for the breaking news section of the front page can be drawn from the Amazon Personalize campaign you trained on the article clusters in the previous section. This model identifies the types of articles aligned with each user’s preferences and interests.

You can then retrieve articles of each recommended type by querying DynamoDB for articles of that type and selecting the most recent ones. This solution also gives the editorial team some control over the diversity of articles shown to individual users. Users can see the breadth of content available on the site and a diverse array of perspectives while still having a hyper-personalized experience.

This is done by setting a maximum number of articles that can be shown per type, a value that can be determined experimentally or by the editorial team. The most recently published articles, up to that maximum, are selected from each cluster until the desired number of articles is reached.
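The cap-and-fill rule can be checked without any AWS resources. The following in-memory sketch assumes the articles are already grouped by cluster as (timestamp, article_id) pairs. In the full solution, the ranking comes from Amazon Personalize and the per-cluster lists from DynamoDB. The cluster names and IDs here are made up.

```python
def pick_breaking_news(ranked_clusters, articles_by_cluster, max_per_cluster, desired_items):
    """Select newest articles, at most max_per_cluster from each cluster.

    ranked_clusters: cluster IDs in the order Amazon Personalize ranked them.
    articles_by_cluster: cluster ID -> list of (timestamp, article_id) pairs.
    Stops as soon as desired_items articles have been collected.
    """
    picked = []
    if desired_items <= 0:
        return picked
    for cluster in ranked_clusters:
        newest_first = sorted(articles_by_cluster.get(cluster, []), reverse=True)
        for _, article_id in newest_first[:max_per_cluster]:
            picked.append(article_id)
            if len(picked) == desired_items:
                return picked
    return picked


articles = {
    "tech": [(100, "t1"), (300, "t3"), (200, "t2")],
    "design": [(250, "d1"), (150, "d2")],
    "career": [(50, "c1")],
}
ranking = ["tech", "design", "career"]
print(pick_breaking_news(ranking, articles, max_per_cluster=2, desired_items=4))
# ['t3', 't2', 'd1', 'd2']
print(pick_breaking_news(ranking, articles, max_per_cluster=1, desired_items=4))
# ['t3', 'd1', 'c1']
```

Lowering the cap from 2 to 1 pulls in an article from a third cluster. The trade-off is that fewer articles are returned when only a few clusters have fresh content.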

The following Python function obtains the most recently published articles (as measured by their timestamp) in the article cluster. In production, the individual articles should have a TTL representing the shelf life of the articles. The following code assumes the article IDs are numeric and increase over time. If you want to use string values for your article IDs and the article’s timestamp as the sort key for this table, you’ll need to adjust the code.

The following arguments are passed to the function:

- cluster (str or int) – A string or integer representing the cluster for which we want to obtain the list of articles

- dynamo_client – A Boto3 DynamoDB client

- table_name (str) – The name of the DynamoDB table in which we store the information

- index_name (str) – The name of the index

- max_per_cluster (int) – The maximum number of items to pull per cluster

```python
def query_dynamo_db_articles(
        cluster,
        index_name,
        dynamo_client,
        table_name,
        max_per_cluster):
    # Query one cluster partition, newest first, capped at max_per_cluster items
    arguments = {
        "TableName": table_name,
        "IndexName": index_name,
        "ScanIndexForward": False,  # descending sort-key order (newest first)
        "KeyConditionExpression": "articleClusterId = :V1",
        "ExpressionAttributeValues": {
            ":V1": {"S": str(cluster)}
        },
        "Limit": max_per_cluster,
    }
    return dynamo_client.query(**arguments)
```

The following function builds on the preceding one. It gets the recommended clusters from the Amazon Personalize user personalization model that we created earlier, selects the relevant articles in each cluster, and continues through the clusters until it has the desired number of articles. Its arguments are as follows:

- personalize_runtime – A Boto3 client representing Amazon Personalize Runtime

- personalize_campaign – The campaign ARN generated when you deployed the user personalization campaign

- user_id (str) – The user ID of the reader

- dynamo_client – A Boto3 DynamoDB client

- table_name (str) – The name of the DynamoDB table storing the information

- index_name (str) – The name of the index

- max_per_cluster (int) – The maximum number of articles to pull per cluster

- desired_items (int) – The total number of articles to return

```python
def breaking_news_cluster_recommendation(personalize_runtime,
                                         personalize_campaign,
                                         user_id,
                                         dynamo_client,
                                         table_name,
                                         index_name,
                                         max_per_cluster,
                                         desired_items):
    # Returns the recommended cluster IDs for this user, best match first
    recommendation = personalize_runtime.get_recommendations(
        campaignArn=personalize_campaign,
        userId=user_id
    )

    item_count = 0
    item_list = []

    for cluster_number in recommendation["itemList"]:
        cluster = cluster_number["itemId"]
        # Newest articles in this cluster, at most max_per_cluster of them
        dynamo_query_response = query_dynamo_db_articles(
            cluster,
            index_name,
            dynamo_client,
            table_name,
            max_per_cluster
        )

        for item in dynamo_query_response["Items"]:
            item_list.append(item)
            item_count += 1
            if item_count == desired_items:
                break

        # Stop scanning clusters once enough articles have been collected
        if item_count == desired_items:
            break

    return item_list
```

Keep recommendations up to date for users

When users interact with an article, the interactions are sent to an event tracker. However, unlike a typical Amazon Personalize deployment, here we send each interaction as if it occurred with the cluster the article belongs to. There are several ways to do this. One is to embed the article’s cluster in its metadata along with the article ID, so both can be fed back to the event tracker. Another is to look up the article’s cluster by its ID in a lightweight cache or key-value database.

Whichever approach you choose, after you obtain the article’s cluster, you stream in an interaction with that cluster using the event tracker.

The following code snippet sets up the event tracker:

```python
create_event_tracker_response = personalize.create_event_tracker(
    name=event_tracker_name,
    datasetGroupArn=dataset_group_arn,
)
```

The following code snippet feeds new interactions to the event tracker:

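```python
from datetime import datetime, timezone

import boto3

personalize_events = boto3.client("personalize-events")

event_tracker_id = create_event_tracker_response["trackingId"]

response = personalize_events.put_events(
    trackingId=event_tracker_id,
    userId=sample_user,
    sessionId=session_id,  # a unique ID for this user's session
    # Up to 10 interactions per call. itemId is the article's cluster ID,
    # not its article ID; article_cluster_id and the "click" event type
    # are placeholders for your own values.
    eventList=[
        {
            "eventType": "click",
            "itemId": str(article_cluster_id),
            "sentAt": datetime.now(timezone.utc),
        }
    ],
)
```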
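The following minimal sketch uses the lookup approach described earlier, with a plain dictionary standing in for the lightweight cache. It converts a user’s reads into eventList batches and respects the `put_events` limit of 10 events per call. The "click" event type is an assumption and should match the event types in your interactions dataset. Articles missing from the lookup are skipped.

```python
from datetime import datetime, timezone

MAX_EVENTS_PER_CALL = 10  # PutEvents accepts at most 10 events per request


def cluster_event_batches(reads, article_to_cluster, event_type="click"):
    """Turn (article_id, read_time) pairs into PutEvents eventList batches.

    Each event's itemId is the article's cluster ID rather than its article ID.
    Articles missing from article_to_cluster are skipped.
    """
    events = [
        {
            "eventType": event_type,
            "itemId": str(article_to_cluster[article_id]),
            "sentAt": read_time,
        }
        for article_id, read_time in reads
        if article_id in article_to_cluster
    ]
    for start in range(0, len(events), MAX_EVENTS_PER_CALL):
        yield events[start:start + MAX_EVENTS_PER_CALL]


read_at = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
batches = list(cluster_event_batches([("a1", read_at), ("a2", read_at)], {"a1": 7}))
print(len(batches), batches[0][0]["itemId"])  # 1 7

# Usage (not run here):
#   for batch in cluster_event_batches(reads, article_to_cluster):
#       personalize_events.put_events(trackingId=event_tracker_id, userId=sample_user,
#                                     sessionId=session_id, eventList=batch)
```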

These new interactions cause Amazon Personalize to update its recommendations in real time. Let’s see what this looks like in practice.

With a sample dataset derived from the CI&T DeskDrop dataset, a user logging in to their homepage would see these articles. (The dataset is a mixture of Portuguese and English articles; the raw text has been translated but the titles have not. The solution described in this post works for multilingual audiences without requiring separate deployments.) All the articles shown are considered breaking news. This means we haven’t tracked any interactions with them in our dataset, and they are being recommended using the clustering techniques described earlier.

However, we can interact with the more technical articles, as shown in the following screenshot.

When we refresh our recommendations, the page is updated.

Let’s change our behavior and interact with articles about design best practices and career development.

We get the following recommendations.

If we limit the number of articles that we can draw per cluster, we can also enforce a bit more diversity in our recommendations.

As new articles are added as part of the news publishing process, they are first saved to an S3 bucket. A Lambda trigger on the bucket invokes a series of steps:

- Generate an embedding of the text of the article using the model on Amazon Bedrock.

- Determine the cluster ID of the article using the k-means clustering model on SageMaker that you trained earlier.

- Store the relevant information on the article in a DynamoDB table.
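These steps can be sketched as a single handler. This is a minimal outline, not the post’s implementation. The AWS calls are passed in as plain functions, so the flow can be exercised locally. The item attribute names other than `articleClusterId` are hypothetical. The processing time stands in for the publication time, and the 24-hour shelf life is a placeholder for whatever your publication uses.

```python
import time
import urllib.parse

SHELF_LIFE_SECONDS = 24 * 60 * 60  # hypothetical breaking-news window


def handle_new_articles(event, read_article, embed, assign_cluster, save_item, now=time.time):
    """Process an S3 ObjectCreated notification for newly published articles.

    The four callables are injected so the flow can be tested without AWS:
      read_article(bucket, key) -> dict with "articleId" and "text"
      embed(text) -> embedding vector (for example, titan_embeddings)
      assign_cluster(vector) -> cluster ID (for example, a SageMaker endpoint call)
      save_item(item) -> None (for example, a DynamoDB Table.put_item wrapper)
    """
    saved = []
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        # S3 URL-encodes object keys in event notifications
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        article = read_article(bucket, key)
        cluster_id = assign_cluster(embed(article["text"]))
        published = int(now())  # processing time used as the publication time
        item = {
            "articleClusterId": str(cluster_id),
            "articleTimestamp": published,
            "articleId": article["articleId"],
            "articleText": article["text"],
            "expiresAt": published + SHELF_LIFE_SECONDS,  # TTL, epoch seconds
        }
        save_item(item)
        saved.append(item)
    return saved


# In the Lambda function itself (not run here):
#   def lambda_handler(event, context):
#       return handle_new_articles(event, read_article=..., embed=...,
#                                  assign_cluster=..., save_item=...)
```

Injecting the dependencies keeps the handler testable with fakes and lets you swap the clustering step, for example for the local nearest-centroid lookup, without touching the rest of the flow.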

Clean up

To avoid incurring future charges, delete the resources you created while building this solution:

- Delete the SageMaker resources.

- Delete the Amazon Personalize resources.

- Delete the Amazon DynamoDB tables.

Conclusion

In this post, we described how you can recommend breaking news to users with AWS AI/ML services. By combining Amazon Personalize with Amazon Titan Text Embeddings on Amazon Bedrock, you can show articles to interested users within seconds of their publication.

As always, AWS welcomes your feedback. Leave your thoughts and questions in the comments section. To learn more about the services discussed in this post, you can sign up for an AWS Skill Builder account, where you can find free digital courses on Amazon Personalize, Amazon Bedrock, Amazon SageMaker, and other AWS services.

About the Authors

Eric Bolme is a Specialist Solutions Architect with AWS based on the East Coast of the United States. He has 8 years of experience building out a variety of deep learning and other AI use cases and focuses on personalization and recommendation use cases with AWS.

Joydeep Dutta is a Principal Solutions Architect at AWS. Joydeep enjoys working with AWS customers to migrate their workloads to the cloud, optimize for cost, and help with architectural best practices. He is passionate about enterprise architecture that helps reduce cost and complexity in the enterprise. He lives in New Jersey and enjoys listening to music and spending time outdoors in his spare time.
