Welcome to Part 3 of our illustrated journey through the exciting world of Natural Language Processing! If you caught Part 2, you'll remember that we chatted about word embeddings and why they're so cool.

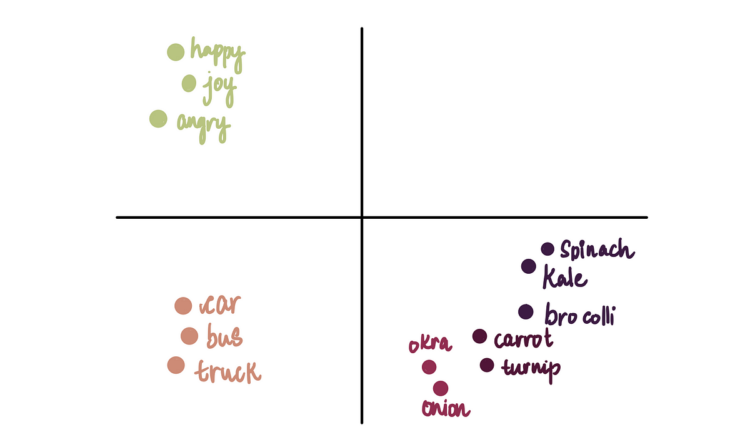

Word embeddings allow us to create maps of words that capture their nuances and complex relationships.
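To make the "map" idea concrete, here is a minimal sketch of how closeness on such a map is often measured: cosine similarity between word vectors. The three 3-dimensional vectors below are hypothetical, invented purely for illustration, and were not learned by Word2Vec or any other model. Real embeddings typically have hundreds of dimensions.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between vectors u and v (1.0 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical toy vectors chosen by hand for illustration; NOT learned embeddings.
toy_embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

# Related words ("cat", "dog") point in similar directions, so they score higher
# than unrelated words ("cat", "car").
print(cosine_similarity(toy_embeddings["cat"], toy_embeddings["dog"]))
print(cosine_similarity(toy_embeddings["cat"], toy_embeddings["car"]))
```

In a trained model, the vectors themselves are what Word2Vec learns; the similarity measure stays the same.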

This article will break down the math behind building word embeddings using a technique called Word2Vec, a machine learning model specifically designed to generate meaningful word embeddings.

Word2Vec offers two methods, Skip-gram and CBOW, but we'll focus on how the Skip-gram method works, since it's the most widely used.
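As a preview, Skip-gram learns by taking each word in a sentence (the "center" word) and trying to predict the words around it (its "context"). CBOW flips this and predicts the center word from its context. The sketch below shows only the first step of Skip-gram: turning a sentence into (center, context) training pairs. It assumes simple whitespace tokenization, and `skipgram_pairs` and its `window` parameter are hypothetical names chosen for illustration. No model is trained here.

```python
def skipgram_pairs(tokens, window=2):
    """Return (center, context) pairs for every token within `window` positions of each center word."""
    pairs = []
    for i, center in enumerate(tokens):
        # Neighbors up to `window` positions to the left and right, clipped to the sentence bounds.
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs

# Assumes naive whitespace tokenization for illustration.
sentence = "the quick brown fox jumps".split()
for center, context in skipgram_pairs(sentence, window=1):
    print(center, "->", context)
```

With `window=1`, each word is paired only with its immediate neighbors (for example, `quick -> the` and `quick -> brown`); a larger window gives each center word more context pairs to learn from.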

These terms and concepts might sound complex right now, but don't worry: at its core, it's just some intuitive math (and a sprinkle of machine learning magic).

Real quick: before diving into this article, I strongly encourage you to read my series on the basics of machine…