Organizations are constantly looking for methods to make use of their proprietary information and area experience to realize a aggressive edge. With the appearance of basis fashions (FMs) and their exceptional pure language processing capabilities, a brand new alternative has emerged to unlock the worth of their knowledge property.

As organizations try to ship customized experiences to prospects utilizing generative AI, it turns into paramount to specialize the habits of FMs utilizing their very own—and their prospects’—knowledge. Retrieval Augmented Technology (RAG) has emerged as a easy but efficient method to attain a desired degree of specialization.

Amazon Bedrock Information Bases is a totally managed functionality that simplifies the administration of the complete RAG workflow, empowering organizations to offer FMs and brokers contextual data from firm’s non-public knowledge sources to ship extra related and correct responses tailor-made to their particular wants.

For organizations creating multi-tenant merchandise, comparable to unbiased software program distributors (ISVs) creating software program as a service (SaaS) choices, the power to personalize experiences for every of their prospects (tenants of their SaaS utility) is especially vital. This personalization might be achieved by implementing a RAG method that selectively makes use of tenant-specific knowledge.

On this put up, we talk about and supply examples of methods to obtain personalization utilizing Amazon Bedrock Information Bases. We focus significantly on addressing the multi-tenancy challenges that ISVs face, together with knowledge isolation, safety, tenant administration, and price administration. We deal with situations the place the RAG structure is built-in into the ISV utility and never straight uncovered to tenants. Though the precise implementations introduced on this put up use Amazon OpenSearch Service as a vector database to retailer tenants’ knowledge, the challenges and structure options proposed might be prolonged and tailor-made to different vector retailer implementations.

Multi-Tenancy design concerns

When architecting a multi-tenanted RAG system, organizations have to take a number of concerns into consideration:

- Tenant isolation – One essential consideration in designing multi-tenanted techniques is the extent of isolation between the information and assets associated to every tenant. These assets embrace knowledge sources, ingestion pipelines, vector databases, and RAG shopper utility. The extent of isolation is usually ruled by safety, efficiency, and the scalability necessities of your resolution, collectively together with your regulatory necessities. For instance, chances are you’ll have to encrypt the information associated to every of your tenants utilizing a special encryption key. You might also have to be sure that excessive exercise generated by one of many tenants doesn’t have an effect on different tenants.

- Tenant variability – The same but distinct consideration is the extent of variability of the options offered to every tenant. Within the context of RAG techniques, tenants may need various necessities for knowledge ingestion frequency, doc chunking technique, or vector search configuration.

- Tenant administration simplicity – Multi-tenant options want a mechanism for onboarding and offboarding tenants. This dimension determines the diploma of complexity for this course of, which could contain provisioning or tearing down tenant-specific infrastructure, comparable to knowledge sources, ingestion pipelines, vector databases, and RAG shopper purposes. This course of might additionally contain including or deleting tenant-specific knowledge in its knowledge sources.

- Price-efficiency – The working prices of a multi-tenant resolution rely on the best way it gives the isolation mechanism for tenants, so designing a cost-efficient structure for the answer is essential.

These 4 concerns should be fastidiously balanced and weighted to swimsuit the wants of the precise resolution. On this put up, we current a mannequin to simplify the decision-making course of. Utilizing the core isolation ideas of silo, pool, and bridge outlined within the SaaS Tenant Isolation Methods whitepaper, we suggest three patterns for implementing a multi-tenant RAG resolution utilizing Amazon Bedrock Information Bases, Amazon Easy Storage Service (Amazon S3), and OpenSearch Service.

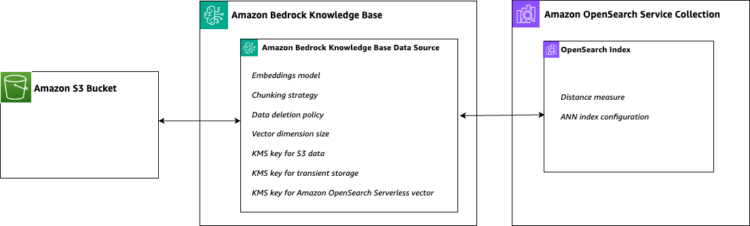

A typical RAG resolution utilizing Amazon Bedrock Information Bases consists of a number of parts, as proven within the following determine:

The principle problem in adapting this structure for multi-tenancy is figuring out methods to present isolation between tenants for every of the parts. We suggest three prescriptive patterns that cater to totally different use circumstances and provide carrying ranges of isolation, variability, administration simplicity, and cost-efficiency. The next determine illustrates the trade-offs between these three architectural patterns by way of attaining tenant isolation, variability, cost-efficiency, and ease of tenant administration.

Multi-tenancy patterns

On this part, we describe the implementation of those three totally different multi-tenancy patterns in a RAG structure based mostly on Amazon Bedrock Information Bases, discussing their use circumstances in addition to their execs and cons.

Silo

The silo sample, illustrated within the following determine, provides the best degree of tenant isolation, as a result of the complete stack is deployed and managed independently for every single tenant.

Within the context of the RAG structure applied by Amazon Bedrock Information Bases, this sample prescribes the next:

- A separate knowledge supply per tenant – On this put up, we think about the situation through which tenant paperwork to be vectorized are saved in Amazon S3, subsequently a separate S3 bucket is provisioned per tenant. This enables for per-tenant AWS Key Administration Service (AWS KMS) encryption keys, in addition to per-tenant S3 lifecycle insurance policies to handle object expiration, and object versioning insurance policies to keep up a number of variations of objects. Having separate buckets per tenant gives isolation and permits for custom-made configurations based mostly on tenant necessities.

- A separate information base per tenant – This enables for a separate chunking technique per tenant, and it’s significantly helpful in case you envision the doc foundation of your tenants to be totally different in nature. For instance, one in all your tenants may need a doc base composed of flat textual content paperwork, which might be handled with fixed-size chunking, whereas one other tenant may need a doc base with specific sections, for which semantic chunking can be higher suited to part. Having a special information base per tenant additionally permits you to determine on totally different embedding fashions, providing you with the chance to decide on totally different vector dimensions, balancing accuracy, price, and latency. You’ll be able to select a special KMS key per tenant for the transient knowledge shops, which Amazon Bedrock makes use of for end-to-end per-tenant encryption. You can too select per-tenant knowledge deletion insurance policies to regulate whether or not your vectors are deleted from the vector database when a information base is deleted. Separate information bases additionally imply you can have totally different ingestion schedules per tenants, permitting you to comply with totally different knowledge freshness requirements together with your prospects.

- A separate OpenSearch Serverless assortment per tenant – Having a separate OpenSearch Serverless assortment per tenant means that you can have a separate KMS encryption key per tenant, sustaining per-tenant end-to-end encryption. For every tenant-specific assortment, you’ll be able to create a separate vector index, subsequently selecting for every tenant the space metric between Euclidean and dot product, in an effort to select how a lot significance to offer to the doc size. You can too select the precise settings for the HNSW algorithm per tenant to regulate reminiscence consumption, price, and indexing time. Every vector index, together with the setup of metadata mappings in your information base, can have a special metadata set per tenant, which can be utilized to carry out filtered searches. Metadata filtering can be utilized within the silo sample to limit the search to a subset of paperwork with a particular attribute. For instance, one in all your tenants is perhaps importing dated paperwork and desires to filter paperwork pertaining to a particular yr, whereas one other tenant is perhaps importing paperwork coming from totally different firm divisions and desires to filter over the documentation of a particular firm division.

As a result of the silo sample provides tenant architectural independence, onboarding and offboarding a tenant means creating and destroying the RAG stack for that tenant, composed of the S3 bucket, information base, and OpenSearch Serverless assortment. You’d usually do that utilizing infrastructure as code (IaC). Relying in your utility structure, you may additionally have to replace the log sinks and monitoring techniques for every tenant.

Though the silo sample provides the best degree of tenant isolation, additionally it is the costliest to implement, primarily resulting from making a separate OpenSearch Serverless assortment per tenant for the next causes:

- Minimal capability prices – Every OpenSearch Serverless assortment encrypted with a separate KMS key has a minimal of two OpenSearch Compute Models (OCUs) charged hourly. These OCUs are charged independently from utilization, which means that you’ll incur prices for dormant tenants in case you select to have a separate KMS encryption key per tenant.

- Scalability overhead – Every assortment individually scales OCUs relying on utilization, in steps of 6 GB of reminiscence, and related vCPUs and quick entry storage. Which means assets may not be absolutely and optimally utilized throughout tenants.

When selecting the silo sample, be aware {that a} most of 100 information bases are supported in every AWS account. This makes the silo sample favorable in your largest tenants with particular isolation necessities. Having a separate information base per tenant additionally reduces the influence of quotas on concurrent ingestion jobs (most one concurrent job per KB, 5 per account), job measurement (100 GB per job), and knowledge sources (most of 5 million paperwork per knowledge supply). It additionally improves the efficiency equity as perceived by your tenants.

Deleting a information base throughout offboarding a tenant is perhaps time-consuming, relying on the dimensions of the information sources and the synchronization course of. To mitigate this, you’ll be able to set the information deletion coverage in your tenants’ information bases to RETAIN. This manner, the information base deletion course of won’t delete your tenants’ knowledge from the OpenSearch Service index. You’ll be able to delete the index by deleting the OpenSearch Serverless assortment.

Pool

In distinction with the silo sample, within the pool sample, illustrated within the following determine, the entire end-to-end RAG structure is shared by your tenants, making it significantly appropriate to accommodate many small tenants.

The pool sample prescribes the next:

- Single knowledge supply – The tenants’ knowledge is saved throughout the similar S3 bucket. This means that the pool mannequin helps a shared KMS key for encryption at relaxation, not providing the opportunity of per-tenant encryption keys. To determine tenant possession downstream for every doc uploaded to Amazon S3, a corresponding JSON metadata file needs to be generated and uploaded. The metadata file era course of might be asynchronous, and even batched for a number of recordsdata, as a result of Amazon Bedrock Information Bases requires an specific triggering of the ingestion job. The metadata file should use the identical identify as its related supply doc file, with

.metadata.jsonappended to the tip of the file identify, and should be saved in the identical folder or location because the supply file within the S3 bucket. The next code is an instance of the format:

Within the previous JSON construction, the important thing tenantId has been intentionally chosen, and might be modified to a key you wish to use to specific tenancy. The tenancy area shall be used at runtime to filter paperwork belonging to a particular tenant, subsequently the filtering key at runtime should match the metadata key within the JSON used to index the paperwork. Moreover, you’ll be able to embrace different metadata keys to carry out additional filtering that isn’t based mostly on tenancy. In the event you don’t add the object.metadata.json file, the shopper utility received’t be capable of discover the doc utilizing metadata filtering.

- Single information base – A single information base is created to deal with the information ingestion in your tenants. Which means your tenants will share the identical chunking technique and embedding mannequin, and share the identical encryption at-rest KMS key. Furthermore, as a result of ingestion jobs are triggered per knowledge supply per KB, you may be restricted to supply to your tenants the identical knowledge freshness requirements.

- Single OpenSearch Serverless assortment and index – Your tenant knowledge is pooled in a single OpenSearch Service vector index, subsequently your tenants share the identical KMS encryption key for vector knowledge, and the identical HNSW parameters for indexing and question. As a result of tenant knowledge isn’t bodily segregated, it’s essential that the question shopper be capable of filter outcomes for a single tenant. This may be effectively achieved utilizing both the Amazon Bedrock Information Bases

RetrieveorRetrieveAndGenerate, expressing the tenant filtering situation as a part of the retrievalConfiguration (for extra particulars, see Amazon Bedrock Information Bases now helps metadata filtering to enhance retrieval accuracy). If you wish to prohibit the vector search to return outcomes fortenant_1, the next is an instance shopper implementation performingRetrieveAndGeneratebased mostly on the AWS SDK for Python (Boto3):

textual content accommodates the unique consumer question that must be answered. Bearing in mind the doc base,

As a result of the end-to-end structure is pooled on this sample, onboarding and offboarding a tenant doesn’t require you to create new bodily or logical constructs, and it’s so simple as beginning or stopping and importing particular tenant paperwork to Amazon S3. This means that there isn’t a AWS managed API that can be utilized to offboard and end-to-end neglect a particular tenant. To delete the historic paperwork belonging to a particular tenant, you’ll be able to simply delete the related objects in Amazon S3. Sometimes, prospects could have an exterior utility that maintains the listing of obtainable tenants and their standing, facilitating the onboarding and offboarding course of.

Sharing the monitoring system and logging capabilities on this sample reduces the complexity of operations with numerous tenants. Nevertheless, it requires you to gather the tenant-specific metrics from the shopper facet to carry out particular tenant attribution.

The pool sample optimizes the end-to-end price of your RAG structure, as a result of sharing OCUs throughout tenants maximizes the usage of every OCU and minimizes the tenants’ idle time. Sharing the identical pool of OCUs throughout tenants signifies that this sample doesn’t provide efficiency isolation on the vector retailer degree, so the most important and most lively tenants may influence the expertise of different tenants.

When selecting the pool sample in your RAG structure, try to be conscious {that a} single ingestion job can ingest or delete a most of 100 GB. Moreover, the information supply can have a most of 5 million paperwork. If the answer has many tenants which might be geographically distributed, think about triggering the ingestion job a number of occasions a day so that you don’t hit the ingestion job measurement restrict. Additionally, relying on the quantity and measurement of your paperwork to be synchronized, the time for ingestion shall be decided by the embedding mannequin invocation charge. For instance, think about the next situation:

- Variety of tenants to be synchronized = 10

- Common variety of paperwork per tenant = 100

- Common measurement per doc = 2 MB, containing roughly 200,000 tokens divided in 220 chunks of 1,000 tokens to permit for overlap

- Utilizing Amazon Titan Embeddings v2 on demand, permitting for two,000 RPM and 300,000 TPM

This may outcome within the following:

- Complete embeddings requests = 10*100*220 = 220,000

- Complete tokens to course of = 10*100*1,000=1,000,000

- Complete time taken to embed is dominated by the RPM, subsequently 220,000/2,000 = 1 hour, 50 minutes

This implies you can set off an ingestion job 12 occasions per day to have a superb time distribution of knowledge to be ingested. This calculation is a best-case situation and doesn’t account for the latency launched by the FM when creating the vector from the chunk. In the event you count on having to synchronize numerous tenants on the similar time, think about using provisioned throughput to lower the time it takes to create vector embeddings. This method can even assist distribute the load on the embedding fashions, limiting throttling of the Amazon Bedrock runtime API calls.

Bridge

The bridge sample, illustrated within the following determine, strikes a steadiness between the silo and pool patterns, providing a center floor that balances tenant knowledge isolation and safety.

The bridge sample delivers the next traits:

- Separate knowledge supply per tenant in a standard S3 bucket – Tenant knowledge is saved in the identical S3 bucket, however prefixed by a tenant identifier. Though having a special prefix per tenant doesn’t provide the opportunity of utilizing per-tenant encryption keys, it does create a logical separation that can be utilized to segregate knowledge downstream within the information bases.

- Separate information base per tenant – This sample prescribes making a separate information base per tenant much like the silo sample. Subsequently, the concerns within the silo sample apply. Purposes constructed utilizing the bridge sample normally share question shoppers throughout tenants, so they should determine the precise tenant’s information base to question. They’ll determine the information base by storing the tenant-to-knowledge base mapping in an exterior database, which manages tenant-specific configurations. The next instance reveals methods to retailer this tenant-specific data in an Amazon DynamoDB desk:

In a manufacturing setting, your utility will retailer tenant-specific parameters belonging to different performance in your knowledge shops. Relying in your utility structure, you may select to retailer

knowledgebaseIdandmodelARNalongside the opposite tenant-specific parameters, or create a separate knowledge retailer (for instance, thetenantKbConfigdesk) particularly in your RAG structure.This mapping can then be utilized by the shopper utility by invoking the

RetrieveAndGenerateAPI. The next is an instance implementation: - Separate OpenSearch Service index per tenant – You retailer knowledge throughout the similar OpenSearch Serverless assortment, however you create a vector index per tenant. This means your tenants share the identical KMS encryption key and the identical pool of OCUs, optimizing the OpenSearch Service assets utilization for indexing and querying. The separation in vector indexes offers you the pliability of selecting totally different HNSM parameters per tenant, letting you tailor the efficiency of your k-NN indexing and querying in your totally different tenants.

The bridge sample helps as much as 100 tenants, and onboarding and offboarding a tenant requires the creation and deletion of a information base and OpenSearch Service vector index. To delete the information pertaining to a specific tenant, you’ll be able to delete the created assets and use the tenant-specific prefix as a logical parameter in your Amazon S3 API calls. In contrast to the silo sample, the bridge sample doesn’t permit for per-tenant end-to-end encryption; it provides the identical degree of tenant customization provided by the silo sample whereas optimizing prices.

Abstract of variations

The next determine and desk present a consolidated view for evaluating the traits of the totally different multi-tenant RAG structure patterns. This complete overview highlights the important thing attributes and trade-offs related to the pool, bridge, and silo patterns, enabling knowledgeable decision-making based mostly on particular necessities.

The next determine illustrates the mapping of design traits to parts of the RAG structure.

The next desk summarizes the traits of the multi-tenant RAG structure patterns.

| Attribute | Attribute of | Pool | Bridge | Silo |

| Per-tenant chunking technique | Amazon Bedrock Information Base Knowledge Supply | No | Sure | Sure |

| Buyer managed key for encryption of transient knowledge and at relaxation | Amazon Bedrock Information Base Knowledge Supply | No | No | Sure |

| Per-tenant distance measure | Amazon OpenSearch Service Index | No | Sure | Sure |

| Per-tenant ANN index configuration | Amazon OpenSearch Service Index | No | Sure | Sure |

| Per-tenant knowledge deletion insurance policies | Amazon Bedrock Information Base Knowledge Supply | No | Sure | Sure |

| Per-tenant vector measurement | Amazon Bedrock Information Base Knowledge Supply | No | Sure | Sure |

| Tenant efficiency isolation | Vector database | No | No | Sure |

| Tenant onboarding and offboarding complexity | General resolution | Easiest, requires administration of recent tenants in current infrastructure | Medium, requires minimal administration of end-to-end infrastructure | Hardest, requires administration of end-to-end infrastructure |

| Question shopper implementation | Authentic Knowledge Supply | Medium, requires dynamic filtering | Hardest, requires exterior tenant mapping desk | Easiest, similar as single-tenant implementation |

| Amazon S3 tenant administration complexity | Amazon S3 buckets and objects | Hardest, want to keep up tenant particular metadata recordsdata for every object | Medium, every tenant wants a special S3 path | Easiest, every tenant requires a special S3 bucket |

| Price | Vector database | Lowest | Medium | Highest |

| Per-tenant FM used to create vector embeddings | Amazon Bedrock Information Base | No | Sure | Sure |

Conclusion

This put up explored three distinct patterns for implementing a multi-tenant RAG structure utilizing Amazon Bedrock Information Bases and OpenSearch Service. The silo, pool, and bridge patterns provide various ranges of tenant isolation, variability, administration simplicity, and cost-efficiency, catering to totally different use circumstances and necessities. By understanding the trade-offs and concerns related to every sample, organizations could make knowledgeable choices and select the method that finest aligns with their wants.

Get began with Amazon Bedrock Information Bases right this moment.

About the Authors

Emanuele Levi is a Options Architect within the Enterprise Software program and SaaS group, based mostly in London. Emanuele helps UK prospects on their journey to refactor monolithic purposes into trendy microservices SaaS architectures. Emanuele is especially occupied with event-driven patterns and designs, particularly when utilized to analytics and AI, the place he has experience within the fraud-detection trade.

Emanuele Levi is a Options Architect within the Enterprise Software program and SaaS group, based mostly in London. Emanuele helps UK prospects on their journey to refactor monolithic purposes into trendy microservices SaaS architectures. Emanuele is especially occupied with event-driven patterns and designs, particularly when utilized to analytics and AI, the place he has experience within the fraud-detection trade.

Mehran Nikoo is a Generative AI Go-To-Market Specialist at AWS. He leads the generative AI go-to-market technique for UK and Eire.

Mehran Nikoo is a Generative AI Go-To-Market Specialist at AWS. He leads the generative AI go-to-market technique for UK and Eire.

Dani Mitchell is a Generative AI Specialist Options Architect at AWS. He’s centered on pc imaginative and prescient use case and helps AWS prospects in EMEA speed up their machine studying and generative AI journeys with Amazon SageMaker and Amazon Bedrock.

Dani Mitchell is a Generative AI Specialist Options Architect at AWS. He’s centered on pc imaginative and prescient use case and helps AWS prospects in EMEA speed up their machine studying and generative AI journeys with Amazon SageMaker and Amazon Bedrock.