As enterprises increasingly embrace generative AI, they face challenges in managing the associated costs. With demand for generative AI applications surging across projects and multiple lines of business, accurately allocating and tracking spend becomes more complex. Organizations need to prioritize their generative AI spending based on business impact and criticality while maintaining cost transparency across customer and user segments. This visibility is essential for setting accurate pricing for generative AI offerings, implementing chargebacks, and establishing usage-based billing models.

Without a scalable approach to controlling costs, organizations risk unbudgeted usage and cost overruns. Manual spend monitoring and periodic usage limit adjustments are inefficient and prone to human error, leading to potential overspending. Although tagging is supported on a variety of Amazon Bedrock resources (including provisioned models, custom models, agents and agent aliases, model evaluations, prompts, prompt flows, knowledge bases, batch inference jobs, custom model jobs, and model duplication jobs), there was previously no capability for tagging on-demand foundation models. This limitation has added complexity to cost management for generative AI initiatives.

To address these challenges, Amazon Bedrock has launched a capability that organizations can use to tag on-demand models and monitor associated costs. Organizations can now label all Amazon Bedrock models with AWS cost allocation tags, aligning usage to specific organizational taxonomies such as cost centers, business units, and applications. To manage their generative AI spend judiciously, organizations can use services like AWS Budgets to set tag-based budgets and alarms to monitor usage, and receive alerts for anomalies or predefined thresholds. This scalable, programmatic approach eliminates inefficient manual processes, reduces the risk of excess spending, and ensures that critical applications receive priority. Enhanced visibility and control over AI-related expenses enables organizations to maximize their generative AI investments and foster innovation.

Introducing Amazon Bedrock application inference profiles

Amazon Bedrock recently introduced cross-Region inference, enabling automatic routing of inference requests across AWS Regions. This feature uses system-defined inference profiles (predefined by Amazon Bedrock), which configure different model Amazon Resource Names (ARNs) from various Regions and unify them under a single model identifier (both model ID and ARN). While this enhances flexibility in model usage, it doesn’t support attaching custom tags for tracking, managing, and controlling costs across workloads and tenants.

To bridge this gap, Amazon Bedrock now introduces application inference profiles, a new capability that allows organizations to apply custom cost allocation tags to track, manage, and control their Amazon Bedrock on-demand model costs and usage. This capability enables organizations to create custom inference profiles for Bedrock base foundation models, adding metadata specific to tenants, thereby streamlining resource allocation and cost monitoring across varied AI applications.

Creating application inference profiles

Application inference profiles allow users to define customized settings for inference requests and resource management. These profiles can be created in two ways:

- Single model ARN configuration: Directly create an application inference profile using a single on-demand base model ARN, allowing quick setup with a chosen model.

- Copy from system-defined inference profile: Copy an existing system-defined inference profile to create an application inference profile, which will inherit configurations such as cross-Region inference capabilities for enhanced scalability and resilience.

The application inference profile ARN has the format arn:aws:bedrock:<region>:<account_id>:application-inference-profile/<inference_profile_id>, where the inference profile ID component is a unique 12-digit alphanumeric string generated by Amazon Bedrock upon profile creation.

System-defined compared to application inference profiles

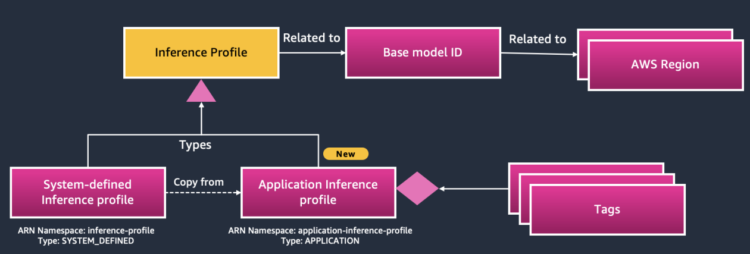

The primary difference between system-defined and application inference profiles lies in their type attribute and resource specifications within the ARN namespace:

- System-defined inference profiles: These have a type attribute of SYSTEM_DEFINED and use the inference-profile resource type. They’re designed to support cross-Region and multi-model capabilities but are managed centrally by AWS.

- Application inference profiles: These profiles have a type attribute of APPLICATION and use the application-inference-profile resource type. They’re user-defined, providing granular control and flexibility over model configurations and allowing organizations to tailor policies with attribute-based access control (ABAC) using AWS Identity and Access Management (IAM). This enables more precise IAM policy authoring to manage Amazon Bedrock access more securely and efficiently.
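As a rough illustration of the distinction, a gateway could tell the two profile kinds apart from the ARN alone by looking at the resource type. The following is a minimal sketch: the function name is hypothetical, and the account ID, Region, and profile IDs in the usage comments are made-up placeholders.

```python
def classify_inference_profile_arn(arn: str) -> str:
    """Return 'SYSTEM_DEFINED' or 'APPLICATION' based on the ARN resource type."""
    # General ARN layout: arn:partition:service:region:account-id:resource
    # maxsplit=5 keeps any ':' inside the resource ID (model versions use them).
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn" or parts[2] != "bedrock":
        raise ValueError(f"not an Amazon Bedrock ARN: {arn!r}")

    resource_type, _, resource_id = parts[5].partition("/")
    if not resource_id:
        raise ValueError(f"ARN has no resource ID: {arn!r}")

    if resource_type == "inference-profile":
        return "SYSTEM_DEFINED"
    if resource_type == "application-inference-profile":
        return "APPLICATION"
    raise ValueError(f"not an inference profile ARN: {arn!r}")


# Hypothetical ARNs, for illustration only:
# classify_inference_profile_arn(
#     "arn:aws:bedrock:us-east-1:123456789012:application-inference-profile/abc123def456")
# classify_inference_profile_arn(
#     "arn:aws:bedrock:us-east-1:123456789012:inference-profile/us.example.model-v1:0")
```

A check like this is only a convenience for routing or logging; the type attribute returned by GetInferenceProfile remains the authoritative source.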

These differences are important when integrating with Amazon API Gateway or other API clients to help ensure correct model invocation, resource allocation, and workload prioritization. Organizations can apply customized policies based on profile type, enhancing control and security for distributed AI workloads. Both profile types are shown in the figure at the start of this section.

Establishing application inference profiles for cost management

Imagine an insurance provider embarking on a journey to enhance customer experience through generative AI. The company identifies opportunities to automate claims processing, provide personalized policy recommendations, and improve risk assessment for clients across various regions. However, to realize this vision, the organization must adopt a robust framework for effectively managing their generative AI workloads.

The journey begins with the insurance provider creating application inference profiles that are tailored to their diverse business units. By assigning AWS cost allocation tags, the organization can effectively monitor and track their Bedrock spend patterns. For example, the claims processing team established an application inference profile with tags such as dept:claims, team:automation, and app:claims_chatbot. This tagging structure categorizes costs and allows assessment of usage against budgets.

Users can manage and use application inference profiles using Bedrock APIs or the boto3 SDK:

- CreateInferenceProfile: Initiates a new inference profile, allowing users to configure the parameters for the profile.

- GetInferenceProfile: Retrieves the details of a specific inference profile, including its configuration and current status.

- ListInferenceProfiles: Lists all available inference profiles within the user’s account, providing an overview of the profiles that have been created.

- TagResource: Allows users to attach tags to specific Bedrock resources, including application inference profiles, for better organization and cost tracking.

- ListTagsForResource: Fetches the tags associated with a specific Bedrock resource, helping users understand how their resources are categorized.

- UntagResource: Removes specified tags from a resource, allowing for management of resource organization.

- Invoke models with application inference profiles:

  - Converse API: Invokes the model using a specified inference profile for conversational interactions.

  - ConverseStream API: Similar to the Converse API but supports streaming responses for real-time interactions.

  - InvokeModel API: Invokes the model with a specified inference profile for general use cases.

  - InvokeModelWithResponseStream API: Invokes the model and streams the response, useful for handling large data outputs or long-running processes.

Note that application inference profile APIs cannot be accessed through the AWS Management Console.

Invoke model with application inference profile using Converse API

An application inference profile can be created and then passed to the Converse API as the model identifier to hold a conversation using that profile.
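The original code listing is not reproduced here, so what follows is a minimal sketch of that flow rather than the authors' sample. It assumes the boto3 `bedrock` client's `create_inference_profile` call and the `bedrock-runtime` client's `converse` call; the helper names, profile name, tags, and source ARN are placeholders chosen for illustration. The clients are passed in as arguments so the helpers can be exercised without AWS credentials.

```python
from typing import Any, Dict


def create_application_profile(bedrock_client: Any, name: str,
                               source_arn: str, tags: Dict[str, str]) -> str:
    """Create an application inference profile and return its ARN."""
    response = bedrock_client.create_inference_profile(
        inferenceProfileName=name,
        description=f"Application inference profile for {name}",
        # source_arn is either an on-demand foundation model ARN or a
        # system-defined inference profile ARN (the two creation paths).
        modelSource={"copyFrom": source_arn},
        # Cost allocation tags are attached at creation time.
        tags=[{"key": key, "value": value} for key, value in tags.items()],
    )
    return response["inferenceProfileArn"]


def ask(runtime_client: Any, profile_arn: str, prompt: str) -> str:
    """Send one user message through the profile and return the reply text."""
    response = runtime_client.converse(
        modelId=profile_arn,  # the profile ARN takes the place of a model ID
        messages=[{"role": "user", "content": [{"text": prompt}]}],
    )
    return response["output"]["message"]["content"][0]["text"]


# Usage sketch (requires boto3 and AWS credentials; values are placeholders):
# import boto3
# bedrock = boto3.client("bedrock")
# runtime = boto3.client("bedrock-runtime")
# arn = create_application_profile(
#     bedrock, "claims-chatbot", "<foundation model or system profile ARN>",
#     {"dept": "claims", "team": "automation", "app": "claims_chatbot"})
# print(ask(runtime, arn, "Summarize this claim in two sentences."))
```

Because usage is attributed to the profile ARN, every request sent this way inherits the tags set at creation.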

Tagging, resource management, and cost management with application inference profiles

Tagging within application inference profiles allows organizations to allocate costs to specific generative AI initiatives, ensuring precise expense tracking. Application inference profiles enable organizations to apply cost allocation tags at creation and support additional tagging through the existing TagResource and UntagResource APIs, which allow metadata association with various AWS resources. Custom tags such as project_id, cost_center, model_version, and environment help categorize resources, improving cost transparency and allowing teams to monitor spend and usage against budgets.
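Tags on an existing profile can be adjusted after creation with the same three tagging operations. The following is a minimal sketch, assuming the boto3 `bedrock` client's `tag_resource`, `untag_resource`, and `list_tags_for_resource` calls; the helper name and the tag values in the usage comments are hypothetical.

```python
from typing import Any, Dict, Iterable, Optional


def retag_profile(bedrock_client: Any, profile_arn: str,
                  add: Optional[Dict[str, str]] = None,
                  remove: Optional[Iterable[str]] = None) -> Dict[str, str]:
    """Remove and add tags on a profile, then return the resulting tag set."""
    remove_keys = list(remove or [])
    if remove_keys:
        bedrock_client.untag_resource(resourceARN=profile_arn, tagKeys=remove_keys)
    if add:
        bedrock_client.tag_resource(
            resourceARN=profile_arn,
            tags=[{"key": key, "value": value} for key, value in add.items()],
        )
    # Read the tags back so callers see what is actually attached.
    response = bedrock_client.list_tags_for_resource(resourceARN=profile_arn)
    return {tag["key"]: tag["value"] for tag in response.get("tags", [])}


# Usage sketch (requires boto3 and AWS credentials; values are placeholders):
# import boto3
# bedrock = boto3.client("bedrock")
# retag_profile(bedrock, "<application inference profile ARN>",
#               add={"cost_center": "cc-1234", "environment": "prod"},
#               remove=["model_version"])
```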

Visualize cost and usage with application inference profiles and cost allocation tags

Leveraging cost allocation tags with tools like AWS Budgets, AWS Cost Anomaly Detection, AWS Cost Explorer, AWS Cost and Usage Reports (CUR), and Amazon CloudWatch provides organizations insights into spending trends, helping detect and address cost spikes early to stay within budget.

With AWS Budgets, organizations can set tag-based thresholds and receive alerts as spending approaches budget limits, offering a proactive approach to maintaining control over AI resource costs and quickly addressing any unexpected surges. For example, a $10,000 per month budget could be applied to a specific chatbot application for the Support Team in the Sales Department by applying the following tags to the application inference profile: dept:sales, team:support, and app:chat_app. AWS Cost Anomaly Detection can also monitor tagged resources for unusual spending patterns, making it easier to operationalize cost allocation tags by automatically identifying and flagging irregular costs.
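A tag-based budget like the one described could be defined programmatically. The sketch below only builds the request for the boto3 `budgets` client's `create_budget` call and filters on a single tag (app:chat_app) for simplicity. It assumes the `user:<key>$<value>` form for `TagKeyValue` cost filters and assumes the tag has already been activated as a cost allocation tag; the function name, account ID, budget name, and email address are placeholders.

```python
from typing import Any, Dict


def build_tag_budget_request(account_id: str, budget_name: str,
                             monthly_limit_usd: float,
                             tag_key: str, tag_value: str,
                             alert_email: str,
                             alert_percent: float = 80.0) -> Dict[str, Any]:
    """Build create_budget arguments for a monthly cost budget scoped to one tag."""
    return {
        "AccountId": account_id,
        "Budget": {
            "BudgetName": budget_name,
            "BudgetLimit": {"Amount": f"{monthly_limit_usd:.2f}", "Unit": "USD"},
            "TimeUnit": "MONTHLY",
            "BudgetType": "COST",
            # Assumed filter syntax for user-defined tags: user:<key>$<value>
            "CostFilters": {"TagKeyValue": [f"user:{tag_key}${tag_value}"]},
        },
        "NotificationsWithSubscribers": [
            {
                "Notification": {
                    "NotificationType": "ACTUAL",
                    "ComparisonOperator": "GREATER_THAN",
                    "Threshold": alert_percent,  # percent of the budget limit
                    "ThresholdType": "PERCENTAGE",
                },
                "Subscribers": [
                    {"SubscriptionType": "EMAIL", "Address": alert_email}
                ],
            }
        ],
    }


# Usage sketch (requires boto3 and AWS credentials; values are placeholders):
# import boto3
# request = build_tag_budget_request(
#     "123456789012", "sales-support-chat-app", 10000, "app", "chat_app",
#     "finops@example.com")
# boto3.client("budgets").create_budget(**request)
```

Scoping to all three tags at once would need a closer look at how the Budgets service combines multiple filter values, which this sketch does not attempt.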

The following AWS Budgets console screenshot illustrates an exceeded budget threshold:

For deeper analysis, AWS Cost Explorer and CUR enable organizations to analyze tagged resources daily, weekly, and monthly, supporting informed decisions on resource allocation and cost optimization. By visualizing cost and usage based on metadata attributes, such as tag key/value and ARN, organizations gain an actionable, granular view of their spending.
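The same tag-filtered view can be pulled programmatically. This is a minimal sketch that builds arguments for the Cost Explorer `get_cost_and_usage` call (boto3 client `ce`), filtering daily unblended cost by one or more tag key/value pairs; the function name and defaults are illustrative choices, not part of the original post.

```python
from datetime import date, timedelta
from typing import Any, Dict, Optional


def build_cost_query(tags: Dict[str, str], days: int = 30,
                     today: Optional[date] = None) -> Dict[str, Any]:
    """Build get_cost_and_usage arguments for daily cost filtered by tags."""
    if not tags:
        raise ValueError("at least one tag is required")
    end = today or date.today()
    start = end - timedelta(days=days)

    tag_filters = [{"Tags": {"Key": key, "Values": [value]}}
                   for key, value in tags.items()]
    # A single tag is passed directly; several tags are combined with And.
    cost_filter = tag_filters[0] if len(tag_filters) == 1 else {"And": tag_filters}

    return {
        # Start is inclusive and End is exclusive in Cost Explorer.
        "TimePeriod": {"Start": start.isoformat(), "End": end.isoformat()},
        "Granularity": "DAILY",
        "Metrics": ["UnblendedCost"],
        "Filter": cost_filter,
    }


# Usage sketch (requires boto3 and AWS credentials):
# import boto3
# query = build_cost_query({"dept": "claims", "app": "claims_chatbot"})
# result = boto3.client("ce").get_cost_and_usage(**query)
# for day in result["ResultsByTime"]:
#     print(day["TimePeriod"]["Start"], day["Total"]["UnblendedCost"]["Amount"])
```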

The following AWS Cost Explorer console screenshot illustrates a cost and usage graph filtered by tag key and value:

The following AWS Cost Explorer console screenshot illustrates a cost and usage graph filtered by Bedrock application inference profile ARN:

Organizations can also use Amazon CloudWatch to monitor runtime metrics for Bedrock applications, providing additional insights into performance and cost management. Metrics can be graphed by application inference profile, and teams can set alarms based on thresholds for tagged resources. Notifications and automated responses triggered by these alarms enable real-time management of cost and resource usage, preventing budget overruns and maintaining financial stability for generative AI workloads.
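An invocation alarm on a single profile might look like the following. This is a sketch under stated assumptions: it builds arguments for the CloudWatch `put_metric_alarm` call, and it assumes that Bedrock runtime metrics live in the `AWS/Bedrock` namespace with the application inference profile ARN appearing as the `ModelId` dimension value, which is worth confirming in the CloudWatch console for your account. The function name, alarm name, and SNS topic are placeholders.

```python
from typing import Any, Dict


def build_invocation_alarm(profile_arn: str, alarm_name: str,
                           max_invocations_per_minute: int,
                           sns_topic_arn: str) -> Dict[str, Any]:
    """Build put_metric_alarm arguments for per-minute invocations on a profile."""
    return {
        "AlarmName": alarm_name,
        "Namespace": "AWS/Bedrock",
        "MetricName": "Invocations",
        # Assumption: the profile ARN is reported as the ModelId dimension.
        "Dimensions": [{"Name": "ModelId", "Value": profile_arn}],
        "Statistic": "Sum",
        "Period": 60,  # seconds
        "EvaluationPeriods": 1,
        "Threshold": float(max_invocations_per_minute),
        "ComparisonOperator": "GreaterThanThreshold",
        # Quiet periods with no data points are not treated as breaches.
        "TreatMissingData": "notBreaching",
        "AlarmActions": [sns_topic_arn],
    }


# Usage sketch (requires boto3 and AWS credentials; values are placeholders):
# import boto3
# alarm = build_invocation_alarm(
#     "<application inference profile ARN>", "claims-chatbot-invocations",
#     500, "<SNS topic ARN>")
# boto3.client("cloudwatch").put_metric_alarm(**alarm)
```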

The following Amazon CloudWatch console screenshot highlights Bedrock runtime metrics filtered by Bedrock application inference profile ARN:

The following Amazon CloudWatch console screenshot highlights an invocation limit alarm filtered by Bedrock application inference profile ARN:

Through the combined use of tagging, budgeting, anomaly detection, and detailed cost analysis, organizations can effectively manage their AI investments. By leveraging these AWS tools, teams can maintain a clear view of spending patterns, enabling more informed decision-making and maximizing the value of their generative AI initiatives while ensuring critical applications remain within budget.

Retrieving application inference profile ARN based on the tags for model invocation

Organizations often use a generative AI gateway or large language model proxy when calling Amazon Bedrock APIs, including model inference calls. With the introduction of application inference profiles, organizations need to retrieve the inference profile ARN to invoke model inference for on-demand foundation models. There are two primary approaches to obtain the appropriate inference profile ARN.

- Static configuration approach: This method involves maintaining a static configuration file in the AWS Systems Manager Parameter Store or AWS Secrets Manager that maps tenant/workload keys to their corresponding application inference profile ARNs. While this approach offers simplicity in implementation, it has significant limitations. As the number of inference profiles scales from tens to hundreds or even thousands, managing and updating this configuration file becomes increasingly cumbersome. The static nature of this method requires manual updates whenever changes occur, which can lead to inconsistencies and increased maintenance overhead, especially in large-scale deployments where organizations need to dynamically retrieve the correct inference profile based on tags.

- Dynamic retrieval using the Resource Groups API: The second, more robust approach leverages the AWS Resource Groups GetResources API to dynamically retrieve application inference profile ARNs based on resource and tag filters. This method allows for flexible querying using various tag keys such as tenant ID, project ID, department ID, workload ID, model ID, and Region. The primary advantage of this approach is its scalability and dynamic nature, enabling real-time retrieval of application inference profile ARNs based on current tag configurations.

However, there are considerations to keep in mind. The GetResources API has throttling limits, necessitating the implementation of a caching mechanism. Organizations should maintain a cache with a Time-To-Live (TTL) based on the API’s output to optimize performance and reduce API calls. Additionally, implementing thread safety is crucial to help ensure that organizations always read the most up-to-date inference profile ARNs when the cache is being refreshed based on the TTL.
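The caching and thread-safety considerations above can be sketched as a small client-side cache. This is one possible shape, not the authors' implementation: it assumes the boto3 `resourcegroupstaggingapi` client's `get_resources` call and assumes `bedrock:application-inference-profile` is the resource type filter string. The class name, the default TTL, and the injectable clock are illustrative choices.

```python
import threading
import time
from typing import Any, Callable, Dict, List, Tuple


class ProfileArnCache:
    """Thread-safe TTL cache of application inference profile ARNs keyed by tags."""

    def __init__(self, tagging_client: Any, ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._client = tagging_client
        self._ttl = ttl_seconds
        self._clock = clock
        self._lock = threading.Lock()
        self._entries: Dict[Tuple[Tuple[str, str], ...],
                            Tuple[float, List[str]]] = {}

    def get_arns(self, tags: Dict[str, str]) -> List[str]:
        key = tuple(sorted(tags.items()))
        # One lock guards both reads and refreshes, so a reader never sees a
        # half-updated entry. Simple, at the cost of serializing refreshes.
        with self._lock:
            cached = self._entries.get(key)
            if cached is not None and cached[0] > self._clock():
                return list(cached[1])
            arns = self._fetch(tags)
            self._entries[key] = (self._clock() + self._ttl, arns)
            return list(arns)

    def _fetch(self, tags: Dict[str, str]) -> List[str]:
        kwargs: Dict[str, Any] = {
            # Assumed resource type string for application inference profiles.
            "ResourceTypeFilters": ["bedrock:application-inference-profile"],
            "TagFilters": [{"Key": k, "Values": [v]} for k, v in tags.items()],
        }
        arns: List[str] = []
        while True:
            page = self._client.get_resources(**kwargs)
            arns.extend(item["ResourceARN"]
                        for item in page.get("ResourceTagMappingList", []))
            token = page.get("PaginationToken")
            if not token:  # an empty token means there are no more pages
                return arns
            kwargs["PaginationToken"] = token


# Usage sketch (requires boto3 and AWS credentials):
# import boto3
# cache = ProfileArnCache(boto3.client("resourcegroupstaggingapi"))
# arns = cache.get_arns({"dept": "claims", "app": "claims_chatbot"})
```

Holding the lock during the API call keeps the sketch short; a busier gateway might refresh in the background and swap entries in afterward so readers are not blocked.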

As illustrated in the following diagram, this dynamic approach involves a client making a request to the Resource Groups service with specific resource type and tag filters. The service returns the corresponding application inference profile ARN, which is then cached for a set period. The client can then use this ARN to invoke the Bedrock model through the InvokeModel or Converse API.

By adopting this dynamic retrieval method, organizations can create a more flexible and scalable system for managing application inference profiles, allowing for more straightforward adaptation to changing requirements and growth in the number of profiles.

The architecture in the preceding figure illustrates two methods for dynamically retrieving inference profile ARNs based on tags. Let’s describe both approaches with their pros and cons:

- Bedrock client maintaining the cache with TTL: This method involves the client directly querying the AWS ResourceGroups service using the GetResources API based on resource type and tag filters. The client caches the retrieved keys in a client-maintained cache with a TTL. The client is responsible for refreshing the cache by calling the GetResources API in a thread-safe manner.

- Lambda-based method: This approach uses AWS Lambda as an intermediary between the calling client and the ResourceGroups API. This method employs Lambda Extensions core with an in-memory cache, potentially reducing the number of API calls to ResourceGroups. It also interacts with Parameter Store, which can be used for configuration management or storing cached data persistently.

Both methods use similar filtering criteria (resource-type-filter and tag-filters) to query the ResourceGroups API, allowing for precise retrieval of inference profile ARNs based on attributes such as tenant, model, and Region. The choice between these methods depends on factors such as the expected request volume, desired latency, cost considerations, and the need for additional processing or security measures. The Lambda-based approach offers more flexibility and optimization potential, while the direct API method is simpler to implement and maintain.

Overview of Amazon Bedrock resources tagging capabilities

The tagging capabilities of Amazon Bedrock have evolved significantly, providing a comprehensive framework for resource management across multi-account AWS Control Tower setups. This evolution enables organizations to manage resources across development, staging, and production environments, helping organizations track, manage, and allocate costs for their AI/ML workloads.

At its core, the Amazon Bedrock resource tagging system spans multiple operational components. Organizations can effectively tag their batch inference jobs, agents, custom model jobs, knowledge bases, prompts, and prompt flows. This foundational level of tagging supports granular control over operational resources, enabling precise tracking and management of different workload components. The model management aspect of Amazon Bedrock introduces another layer of tagging capabilities, encompassing both custom and base models, and distinguishes between provisioned and on-demand models, each with its own tagging requirements and capabilities.

With the introduction of application inference profiles, organizations can now manage and track their on-demand Bedrock base foundation models. Because teams can create application inference profiles derived from system-defined inference profiles, they can configure more precise resource tracking and cost allocation at the application level. This capability is particularly valuable for organizations that are running multiple AI applications across different environments, because it provides clear visibility into resource usage and costs at a granular level.

The following diagram visualizes the multi-account structure and demonstrates how these tagging capabilities can be implemented across different AWS accounts.

Conclusion

In this post, we introduced the latest feature from Amazon Bedrock, application inference profiles. We explored how it operates and discussed key considerations. The code sample for this feature is available in this GitHub repository. This new capability enables organizations to tag, allocate, and track on-demand model inference workloads and spending across their operations. Organizations can label all Amazon Bedrock models using tags and monitor usage according to their specific organizational taxonomy (such as tenants, workloads, cost centers, business units, teams, and applications). This feature is now generally available in all AWS Regions where Amazon Bedrock is offered.

About the authors

Kyle T. Blocksom is a Sr. Solutions Architect with AWS based in Southern California. Kyle’s passion is to bring people together and leverage technology to deliver solutions that customers love. Outside of work, he enjoys surfing, eating, wrestling with his dog, and spoiling his niece and nephew.

Dhawal Patel is a Principal Machine Learning Architect at AWS. He has worked with organizations ranging from large enterprises to mid-sized startups on problems related to distributed computing and artificial intelligence. He focuses on deep learning, including NLP and computer vision domains. He helps customers achieve high-performance model inference on SageMaker.
