Customers today expect to find products quickly and efficiently through intuitive search functionality. A seamless search journey not only enhances the overall user experience, but also directly impacts key business metrics such as conversion rates, average order value, and customer loyalty. According to a McKinsey study, 78% of consumers are more likely to make repeat purchases from companies that provide personalized experiences. As a result, delivering exceptional search functionality has become a strategic differentiator for modern ecommerce services. With ever-expanding product catalogs and an increasing diversity of brands, harnessing advanced search technologies is essential for success.

Semantic search enables digital commerce providers to deliver more relevant search results by going beyond keyword matching. It uses an embeddings model to create vector embeddings that capture the meaning of the input query. This makes the search more resilient to phrasing variations and lets it accept multimodal inputs such as text, image, audio, and video. For example, a user inputs a query containing text and an image of a product they like, and the search engine translates both into vector embeddings using a multimodal embeddings model and retrieves related items from the catalog using embedding similarities. To learn more about semantic search and how Amazon Prime Video uses it to help customers find their favorite content, see Amazon Prime Video advances search for sports using Amazon OpenSearch Service.
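To make "retrieves related items using embedding similarities" concrete, the following is a minimal sketch of similarity ranking with cosine similarity. The three-dimensional vectors and item names are hypothetical placeholders chosen for readability; in the solution described in this post, the vectors come from the embeddings model and the nearest-neighbor search is performed by OpenSearch rather than by hand-written code.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vec, catalog):
    """Return catalog item names ordered from most to least similar."""
    scored = [(cosine_similarity(query_vec, vec), name) for name, vec in catalog.items()]
    return [name for _, name in sorted(scored, reverse=True)]


# Hypothetical toy embeddings; the index in this post stores 1,024 dimensions.
catalog = {
    "pink leather sandal": [0.9, 0.1, 0.0],
    "black running shoe": [0.1, 0.9, 0.2],
    "blue denim jacket": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]  # stands in for the embedding of the user's text and image

print(rank_by_similarity(query, catalog))
```

The item whose vector points in nearly the same direction as the query vector ranks first, regardless of whether the query and the item share any keywords.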

While semantic search provides contextual understanding and flexibility, keyword search remains a crucial component of a comprehensive ecommerce search solution. At its core, keyword search provides the essential baseline functionality of accurately matching user queries to product data and metadata, making sure explicit product names, brands, or attributes can be reliably retrieved. This matching capability is vital, because users often have specific items in mind when initiating a search, and meeting these explicit needs with precision is important to deliver a satisfactory experience.

Hybrid search combines the strengths of keyword search and semantic search, enabling retailers to deliver more accurate and relevant results to their customers. According to an OpenSearch blog post, hybrid search improves result quality by 8–12% compared to keyword search and by 15% compared to natural language search. However, combining keyword search and semantic search presents significant complexity because different query types produce scores on different scales. Using Amazon OpenSearch Service hybrid search, customers can seamlessly integrate these approaches by combining relevance scores from multiple search types into one unified score.

OpenSearch Service is the AWS recommended vector database for Amazon Bedrock. It’s a fully managed service that you can use to deploy, operate, and scale OpenSearch on AWS. OpenSearch is a distributed open-source search and analytics engine composed of a search engine and vector database. OpenSearch Service can help you deploy and operate your search infrastructure with native vector database capabilities delivering as low as single-digit millisecond latencies for searches across billions of vectors, making it ideal for real-time AI applications. To learn more, see Improve search results for AI using Amazon OpenSearch Service as a vector database with Amazon Bedrock.

Multimodal embedding models like Amazon Titan Multimodal Embeddings G1, available through Amazon Bedrock, play a critical role in enabling hybrid search functionality. These models generate embeddings for both text and images by representing them in a shared semantic space. This allows systems to retrieve relevant results across modalities, such as finding images using text queries or combining text with image inputs.

In this post, we walk you through how to build a hybrid search solution using OpenSearch Service powered by multimodal embeddings from the Amazon Titan Multimodal Embeddings G1 model through Amazon Bedrock. This solution demonstrates how you can enable users to submit both text and images as queries to retrieve relevant results from a sample retail image dataset.

Overview of solution

In this post, you’ll build a solution that you can use to search through a sample image dataset in the retail space, using a multimodal hybrid search system powered by OpenSearch Service. This solution has two key workflows: a data ingestion workflow and a query workflow.

Data ingestion workflow

The data ingestion workflow generates vector embeddings for text, images, and metadata using Amazon Bedrock and the Amazon Titan Multimodal Embeddings G1 model. Then, it stores the vector embeddings, text, and metadata in an OpenSearch Service domain.

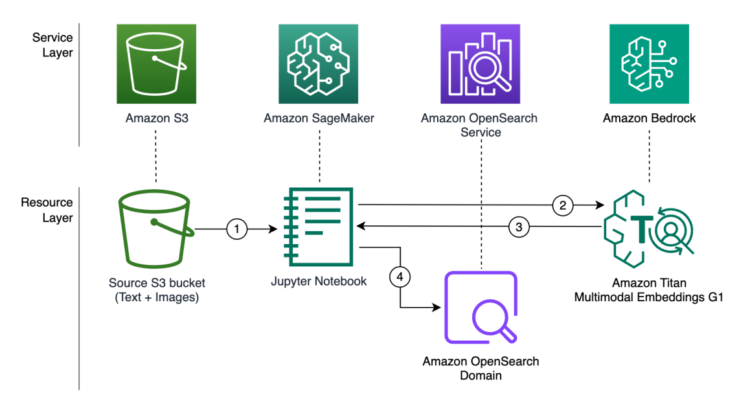

In this workflow, shown in the preceding figure, we use a SageMaker JupyterLab notebook to perform the following actions:

- Read text, images, and metadata from an Amazon Simple Storage Service (Amazon S3) bucket, and encode images in Base64 format.

- Send the text, images, and metadata to Amazon Bedrock using its API to generate embeddings using the Amazon Titan Multimodal Embeddings G1 model.

- The Amazon Bedrock API replies with embeddings to the Jupyter notebook.

- Store both the embeddings and metadata in an OpenSearch Service domain.

Query workflow

In the query workflow, an OpenSearch search pipeline is used to convert the query input to embeddings using the embeddings model registered with OpenSearch. Then, within the OpenSearch search pipeline results processor, the results of semantic search and keyword search are combined using the normalization processor to provide relevant search results to users. Search pipelines remove the heavy lifting of building score normalization and combination outside your OpenSearch Service domain.

The workflow consists of the following steps, shown in the following figure:

- The user submits a query input containing text, a Base64 encoded image, or both to OpenSearch Service. Text submitted is used for both semantic and keyword search, and the image is used for semantic search.

- The OpenSearch search pipeline performs the keyword search using textual inputs and a neural search using vector embeddings generated by Amazon Bedrock using the Titan Multimodal Embeddings G1 model.

- The normalization processor within the pipeline scales search results using techniques like min_max and combines keyword and semantic scores using arithmetic_mean.

- Ranked search results are returned to the user.
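The normalization and combination steps above can be sketched in a few lines of Python. This is a simplified illustration of the arithmetic only, not the processor's actual implementation: the document IDs and raw scores are hypothetical, a document missing from one result set is assumed to contribute a score of 0, and edge-case handling inside OpenSearch may differ.

```python
def min_max(scores):
    """Rescale raw scores to the range [0, 1]; equal scores all map to 1.0."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc: 1.0 for doc in scores}
    return {doc: (s - lo) / (hi - lo) for doc, s in scores.items()}


def combine(keyword, semantic, keyword_weight=0.5):
    """Weighted arithmetic mean of the normalized keyword and semantic scores."""
    kw, sem = min_max(keyword), min_max(semantic)
    combined = {
        doc: keyword_weight * kw.get(doc, 0.0) + (1 - keyword_weight) * sem.get(doc, 0.0)
        for doc in set(kw) | set(sem)
    }
    return sorted(combined.items(), key=lambda item: item[1], reverse=True)


# Hypothetical raw scores: keyword scores are unbounded, semantic scores are not.
keyword_scores = {"A": 12.0, "B": 3.0, "C": 7.5}
semantic_scores = {"A": 0.75, "B": 1.0, "C": 0.5}

print(combine(keyword_scores, semantic_scores, keyword_weight=0.5))
# → [('A', 0.75), ('B', 0.5), ('C', 0.25)]
```

Without normalization, the keyword scores would dominate simply because they live on a larger scale. After min-max scaling, document A (best keyword match, middling semantic match) and document B (best semantic match, worst keyword match) can be compared fairly, and the weight controls which signal matters more.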

Walkthrough overview

To deploy the solution, complete the following high-level steps:

- Create a connector for Amazon Bedrock in OpenSearch Service.

- Create an OpenSearch search pipeline and enable hybrid search.

- Create an OpenSearch Service index for storing the multimodal embeddings and metadata.

- Ingest sample data to the OpenSearch Service index.

- Create OpenSearch Service query functions to test search functionality.

Prerequisites

For this walkthrough, you should have the following prerequisites:

The code is open source and hosted on GitHub.

Create a connector for Amazon Bedrock in OpenSearch Service

To use OpenSearch Service machine learning (ML) connectors with other AWS services, you need to set up an IAM role allowing access to that service. In this section, we demonstrate the steps to create an IAM role and then create the connector.

Create an IAM role

Complete the following steps to set up an IAM role to delegate Amazon Bedrock permissions to OpenSearch Service:

- Add the following policy to the new role to allow OpenSearch Service to invoke the Amazon Titan Multimodal Embeddings G1 model:

      {
        "Version": "2012-10-17",
        "Statement": [
          {
            "Effect": "Allow",
            "Action": "bedrock:InvokeModel",
            "Resource": "arn:aws:bedrock:region::foundation-model/amazon.titan-embed-image-v1"
          }
        ]
      }

- Modify the role trust policy as follows. You can follow the instructions in IAM role management to edit the trust relationship of the role.

      {
        "Version": "2012-10-17",
        "Statement": [
          {
            "Effect": "Allow",
            "Principal": { "Service": "opensearchservice.amazonaws.com" },
            "Action": "sts:AssumeRole"
          }
        ]
      }

Connect an Amazon Bedrock model to OpenSearch

After you create the role, use the Amazon Resource Name (ARN) of the role to define the constant in the SageMaker notebook along with the OpenSearch domain endpoint. Complete the following steps:

- Register a model group. Note the model group ID returned in the response; you need it to register a model in a later step.

- Create a connector, which facilitates registering and deploying external models in OpenSearch. The response will contain the connector ID.

- Register the external model to the model group and deploy the model. In this step, you register and deploy the model at the same time: by setting deploy=true, the registered model is deployed as well.
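The three steps above map onto the OpenSearch ML Commons REST API. The following sketch only builds the request URLs and the bodies for the model group and model registration; it doesn't send anything. The endpoint, names, and IDs are hypothetical placeholders, and the connector body is omitted because it depends on the connector blueprint for your OpenSearch version. In the notebook, each request would be sent with a signed HTTP POST.

```python
def ml_url(endpoint, path):
    """Build a URL under the ML Commons plugin namespace."""
    return f"{endpoint.rstrip('/')}/_plugins/_ml/{path}"


def model_group_payload(name, description):
    """Body for registering a model group."""
    return {"name": name, "description": description}


def register_model_payload(name, model_group_id, connector_id):
    """Body for registering an externally hosted (remote) model."""
    return {
        "name": name,
        "function_name": "remote",
        "model_group_id": model_group_id,
        "connector_id": connector_id,
    }


# Hypothetical domain endpoint and IDs, for illustration only.
endpoint = "https://search-example.us-east-1.es.amazonaws.com"
steps = [
    ("POST", ml_url(endpoint, "model_groups/_register")),
    ("POST", ml_url(endpoint, "connectors/_create")),
    # deploy=true registers and deploys the model in a single call.
    ("POST", ml_url(endpoint, "models/_register?deploy=true")),
]
for method, url in steps:
    print(method, url)

print(register_model_payload("titan-multimodal", "group-id-123", "connector-id-456"))
```

The IDs flow from one call to the next: the model group ID and connector ID returned by the first two requests become inputs to the third, and the model ID returned by the third is what the search queries later reference as model_id.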

Create an OpenSearch search pipeline and enable hybrid search

A search pipeline runs inside the OpenSearch Service domain and can have three types of processors: search request processor, search response processor, and search phase results processor. For our search pipeline, we use the search phase results processor, which runs between the search phases at the coordinating node level. It uses the normalization processor to normalize the scores from keyword and semantic search. For hybrid search, min-max normalization and the arithmetic_mean combination technique are preferred, but you can also try L2 normalization and the geometric_mean or harmonic_mean combination techniques depending on your data and use case.

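    import requests

    # Search pipeline definition: normalize each sub-query's scores with min-max,
    # then combine them with a weighted arithmetic mean.
    payload = {
        "phase_results_processors": [
            {
                "normalization-processor": {
                    "normalization": {"technique": "min_max"},
                    "combination": {
                        "technique": "arithmetic_mean",
                        "parameters": {
                            # Weights follow the order of the sub-queries in the
                            # hybrid query: keyword first, then semantic.
                            "weights": [
                                OPENSEARCH_KEYWORD_WEIGHT,
                                1 - OPENSEARCH_KEYWORD_WEIGHT,
                            ]
                        },
                    },
                }
            }
        ]
    }

    # Create (or update) the search pipeline on the domain.
    response = requests.put(
        url=f"{OPENSEARCH_ENDPOINT}/_search/pipeline/{OPENSEARCH_SEARCH_PIPELINE_NAME}",
        json=payload,
        headers={"Content-Type": "application/json"},
        auth=open_search_auth,
    )

Create an OpenSearch Service index for storing the multimodal embeddings and metadata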

For this post, we use the Amazon Berkeley Objects Dataset, which is a collection of 147,702 product listings with multilingual metadata and 398,212 unique catalog images. In this example, we only use Shoes and listings that are in en_US, as shown in the section Prepare listings dataset for Amazon OpenSearch ingestion of the notebook.

Use the following code to create an OpenSearch index to ingest the sample data:

    # Create a k-NN enabled index with a 1,024-dimension vector field for the embeddings.
    response = opensearch_client.indices.create(
        index=OPENSEARCH_INDEX_NAME,
        body={
            "settings": {
                "index.knn": True,
                "number_of_shards": 2,
            },
            "mappings": {
                "properties": {
                    "amazon_titan_multimodal_embeddings": {
                        "type": "knn_vector",
                        "dimension": 1024,
                        "method": {
                            "name": "hnsw",
                            "engine": "lucene",
                            "parameters": {},
                        },
                    }
                }
            },
        },
    )

Ingest sample data to the OpenSearch Service index

In this step, you select the relevant features used for generating embeddings. The images are converted to Base64. The combination of a selected feature and a Base64 image is used to generate multimodal embeddings, which are stored in the OpenSearch Service index along with the metadata. Listings are ingested in batches using the OpenSearch bulk operation.
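As a rough sketch of this step, the following helpers build the request body for the Titan Multimodal Embeddings G1 model and the newline-delimited JSON expected by the OpenSearch bulk endpoint. The function names, the `item_id` and `document` keys, and the sample listing are hypothetical and may not match the notebook; the block doesn't call Amazon Bedrock or OpenSearch. In practice, the request body would be passed to the Bedrock runtime `invoke_model` call, and the returned embedding would be added to each document before indexing.

```python
import base64
import json


def titan_request_body(text, image_bytes, dimensions=1024):
    """Build the JSON body for a Titan Multimodal Embeddings request."""
    body = {"embeddingConfig": {"outputEmbeddingLength": dimensions}}
    if text:
        body["inputText"] = text
    if image_bytes:
        # The model expects the image as a Base64-encoded string.
        body["inputImage"] = base64.b64encode(image_bytes).decode("utf-8")
    return json.dumps(body)


def bulk_lines(index_name, listings):
    """Build an NDJSON bulk payload: one action line, then one document line."""
    lines = []
    for listing in listings:
        lines.append(json.dumps({"index": {"_index": index_name, "_id": listing["item_id"]}}))
        lines.append(json.dumps(listing["document"]))
    return "\n".join(lines) + "\n"  # the bulk API requires a trailing newline


# Hypothetical listing; the embedding values are placeholders.
listing = {
    "item_id": "B000TEST01",
    "document": {
        "item_name": "Leather sandal",
        "amazon_titan_multimodal_embeddings": [0.01, 0.02, 0.03],
    },
}
print(titan_request_body("Leather sandal", b"fake-image-bytes"))
print(bulk_lines("retail-index", [listing]))
```

Batching several listings into one bulk payload reduces the number of round trips to the domain compared with indexing documents one at a time.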

Create OpenSearch Service query functions to test search functionality

With the sample data ingested, you can run queries against this data to test the hybrid search functionality. To facilitate this process, we created helper functions to perform the queries in the query workflow section of the notebook. In this section, you explore the specific parts of the functions that differentiate the search methods.

Keyword search

For keyword search, send the following payload to the OpenSearch domain search endpoint:

    # Keyword search: match the query text against the indexed text fields.
    payload = {
        "query": {
            "multi_match": {
                "query": query_text,
            }
        },
    }

Semantic search

For semantic search, you can send the text and image as part of the payload. The model_id in the request is the external embeddings model that you connected earlier. OpenSearch will invoke the model and convert the text and image to embeddings.

    # Semantic search: OpenSearch calls the connected model to embed the text and image.
    payload = {
        "query": {
            "neural": {
                "vector_embedding": {
                    "query_text": query_text,
                    "query_image": query_jpg_image,
                    "model_id": model_id,
                    "k": 5
                }
            }
        }
    }

Hybrid search

This method uses the OpenSearch search pipeline you created. The payload contains both the keyword query and the neural query.

    # Hybrid search: the sub-query order must match the weight order in the pipeline.
    payload = {
        "query": {
            "hybrid": {
                "queries": [
                    {
                        "multi_match": {
                            "query": query_text,
                        }
                    },
                    {
                        "neural": {
                            "vector_embedding": {
                                "query_text": query_text,
                                "query_image": query_jpg_image,
                                "model_id": model_id,
                                "k": 5
                            }
                        }
                    }
                ]
            }
        }
    }

Test search methods

To compare the multiple search methods, you can query the index using query_text, which provides specific information about the desired output, and query_jpg_image, which provides the overall abstraction of the desired style of the output.

    query_text = "leather sandals in Petal Blush"
    search_image_path = "16/16e48774.jpg"
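To show how these inputs reach the search pipeline, here is a minimal sketch of a helper that assembles a hybrid search request. The function name, endpoint, index name, pipeline name, and model ID are hypothetical and are not taken from the notebook. The sketch assumes the pipeline is selected per request through the `search_pipeline` query parameter, and it always sends two sub-queries so that their number matches the two weights configured in the pipeline.

```python
def build_hybrid_request(endpoint, index, pipeline, query_text, model_id,
                         image_b64=None, k=5):
    """Return the search URL and body for a hybrid (keyword + neural) query."""
    url = f"{endpoint.rstrip('/')}/{index}/_search?search_pipeline={pipeline}"
    neural = {"query_text": query_text, "model_id": model_id, "k": k}
    if image_b64:
        # The image is optional; when present it only affects the neural sub-query.
        neural["query_image"] = image_b64
    body = {
        "query": {
            "hybrid": {
                "queries": [
                    {"multi_match": {"query": query_text}},   # keyword sub-query
                    {"neural": {"vector_embedding": neural}},  # semantic sub-query
                ]
            }
        }
    }
    return url, body


# Hypothetical values for illustration only.
url, body = build_hybrid_request(
    endpoint="https://search-example.us-east-1.es.amazonaws.com",
    index="retail-index",
    pipeline="hybrid-pipeline",
    query_text="leather sandals in Petal Blush",
    model_id="model-id-789",
    image_b64="aGVsbG8=",
)
print(url)
print(body["query"]["hybrid"]["queries"][1]["neural"]["vector_embedding"]["k"])
```

Keeping the keyword sub-query first matters because the pipeline applies its weights by position.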

Keyword search

The following output lists the top three keyword search results. The keyword search successfully located leather sandals in the color Petal Blush, but it didn’t take the desired style into consideration.

Semantic search

Semantic search successfully located leather sandals and considered the desired style. However, the similarity to the provided image took precedence over the specific color provided in query_text.

Hybrid search

Hybrid search returned similar results to the semantic search because they use the same embeddings model. However, by combining the output of keyword and semantic searches, the ranking of the Petal Blush sandal that most closely matches query_jpg_image increases, moving it to the top of the results list.

Clean up

After you complete this walkthrough, clean up all the resources you created as part of this post. This is an important step to make sure you don’t incur any unexpected charges. If you used an existing OpenSearch Service domain, the Cleanup section of the notebook provides suggested cleanup actions: delete the index, undeploy the model, delete the model, delete the model group, and delete the Amazon Bedrock connector. If you created an OpenSearch Service domain exclusively for this exercise, you can bypass these actions and delete the domain.
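For reference, the cleanup actions listed above correspond to the following REST calls, shown here in the order given in this section. This is a sketch that only builds the method and path pairs; the function name and the IDs are hypothetical placeholders, and the notebook's own cleanup code may differ.

```python
def cleanup_requests(index_name, model_id, model_group_id, connector_id):
    """Return (HTTP method, path) pairs for cleanup, in dependency order."""
    return [
        ("DELETE", f"/{index_name}"),                                # delete the index
        ("POST", f"/_plugins/_ml/models/{model_id}/_undeploy"),      # undeploy the model
        ("DELETE", f"/_plugins/_ml/models/{model_id}"),              # delete the model
        ("DELETE", f"/_plugins/_ml/model_groups/{model_group_id}"),  # delete the model group
        ("DELETE", f"/_plugins/_ml/connectors/{connector_id}"),      # delete the connector
    ]


# Hypothetical identifiers for illustration only.
for method, path in cleanup_requests("retail-index", "model-id-789", "group-id-123", "connector-id-456"):
    print(method, path)
```

The order matters: a model must be undeployed before it can be deleted, and the model group and connector are removed only after the model that references them is gone.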

Conclusion

In this post, we explained how to implement multimodal hybrid search by combining keyword and semantic search capabilities using Amazon Bedrock and Amazon OpenSearch Service. We showcased a solution that uses Amazon Titan Multimodal Embeddings G1 to generate embeddings for text and images, enabling users to search using both modalities. The hybrid approach combines the strengths of keyword search and semantic search, delivering accurate and relevant results to customers.

We encourage you to test the notebook in your own account and get firsthand experience with hybrid search variations. In addition to the outputs shown in this post, we provide a few variations in the notebook. If you’re interested in using custom embeddings models in Amazon SageMaker AI instead, see Hybrid Search with Amazon OpenSearch Service. If you want a solution that offers semantic search only, see Build a contextual text and image search engine for product recommendations using Amazon Bedrock and Amazon OpenSearch Serverless and Build multimodal search with Amazon OpenSearch Service.

About the Authors

Renan Bertolazzi is an Enterprise Solutions Architect helping customers realize the potential of cloud computing on AWS. In this role, Renan is a technical leader advising executives and engineers on cloud solutions and strategies designed to innovate, simplify, and deliver results.

Birender Pal is a Senior Solutions Architect at AWS, where he works with strategic enterprise customers to design scalable, secure, and resilient cloud architectures. He supports digital transformation initiatives with a focus on cloud-native modernization, machine learning, and generative AI. Outside of work, Birender enjoys experimenting with recipes from around the world.

Sarath Krishnan is a Senior Solutions Architect with Amazon Web Services. He is passionate about enabling enterprise customers on their digital transformation journey. Sarath has extensive experience in architecting highly available, scalable, cost-effective, and resilient applications on the cloud. His areas of focus include DevOps, machine learning, MLOps, and generative AI.
