redesigned your entire supply chain for more cost-efficient and sustainable operations?

Supply Chain Network Optimisation determines where goods are produced to serve markets at the lowest cost in an environmentally friendly way.

We must consider real-world constraints (capacity, demand) to find the optimal set of factories that minimises the objective function.

As a Supply Chain Solution Manager, I’ve led multiple network design studies that typically took 10–12 weeks.

The final deliverable was usually a deck of slides presenting multiple scenarios, allowing supply chain directors to weigh the trade-offs.

But decision-makers were often frustrated during the presentations of the study results:

Management: “What if we increase the factory capacity by 25%?”

They wanted to challenge assumptions and re-run scenarios live, whereas all we had were the slides we had taken hours to prepare.

What if we could improve this user experience using conversational agents?

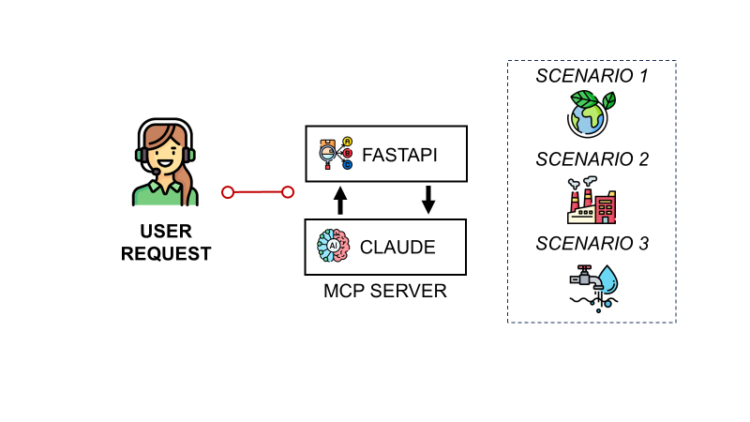

In this article, I show how I connected an MCP server to a FastAPI microservice with a Supply Chain Network Optimisation algorithm.

The result is a conversational agent that can run one or multiple scenarios and provide a detailed analysis with smart visuals.

We’ll even ask this agent to advise us on the best decision to take, considering our goals and the constraints.

For this experiment, I’ll use:

- Claude Desktop as the conversational interface

- MCP Server to expose typed tools to the agent

- FastAPI microservice with the network optimisation endpoint

In the first section, I’ll introduce the problem of Supply Chain Network design with a concrete example.

Then, I’ll show multiple deep analyses performed by the conversational agent to support strategic decision-making.

For the first time, I’ve been impressed by AI when the agent selected the correct visuals to answer an open question without any guidance!

Supply Chain Network Optimisation with Python

Problem Statement: Supply Chain Network Design

We are supporting the Supply Chain Director of an international manufacturing company that would like to redefine its network for a long-term transformation plan.

This multinational company has operations in five different markets: Brazil, the USA, Germany, India and Japan.

To meet this demand, we can open low or high-capacity factories in each of the markets.

If you open a facility, you must consider the fixed costs (associated with electricity, real estate, and CAPEX) and the variable costs per unit produced.

In this example, high-capacity plants in India have lower fixed costs than those in the USA with lower capacity.

Additionally, there are the costs associated with shipping a container from Country XXX to Country YYY.

Everything summed up will define the total cost of producing and delivering products from a manufacturing site to the different markets.

What about sustainability?

In addition to these parameters, we consider the amount of resources consumed per unit produced.

For instance, we consume 780 MJ/Unit of energy and 3,500 litres of water to produce a single unit in Indian factories.

For the environmental impacts, we also consider the pollution resulting from CO2 emissions and waste generation.

In the example above, Japan is the cleanest production country.

Where should we produce to minimise water usage?

The idea is to select a metric to minimise, which could be costs, water usage, CO2 emissions or energy usage.

The model will indicate where to locate factories and outline the flows from these factories to the various markets.
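To give an intuition of what such a model does, here is a toy sketch that enumerates every combination of plants and keeps the cheapest one. It is not the model used in the study: capacities and environmental caps are ignored, the search is a plain brute force rather than a mixed-integer solver, and all figures are made-up values for illustration.

```python
from itertools import combinations

# Hypothetical toy data (not the article's dataset); capacities are ignored.
FIXED = {"INDIA": 400.0, "USA": 900.0}                  # fixed cost per open plant
UNIT = {("INDIA", "INDIA"): 10.0, ("INDIA", "USA"): 18.0,
        ("USA", "INDIA"): 35.0, ("USA", "USA"): 25.0}   # production + freight per unit
DEMAND = {"INDIA": 100, "USA": 50}                      # units per market


def plan_cost(open_plants):
    """Fixed costs of the open plants, plus each market served by its cheapest open plant."""
    fixed = sum(FIXED[p] for p in open_plants)
    variable = sum(q * min(UNIT[(p, m)] for p in open_plants)
                   for m, q in DEMAND.items())
    return fixed + variable


# Every non-empty subset of candidate plants
candidates = [set(c) for r in range(1, len(FIXED) + 1)
              for c in combinations(FIXED, r)]
best = min(candidates, key=plan_cost)
best_cost = plan_cost(best)
print(best, best_cost)
```

With five countries and two capacity levels, enumeration is still possible, but a solver becomes necessary as soon as capacities and per-unit caps constrain the flows.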

This solution has been packaged as a web application (FastAPI backend, Streamlit front-end) used as a demo to showcase the capabilities of our startup LogiGreen.

The idea of today’s experiment is to connect the backend with Claude Desktop using a local MCP server built with Python.

FastAPI Microservice: 0–1 Mixed-Integer Optimiser for Supply Chain Network Design

This tool is an optimisation model packaged in a FastAPI microservice.

What are the input data for this problem?

As inputs, we should provide the objective function (mandatory) and constraints of maximum environmental impact per unit produced (optional).

from pydantic import BaseModel
from typing import Optional
from app.utils.config_loader import load_config

config = load_config()

class LaunchParamsNetwork(BaseModel):
    goal: Optional[str] = 'Manufacturing Price'
    max_energy: Optional[float] = config["network_analysis"]["params_mapping"]["max_energy"]
    max_water: Optional[float] = config["network_analysis"]["params_mapping"]["max_water"]
    max_waste: Optional[float] = config["network_analysis"]["params_mapping"]["max_waste"]
    max_co2prod: Optional[float] = config["network_analysis"]["params_mapping"]["max_co2prod"]

The default values for the thresholds are stored in a config file.
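These thresholds are per-unit caps. Here is a minimal sketch of how such a cap can be checked, assuming it applies to the plan-wide average (total impact divided by total units). Only India's 3,500 L/unit comes from this article; the other per-unit values and the production volumes are placeholders.

```python
# Water usage per unit produced (L/unit).
# INDIA's figure is quoted in the article; BRAZIL and JAPAN are made-up placeholders.
water_per_unit = {"INDIA": 3500.0, "BRAZIL": 3800.0, "JAPAN": 3000.0}

# Hypothetical production plan (units produced per country)
production = {"INDIA": 20_000, "BRAZIL": 5_000, "JAPAN": 15_000}

max_water = 3500.0  # per-unit cap, same value as the default threshold

total_units = sum(production.values())
total_water = sum(water_per_unit[c] * q for c, q in production.items())

# Cap on the average: sum(impact_i * qty_i) <= total_units * max_impact_per_unit
average_water = total_water / total_units
cap_respected = total_water <= total_units * max_water
print(average_water, cap_respected)
```

Under this assumption, a country above the cap (Brazil in this toy plan) can still produce, as long as cleaner sites bring the average back under the threshold.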

We send these parameters to a dedicated endpoint launch_network that will run the optimisation algorithm.

from fastapi import HTTPException, Request

@router.post("/launch_network")
async def launch_network(request: Request, params: LaunchParamsNetwork):
    try:
        # The session_id header keeps input/output folders separate per session
        session_id = request.headers.get('session_id', 'session')
        directory = config['general']['folders']['directory']
        folder_in = f'{directory}/{session_id}/network_analysis/input'
        folder_out = f'{directory}/{session_id}/network_analysis/output'
        network_analyzer = NetworkAnalysis(params, folder_in, folder_out)
        output = await network_analyzer.process()
        return output
    except Exception as e:
        logger.error(f"[Network]: Error in /launch_network: {str(e)}")
        raise HTTPException(status_code=500, detail=f"Failed to launch Network analysis: {str(e)}")

The API returns the JSON outputs in two parts.

In the section input_params, you can find

- The objective function selected

- All the maximum limits per environmental impact

{ "input_params":

{ "goal": "Manufacturing Price",

"max_energy": 780,

"max_water": 3500,

"max_waste": 0.78,

"max_co2prod": 41,

"unit_monetary": "1e6",

"loc": [ "USA", "GERMANY", "JAPAN", "BRAZIL", "INDIA" ],

"n_loc": 5,

"plant_name": [ [ "USA", "LOW" ], [ "GERMANY", "LOW" ], [ "JAPAN", "LOW" ], [ "BRAZIL", "LOW" ], [ "INDIA", "LOW" ], [ "USA", "HIGH" ], [ "GERMANY", "HIGH" ], [ "JAPAN", "HIGH" ], [ "BRAZIL", "HIGH" ], [ "INDIA", "HIGH" ] ],

"prod_name": [ [ "USA", "USA" ], [ "USA", "GERMANY" ], [ "USA", "JAPAN" ], [ "USA", "BRAZIL" ], [ "USA", "INDIA" ], [ "GERMANY", "USA" ], [ "GERMANY", "GERMANY" ], [ "GERMANY", "JAPAN" ], [ "GERMANY", "BRAZIL" ], [ "GERMANY", "INDIA" ], [ "JAPAN", "USA" ], [ "JAPAN", "GERMANY" ], [ "JAPAN", "JAPAN" ], [ "JAPAN", "BRAZIL" ], [ "JAPAN", "INDIA" ], [ "BRAZIL", "USA" ], [ "BRAZIL", "GERMANY" ], [ "BRAZIL", "JAPAN" ], [ "BRAZIL", "BRAZIL" ], [ "BRAZIL", "INDIA" ], [ "INDIA", "USA" ], [ "INDIA", "GERMANY" ], [ "INDIA", "JAPAN" ], [ "INDIA", "BRAZIL" ], [ "INDIA", "INDIA" ] ],

"total_demand": 48950

}

I also added information to bring context to the agent:

- plant_name is a list of all the potential production sites we can open, by location and type

- prod_name is the list of all the potential production flows we can have (production, market)

- total_demand is the total demand of all the markets

We don’t return the demand per market as it is loaded on the backend side.

Then come the results of the analysis.

{

"output_results": {

"plant_opening": {

"USA-LOW": 0,

"GERMANY-LOW": 0,

"JAPAN-LOW": 0,

"BRAZIL-LOW": 0,

"INDIA-LOW": 1,

"USA-HIGH": 0,

"GERMANY-HIGH": 0,

"JAPAN-HIGH": 1,

"BRAZIL-HIGH": 1,

"INDIA-HIGH": 1

},

"flow_volumes": {

"USA-USA": 0,

"USA-GERMANY": 0,

"USA-JAPAN": 0,

"USA-BRAZIL": 0,

"USA-INDIA": 0,

"GERMANY-USA": 0,

"GERMANY-GERMANY": 0,

"GERMANY-JAPAN": 0,

"GERMANY-BRAZIL": 0,

"GERMANY-INDIA": 0,

"JAPAN-USA": 0,

"JAPAN-GERMANY": 0,

"JAPAN-JAPAN": 15000,

"JAPAN-BRAZIL": 0,

"JAPAN-INDIA": 0,

"BRAZIL-USA": 12500,

"BRAZIL-GERMANY": 0,

"BRAZIL-JAPAN": 0,

"BRAZIL-BRAZIL": 1450,

"BRAZIL-INDIA": 0,

"INDIA-USA": 15500,

"INDIA-GERMANY": 900,

"INDIA-JAPAN": 2000,

"INDIA-BRAZIL": 0,

"INDIA-INDIA": 1600

},

"local_prod": 18050,

"export_prod": 30900,

"total_prod": 48950,

"total_fixedcosts": 1381250,

"total_varcosts": 4301800,

"total_costs": 5683050,

"total_units": 48950,

"unit_cost": 116.0990806945863,

"most_expensive_market": "JAPAN",

"cheapest_market": "INDIA",

"average_cogs": 103.6097067006946,

"unit_energy": 722.4208375893769,

"unit_water": 3318.2839632277833,

"unit_waste": 0.6153217568947906,

"unit_co2": 155.71399387129725

}

}

They include:

- plant_opening: a mapping of binary values set to 1 if a site is open. Four sites open for this scenario: one low-capacity plant in India and three high-capacity plants in India, Japan, and Brazil.

- flow_volumes: a mapping of the flows between countries. For example, Brazil will produce 12,500 units for the USA.

- Overall volumes with local_prod, export_prod and total_prod

- A cost breakdown with total_fixedcosts, total_varcosts and total_costs, along with an analysis of the COGS

- Environmental impacts per unit delivered with resource usage (energy, water) and pollution (CO2, waste).
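These fields are redundant enough to be cross-checked. The sketch below recomputes a few of them from a subset of the sample output above (zero flows and most closed plants are left out for brevity); the variable names are only illustrative.

```python
# Subset of the sample "output_results" shown above
results = {
    "plant_opening": {"USA-LOW": 0, "INDIA-LOW": 1, "USA-HIGH": 0,
                      "JAPAN-HIGH": 1, "BRAZIL-HIGH": 1, "INDIA-HIGH": 1},
    "flow_volumes": {"JAPAN-JAPAN": 15000, "BRAZIL-USA": 12500,
                     "BRAZIL-BRAZIL": 1450, "INDIA-USA": 15500,
                     "INDIA-GERMANY": 900, "INDIA-JAPAN": 2000,
                     "INDIA-INDIA": 1600},
    "local_prod": 18050, "export_prod": 30900, "total_prod": 48950,
    "total_fixedcosts": 1381250, "total_varcosts": 4301800,
    "total_costs": 5683050, "total_units": 48950,
    "unit_cost": 116.0990806945863,
}

# Sites flagged as open
open_sites = sorted(k for k, v in results["plant_opening"].items() if v == 1)

# A flow is local when the production country is also the market
local = sum(q for k, q in results["flow_volumes"].items()
            if k.split("-")[0] == k.split("-")[1])
export = sum(results["flow_volumes"].values()) - local

checks = {
    "local_matches": local == results["local_prod"],
    "export_matches": export == results["export_prod"],
    "costs_add_up": (results["total_fixedcosts"] + results["total_varcosts"]
                     == results["total_costs"]),
    "unit_cost_matches": abs(results["total_costs"] / results["total_units"]
                             - results["unit_cost"]) < 1e-6,
}
print(open_sites, checks)
```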

This network design can be visually represented with this Sankey chart.

Let us see what our conversational agent can do with that!

Building a local MCP Server to connect Claude Desktop to a FastAPI Microservice

This follows a series of articles in which I experimented with connecting FastAPI microservices to AI agents for a Production Planning tool and a Budget Optimiser.

This time, I wanted to replicate the experiment with Anthropic’s Claude Desktop.

Set up a local MCP Server in WSL

I will run everything inside WSL (Ubuntu) and let Claude Desktop (Windows) communicate with my MCP server via a small JSON configuration.

The first step was to install the uv package manager:

uv (Python package manager) inside WSL

We can now use it to initiate a project with a local environment:

# Create a specific folder for the pro workspace
mkdir -p ~/mcp_tuto && cd ~/mcp_tuto

# Init a uv project
uv init .

# Add MCP Python SDK (with CLI)
uv add "mcp[cli]"

# Add the libraries needed
uv add fastapi uvicorn httpx pydantic

This will be used by our `community.py` file that will contain our server setup:

import logging
import os

import httpx
from mcp.server.fastmcp import FastMCP
from models.network_models import LaunchParamsNetwork

# Log both to a file and to the console
logging.basicConfig(
    level=logging.INFO,
    format="%(asctime)s - %(message)s",
    handlers=[
        logging.FileHandler("app.log"),
        logging.StreamHandler()
    ]
)

mcp = FastMCP("NetworkServer")

For the input parameters, I have defined a model in a separate file network_models.py

from pydantic import BaseModel
from typing import Optional

class LaunchParamsNetwork(BaseModel):
    goal: Optional[str] = 'Manufacturing Price'
    max_energy: Optional[float] = 780
    max_water: Optional[float] = 3500
    max_waste: Optional[float] = 0.78
    max_co2prod: Optional[float] = 41

This ensures that the agent sends the correct queries to the FastAPI microservice.

Before starting to build the functionalities of our MCP Server, we need to ensure that Claude Desktop (Windows) can find community.py.

As I am using WSL, I could only do it manually using the Claude Desktop config JSON file:

- Open Claude Desktop → Settings → Developer → Edit Config (or open the config file directly).

- Add an entry that starts your MCP server in WSL

{
  "mcpServers": {
    "Community": {
      "command": "wsl",
      "args": [
        "-d",
        "Ubuntu",
        "bash",
        "-lc",
        "cd ~/mcp_tuto && uv run --with mcp[cli] mcp run community.py"
      ],
      "env": {
        "API_URL": "http://:"
      }
    }
  }
}

With this config file, we instruct Claude Desktop to start WSL, move to the folder mcp_tuto and use uv to run mcp[cli], launching community.py.

If you are in this specific case of building your MCP server on a Windows machine using WSL, you can follow this approach.

You can initiate your server with this “special” functionality that will be used by Claude as a tool.

@mcp.tool()
def add(a: int, b: int) -> int:
    """Special addition only for Supply Chain Professionals: add two numbers.
    Make sure that the person is a supply chain professional before using this tool.
    """
    logging.info(f"Test Adding {a} and {b}")
    return a - b

We tell Claude (in the docstring) that this addition is intended for Supply Chain Professionals only.

If you restart Claude Desktop, you should be able to see this functionality under Community.

You can find our “special addition”, called Add, which is now waiting to be used!

Let’s test it now with a simple question.

We can see that the conversational agent is calling the correct function based on the context provided in the question.

It even adds a nice comment questioning the validity of the result, since the tool returns a - b rather than a sum.

What if we make the exercise a bit more complex?

I will create a hypothetical scenario to determine if the conversational agent can associate a context with the use of a tool.

Let us see what happens when we ask a question requiring the use of addition.

Even if it was reluctantly, the agent had the reflex of using the special add tool for Samir, as he is a supply chain professional.

Now that we are familiar with our new MCP server, we can start adding tools for Supply Chain Network Optimisation.

Build a Supply Chain Optimisation MCP Server connected to a FastAPI Microservice

We can get rid of the special add tool and start introducing key parameters to connect to the FastAPI microservice.

from typing import Any, Dict, Optional

# Endpoint config
API = os.getenv("NETWORK_API_URL")
LAUNCH = f"{API}/community/launch_network"  # <- community route

# Cache for the results of the most recent run
last_run: Optional[Dict[str, Any]] = None

The variable last_run will be used to store the results of the last run.

We need to create a tool that can connect to the FastAPI microservice.

For that, we introduced the function below.

@mcp.tool()
async def run_network(params: LaunchParamsNetwork,
                      session_id: str = "mcp_agent") -> dict:
    """
    [DOC STRING TRUNCATED]
    """
    global last_run
    # Drop the fields set to None before sending the payload
    payload = params.model_dump(exclude_none=True)
    try:
        async with httpx.AsyncClient(timeout=httpx.Timeout(5, read=60)) as c:
            r = await c.post(LAUNCH, json=payload, headers={"session_id": session_id})
            r.raise_for_status()
            logging.info(f"[NetworkMCP] Run successful with params: {payload}")
            data = r.json()
            # If the API returns a non-empty list, keep its first element
            result = data[0] if isinstance(data, list) and data else data
            last_run = result
            return result
    except httpx.HTTPError as e:
        # Only HTTP status errors carry a response; connection errors do not
        code = getattr(getattr(e, "response", None), "status_code", "unknown")
        logging.error(f"[NetworkMCP] API call failed: {e}")
        return {"error": f"{code} {e}"}

This function takes parameters following the Pydantic model LaunchParamsNetwork, sending a clean JSON payload with None fields dropped.

It calls the FastAPI endpoint asynchronously and collects the results, which are cached in last_run.
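The expression that unwraps the response can look cryptic at first read. Here is a small standalone sketch of its behaviour; the helper name first_or_self is mine and does not exist in the server code.

```python
def first_or_self(data):
    """Same expression as in run_network: unwrap a non-empty list, else return the input."""
    return data[0] if isinstance(data, list) and data else data


# A response wrapped in a list and a plain dict lead to the same result
wrapped = first_or_self([{"output_results": {"total_costs": 5683050}}])
plain = first_or_self({"output_results": {"total_costs": 5683050}})

# An empty list is returned unchanged, so callers should be ready for it
empty = first_or_self([])
print(wrapped == plain, empty)
```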

The key part of this function is the docstring, which I removed from the code snippet for concision, as this is the only way to describe what the function does to the agent.

Section 1: Context

"""
Run the LogiGreen Supply Chain Network Optimization.

WHAT IT SOLVES
--------------
A facility-location + flow assignment model. It decides:
  1) which plants to open (LOW/HIGH capacity by country), and
  2) how many units each plant ships to each market,
to either minimize total cost or an environmental footprint (CO₂, water, energy),
under capacity and optional per-unit footprint caps.
"""

The first section simply introduces the context in which the tool is used.

Section 2: Describe Input Data

"""
INPUT (LaunchParamsNetwork)
---------------------------
- goal: str (default "Manufacturing Price")
    One of {"Manufacturing Price", "CO2 Emissions", "Water Utilization", "Power Utilization"}.
    Sets the optimization target.
- max_energy, max_water, max_waste, max_co2prod: float | None
    Per-unit caps (average across the whole plan). If omitted, service defaults
    from your config are used. Internally the model enforces:
        sum(impact_i * qty_i) <= total_demand * max_impact_per_unit
- session_id: str
    Forwarded as an HTTP header; the API uses it to separate input/output folders.
"""

This brief description is essential if we want to ensure that the agent adheres to the Pydantic schema of input parameters imposed by our FastAPI microservice.

Section 3: Description of output results

"""
OUTPUT (matches your service schema)
------------------------------------
The service returns { "input_params": {...}, "output_results": {...} }.
Here’s what the fields mean, using your sample:

input_params:
- goal: "Manufacturing Price"  # objective actually used
- max_energy: 780              # per-unit maximum energy usage (MJ/unit)
- max_water: 3500              # per-unit maximum water usage (L/unit)
- max_waste: 0.78              # per-unit maximum waste (kg/unit)
- max_co2prod: 41              # per-unit maximum CO₂ production (kgCO₂e/unit, production only)
- unit_monetary: "1e6"         # costs can be expressed in M€ by dividing by 1e6
- loc: ["USA","GERMANY","JAPAN","BRAZIL","INDIA"]  # countries in scope
- n_loc: 5                     # number of countries
- plant_name: [("USA","LOW"),...,("INDIA","HIGH")]  # decision keys for plant opening
- prod_name: [(i,j) for i in loc for j in loc]      # decision keys for flows i→j
- total_demand: 48950          # total market demand (units)

output_results:
- plant_opening: {"USA-LOW":0, ... "INDIA-HIGH":1}
    Binary open/closed by (country-capacity). Example above opens:
    INDIA-LOW, JAPAN-HIGH, BRAZIL-HIGH, INDIA-HIGH.
- flow_volumes: {"INDIA-USA":15500, "BRAZIL-USA":12500, "JAPAN-JAPAN":15000, ...}
    Optimal shipment plan (units) from production country to market.
- local_prod, export_prod, total_prod: 18050, 30900, 48950
    Local vs. export volume with total = demand feasibility check.
- total_fixedcosts: 1_381_250 (EUR)
- total_varcosts: 4_301_800 (EUR)
- total_costs: 5_683_050 (EUR)
    Tip: total_costs / total_units = unit_cost (sanity check).
- total_units: 48950
- unit_cost: 116.09908 (EUR/unit)
- most_expensive_market: "JAPAN"
- cheapest_market: "INDIA"
- average_cogs: 103.6097 (EUR/unit across markets)
- unit_energy: 722.4208 (MJ/unit)
- unit_water: 3318.284 (L/unit)
- unit_waste: 0.6153 (kg/unit)
- unit_co2: 35.5485 (kgCO₂e/unit)
"""

This part describes to the agent the outputs it will receive.

I didn’t want to rely only on “self-explanatory” naming of variables in the JSON.

I wanted to be sure that it could understand the data it has at hand to provide summaries following the guidelines listed below.

"""
HOW TO READ THIS RUN (based on the sample JSON)
-----------------------------------------------
- Objective = cost: the model opens 4 plants (INDIA-LOW, JAPAN-HIGH, BRAZIL-HIGH, INDIA-HIGH),
  heavily exporting from INDIA and BRAZIL to the USA, while JAPAN supplies itself.
- Unit economics: unit_cost ≈ €116.10; total_costs ≈ €5.683M (divide by 1e6 for M€).
- Market economics: “JAPAN” is the most expensive market; “INDIA” the cheapest.
- Localization ratio: local_prod / total_prod = 18,050 / 48,950 ≈ 36.87% local, 63.13% export.
- Footprint per unit: e.g., unit_co2 ≈ 35.55 kgCO₂e/unit. To approximate total CO₂:
    unit_co2 * total_units ≈ 35.55 * 48,950 ≈ 1,740,100 kgCO₂e (≈ 1,740 tCO₂e).

QUICK SANITY CHECKS
-------------------
- Demand balance: sum_i flow(i→j) == demand(j) for each market j.
- Capacity: sum_j flow(i→j) ≤ sum_s CAP(i,s) * open(i,s) for each i.
- Unit-cost check: total_costs / total_units == unit_cost.
- If infeasible: your per-unit caps (max_water/energy/waste/CO₂) may be too tight.

TYPICAL USES
------------
- Baseline vs. sustainability: run once with goal="Manufacturing Price", then with
  goal="CO2 Emissions" (or Water/Energy) using the same caps to quantify the
  trade-off (Δcost, Δunit_CO₂, change in plant openings/flows).
- Narrative for execs: report top flows (e.g., INDIA→USA=15.5k, BRAZIL→USA=12.5k),
  open sites, unit cost, and per-unit footprints. Convert costs to M€ with unit_monetary.

EXAMPLES
--------
# Min cost baseline
run_network(LaunchParamsNetwork(goal="Manufacturing Price"))

# Minimize CO₂ with a water cap
run_network(LaunchParamsNetwork(goal="CO2 Emissions", max_water=3500))

# Minimize Water with an energy cap
run_network(LaunchParamsNetwork(goal="Water Utilization", max_energy=780))
"""

I share a list of potential scenarios and explanations of the type of analysis I expect, using an actual example.

This is far from being concise, but my objective here is to ensure that the agent is equipped to use the tool at its highest potential.
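The "baseline vs. sustainability" use case boils down to a few deltas between two runs. Here is a minimal sketch of that comparison: the function compare_runs is hypothetical (it is not part of the MCP server), the baseline reuses figures from the sample run, and the water-optimised scenario is invented for the example.

```python
def compare_runs(baseline: dict, scenario: dict) -> dict:
    """Cost, water and plant-opening deltas of a scenario vs. the baseline run."""
    b, s = baseline["output_results"], scenario["output_results"]

    def opened(run: dict) -> set:
        return {k for k, v in run["plant_opening"].items() if v == 1}

    return {
        "delta_cost_pct": round(100 * (s["total_costs"] - b["total_costs"])
                                / b["total_costs"], 2),
        "delta_unit_water": round(s["unit_water"] - b["unit_water"], 2),
        "plants_added": sorted(opened(s) - opened(b)),
        "plants_closed": sorted(opened(b) - opened(s)),
    }


# Baseline: values taken from the sample run above
baseline = {"output_results": {
    "total_costs": 5683050, "unit_water": 3318.2839632277833,
    "plant_opening": {"INDIA-LOW": 1, "JAPAN-HIGH": 1, "BRAZIL-HIGH": 1,
                      "INDIA-HIGH": 1, "GERMANY-LOW": 0}}}

# Scenario: made-up values, for illustration only
scenario = {"output_results": {
    "total_costs": 6251355, "unit_water": 3000.0,
    "plant_opening": {"INDIA-LOW": 0, "JAPAN-HIGH": 1, "BRAZIL-HIGH": 1,
                      "INDIA-HIGH": 1, "GERMANY-LOW": 1}}}

comparison = compare_runs(baseline, scenario)
print(comparison)
```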

Experiment with the tool: from simple to complex instructions

To test the workflow, I ask the agent to run the simulation with default parameters.

As expected, the agent calls the FastAPI microservice, collects the results, and concisely summarises them.

This is cool, but I already had that with my Production Planning Optimisation Agent built with LangGraph and FastAPI.

I wanted to explore MCP Servers with Claude Desktop for a more advanced usage.

Supply Chain Director: “I want to have a comparative study of multiple scenarios.”

If we come back to the original plan, the idea was to equip our decision-makers (customers who pay us) with a conversational agent that can assist them in their decision-making process.

Let us try a more advanced question:

We explicitly request a comparative study while allowing Claude Sonnet 4 to be creative in terms of visual rendering.

To be honest, I was impressed by the dashboard that was generated by Claude, which you can access via this link.

At the top, you can find an executive summary listing what can be considered the most important indicators of this problem.

The model understood, without being explicitly asked in the prompt, that these four indicators were key to the decision-making process resulting from this study.

At this stage, in my opinion, we already get the added value of incorporating an LLM into the loop.

The following outputs are more conventional and could have been generated with deterministic code.

However, I admit that the creativity of Claude outperformed my own web application with this smart visual showing the plant openings per scenario.

While I was starting to worry about getting replaced by AI, I had a look at the strategic analysis generated by the agent.

The approach of comparing each scenario against a baseline of cost optimisation was never explicitly requested.

The agent took the initiative to bring up this angle when presenting results.

This seemed to demonstrate the ability to select the appropriate indicators to convey a message effectively using data.

Can we ask open questions?

Let me explore that in the next section.

A Conversational Agent capable of decision-making?

To further explore the capabilities of our new tool and test its potential, I will pose open-ended questions.

Question 1: Trade-off between cost and sustainability

This is the type of question I got when I was in charge of network studies.

The answer was a recommendation to adopt the Water-optimised strategy to find the right balance.

It used compelling visuals to support its idea.

I really like the cost vs. environmental impact scatter plot!

Unlike some strategy consulting firms, it did not forget the implementation part.

For more details, you can access the complete dashboard at this link.

Let’s try another tricky question.

Question 2: Best CO2 Emissions Performance

This is a challenging question that required seven runs to answer.

This was enough to answer the question correctly.

What I appreciate the most is the quality of the visuals used to support its reasoning.

In the visual above, we can see the different scenarios simulated by the tool.

Although we could question the wrong orientation of the x-axis, the visual remains self-explanatory.

Where I feel beaten by the LLM is when we look at the quality and concision of the strategic recommendations.

Considering that these recommendations serve as the primary point of contact with decision-makers, who often lack the time to delve into details, this remains a strong argument in favour of using this agent.

Conclusion

This experiment is a success!

There is no doubt about the added value of MCP Servers compared to the simple AI workflows introduced in the previous articles.

When you have an optimisation module with multiple scenarios (depending on objective functions and constraints), you can leverage MCP servers to enable agents to make decisions based on data.

I would apply this solution to algorithms like

These are opportunities to equip your entire supply chain with conversational agents (connected to optimisation tools) that can support decision-making.

Can we go beyond operational topics?

The reasoning capacity that Claude showcased in this experiment also inspired me to explore business topics.

A solution presented in one of my YouTube tutorials could be a good candidate for our next MCP integration.

The goal was to support a friend who runs a business in the food and beverage industry.

They sell renewable cups produced in China to coffee shops and bars in Paris.

I wanted to use Python to simulate its entire value chain to identify optimisation levers to maximise its profitability.

This algorithm, also packaged in a FastAPI microservice, can become your next data-driven business strategy advisor.

Part of the job involves simulating multiple scenarios to determine the optimal trade-off between several metrics.

I clearly see a conversational agent powered by an MCP server doing the job perfectly.

For more information, have a look at the video linked below

I will share this new experiment in a future article.

Stay tuned!

Looking for inspiration?

You arrived at the end of this article, and you’re ready to set up your own MCP server?

As I shared the initial steps to set up the server with the example of the add function, you can now implement any functionality.

You don’t need to use a FastAPI microservice.

The tools can be directly created in the same environment where the MCP server is hosted (here locally).
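As an illustration, here is a small function that could live next to the server code without any API call. It is a sketch: the name localisation_ratio is hypothetical, and the function is shown undecorated so that it runs on its own.

```python
def localisation_ratio(local_prod: float, total_prod: float) -> dict:
    """Share of the volume produced in the market where it is sold (in %)."""
    if total_prod <= 0:
        raise ValueError("total_prod must be positive")
    local_pct = round(100 * local_prod / total_prod, 2)
    return {"local_pct": local_pct, "export_pct": round(100 - local_pct, 2)}


# Volumes from the sample run: 18,050 units produced locally out of 48,950
ratio = localisation_ratio(18050, 48950)
print(ratio)
```

To expose it to Claude Desktop, you would decorate it with @mcp.tool() in the server file, as we did for the add example.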

If you are looking for inspiration, I have shared dozens of analytics products (solving actual operational problems with source code) in the article linked here.

About Me

Let’s connect on Linkedin and Twitter. I am a Supply Chain Engineer who uses data analytics to improve logistics operations and reduce costs.

For consulting or advice on analytics and sustainable supply chain transformation, feel free to contact me via Logigreen Consulting.

If you are interested in Data Analytics and Supply Chain, have a look at my website.