The rise of AI has opened new avenues for enhancing customer experiences across multiple channels. Technologies like natural language understanding (NLU) are used to discern customer intents, which enables efficient self-service actions. Automatic speech recognition (ASR) translates spoken words into text, enabling seamless voice interactions. With Amazon Lex bots, businesses can use conversational AI to bring these capabilities into their call centers. Amazon Lex uses ASR and NLU to understand customer needs and guide customers through their journey. These AI technologies have significantly reduced agent handle times, increased Net Promoter Scores (NPS), and streamlined self-service tasks, such as appointment scheduling.

The advent of generative AI further expands the potential to enhance omnichannel customer experiences. However, concerns about security, compliance, and AI hallucinations often deter businesses from exposing customers directly to large language models (LLMs) through their omnichannel solutions. This is where the integration of Amazon Lex and Amazon Bedrock becomes invaluable. In this setup, Amazon Lex serves as the initial touchpoint, managing intent classification, slot collection, and fulfillment. Meanwhile, Amazon Bedrock acts as a secondary validation layer, intervening when Amazon Lex is uncertain about what the customer said.

In this post, we demonstrate how to integrate LLMs into your omnichannel experience using Amazon Lex and Amazon Bedrock.

Enhancing customer interactions with LLMs

The following are three scenarios that illustrate how LLMs can enhance customer interactions:

- Intent classification – These scenarios occur when a customer clearly articulates their intent, but a lack of utterance training data causes traditional models to perform poorly. For example, a customer might call in and say, “My basement is flooded, there’s at least a foot of water, and I don’t know what to do.” Traditional NLU models might lack the training data to handle this out-of-band response, because they’re typically trained on sample utterances like “I need to make a claim,” “I have a flood claim,” or “Open claim,” which are mapped to a hypothetical StartClaim intent. However, an LLM that is given the context of each intent, including a description and sample utterances, can accurately determine that the customer is dealing with a flooded basement and wants to start a claim.

- Assisted slot resolution (built-in) and custom slot assistance (custom) – These scenarios occur when a customer gives an out-of-band response during slot collection. For select built-in slot types, such as AMAZON.Date, AMAZON.Country, and AMAZON.Confirmation, Amazon Lex currently has a built-in capability to handle slot resolution. For custom slot types, you would need to implement custom logic using AWS Lambda for slot resolution and additional validation. This solution handles custom slot resolution by using LLMs to clarify these inputs and map them to the correct slot values, for example, interpreting “Toyota Tundra” as “truck” or “the whole dang top of my house is gone” as “roof.” This lets you use generative AI to validate both your built-in slots and your custom slots.

- Background noise mitigation – Many customers can’t control the background noise when calling into a call center. This noise might include a loud TV, a side conversation, or non-human sounds being transcribed as voice (for example, a car passes by and is transcribed as “uhhh”). In such cases, the NLU model, depending on its training data, might misclassify the caller’s intent or require the caller to repeat themselves. With an LLM, however, you can provide the transcript with appropriate context so the model can distinguish the noise from the customer’s actual statement. For example, if a TV show is playing in the background and the customer says “my car” when asked about their policy, the transcription might read “Tune in this evening for my car.” The LLM can ignore the irrelevant portion of the transcription and focus on the relevant part, “my car,” to accurately understand the customer’s intent.
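To make the intent classification scenario more concrete, the following is a minimal Python sketch of how a prompt could give the LLM each intent's description and sample utterances, and how the model's reply could be checked against the bot's known intent names. The `IntentSpec` type, the `NO_MATCH` sentinel, and the prompt wording are illustrative assumptions, not the prompts published in the solution's GitHub repo.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class IntentSpec:
    """Hypothetical description of one Amazon Lex intent, used only to build the prompt."""
    name: str
    description: str
    sample_utterances: list[str] = field(default_factory=list)


NO_MATCH = "NO_MATCH"  # hypothetical sentinel the model is told to return when nothing fits


def build_intent_prompt(utterance: str, intents: list[IntentSpec]) -> str:
    """Build a prompt that restricts the model to the bot's own intents."""
    lines = [
        "You classify customer messages for an insurance call center.",
        "The transcript may contain background noise or side conversations; ignore them.",
        f"Reply with exactly one intent name from the list below, or {NO_MATCH} if none applies.",
        "",
    ]
    for spec in intents:
        examples = "; ".join(spec.sample_utterances)
        lines.append(f"- {spec.name}: {spec.description} Examples: {examples}")
    lines += ["", f"Customer message: {utterance}", "Intent:"]
    return "\n".join(lines)


def parse_intent_reply(reply: str, intents: list[IntentSpec]) -> Optional[str]:
    """Accept the reply only if it names a known intent; otherwise return None."""
    candidate = reply.strip().strip(".").strip()
    known = {spec.name for spec in intents}
    return candidate if candidate in known else None
```

With this design, a reply of `NO_MATCH`, or any name outside the catalog, comes back as `None`, which the bot can treat as a signal to re-prompt instead of trusting an unexpected model answer.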

As these scenarios show, the LLM is not controlling the conversation. Instead, it operates within the boundaries defined by the intents, intent descriptions, slots, sample slot values, and sample utterances from Amazon Lex. This approach helps guide the customer along the correct path, reducing the risk of hallucination and of manipulation of the customer-facing application. This approach also reduces cost, because NLU is used whenever possible and the LLM acts only as a secondary check before the customer is re-prompted.
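The following Python sketch illustrates one way to keep the LLM inside those boundaries during slot resolution: the prompt lists the slot's allowed values, and any reply outside that list is discarded so the bot re-prompts instead of accepting an invented value. The slot values, the `NONE` convention, and the prompt wording are assumptions for illustration.

```python
from typing import Optional


def build_slot_prompt(question: str, answer: str, slot_name: str,
                      allowed_values: list[str]) -> str:
    """Ask the model to map a free-form answer onto one of the slot's allowed values."""
    options = ", ".join(allowed_values)
    return (
        f"The bot asked: \"{question}\"\n"
        f"The customer answered: \"{answer}\"\n"
        "The answer may include background noise or unrelated speech; ignore it.\n"
        f"Map the answer to exactly one value for the slot {slot_name}: {options}.\n"
        "If no value clearly applies, reply NONE. Reply with the value only."
    )


def resolve_slot(reply: str, allowed_values: list[str]) -> Optional[str]:
    """Return the canonical allowed value named in the reply, or None to re-prompt."""
    normalized = reply.strip().strip(".").lower()
    for value in allowed_values:
        if normalized == value.lower():
            return value
    # Anything outside the allowed list (including NONE) is rejected.
    return None
```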

You can further enhance this AI-driven experience by integrating it with your contact center solution, such as Amazon Connect. By combining the capabilities of Amazon Lex, Amazon Bedrock, and Amazon Connect, you can deliver a seamless and intelligent customer experience across your channels.

When customers reach out, whether through voice or chat, this integrated solution provides an AI-driven interaction:

- Amazon Connect manages the initial customer contact, handling call routing and channel selection.

- Amazon Lex processes the customer’s input, using NLU to identify the intent and extract relevant information.

- When Amazon Lex might not fully understand the customer’s intent, or when a more nuanced interpretation is needed, LLMs in Amazon Bedrock can be invoked to provide deeper analysis.

- The combined results from Amazon Lex and Amazon Bedrock guide the conversation flow in Amazon Connect, determining whether to provide automated responses, request more information, or route the customer to a human agent.
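The decision described in the last item could be expressed as a small function like the following Python sketch. It trusts the Amazon Lex NLU result first, falls back to the LLM result, and escalates to an agent after repeated failures. The confidence threshold, the retry limit, and the three outcome names are hypothetical values chosen for illustration; in Amazon Connect, the routing itself is configured in flows.

```python
from enum import Enum
from typing import Optional


class NextStep(Enum):
    AUTOMATE = "automate"   # continue the intent with automated responses
    REPROMPT = "reprompt"   # ask the customer for more information
    AGENT = "agent"         # route the contact to a human agent


NLU_CONFIDENCE_THRESHOLD = 0.8  # hypothetical cut-off
MAX_REPROMPTS = 2               # hypothetical retry budget


def choose_next_step(lex_intent: Optional[str], nlu_confidence: float,
                     llm_intent: Optional[str], reprompt_count: int) -> NextStep:
    """Prefer the NLU result, use the LLM as a second check, then escalate."""
    if (lex_intent and lex_intent != "FallbackIntent"
            and nlu_confidence >= NLU_CONFIDENCE_THRESHOLD):
        return NextStep.AUTOMATE
    if llm_intent:
        return NextStep.AUTOMATE
    if reprompt_count < MAX_REPROMPTS:
        return NextStep.REPROMPT
    return NextStep.AGENT
```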

Solution overview

In this solution, Amazon Lex connects to Amazon Bedrock through Lambda and invokes an LLM of your choice on Amazon Bedrock whenever help with intent classification or slot resolution is needed during the conversation. For instance, if an ElicitIntent call defaults to the FallbackIntent, the Lambda function runs and has Amazon Bedrock determine whether the user used out-of-band phrases that should be mapped to an existing intent. We can also augment the prompts sent to the model for intent classification and slot resolution with business context to yield more accurate results. Example prompts for intent classification and slot resolution are available in the GitHub repo.
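As a rough illustration of the Lambda-to-Amazon Bedrock call, the following Python sketch sends a prompt through the Amazon Bedrock Runtime Converse API and supplies business context as a system prompt. The business context text, the model ID, and the inference settings are assumptions; the client is passed in as a parameter so the function can be exercised without AWS credentials.

```python
from typing import Any

# Hypothetical business context sent with every classification request.
BUSINESS_CONTEXT = (
    "You support a home and auto insurance company. Customers call to report "
    "first notice of loss (FNOL) claims."
)


def ask_model(client: Any, model_id: str, prompt: str,
              business_context: str = BUSINESS_CONTEXT) -> str:
    """Send one prompt through the Bedrock Converse API and return the text reply.

    In Lambda, `client` would be boto3.client("bedrock-runtime").
    """
    response = client.converse(
        modelId=model_id,
        system=[{"text": business_context}],
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": 50, "temperature": 0.0},
    )
    # The Converse API returns the assistant message under output.message.content.
    parts = response["output"]["message"]["content"]
    return "".join(part.get("text", "") for part in parts).strip()

# Example (requires AWS credentials and access to the chosen model):
#   import boto3
#   client = boto3.client("bedrock-runtime", region_name="us-east-1")
#   print(ask_model(client, "<model ID selected for your stack>", "Which intent fits ...?"))
```

A temperature of 0 is chosen here because classification benefits from repeatable answers; a short `maxTokens` limit also keeps the reply to a single label.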

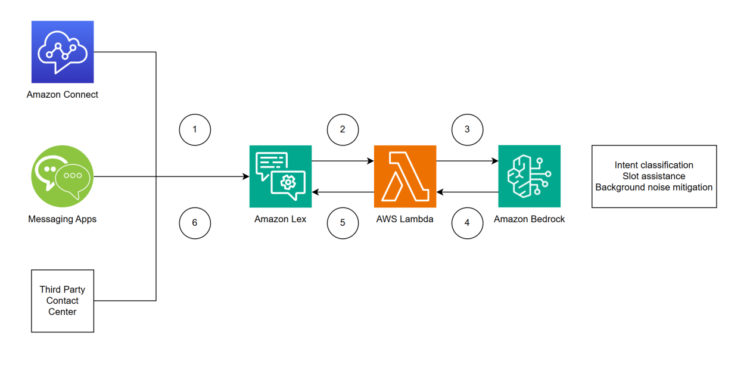

The next diagram illustrates the answer structure:

The workflow consists of the following steps:

- Messages are sent to the omnichannel Amazon Lex bot through Amazon Connect (text and voice), messaging apps (text), and third-party contact centers (text and voice). Amazon Lex NLU maps user utterances to specific intents.

- The Lambda function is invoked at the points in the conversation where Amazon Lex NLU didn’t identify the user utterance, such as during the fallback intent or during slot filling.

- Lambda calls the foundation model (FM) selected in the AWS CloudFormation template through Amazon Bedrock to identify the intent, identify the slot, or determine whether the transcribed message contains background noise.

- Amazon Bedrock returns the identified intent or slot, or responds that it can’t classify the utterance as a related intent or slot.

- Lambda sets the state of Amazon Lex to either move forward with the selected intent or re-prompt the user for input.

- Amazon Lex continues the conversation by either re-prompting the user or continuing to fulfill the intent.
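The Lambda portion of this workflow (run on fallback, ask the model, then move forward or re-prompt) could be sketched as a handler like the following. It reads the Amazon Lex V2 Lambda input event and returns either a `Delegate` dialog action for the identified intent or an `ElicitIntent` action that re-prompts the user. The `classify` callable (for example, a function that calls a model on Amazon Bedrock), the re-prompt text, and the choice to handle only `FallbackIntent` are simplifying assumptions; the solution's actual Lambda function also handles slot resolution.

```python
from typing import Any, Callable, Optional

# Hypothetical re-prompt message.
REPROMPT_TEXT = "Sorry, I didn't catch that. How can I help you with your claim today?"


def delegate_to_intent(intent_name: str) -> dict:
    """Lex V2 response that switches to the identified intent and lets Lex continue."""
    return {
        "sessionState": {
            "dialogAction": {"type": "Delegate"},
            "intent": {"name": intent_name, "slots": {}, "state": "InProgress"},
        }
    }


def elicit_intent(message: str) -> dict:
    """Lex V2 response that re-prompts the user for a new utterance."""
    return {
        "sessionState": {"dialogAction": {"type": "ElicitIntent"}},
        "messages": [{"contentType": "PlainText", "content": message}],
    }


def make_handler(classify: Callable[[str], Optional[str]]) -> Callable[[dict, Any], dict]:
    """Build a Lambda handler; `classify` maps a transcript to an intent name or None."""
    def handler(event: dict, context: Any) -> dict:
        intent = event["sessionState"]["intent"]
        transcript = event.get("inputTranscript", "")
        if intent["name"] != "FallbackIntent":
            # Amazon Lex NLU already recognized the intent, so let Lex carry on.
            return {"sessionState": {"dialogAction": {"type": "Delegate"}, "intent": intent}}
        llm_intent = classify(transcript)
        if llm_intent:
            return delegate_to_intent(llm_intent)
        return elicit_intent(REPROMPT_TEXT)
    return handler
```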

Prerequisites

You should have the following prerequisites:

Deploy the omnichannel Amazon Lex bot

To deploy this solution, complete the following steps:

- Choose Launch Stack to launch a CloudFormation stack in us-east-1.

- For Stack name, enter a name for your stack. This post uses the name FNOLBot.

- In the Parameters section, select the model you want to use.

- Review the IAM resource creation and choose Create stack.

After a few minutes, your stack should be complete. The core resources are as follows:

- Amazon Lex bot – FNOLBot

- Lambda function – ai-assist-lambda-{Stack-Name}

- IAM roles – {Stack-Name}-AIAssistLambdaRole and {Stack-Name}-BotRuntimeRole
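If you prefer to script the deployment instead of using the console, a stack could also be created with the AWS SDK for Python (Boto3), as in the following sketch. The template URL and the `ModelId` parameter key are placeholders, because the real template location and parameter names come from the Launch Stack link and the template itself. Both IAM capabilities are acknowledged on the assumption that the template creates the named IAM roles listed above.

```python
from typing import Any

# Placeholders (assumptions): replace with the template URL behind the Launch Stack
# link and the template's actual parameter key for the model selection.
TEMPLATE_URL = "https://example.com/path/to/template.yaml"
MODEL_PARAMETER_KEY = "ModelId"


def create_bot_stack(cfn_client: Any, stack_name: str, model_id: str) -> str:
    """Create the stack, wait for completion, and return the stack ID."""
    response = cfn_client.create_stack(
        StackName=stack_name,
        TemplateURL=TEMPLATE_URL,
        Parameters=[{"ParameterKey": MODEL_PARAMETER_KEY, "ParameterValue": model_id}],
        # The stack creates IAM roles, which CloudFormation requires you to acknowledge.
        Capabilities=["CAPABILITY_IAM", "CAPABILITY_NAMED_IAM"],
    )
    cfn_client.get_waiter("stack_create_complete").wait(StackName=stack_name)
    return response["StackId"]

# Example:
#   import boto3
#   cfn = boto3.client("cloudformation", region_name="us-east-1")
#   create_bot_stack(cfn, "FNOLBot", "<model ID offered by the template>")
```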

Test the omnichannel bot

To test the bot, navigate to FNOLBot on the Amazon Lex console and open a test window. For more details, see Testing a bot using the console.

Intent classification

Let’s test how, instead of saying “I want to make a claim,” the customer can ask more complex questions:

- In the test window, enter “My neighbor’s tree fell on my garage. What steps should I take with my insurance company?”

- Choose Inspect.

In the response, the intent has been identified as GatherFNOLInfo.
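The same check can be scripted with the Amazon Lex V2 runtime API. The following Python sketch sends one utterance with `recognize_text` and reads the resolved intent name from the returned session state. The bot ID, alias ID, and session ID are placeholders for your own deployment; `TSTALIASID` is the built-in test alias that points at a bot's draft version.

```python
from typing import Any, Optional


def detect_intent(lex_client: Any, bot_id: str, alias_id: str, session_id: str,
                  text: str, locale_id: str = "en_US") -> Optional[str]:
    """Send one utterance to an Amazon Lex V2 bot and return the intent it resolved."""
    response = lex_client.recognize_text(
        botId=bot_id,
        botAliasId=alias_id,
        localeId=locale_id,
        sessionId=session_id,
        text=text,
    )
    intent = response.get("sessionState", {}).get("intent")
    return intent["name"] if intent else None

# Example (placeholder IDs):
#   import boto3
#   lex = boto3.client("lexv2-runtime", region_name="us-east-1")
#   print(detect_intent(lex, "YOUR_BOT_ID", "TSTALIASID", "test-session-1",
#                       "My neighbor's tree fell on my garage. "
#                       "What steps should I take with my insurance company?"))
```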

Background noise mitigation with intent classification

Let’s simulate making a request with background noise:

- Refresh the bot by choosing the refresh icon.

- In the test window, enter “Hi yes I’m calling about yeah yeah one minute um um I need to make a claim.”

- Choose Inspect.

In the response, the intent has been identified as GatherFNOLInfo.

Slot assistance

Let’s test how, instead of saying explicit slot values, the customer can rely on generative AI to help fill the slots:

- Refresh the bot by choosing the refresh icon.

- Enter “I need to make a claim.”

The Amazon Lex bot will then ask “What portion of the house was damaged?”

- Enter “the whole dang top of my house was gone.”

The bot will then ask “Please describe any injuries that occurred during the incident.”

- Enter “I got a pretty bad cut from the shingles.”

- Choose Inspect.

You will notice that the Damage slot has been filled with “roof” and the PersonalInjury slot has been filled with “laceration.”
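To script this multi-turn test, the following Python sketch sends each turn with the same session ID and then reads the slot values from the final session state. In Amazon Lex V2, a filled slot is returned as an object whose `value` contains `originalValue` and `interpretedValue`, and an unfilled slot is returned as null; the sketch reports `interpretedValue`. The bot ID, alias ID, and session ID are placeholders.

```python
from typing import Any, Optional


def run_conversation(lex_client: Any, bot_id: str, alias_id: str, session_id: str,
                     turns: list[str], locale_id: str = "en_US") -> dict[str, Optional[str]]:
    """Send each turn in one session and return the interpreted slot values at the end."""
    response: dict = {}
    for text in turns:
        response = lex_client.recognize_text(
            botId=bot_id, botAliasId=alias_id, localeId=locale_id,
            sessionId=session_id, text=text,
        )
    slots = response.get("sessionState", {}).get("intent", {}).get("slots") or {}
    # Unfilled slots come back as None; filled slots carry value.interpretedValue.
    return {name: (slot or {}).get("value", {}).get("interpretedValue")
            for name, slot in slots.items()}

# Example (placeholder IDs):
#   import boto3
#   lex = boto3.client("lexv2-runtime", region_name="us-east-1")
#   turns = ["I need to make a claim",
#            "the whole dang top of my house was gone",
#            "I got a pretty bad cut from the shingles"]
#   print(run_conversation(lex, "YOUR_BOT_ID", "TSTALIASID", "slot-test-1", turns))
```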

Background noise mitigation with slot assistance

We now simulate how the solution handles background noise that ASR transcribes along with the caller’s speech. In the first scenario, the user is talking with other people while speaking to the Amazon Lex bot. In the second scenario, a TV in the background is loud enough that ASR transcribes it.

- Refresh the bot by choosing the refresh icon.

- Enter “I need to make a claim.”

The Amazon Lex bot will then ask “What portion of the house was damaged?”

- Enter “yeah i really need that soon um the roof was damaged.”

The bot will then ask “Please describe any injuries that occurred during the incident.”

- Enter “tonight on the nightly news reporters are on the scene um i got a pretty bad cut.”

- Choose Inspect.

You will notice that the Damage slot has been filled with “roof” and the PersonalInjury slot has been filled with “laceration.”

Clean up

To avoid incurring additional costs, delete the CloudFormation stacks you deployed.
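The cleanup can also be scripted with Boto3, as in the following sketch, which deletes a stack by name and waits for the deletion to finish. The default of `FNOLBot` matches the stack name used in this post; pass your own name if it differs.

```python
from typing import Any


def delete_bot_stack(cfn_client: Any, stack_name: str = "FNOLBot") -> None:
    """Delete the CloudFormation stack and block until the deletion completes."""
    cfn_client.delete_stack(StackName=stack_name)
    cfn_client.get_waiter("stack_delete_complete").wait(StackName=stack_name)

# Example:
#   import boto3
#   delete_bot_stack(boto3.client("cloudformation", region_name="us-east-1"))
```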

Conclusion

In this post, we showed you how to set up Amazon Lex for an omnichannel chatbot experience, with Amazon Bedrock as your secondary validation layer. This lets your customers give out-of-band responses at both the intent and slot collection levels without being re-prompted, resulting in a seamless customer experience. As we demonstrated, whether the user gives a rich description of their intent and slot values or uses phrases outside the Amazon Lex NLU training data, the LLM was able to identify the correct intent and slot values.

If you have an existing Amazon Lex bot deployed, you can edit the Lambda code to further enhance the bot. Try out the solution from the CloudFormation stack or the code in the GitHub repo, and let us know in the comments if you have any questions.

About the Authors

Michael Cho is a Solutions Architect at AWS, where he works with customers to accelerate their mission on the cloud. He is passionate about architecting and building innovative solutions that empower customers. Lately, he has been dedicating his time to experimenting with generative AI for solving complex business problems.

Joe Morotti is a Solutions Architect at Amazon Web Services (AWS), working with Financial Services customers across the US. He has held a wide range of technical roles and enjoys showing customers the art of the possible. His passion areas include conversational AI, contact center, and generative AI. In his free time, he enjoys spending quality time with his family exploring new places and overanalyzing his sports team’s performance.

Vikas Shah is an Enterprise Solutions Architect at Amazon Web Services. He is a technology enthusiast who enjoys helping customers find innovative solutions to complex business challenges. His areas of interest are ML, IoT, robotics, and storage. In his spare time, Vikas enjoys building robots, hiking, and traveling.
