Machine learning operations (MLOps) is the combination of people, processes, and technology to productionize ML use cases efficiently. To achieve this, enterprise customers must develop MLOps platforms that support reproducibility, robustness, and end-to-end observability of the ML use case's lifecycle. These platforms are based on a multi-account setup that enforces strict security constraints, follows development best practices such as automatic deployment using continuous integration and delivery (CI/CD) technologies, and permits users to interact only by committing changes to code repositories. For more information about MLOps best practices, refer to the MLOps foundation roadmap for enterprises with Amazon SageMaker.

Terraform by HashiCorp has been embraced by many customers as the main infrastructure as code (IaC) approach to develop, build, deploy, and standardize AWS infrastructure for multi-cloud solutions. Furthermore, development repositories and CI/CD technologies such as GitHub and GitHub Actions, respectively, have been adopted widely by the DevOps and MLOps community across the world.

In this post, we show how to implement an MLOps platform based on Terraform using GitHub and GitHub Actions for the automatic deployment of ML use cases. Specifically, we deep dive on the necessary infrastructure and show you how to use custom Amazon SageMaker Projects templates, which contain example repositories that help data scientists and ML engineers deploy ML services (such as an Amazon SageMaker endpoint or batch transform job) using Terraform. You can find the source code in the following GitHub repository.

Solution overview

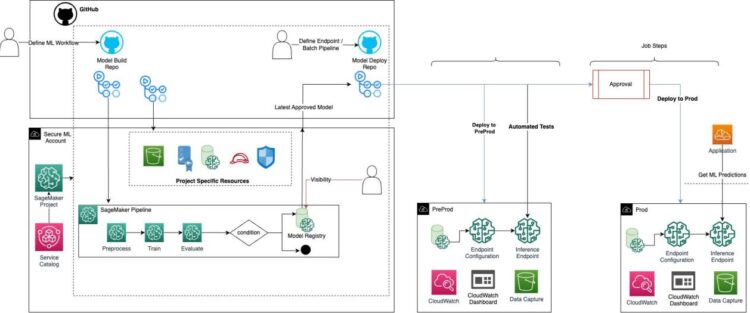

The MLOps architecture solution creates the necessary resources to build a comprehensive training pipeline, register the models in the Amazon SageMaker Model Registry, and deploy them to preproduction and production environments. This foundational infrastructure enables a systematic approach to ML operations, providing a robust framework that streamlines the journey from model development to deployment.

The end-users (data scientists or ML engineers) select the organization SageMaker Project template that fits their use case. SageMaker Projects helps organizations set up and standardize developer environments for data scientists and CI/CD systems for MLOps engineers. The project deployment creates, from the GitHub templates, a private GitHub repository and CI/CD resources that data scientists can customize according to their use case. Depending on the chosen SageMaker project, other project-specific resources are also created.

Custom SageMaker Project template

SageMaker Projects deploys the associated AWS CloudFormation template of the AWS Service Catalog product to provision and manage the infrastructure and resources required for your project, including the integration with a source code repository.

At the time of writing, four custom SageMaker Projects templates are available for this solution:

- MLOps template for LLM training and evaluation – An MLOps pattern that shows a simple one-account Amazon SageMaker Pipelines setup for large language models (LLMs). This template supports fine-tuning and evaluation.

- MLOps template for model building and training – An MLOps pattern that shows a simple one-account SageMaker Pipelines setup. This template supports model training and evaluation.

- MLOps template for model building, training, and deployment – An MLOps pattern to train models using SageMaker Pipelines and deploy the trained model into preproduction and production accounts. This template supports real-time inference, batch inference pipelines, and bring-your-own-containers (BYOC).

- MLOps template for promoting the full ML pipeline across environments – An MLOps pattern that shows how to take the same SageMaker pipeline across environments from dev to prod. This template supports a pipeline for batch inference.

Each SageMaker project template has associated GitHub repository templates that are cloned for use in your use case.

When a data scientist deploys a custom SageMaker project, the associated GitHub template repositories are cloned through an invocation of an AWS Lambda function.

Infrastructure Terraform modules

The Terraform code, found under base-infrastructure/terraform, is structured with reusable modules that are used across different deployment environments. Their instantiation can be found for each environment under base-infrastructure/terraform/. There are seven key reusable modules.

There are also some environment-specific resources, which can be found directly under base-infrastructure/terraform/.

Prerequisites

Before you start the deployment process, complete the following three steps:

- Prepare AWS accounts to deploy the platform. We recommend using three AWS accounts for three typical MLOps environments: experimentation, preproduction, and production. However, you can deploy the infrastructure to just one account for testing purposes.

- Create a GitHub organization.

- Create a personal access token (PAT). We recommend creating a service or platform account and using its PAT.

Bootstrap your AWS accounts for GitHub and Terraform

Before we can deploy the infrastructure, the AWS accounts you have vended need to be bootstrapped. This is required so that Terraform can manage the state of the deployed resources. Terraform backends enable secure, collaborative, and scalable infrastructure management by streamlining version control, locking, and centralized state storage. Therefore, we deploy an Amazon S3 bucket to store the state and an Amazon DynamoDB table for state locking and consistency checking.
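As a rough illustration of how the bootstrapped bucket and table are consumed, the following Python sketch assembles a terraform init command that points Terraform's S3 backend at them. The bucket name, table name, state key, and Region are hypothetical placeholders rather than the names the bootstrap creates, and the repository's workflows may wire the backend differently. The setting names (bucket, key, region, dynamodb_table, encrypt) are standard options of the S3 backend.

```python
from typing import Dict, List


def s3_backend_settings(bucket: str, lock_table: str, region: str, state_key: str) -> Dict[str, str]:
    """Collect the S3 backend settings Terraform needs for remote state."""
    return {
        "bucket": bucket,              # S3 bucket that stores the state file
        "key": state_key,              # object key of the state file inside the bucket
        "region": region,              # Region of the bucket and the lock table
        "dynamodb_table": lock_table,  # DynamoDB table used for state locking
        "encrypt": "true",             # request server-side encryption of the state
    }


def terraform_init_command(settings: Dict[str, str]) -> List[str]:
    """Build a `terraform init` invocation with one -backend-config flag per setting."""
    command = ["terraform", "init"]
    for name, value in settings.items():
        command.append(f"-backend-config={name}={value}")
    return command


if __name__ == "__main__":
    # All values below are hypothetical placeholders.
    settings = s3_backend_settings(
        bucket="example-terraform-state-111111111111",
        lock_table="example-terraform-state-locks",
        region="eu-west-1",
        state_key="example-env/terraform.tfstate",
    )
    print(" ".join(terraform_init_command(settings)))
```

Passing the backend settings as flags keeps account-specific values out of the Terraform code, which suits a setup where the same modules are deployed into several accounts.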

Bootstrapping is also required so that GitHub can assume a deployment role in your account. Therefore, we deploy an IAM role and an OpenID Connect (OIDC) identity provider (IdP). As an alternative to long-lived IAM user access keys, you can implement an OIDC IdP within your AWS account. This configuration lets GitHub use IAM roles and temporary credentials, which improves security and follows best practices.
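To make the OIDC setup more concrete, the following Python sketch builds the kind of IAM trust policy that lets GitHub Actions workflows assume a role through the GitHub OIDC provider. It is an illustration under assumptions: the account ID and organization name are placeholders, and the bootstrap template in the repository may scope the sub condition more narrowly (for example, to specific repositories or branches).

```python
import json
from typing import Any, Dict

# Host name of GitHub's OIDC token issuer for GitHub Actions.
GITHUB_OIDC_HOST = "token.actions.githubusercontent.com"


def github_oidc_trust_policy(account_id: str, github_org: str) -> Dict[str, Any]:
    """Return a trust policy that allows workflows in one GitHub organization to assume the role."""
    provider_arn = f"arn:aws:iam::{account_id}:oidc-provider/{GITHUB_OIDC_HOST}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # The OIDC identity provider registered in the AWS account
                "Principal": {"Federated": provider_arn},
                # Web identity federation yields temporary credentials
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    # The token must be issued for AWS STS
                    "StringEquals": {f"{GITHUB_OIDC_HOST}:aud": "sts.amazonaws.com"},
                    # Restrict to repositories of the given organization (assumed scope)
                    "StringLike": {f"{GITHUB_OIDC_HOST}:sub": f"repo:{github_org}/*"},
                },
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder account ID and organization name.
    print(json.dumps(github_oidc_trust_policy("111111111111", "example-org"), indent=2))
```

Restricting the sub claim matters: without it, a workflow in any GitHub repository could request credentials for the role.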

You can choose from two options to bootstrap your account: a bootstrap.sh Bash script and a bootstrap.yaml CloudFormation template, both stored at the root of the repository.

Bootstrap using a CloudFormation template

Complete the following steps to use the CloudFormation template:

- Make sure the AWS Command Line Interface (AWS CLI) is installed and credentials are loaded for the target account that you want to bootstrap.

- Identify the following:

  - Environment type of the account: dev, preprod, or prod.

  - Name of your GitHub organization.

  - (Optional) Customize the S3 bucket name for Terraform state files by choosing a prefix.

  - (Optional) Customize the DynamoDB table name for state locking.

- Run the following command, updating the details from Step 2:

Bootstrap using a Bash script

Complete the following steps to use the Bash script:

- Make sure the AWS CLI is installed and credentials are loaded for the target account that you want to bootstrap.

- Identify the following:

  - Environment type of the account: dev, preprod, or prod.

  - Name of your GitHub organization.

  - (Optional) Customize the S3 bucket name for Terraform state files by choosing a prefix.

  - (Optional) Customize the DynamoDB table name for state locking.

- Run the script (bash ./bootstrap.sh) and enter the details from Step 2 when prompted. You can leave most of these options as default.

If you change the TerraformStateBucketPrefix or TerraformStateLockTableName parameters, you must update the environment variables (S3_PREFIX and DYNAMODB_PREFIX) in the deploy.yml file to match.

Set up your GitHub organization

In the final step before infrastructure deployment, you must configure your GitHub organization by cloning code from this example into specific locations.

Base infrastructure

Create a new repository in your organization that will contain the base infrastructure Terraform code. Give your repository a unique name, and move the code from this example's base-infrastructure folder into your newly created repository. Make sure the .github folder, which stores the GitHub Actions workflow definitions, is also moved to the new repository. GitHub Actions makes it possible to automate, customize, and execute your software development workflows right in your repository. In this example, we use GitHub Actions as our preferred CI/CD tooling.

Next, set up some GitHub secrets in your repository. Secrets are variables that you create in an organization, repository, or repository environment. The secrets that you create are available to use in our GitHub Actions workflows. Complete the following steps to create your secrets:

- Navigate to the base infrastructure repository.

- Choose Settings, Secrets and Variables, and Actions.

- Create two secrets:

  - AWS_ASSUME_ROLE_NAME – This is the role created by the bootstrap script with the default name aws-github-oidc-role. If you chose a different role name, set the secret to that name.

  - PAT_GITHUB – This is your GitHub PAT, created in the prerequisite steps.

Template repositories

The template-repos folder of our example contains multiple folders with the seed code for our SageMaker Projects templates. Each folder should be added to your GitHub organization as a private template repository. Complete the following steps:

- Create a repository with the same name as the example folder, for every folder in the template-repos directory.

- Choose Settings in each newly created repository.

- Select the Private Template option.

Make sure you move all the code from the example folder to your private template, including the .github folder.

Update the configuration file

At the root of the base infrastructure folder is a config.json file. This file enables the multi-account, multi-environment mechanism. The example JSON structure is as follows:

For your MLOps environment, change the name of environment_name to your desired name, and update the AWS Region and account numbers accordingly. Note that the account numbers correspond to the AWS accounts you bootstrapped. This config.json lets you vend as many MLOps platforms as you want. To do so, create a new JSON object in the file with the respective environment name, Region, and bootstrapped account numbers. Then locate the GitHub Actions deployment workflow under .github/workflows/deploy.yaml and add your new environment name inside each list object in the matrix key. When we deploy our infrastructure using GitHub Actions, we use a matrix deployment to deploy to all our environments in parallel.
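The relationship between the configuration file and the deployment matrix can be sketched in Python as follows. The JSON layout and key names here (region, dev_account_number, preprod_account_number, prod_account_number) are hypothetical and chosen only for illustration; the repository's actual config.json may be structured differently. The sketch validates each environment entry and derives the list of environment names that would need to appear in the workflow's matrix.

```python
import json
from typing import Dict, List

# Hypothetical layout for illustration only; the repository's config.json may use other keys.
EXAMPLE_CONFIG = """
{
  "example-env": {
    "region": "eu-west-1",
    "dev_account_number": "111111111111",
    "preprod_account_number": "222222222222",
    "prod_account_number": "333333333333"
  }
}
"""

ACCOUNT_KEYS = ("dev_account_number", "preprod_account_number", "prod_account_number")
REQUIRED_KEYS = ("region",) + ACCOUNT_KEYS


def load_environments(config_text: str) -> Dict[str, Dict[str, str]]:
    """Parse the config and check that every environment has a Region and three account IDs."""
    environments = json.loads(config_text)
    for name, settings in environments.items():
        missing = [key for key in REQUIRED_KEYS if key not in settings]
        if missing:
            raise ValueError(f"{name}: missing keys {missing}")
        for key in ACCOUNT_KEYS:
            account = str(settings[key])
            # AWS account IDs are 12-digit numbers
            if not (len(account) == 12 and account.isdigit()):
                raise ValueError(f"{name}: {key} must be a 12-digit AWS account ID")
    return environments


def matrix_environment_names(environments: Dict[str, Dict[str, str]]) -> List[str]:
    """Return the environment names to list under the workflow's matrix key."""
    return sorted(environments)


if __name__ == "__main__":
    print(matrix_environment_names(load_environments(EXAMPLE_CONFIG)))
```

A check like this could run before deployment to catch an environment that was added to the file but has an incomplete entry.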

Deploy the infrastructure

Now that you have set up your GitHub organization, you're ready to deploy the infrastructure into the AWS accounts. Infrastructure changes deploy automatically when changes are made to the main branch, so updating the config file should trigger the infrastructure deployment. To launch your first deployment manually, complete the following steps:

- Navigate to your base infrastructure repository.

- Select the Actions tab.

- Select Deploy Infrastructure.

- Choose Run Workflow and choose your desired branch for deployment.

This launches the GitHub Actions workflow that deploys the experimentation, preproduction, and production infrastructure in parallel. You can visualize these deployments on the Actions tab.

Now your AWS accounts contain the necessary infrastructure for your MLOps platform.

End-user experience

The following demonstration illustrates the end-user experience.

Clean up

To delete the multi-account infrastructure created by this example and avoid further charges, complete the following steps:

- In the development AWS account, manually delete the SageMaker projects, SageMaker domain, SageMaker user profiles, Amazon Elastic File System (Amazon EFS) storage, and AWS security groups created by SageMaker.

- In the development AWS account, you might need to provide additional permissions to the launch_constraint_role IAM role. This IAM role is used as a launch constraint. Service Catalog uses this role's permissions to delete the provisioned products.

- In the development AWS account, manually delete the resources created by SageMaker Projects, such as repositories (Git), pipelines, experiments, model groups, and endpoints.

- For preproduction and production AWS accounts, manually delete the S3 bucket ml-artifacts- and the model deployed through the pipeline.

- After you complete these changes, trigger the GitHub workflow for destroying.

- If the resources aren't deleted, manually delete the pending resources.

- Delete the IAM user that you created for GitHub Actions.

- Delete the secret in AWS Secrets Manager that stores the GitHub personal access token.

Conclusion

In this post, we walked through the process of deploying an MLOps platform based on Terraform and using GitHub and GitHub Actions for the automatic deployment of ML use cases. This solution integrates four custom SageMaker Projects templates for model building, training, evaluation, and deployment with specific SageMaker pipelines. In our scenario, we focused on deploying a multi-account and multi-environment MLOps platform. For a comprehensive understanding of the implementation details, visit the GitHub repository.

About the authors

Jordan Grubb is a DevOps Architect at AWS, specializing in MLOps. He enables AWS customers to achieve their business outcomes by delivering automated, scalable, and secure cloud architectures. Jordan is also an inventor, with two patents within software engineering. Outside of work, he enjoys playing most sports and traveling, and has a passion for health and wellness.

Irene Arroyo Delgado is an AI/ML and GenAI Specialist Solutions Architect at AWS. She focuses on bringing out the potential of generative AI for each use case and productionizing ML workloads, to achieve customers' desired business outcomes by automating end-to-end ML lifecycles. In her free time, Irene enjoys traveling and hiking.
