This post is co-written with Tatia Tsmindashvili, Ana Kolkhidashvili, Guram Dentoshvili, and Dachi Choladze from Impel.

Impel transforms automotive retail through an AI-powered customer lifecycle management solution that drives dealership operations and customer interactions. Their core product, Sales AI, provides all-day personalized customer engagement, handling vehicle-specific questions and automotive trade-in and financing inquiries. By replacing their existing third-party large language model (LLM) with a fine-tuned Meta Llama model deployed on Amazon SageMaker AI, Impel achieved 20% improved accuracy and greater cost controls. The implementation used the comprehensive feature set of Amazon SageMaker, including model training, Activation-Aware Weight Quantization (AWQ), and Large Model Inference (LMI) containers. This domain-specific approach not only improved output quality but also improved security and operational overhead compared to general-purpose LLMs.

In this post, we share how Impel enhances the automotive dealership customer experience with fine-tuned LLMs on SageMaker.

Impel’s Sales AI

Impel optimizes how automotive retailers connect with customers by delivering personalized experiences at every touchpoint, from initial research to purchase, service, and repeat business. It acts as a digital concierge for vehicle owners while giving retailers personalization capabilities for customer interactions. Sales AI uses generative AI to provide instant responses around the clock to prospective customers through email and text. This maintained engagement during the early stages of a customer’s car buying journey leads to showroom appointments or direct connections with sales teams. Sales AI has three core features to provide this consistent customer engagement:

- Summarization – Summarizes past customer engagements to derive customer intent

- Follow-up generation – Provides consistent follow-up to engaged customers to help prevent stalled customer purchasing journeys

- Response personalization – Personalizes responses to align with retailer messaging and the customer’s purchasing specifications

Two key factors drove Impel to transition from their existing LLM provider: the need for model customization and cost optimization at scale. Their previous solution’s per-token pricing model became cost-prohibitive as transaction volumes grew, and limitations on fine-tuning prevented them from fully using their proprietary data for model improvement. By deploying a fine-tuned Meta Llama model on SageMaker, Impel achieved the following:

- Cost predictability through hosted pricing, mitigating per-token charges

- Greater control of model training and customization, leading to 20% improvement across core features

- Secure processing of proprietary data within their AWS account

- Automatic scaling to meet the spike in inference demand

Solution overview

Impel chose SageMaker AI, a fully managed cloud service that builds, trains, and deploys machine learning (ML) models using AWS infrastructure, tools, and workflows, to fine-tune a Meta Llama model for Sales AI. Meta Llama is a powerful model, well-suited for industry-specific tasks due to its strong instruction-following capabilities, support for extended context windows, and efficient handling of domain knowledge.

Impel used SageMaker LMI containers to deploy LLM inference on SageMaker endpoints. These purpose-built Docker containers offer optimized performance for models like Meta Llama with support for LoRA fine-tuned models and AWQ. Impel used LoRA fine-tuning, an efficient and cost-effective way to adapt LLMs for specialized applications, through Amazon SageMaker Studio notebooks running on ml.p4de.24xlarge instances. This managed environment simplified the development process, enabling Impel’s team to seamlessly integrate popular open source tools like PyTorch and torchtune for model training. For model optimization, Impel applied AWQ techniques to reduce model size and improve inference performance.
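To give a rough sense of why LoRA and AWQ are described as efficient, the following sketch works through the arithmetic for a single weight matrix. The layer shape, LoRA rank, and bit widths are hypothetical values chosen for illustration; the post does not state the Llama variant or the hyperparameters Impel used.

```python
# Illustrative arithmetic only: the layer shape, LoRA rank, and bit widths
# below are hypothetical and do not come from Impel's configuration.

def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters in the two low-rank factors B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


def weight_bytes(num_params: int, bits_per_weight: int) -> int:
    """Storage for the weights alone, ignoring quantization scales and zero points."""
    return num_params * bits_per_weight // 8


d_out, d_in, rank = 4096, 4096, 8           # hypothetical projection layer and rank
full_params = d_out * d_in                  # weights updated by full fine-tuning
lora_params = lora_trainable_params(d_out, d_in, rank)

print(f"full fine-tuning: {full_params:,} trainable weights")
print(f"LoRA (rank {rank}): {lora_params:,} trainable weights "
      f"({lora_params / full_params:.2%} of the layer)")

fp16_bytes = weight_bytes(full_params, 16)
int4_bytes = weight_bytes(full_params, 4)   # AWQ is commonly used with 4-bit weights
print(f"16-bit weights: {fp16_bytes / 2**20:.0f} MiB, "
      f"4-bit weights: {int4_bytes / 2**20:.0f} MiB")
```

Under these assumptions, LoRA trains well under 1% of the layer's weights, and 4-bit quantization stores the layer in a quarter of the space of 16-bit weights. Real savings depend on the model, the layers adapted, and the quantization group size.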

In production, Impel deployed inference endpoints on ml.g6e.12xlarge instances, powered by four NVIDIA GPUs and high memory capacity, suitable for serving large models like Meta Llama efficiently. Impel used the SageMaker built-in automatic scaling feature to automatically scale serving containers based on concurrent requests, which helped meet variable production traffic demands while optimizing for cost.
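Scaling on concurrent requests is typically configured through Application Auto Scaling with a target tracking policy. The following is a minimal sketch of what such a configuration could look like with boto3. The endpoint name, variant name, capacities, target value, and cooldowns are hypothetical placeholders and not Impel's production settings.

```python
# Sketch only: endpoint name, variant name, capacities, target value, and
# cooldowns are hypothetical placeholders, not Impel's production settings.

def build_concurrency_scaling(endpoint: str, variant: str, target_concurrency: float,
                              min_instances: int, max_instances: int):
    """Return kwargs for Application Auto Scaling's register_scalable_target
    and put_scaling_policy calls."""
    common = {
        "ServiceNamespace": "sagemaker",
        "ResourceId": f"endpoint/{endpoint}/variant/{variant}",
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
    }
    target = {**common, "MinCapacity": min_instances, "MaxCapacity": max_instances}
    policy = {
        **common,
        "PolicyName": f"{endpoint}-concurrency-target-tracking",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            # Desired average number of in-flight requests per model copy.
            "TargetValue": target_concurrency,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType":
                    "SageMakerVariantConcurrentRequestsPerModelHighResolution",
            },
            "ScaleOutCooldown": 60,
            "ScaleInCooldown": 300,
        },
    }
    return target, policy


def apply_scaling(target: dict, policy: dict) -> None:
    """Send both requests to AWS; requires boto3 and valid credentials."""
    import boto3  # imported lazily so the builder above runs without AWS access
    client = boto3.client("application-autoscaling")
    client.register_scalable_target(**target)
    client.put_scaling_policy(**policy)


target, policy = build_concurrency_scaling(
    endpoint="sales-ai-llama", variant="AllTraffic",
    target_concurrency=8.0, min_instances=1, max_instances=4)
print(policy["ResourceId"])
```

Keeping the request construction separate from the AWS calls makes the configuration easy to review and test before `apply_scaling` is run against a real endpoint.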

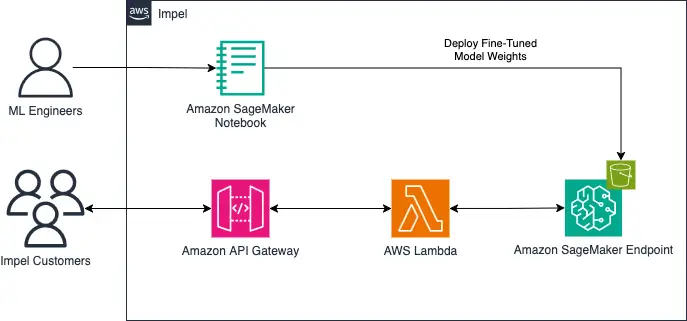

The next diagram illustrates the answer structure, showcasing mannequin fine-tuning and buyer inference.

Impel’s R&D team partnered closely with various AWS teams, including its Account team, GenAI strategy team, and SageMaker service team. This virtual team collaborated over multiple sprints leading up to the fine-tuned Sales AI launch date to review model evaluations, benchmark SageMaker performance, optimize scaling strategies, and identify the optimal SageMaker instances. This partnership encompassed technical sessions, strategic alignment meetings, and cost and operational discussions for post-implementation. The tight collaboration between Impel and AWS was instrumental in realizing the full potential of Impel’s fine-tuned model hosted on SageMaker AI.

Fine-tuned model evaluation process

Impel’s transition to its fine-tuned Meta Llama model delivered improvements across key performance metrics, with noticeable gains in understanding automotive-specific terminology and generating personalized responses. Structured human evaluations revealed improvements in critical customer interaction areas: personalized replies improved from 73% to 86% accuracy, conversation summarization increased from 70% to 83%, and follow-up message generation showed the most significant gain, jumping from 59% to 92% accuracy. The following screenshot shows how customers interact with Sales AI. The model evaluation process included Impel’s R&D team grading various use cases served by the incumbent LLM provider and Impel’s fine-tuned models.

Example of a customer interaction with Sales AI.
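The per-feature evaluation results can be tabulated to see how they relate to the headline figure. The short sketch below computes the percentage-point gain for each feature and their mean. Reading the 20% improvement as the mean percentage-point gain is an assumption; the post does not state how that number was derived.

```python
# Accuracy figures are the human-evaluation results quoted in this post.
# Treating the headline "20%" as the mean percentage-point gain is an
# assumption; the post does not state how that figure was derived.

results = {
    "personalized replies": (73, 86),
    "conversation summarization": (70, 83),
    "follow-up generation": (59, 92),
}

# Gain per feature, in percentage points (after minus before).
gains = {feature: after - before for feature, (before, after) in results.items()}
mean_gain = sum(gains.values()) / len(gains)

for feature, gain in gains.items():
    print(f"{feature}: +{gain} percentage points")
print(f"mean gain: {mean_gain:.1f} percentage points")
```

Under this reading, the three gains of 13, 13, and 33 points average to roughly 20 points, with follow-up generation contributing most of the improvement.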

In addition to output quality, Impel measured latency and throughput to validate the model’s production readiness. Using awscurl for SigV4-signed HTTP requests, the team confirmed these improvements in real-world performance metrics, ensuring optimal customer experience in production environments.
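As an illustration of how latency and throughput measurements like these can be summarized, the following sketch times a sequence of requests and reports percentiles. It is a Python stand-in, not the awscurl workflow Impel used: the `send` callable is a hypothetical client (for example, a wrapper around a signed HTTP request), and the sample latencies are made up.

```python
import math
import time
from typing import Callable, Iterable


def percentile(sorted_values: list[float], pct: float) -> float:
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(pct / 100 * len(sorted_values)))
    return sorted_values[rank - 1]


def summarize(latencies_s: list[float], wall_clock_s: float) -> dict[str, float]:
    """Reduce per-request latencies to the figures usually reported for an endpoint."""
    ordered = sorted(latencies_s)
    return {
        "requests": len(ordered),
        "p50_s": percentile(ordered, 50),
        "p95_s": percentile(ordered, 95),
        "throughput_rps": len(ordered) / wall_clock_s,
    }


def benchmark(send: Callable[[str], object], prompts: Iterable[str]) -> dict[str, float]:
    """Call `send` once per prompt, sequentially, and time each call."""
    latencies = []
    start = time.perf_counter()
    for prompt in prompts:
        t0 = time.perf_counter()
        send(prompt)  # hypothetical client, e.g. a wrapper around a signed HTTP request
        latencies.append(time.perf_counter() - t0)
    return summarize(latencies, time.perf_counter() - start)


# Deterministic example with made-up latencies (seconds), not measured data.
print(summarize([0.8, 1.0, 1.2, 0.9, 2.0], wall_clock_s=5.9))
```

Because `benchmark` sends requests one at a time, its throughput figure reflects a single client. Measuring an endpoint under concurrent load would need parallel senders.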

Using domain-specific models for better performance

Impel’s evolution of Sales AI progressed from a general-purpose LLM to a domain-specific, fine-tuned model. Using anonymized customer interaction data, Impel fine-tuned a publicly available foundation model, resulting in several key improvements. The new model exhibited a 20% increase in accuracy across core features, showcasing enhanced automotive industry comprehension and more efficient context window utilization. By transitioning to this approach, Impel achieved three main benefits:

- Enhanced data security through in-house processing within their AWS accounts

- Reduced reliance on external APIs and third-party providers

- Greater operational control for scaling and customization

These advancements, coupled with the significant output quality improvement, validated Impel’s strategic shift towards a domain-specific AI model for Sales AI.

Expanding AI innovation in automotive retail

Impel’s success deploying fine-tuned models on SageMaker has established a foundation for extending its AI capabilities to support a broader range of use cases tailored to the automotive industry. Impel is planning to transition to in-house, domain-specific models to extend the benefits of improved accuracy and performance throughout their Customer Engagement Product suite.

Looking ahead, Impel’s R&D team is advancing their AI capabilities by incorporating Retrieval Augmented Generation (RAG) workflows, advanced function calling, and agentic workflows. These innovations can help deliver adaptive, context-aware systems designed to interact, reason, and act across complex automotive retail tasks.

Conclusion

In this post, we discussed how Impel has enhanced the automotive dealership customer experience with fine-tuned LLMs on SageMaker.

For organizations considering similar transitions to fine-tuned models, Impel’s experience demonstrates how working with AWS can help achieve both accuracy improvements and model customization opportunities while building long-term AI capabilities tailored to specific industry needs. Connect with your account team or visit Amazon SageMaker AI to learn how SageMaker can help you deploy and manage fine-tuned models.

About the Authors

Nicholas Scozzafava is a Senior Solutions Architect at AWS, focused on startup customers. Prior to his current role, he helped enterprise customers navigate their cloud journeys. He’s passionate about cloud infrastructure, automation, DevOps, and helping customers build and scale on AWS.

Sam Sudakoff is a Senior Account Manager at AWS, focused on strategic startup ISVs. Sam specializes in technology landscapes, AI/ML, and AWS solutions. Sam’s passion lies in scaling startups and driving SaaS and AI transformations. Notably, his work with AWS’s top startup ISVs has focused on building strategic partnerships and implementing go-to-market initiatives that bridge enterprise technology with innovative startup solutions, while maintaining strict adherence to data security and privacy requirements.

Vivek Gangasani is a Lead Specialist Solutions Architect for Inference at AWS. He helps emerging generative AI companies build innovative solutions using AWS services and accelerated compute. Currently, he’s focused on developing strategies for fine-tuning and optimizing the inference performance of large language models. In his free time, Vivek enjoys hiking, watching movies, and trying different cuisines.

Dmitry Soldatkin is a Senior AI/ML Solutions Architect at AWS, helping customers design and build AI/ML solutions. Dmitry’s work covers a wide range of ML use cases, with a primary interest in generative AI, deep learning, and scaling ML across the enterprise. He has helped companies in many industries, including insurance, financial services, utilities, and telecommunications. Prior to joining AWS, Dmitry was an architect, developer, and technology leader in data analytics and machine learning fields in the financial services industry.

Tatia Tsmindashvili is a Senior Deep Learning Researcher at Impel with an MSc in Biomedical Engineering and Medical Informatics. She has over 5 years of experience in AI, with interests spanning LLM agents, simulations, and neuroscience. You can find her on LinkedIn.

Ana Kolkhidashvili is the Director of R&D at Impel, where she leads AI initiatives focused on large language models and automated conversation systems. She has over 8 years of experience in AI, specializing in large language models, automated conversation systems, and NLP. You can find her on LinkedIn.

Guram Dentoshvili is the Director of Engineering and R&D at Impel, where he leads the development of scalable AI solutions and drives innovation across the company’s conversational AI products. He began his career at Pulsar AI as a Machine Learning Engineer and played a key role in building AI technologies tailored to the automotive industry. You can find him on LinkedIn.

Dachi Choladze is the Chief Innovation Officer at Impel, where he leads initiatives in AI strategy, innovation, and product development. He has over 10 years of experience in technology entrepreneurship and artificial intelligence. Dachi is the co-founder of Pulsar AI, Georgia’s first globally successful AI startup, which later merged with Impel. You can find him on LinkedIn.

Deepam Mishra is a Sr Advisor to Startups at AWS and advises startups on ML, Generative AI, and AI Safety and Responsibility. Before joining AWS, Deepam co-founded and led an AI business at Microsoft Corporation and Wipro Technologies. Deepam has been a serial entrepreneur and investor, having founded 4 AI/ML startups. Deepam is based in the NYC metro area and enjoys meeting AI founders.
