A lot of my prospects ask for recommendation on which LLM (Giant Language Mannequin) to make use of for constructing merchandise tailor-made to Dutch-speaking customers. Nonetheless, most out there benchmarks are multilingual and don’t particularly deal with Dutch. As a machine studying engineer and PhD researcher into machine studying on the College of Amsterdam, I understand how essential benchmarks have been to the development of AI — however I additionally perceive the dangers when benchmarks are trusted blindly. That is why I made a decision to experiment and run some Dutch-specific benchmarking of my very own.

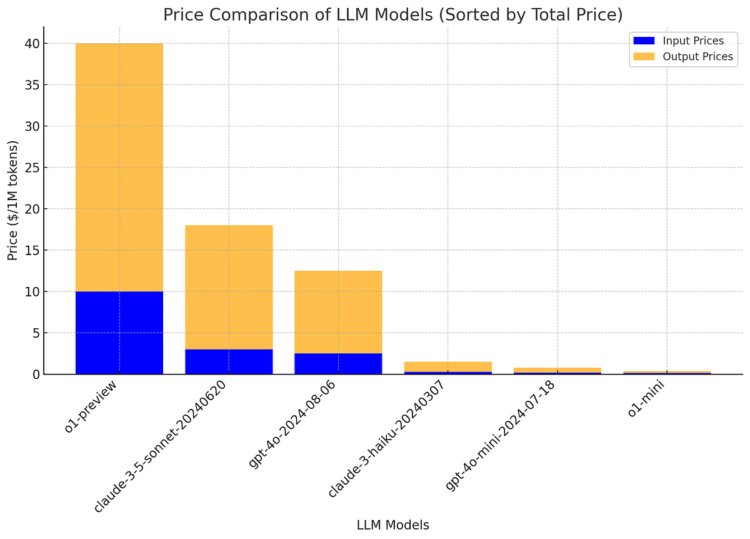

On this submit, you’ll discover an in-depth take a look at my first try at benchmarking a number of giant language fashions (LLMs) on actual Dutch examination questions. I’ll information you thru your entire course of, from gathering over 12,000 examination PDFs to extracting question-answer pairs and grading the fashions’ efficiency mechanically utilizing LLMs. You’ll see how fashions like o1-preview, o1-mini, GPT-4o, GPT-4o-mini, and Claude-3 carried out throughout totally different Dutch instructional ranges, from VMBO to VWO, and whether or not the upper prices of sure fashions result in higher outcomes. That is only a first go on the drawback, and I could dive deeper with extra posts like this sooner or later, exploring different fashions and duties. I’ll additionally speak concerning the challenges and prices concerned and share some insights on which fashions provide one of the best worth for Dutch-language duties. When you’re constructing or scaling LLM-based merchandise for the Dutch market, this submit will present invaluable insights to assist information your selections as of September 2024.

It’s turning into extra widespread for corporations like OpenAI to make daring, nearly extravagant claims concerning the capabilities of their fashions, typically with out sufficient real-world validation to again them up. That’s why benchmarking these fashions is so essential — particularly after they’re marketed as fixing all the things from complicated reasoning to nuanced language understanding. With such grand claims, it’s very important to run goal assessments to see how effectively they really carry out, and extra particularly, how they deal with the distinctive challenges of the Dutch language.

I used to be shocked to search out that there hasn’t been intensive analysis into benchmarking LLMs for Dutch, which is what led me to take issues into my very own fingers on a wet afternoon. With so many establishments and firms counting on these fashions an increasing number of, it felt like the precise time to dive in and begin validating these fashions. So, right here’s my first try to begin filling that hole, and I hope it provides invaluable insights for anybody working with the Dutch-language.

A lot of my prospects work with Dutch-language merchandise, they usually want AI fashions which can be each cost-effective and extremely performant in understanding and processing Dutch. Though giant language fashions (LLMs) have made spectacular strides, many of the out there benchmarks deal with English or multilingual capabilities, typically neglecting the nuances of smaller languages like Dutch. This lack of deal with Dutch is important as a result of linguistic variations can result in giant efficiency gaps when a mannequin is requested to grasp non-English texts.

5 years in the past, NLP — deep studying fashions for Dutch have been removed from mature (Like the primary variations of BERT). On the time, conventional strategies like TF-IDF paired with logistic regression typically outperformed early deep-learning fashions on Dutch language duties I labored on. Whereas fashions (and datasets) have since improved tremendously, particularly with the rise of transformers and multilingual pre-trained LLMs, it’s nonetheless crucial to confirm how effectively these advances translate to particular languages like Dutch. The idea that efficiency good points in English carry over to different languages isn’t all the time legitimate, particularly for complicated duties like studying comprehension.

That’s why I centered on making a customized benchmark for Dutch, utilizing actual examination information from the Dutch “Nederlands” exams (These exams enter the general public area after they’ve been revealed). These exams don’t simply contain easy language processing; they check “begrijpend lezen” (studying comprehension), requiring college students to grasp the intent behind numerous texts and reply nuanced questions on them. Such a activity is especially essential as a result of it’s reflective of real-world purposes, like processing and summarizing authorized paperwork, information articles, or buyer queries written in Dutch.

By benchmarking LLMs on this particular activity, I needed to achieve deeper insights into how fashions deal with the complexity of the Dutch language, particularly when requested to interpret intent, draw conclusions, and reply with correct solutions. That is essential for companies constructing merchandise tailor-made to Dutch-speaking customers. My objective was to create a extra focused, related benchmark to assist determine which fashions provide one of the best efficiency for Dutch, relatively than counting on basic multilingual benchmarks that don’t absolutely seize the intricacies of the language.