This is a guest post co-written with Skello.

Skello is a leading human resources (HR) software as a service (SaaS) solution focusing on employee scheduling and workforce management. Catering to diverse sectors such as hospitality, retail, healthcare, construction, and industry, Skello offers features including schedule creation, time tracking, and payroll preparation. With approximately 20,000 customers and 400,000 daily users across Europe as of 2024, Skello continually innovates to meet its clients’ evolving needs.

One such innovation is the implementation of an AI-powered assistant to enhance user experience and data accessibility. In this post, we explain how Skello used Amazon Bedrock to create this AI assistant for end-users while maintaining customer data safety in a multi-tenant environment. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) through a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI.

We dive deep into the challenges of implementing large language models (LLMs) for data querying, particularly in the context of a French company operating under the General Data Protection Regulation (GDPR). Our solution demonstrates how to balance powerful AI capabilities with strict data protection requirements.

Challenges with multi-tenant data access

As Skello’s platform grew to serve thousands of businesses, we identified a critical need: our users needed better ways to access and understand their workforce data. Many of our customers, particularly those in HR and operations roles, found traditional database querying tools too technical and time-consuming. This led us to identify two key areas for improvement:

- Quick access to non-structured data – Our users needed to find specific information across various data types: employee records, scheduling data, attendance logs, and performance metrics. Traditional search methods often fell short when users had complex questions like “Show me all part-time employees who worked more than 30 hours last month” or “What’s the average sick leave duration in the retail department?”

- Visualization of data through graphs for analytics – Although our platform collected comprehensive workforce data, users struggled to transform this raw information into actionable insights. They needed an intuitive way to create visual representations of trends and patterns without writing complex SQL queries or learning specialized business intelligence tools.

To address these challenges, we needed a solution that could:

- Understand natural language questions about complex workforce data

- Correctly interpret context and intent from user queries

- Generate appropriate database queries while respecting data access rules

- Return results in user-friendly formats, including visualizations

- Handle variations in how users might phrase similar questions

- Process queries about time-based data and trends

LLMs emerged as the ideal solution for this task. Their ability to understand natural language and context, combined with their capability to generate structured outputs, made them well suited to translating user questions into precise database queries. However, implementing LLMs in a business-critical application required careful consideration of security, accuracy, and performance requirements.

Solution overview

Using LLMs to generate structured queries from natural language input is an emerging area of interest. This process transforms user requests into organized data structures, which can then be used to query databases automatically.

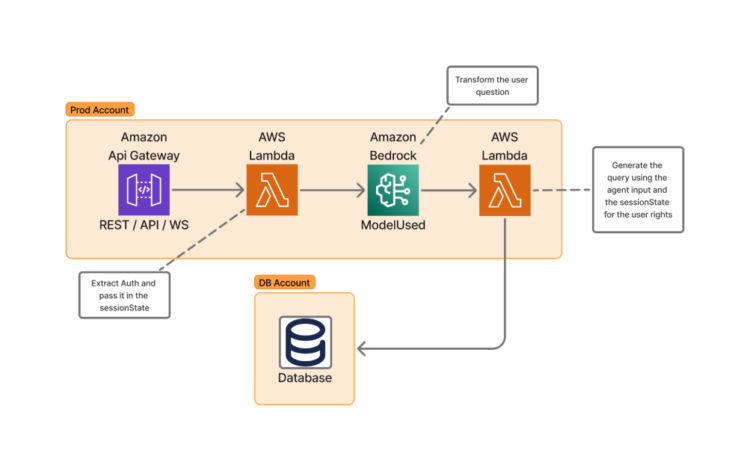

The next diagram of Skello’s high-level structure illustrates this person request transformation course of.

The implementation using AWS Lambda and Amazon Bedrock provides several advantages:

- Scalability through serverless architecture

- Cost-effective processing with pay-as-you-go pricing

- Low-latency performance

- Access to advanced language models like Anthropic’s Claude 3.5 Sonnet

- Rapid deployment capabilities

- Flexible integration options

Basic query generation process

The following diagram illustrates how we transform natural language queries into structured database requests. For this example, the user asks “Give me the gender parity.”

The process works as follows:

- The authentication service validates the user’s identity and permissions.

- The LLM converts the natural language to a structured query format.

- The query validation service enforces compliance with security policies.

- The database access layer executes the query within the user’s permitted scope.
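The four steps above can be sketched as a small pipeline. This is a minimal illustration rather than Skello’s production code: `UserContext`, `StructuredQuery`, `fake_llm`, the `headcount` metric, and the allow-list are all hypothetical names, and the model call is stubbed so the example runs without AWS access.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class UserContext:
    """Identity and permissions resolved by the authentication service."""
    user_id: str
    tenant_id: str
    allowed_metrics: frozenset


@dataclass
class StructuredQuery:
    """Structured output the LLM is asked to produce (hypothetical shape)."""
    metric: str
    group_by: list = field(default_factory=list)


# Hypothetical allow-list enforced by the query validation service.
ALLOWED_GROUP_BY = {"gender", "position", "week"}


def authenticate(token: str, sessions: dict) -> UserContext:
    """Step 1: validate the user's identity and load their permissions."""
    if token not in sessions:
        raise PermissionError("unknown or expired session")
    return sessions[token]


def fake_llm(question: str) -> StructuredQuery:
    """Step 2 (stubbed): a real system would call a model here."""
    if "gender parity" in question.lower():
        return StructuredQuery(metric="headcount", group_by=["gender"])
    raise ValueError("question not understood")


def validate(query: StructuredQuery, user: UserContext) -> StructuredQuery:
    """Step 3: reject anything outside the security policy."""
    if query.metric not in user.allowed_metrics:
        raise PermissionError(f"metric not permitted: {query.metric}")
    unknown = set(query.group_by) - ALLOWED_GROUP_BY
    if unknown:
        raise ValueError(f"unsupported grouping: {sorted(unknown)}")
    return query


def execute(query: StructuredQuery, user: UserContext, rows: list) -> dict:
    """Step 4: count rows per group, restricted to the user's tenant."""
    counts: dict = {}
    for row in rows:
        if row["tenant_id"] != user.tenant_id:
            continue  # scope comes from the session, never from the LLM
        key = tuple(row[col] for col in query.group_by)
        counts[key] = counts.get(key, 0) + 1
    return counts


def answer(token: str, question: str, sessions: dict, rows: list) -> dict:
    """Run the four steps in order for one user question."""
    user = authenticate(token, sessions)
    query = validate(fake_llm(question), user)
    return execute(query, user, rows)
```

The point of the design is ordering: permissions are resolved before the model is involved, and the model’s output is treated as untrusted input to the validation step.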

Handling complex queries

For more sophisticated requests like “Give me the worked hours per week per position for the last 3 months,” our system completes the following steps:

- Extract query components:

  - Target metric: worked hours

  - Aggregation levels: week, position

  - Time frame: 3 months

- Generate temporal calculations:

  - Use relative time expressions instead of hard-coded dates

  - Implement standardized date handling patterns

Data schema optimization

To make our system as efficient and user-friendly as possible, we carefully organized our data structure, much like creating a well-organized filing system for a large office.

We created standardized schema definitions, establishing consistent ways to store similar types of information. For example, date-related fields (hire dates, shift times, vacation periods) follow the same format. This helps prevent confusion when users ask questions like “Show me all events from last week.” It’s similar to having all calendars in your office using the same date format instead of some using MM/DD/YY and others using DD/MM/YY.

Our system employs consistent naming conventions with clear, predictable names for all data fields. Instead of technical abbreviations like emp_typ_cd, we use clear terms like employee_type. This makes it straightforward for the AI to understand what users mean when they ask questions like “Show me all full-time employees.”

For optimized search patterns, we strategically organized our data to make common searches fast and efficient. This is particularly important because it directly affects user experience and system performance. We analyzed usage patterns to identify the most frequently requested information and designed our database indexes accordingly. Additionally, we created specialized data views that pre-aggregate common report requests. This comprehensive approach means questions like “Who’s working today?” get answered almost instantly.

We also established clear data relationships by mapping out how different pieces of information relate to each other. For example, we clearly connect employees to their departments, shifts, and managers. This helps answer complex questions like “Show me all department managers who have team members on vacation next week.”

These optimizations deliver real benefits to our users:

- Faster response times when asking questions

- More accurate answers to queries

- Less confusion when referring to specific types of data

- Ability to ask more complex questions about relationships between different types of information

- Consistent results when asking similar questions in different ways

For example, whether a user asks “Show me everyone’s vacation time” or “Display all holiday schedules,” the system understands they’re looking for the same type of information. This reliability makes the system more trustworthy and easier to use for everyone, regardless of their technical background.

Graph generation and display

One of the most powerful features of our system is its ability to turn data into meaningful visual charts and graphs automatically. This includes the following actions:

- Smart label creation – The system understands what your data means and creates clear, readable labels. For example, if you ask “Show me employee attendance over the last 6 months,” the horizontal axis automatically labels the months (January through June), the vertical axis shows attendance numbers with easy-to-read intervals, and the title clearly states what you’re looking at: “Employee Attendance Trends.”

- Automatic legend creation – The system creates helpful legends that explain what each part of the chart means. For instance, if you ask “Compare sales across different departments,” different departments get different colors, a clear legend shows which color represents which department, and additional information like “Dashed lines show previous year” is automatically added when needed.

- Choosing the right type of chart – The system is smart about selecting the best way to show your information. For example, it uses bar charts for comparing different categories (“Show me sales by department”), line graphs for trends over time (“How has attendance changed this year?”), pie charts for showing parts of a whole (“What’s the breakdown of full-time vs. part-time staff?”), and heat maps for complex patterns (“Show me busiest hours per day of the week”).

- Smart sizing and scaling – The system automatically adjusts the size and scale of charts to make them easy to read. For example, if numbers range from 1–100, it might show intervals of 10; if you’re looking at millions, it might show them in a more readable way (1M, 2M, and so on); charts automatically resize to show patterns clearly; and important details are never too small to see.
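The chart selection and scaling behavior described in this list can be approximated with a few simple rules. The sketch below is illustrative only: the rule set, the `TIME_DIMENSIONS` names, and the functions `choose_chart`, `tick_step`, and `format_tick` are assumptions made for the example, not a description of the actual system.

```python
import math
from enum import Enum


class ChartType(Enum):
    BAR = "bar"
    LINE = "line"
    PIE = "pie"
    HEATMAP = "heatmap"


# Hypothetical names of grouping columns that represent time.
TIME_DIMENSIONS = {"hour", "day_of_week", "week", "month", "year"}


def choose_chart(dimensions: list, is_share_of_total: bool = False) -> ChartType:
    """Pick a chart type from the shape of the result set."""
    time_dims = [d for d in dimensions if d in TIME_DIMENSIONS]
    if len(time_dims) >= 2:
        return ChartType.HEATMAP  # for example, hour by day of week
    if is_share_of_total:
        return ChartType.PIE      # parts of a whole
    if time_dims:
        return ChartType.LINE     # trend over time
    return ChartType.BAR          # comparison across categories


def tick_step(max_value: float, target_ticks: int = 10) -> float:
    """Round the raw axis step up to 1, 2, or 5 times a power of ten."""
    if max_value <= 0:
        raise ValueError("max_value must be positive")
    raw = max_value / target_ticks
    magnitude = 10 ** math.floor(math.log10(raw))
    for nice in (1, 2, 5):
        if raw <= nice * magnitude:
            return nice * magnitude
    return 10 * magnitude


def format_tick(value: float) -> str:
    """Abbreviate millions so axis labels stay short."""
    if abs(value) >= 1_000_000:
        return f"{value / 1_000_000:g}M"
    return f"{value:g}"
```

For a value range of 1–100 this yields a step of 10, and a value of 2,000,000 is labeled `2M`, matching the examples in the list above.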

All of this happens automatically: you ask your question, and the system handles the technical details of creating a clear, professional visualization. For example, the following figure shows the result for the question “How many hours my employees worked over the past 7 weeks?”

Security-first architecture

Our implementation adheres to OWASP best practices (specifically LLM06) by maintaining complete separation between security controls and the LLM.

Through dedicated security services, user authentication and authorization checks are performed before LLM interactions, with user context and permissions managed through Amazon Bedrock SessionParameters, keeping security information entirely outside of LLM processing.

Our validation layer uses Amazon Bedrock Guardrails to protect against prompt injection, inappropriate content, and forbidden topics such as racism, sexism, or illegal content.

The system’s architecture implements strict role-based access controls through a detailed permissions matrix, so users can only access data within their authorized scope. For authentication, we use industry-standard JWT and SAML protocols, and our authorization service maintains granular control over data access permissions.

This multi-layered approach prevents potential security bypasses through prompt manipulation or other LLM-specific attacks. The system automatically enforces data boundaries at both database and API levels, effectively preventing cross-contamination between different customer accounts. For instance, department managers can only access their team’s data, with these restrictions enforced through database compartmentalization.
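The principle of keeping scope decisions outside the model can be illustrated with a short sketch. It assumes a hypothetical `employees` table and allow-list, and it uses a row filter in SQLite as a simplified stand-in for the database compartmentalization described above. The key idea is that tenant and team predicates come from the authenticated session, and model-derived filters are checked against an allow-list.

```python
import sqlite3

# Columns that model-derived filters may reference (hypothetical allow-list).
FILTERABLE_COLUMNS = {"contract_type", "position"}


def build_scoped_query(llm_filters: dict, tenant_id: str, team_id: str):
    """Build a parameterized query whose scope comes from the session.

    `llm_filters` is the only part derived from model output. The tenant and
    team predicates are always added by trusted code, so a manipulated prompt
    cannot widen the scope.
    """
    illegal = set(llm_filters) - FILTERABLE_COLUMNS
    if illegal:
        raise PermissionError(f"filter not allowed: {sorted(illegal)}")
    clauses = ["tenant_id = ?", "team_id = ?"]
    params = [tenant_id, team_id]
    for column, value in sorted(llm_filters.items()):
        clauses.append(f"{column} = ?")  # column name already allow-listed
        params.append(value)
    sql = (
        "SELECT name FROM employees WHERE "
        + " AND ".join(clauses)
        + " ORDER BY name"
    )
    return sql, params


def demo_connection() -> sqlite3.Connection:
    """Create an in-memory table with rows from two tenants."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE employees "
        "(name TEXT, tenant_id TEXT, team_id TEXT, contract_type TEXT, position TEXT)"
    )
    conn.executemany(
        "INSERT INTO employees VALUES (?, ?, ?, ?, ?)",
        [
            ("Ana", "acme", "kitchen", "part_time", "cook"),
            ("Bo", "acme", "kitchen", "full_time", "cook"),
            ("Cy", "acme", "floor", "part_time", "waiter"),
            ("Di", "other", "kitchen", "part_time", "cook"),
        ],
    )
    return conn


conn = demo_connection()
sql, params = build_scoped_query({"contract_type": "part_time"}, "acme", "kitchen")
names = [row[0] for row in conn.execute(sql, params)]
```

A request that tries to filter on `tenant_id` or `team_id` is rejected before any SQL is built, and values are always bound as parameters rather than interpolated into the query string.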

Additionally, our comprehensive audit system maintains immutable logs of all actions, including timestamps, user identifiers, and accessed resources, stored separately to protect their integrity. This security framework operates seamlessly in the background, maintaining robust protection of sensitive information without disrupting the user experience or legitimate workflows.
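The post does not say how log immutability is achieved. As one possible illustration, the sketch below uses a hash chain, a common technique that makes tampering detectable rather than impossible. The `AuditLog` class and its field names are hypothetical, and a production system would more likely rely on write-once storage managed separately from the application.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional


class AuditLog:
    """Append-only log where each entry commits to the previous one."""

    GENESIS = "0" * 64  # placeholder hash for the first entry

    def __init__(self) -> None:
        self._entries: list = []

    @staticmethod
    def _digest(body: dict) -> str:
        """Hash an entry body in a stable key order."""
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def append(self, user_id: str, resource: str,
               timestamp: Optional[str] = None) -> dict:
        """Record who accessed what and when, linked to the prior entry."""
        prev_hash = self._entries[-1]["hash"] if self._entries else self.GENESIS
        body = {
            "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),
            "user_id": user_id,
            "resource": resource,
            "prev_hash": prev_hash,
        }
        entry = dict(body, hash=self._digest(body))
        self._entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry fails."""
        prev_hash = self.GENESIS
        for entry in self._entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash:
                return False
            if self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("manager-42", "schedules/2024-W20", timestamp="2024-05-13T08:00:00+00:00")
log.append("manager-42", "attendance/2024-05", timestamp="2024-05-13T08:01:00+00:00")
```

Each entry carries the three fields named above (timestamp, user identifier, accessed resource) plus the hash of its predecessor, so changing any earlier record invalidates every later one.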

Benefits

Creating data visualizations has never been more accessible. Even without specialized expertise, you can now produce professional-quality charts that communicate your insights effectively. The streamlined process makes sure your visualizations remain consistently clear and intuitive, so you can concentrate on exploring your data questions instead of spending time on presentation details.

The solution works through simple conversational requests that require no technical knowledge or specialized software. You simply describe what you want to visualize using everyday language, and the system interprets your request and creates the appropriate visualization. There’s no need to learn complex software interfaces, remember specific commands, or understand data formatting requirements. The underlying technology handles the data processing, chart selection, and professional formatting automatically, transforming your spoken or written requests into polished visual presentations within moments.

Your specific information needs drive how the data is displayed, making the insights more relevant and actionable. When it’s time to share your findings, these visualizations integrate seamlessly into your reports and presentations with polished formatting that enhances your overall message. This democratization of data visualization empowers everyone to tell compelling data stories.

Conclusion

In this post, we explored Skello’s implementation of an AI-powered assistant using Amazon Bedrock and Lambda. We saw how end-users can query their own data in a multi-tenant environment while maintaining logical boundaries and complying with GDPR regulations. The combination of serverless architecture and advanced language models proved effective in enhancing data accessibility and user experience.

We invite you to explore the AWS Machine Learning Blog for more insights on AI solutions and their potential business applications. If you’re interested in learning more about Skello’s journey in modernizing HR software, check out our blog post series on the topic.

If you have any questions or suggestions about implementing similar solutions in your own multi-tenant environment, please feel free to share them in the comments section.

About the authors

Nicolas de Place is a Data & AI Solutions Architect specializing in machine learning strategy for high-growth startups. He empowers emerging companies to harness the full potential of artificial intelligence and advanced analytics, designing scalable ML architectures and data-driven solutions.

Cédric Peruzzi is a Software Architect at Skello, where he focuses on designing and implementing generative AI solutions. Before his current role, he worked as a software engineer and architect, bringing his experience to help build better software solutions.
