As digital commerce expands, fraud detection has become critical in protecting businesses and consumers engaging in online transactions. Implementing machine learning (ML) algorithms enables real-time analysis of high-volume transactional data to rapidly identify fraudulent activity. This advanced capability helps mitigate financial risks and safeguard customer privacy within expanding digital markets.

Deloitte is a strategic global systems integrator with over 19,000 certified AWS practitioners across the globe. It continues to raise the bar through participation in the AWS Competency Program with 29 competencies, including Machine Learning.

This post demonstrates the potential for quantum computing algorithms paired with ML models to revolutionize fraud detection within digital payment platforms. We share how Deloitte built a hybrid quantum neural network solution with Amazon Braket to demonstrate the possible gains coming from this emerging technology.

The promise of quantum computing

Quantum computers harbor the potential to radically overhaul financial systems, enabling much faster and more precise solutions. Compared to classical computers, quantum computers are expected in the long run to have advantages in the areas of simulation, optimization, and ML. Whether quantum computers can provide a meaningful speedup to ML is an active topic of research.

Quantum computing can perform efficient near real-time simulations in critical areas such as pricing and risk management. Optimization models are key activities in financial institutions, aimed at determining the best investment strategy for a portfolio of assets, allocating capital, or achieving productivity improvements. Some of these optimization problems are nearly impossible for traditional computers to tackle, so approximations are used to solve the problems in a reasonable amount of time. Quantum computers could perform faster and more accurate optimizations without using any approximations.

Despite the long-term horizon, the potentially disruptive nature of this technology means that financial institutions are looking to get an early foothold in it by building in-house quantum research teams, expanding their existing ML COEs to include quantum computing, or engaging with partners such as Deloitte.

At this early stage, customers seek access to a choice of different quantum hardware and simulation capabilities in order to run experiments and build expertise. Braket is a fully managed quantum computing service that lets you explore quantum computing. It provides access to quantum hardware from IonQ, OQC, QuEra, Rigetti, and IQM, a variety of local and on-demand simulators including GPU-enabled simulations, and infrastructure for running hybrid quantum-classical algorithms such as quantum ML. Braket is fully integrated with AWS services such as Amazon Simple Storage Service (Amazon S3) for data storage and AWS Identity and Access Management (IAM) for identity management, and customers only pay for what they use.

In this post, we demonstrate how to implement a quantum neural network-based fraud detection solution using Braket and AWS native services. Although quantum computers can't be used in production today, our solution provides a workflow that can seamlessly adapt and function as a plug-and-play system in the future, when commercially viable quantum devices become available.

Solution overview

The goal of this post is to explore the potential of quantum ML and present a conceptual workflow that could serve as a plug-and-play system when the technology matures. Quantum ML is still in its early stages, and this post aims to showcase the art of the possible without delving into specific security considerations. As quantum ML technology advances and becomes ready for production deployments, robust security measures will be essential. However, for now, the focus is on outlining a high-level conceptual architecture that can seamlessly adapt and function in the future when the technology is ready.

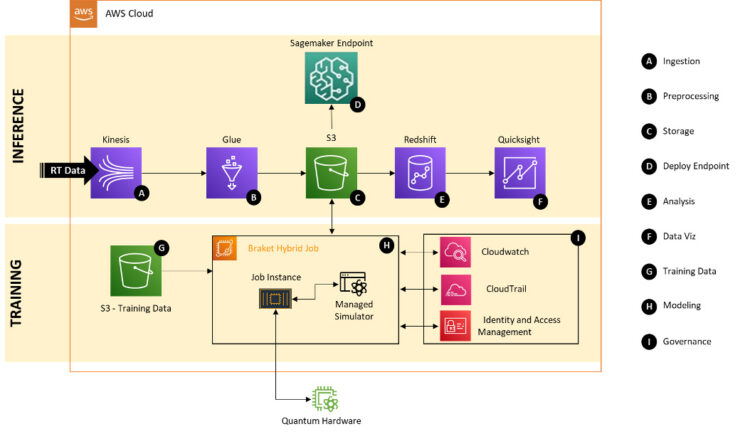

The next diagram exhibits the answer structure for the implementation of a neural network-based fraud detection answer utilizing AWS companies. The answer is applied utilizing a hybrid quantum neural community. The neural community is constructed utilizing the Keras library; the quantum element is applied utilizing PennyLane.

The workflow contains the next key elements for inference (A–F) and coaching (G–I):

- Ingestion – Real-time financial transactions are ingested through Amazon Kinesis Data Streams

- Preprocessing – AWS Glue streaming extract, transform, and load (ETL) jobs consume the stream to do preprocessing and light transforms

- Storage – Amazon S3 is used to store output artifacts

- Endpoint deployment – We use an Amazon SageMaker endpoint to deploy the models

- Analysis – Transactions along with the model inferences are stored in Amazon Redshift

- Data visualization – Amazon QuickSight is used to visualize the results of fraud detection

- Training data – Amazon S3 is used to store the training data

- Modeling – A Braket environment produces a model for inference

- Governance – Amazon CloudWatch, IAM, and AWS CloudTrail are used for observability, governance, and auditability, respectively

Dataset

For training the model, we used open source data available on Kaggle. The dataset contains transactions made by credit cards in September 2013 by European cardholders. It records transactions that occurred over a span of two days, during which there were 492 instances of fraud out of a total of 284,807 transactions. The dataset exhibits a significant class imbalance, with fraudulent transactions accounting for just 0.172% of the entire dataset. Because the data is highly imbalanced, various measures were taken during data preparation and model development.

The dataset exclusively comprises numerical input variables, which have undergone a Principal Component Analysis (PCA) transformation for confidentiality reasons. Alongside the PCA-transformed features, it includes three key fields:

- Time – Time elapsed between each transaction and the first transaction

- Amount – Transaction amount

- Class – Target variable, 1 for fraud or 0 for non-fraud

Data preparation

We split the data into training, validation, and test sets, and we define the target and the feature sets, where Class is the target variable.
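The split can be sketched as follows. This is a minimal illustration on synthetic data, assuming scikit-learn's train_test_split; the 60/20/20 proportions, the stratification, and the random seeds are assumptions made for the example, not values stated in this post.

```python
import numpy as np
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(seed=0)

# Synthetic stand-in for the transaction table: 30 feature columns, rare positive class.
n_rows, n_features = 5000, 30
X = rng.normal(size=(n_rows, n_features))
y = (rng.random(n_rows) < 0.02).astype(int)  # roughly 2% "fraud" rows

# Hypothetical 60/20/20 split; stratify keeps the fraud ratio similar in every set.
X_train, X_tmp, y_train, y_tmp = train_test_split(
    X, y, test_size=0.4, stratify=y, random_state=42
)
X_val, X_test, y_val, y_test = train_test_split(
    X_tmp, y_tmp, test_size=0.5, stratify=y_tmp, random_state=42
)

print(X_train.shape, X_val.shape, X_test.shape)
```

Stratifying matters with data this imbalanced: without it, a small validation or test set could end up with almost no fraud cases.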

The Class field assumes values 0 and 1. To make the neural network deal with data imbalance, we perform a label encoding on the y sets.

The encoding applies the following mapping to all the values: 0 to [1,0], and 1 to [0,1].
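One way to obtain this mapping is sketched below, assuming scikit-learn's LabelEncoder followed by a NumPy identity-matrix lookup. The lookup step is an assumption for illustration; the post does not show how the two-component vectors were produced.

```python
import numpy as np
from sklearn.preprocessing import LabelEncoder

y = np.array([0, 1, 0, 0, 1])  # toy Class column

encoder = LabelEncoder()
y_int = encoder.fit_transform(y)  # integer codes 0..n_classes-1

# Row i of the identity matrix is the one-hot vector for class i.
y_onehot = np.eye(len(encoder.classes_))[y_int]

print(y_onehot)
```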

Finally, we apply scaling that standardizes the features by removing the mean and scaling to unit variance.

The LabelEncoder and StandardScaler classes are available in the scikit-learn Python library.
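A short sketch of the scaling step with StandardScaler, using synthetic arrays in place of the real features. Fitting on the training set only and reusing those statistics for the other sets is the usual practice and is assumed here.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(seed=1)
X_train = rng.normal(loc=50.0, scale=10.0, size=(1000, 3))
X_test = rng.normal(loc=50.0, scale=10.0, size=(200, 3))

scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)  # learn mean and variance from training data
X_test_scaled = scaler.transform(X_test)        # reuse the training statistics

print(X_train_scaled.mean(axis=0), X_train_scaled.std(axis=0))
```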

After all the transformations are applied, the dataset is ready to be the input of the neural network.

Neural network architecture

Based on several empirical tests, we composed the neural network architecture with the following layers:

- A first dense layer with 32 nodes

- A second dense layer with 9 nodes

- A quantum layer as neural network output

- Dropout layers with rate equal to 0.3

We apply L2 regularization on the first layer and both L1 and L2 regularization on the second, to avoid overfitting. We initialize all the kernels using the he_normal function. The dropout layers are meant to reduce overfitting as well.
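The classical part of this architecture might look like the following Keras sketch. The layer sizes, dropout rate, initializer, and regularizer types come from the description above. The 30-feature input width, the ReLU activations, the regularization strengths, and the placement of the dropout layers are assumptions for illustration only.

```python
from tensorflow import keras
from tensorflow.keras import layers, regularizers

n_features = 30  # assumption: Time, Amount, and 28 PCA components

classical_part = keras.Sequential([
    keras.Input(shape=(n_features,)),
    # First dense layer: 32 nodes, he_normal kernels, L2 penalty (strength is hypothetical).
    layers.Dense(32, activation="relu", kernel_initializer="he_normal",
                 kernel_regularizer=regularizers.l2(0.01)),
    layers.Dropout(0.3),
    # Second dense layer: 9 nodes, combined L1 and L2 penalty (strengths are hypothetical).
    layers.Dense(9, activation="relu", kernel_initializer="he_normal",
                 kernel_regularizer=regularizers.l1_l2(l1=0.01, l2=0.01)),
    layers.Dropout(0.3),
])

classical_part.summary()
```

With 30 inputs, the two dense layers hold 992 + 297 = 1,289 trainable parameters; the quantum output layer would be attached after the last dropout.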

Quantum circuit

The first step to obtain the layer is to build the quantum circuit (or the quantum node). To accomplish this task, we used the Python library PennyLane.

PennyLane is an open source library that seamlessly integrates quantum computing with ML. It lets you create and train quantum-classical hybrid models, where quantum circuits act as layers within classical neural networks. By harnessing the power of quantum mechanics and merging it with classical ML frameworks like PyTorch, TensorFlow, and Keras, PennyLane empowers you to explore the exciting frontier of quantum ML. You can unlock new realms of possibility and push the boundaries of what's achievable with this cutting-edge technology.

The design of the circuit is the most important part of the overall solution. The predictive power of the model depends entirely on how the circuit is built.

Qubits, the fundamental units of information in quantum computing, are entities that behave quite differently from classical bits. Unlike classical bits that can only represent 0 or 1, qubits can exist in a superposition of both states simultaneously, enabling quantum parallelism and faster calculations for certain problems.
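To make superposition concrete, here is a small NumPy sketch (not part of the solution code) that applies a Y rotation of π/2 to a qubit prepared in |0⟩ and computes the measurement probabilities and the Pauli-Z expectation value. The helper name ry is hypothetical.

```python
import numpy as np

def ry(theta):
    """Matrix of a single-qubit rotation about the Y axis."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]])

ket0 = np.array([1.0, 0.0])                    # the |0> state
pauli_z = np.array([[1.0, 0.0], [0.0, -1.0]])

state = ry(np.pi / 2) @ ket0                   # equal superposition of |0> and |1>
probabilities = np.abs(state) ** 2             # chance of measuring 0 or 1
expval_z = state @ pauli_z @ state             # amplitudes are real, so no conjugate needed

print(probabilities)
print(expval_z)
```

For a general angle θ, the expectation value of Pauli-Z after RY(θ) on |0⟩ is cos θ, so it varies continuously between 1 and -1. That continuous value is what a measurement-based output layer feeds back to the classical side.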

We decided to use only three qubits, a small number but sufficient for our case.

We instantiate the qubits on a PennyLane device.

'default.qubit' is the PennyLane qubit simulator. To access qubits on a real quantum computer, you can replace the simulator with a Braket device identified by its ARN.

device_ARN can be the ARN of any of the devices supported by Braket (for a list of supported devices, refer to Amazon Braket supported devices).

We define the quantum node as a function of inputs and weights.

The inputs are the values yielded as output from the previous layer of the neural network, and the weights are the actual weights of the quantum circuit.

RY and Rot are rotation gates applied to qubits; CNOT is a controlled bit-flip gate that lets us entangle the qubits.

qml.expval(qml.PauliZ(0)) and qml.expval(qml.PauliZ(2)) are the measurements applied to qubit 0 and qubit 2, respectively, and these values will be the neural network output.

Diagrammatically, the circuit may be displayed as:

The transformations applied to qubit 0 are fewer than the transformations applied to qubit 2. We made this choice because we want to separate the states of the qubits in order to obtain different values when the measurements are performed. Applying different transformations to qubits allows them to enter distinct states, resulting in varied outcomes when measurements are performed. This phenomenon stems from the principles of superposition and entanglement inherent in quantum mechanics.

After we define the quantum circuit, we define the quantum hybrid neural network.

KerasLayer is the PennyLane class that turns the quantum circuit into a Keras layer.

Model training

After we've preprocessed the data and defined the model, it's time to train the network.

A preliminary step is needed in order to deal with the unbalanced dataset. We define a weight for each class according to the inverse root rule.

The weights are given by the inverse of the square root of the number of occurrences for each of the two possible target values.
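As a worked example of the inverse root rule, the sketch below computes the two weights from the class counts quoted for the full dataset. In practice the counts would come from the training split, so the exact numbers here are only illustrative.

```python
import math

# Class counts for the full dataset; the training split would give slightly different counts.
counts = {0: 284_807 - 492, 1: 492}

# Inverse root rule: weight_c = 1 / sqrt(n_c)
class_weight = {label: 1.0 / math.sqrt(n) for label, n in counts.items()}
ratio = class_weight[1] / class_weight[0]

print(class_weight)
print(ratio)
```

With these counts, a fraud example weighs roughly 24 times as much as a non-fraud example. A plain inverse-frequency rule would give a ratio of several hundred, so the square root softens the correction.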

Next, we compile the model.

custom_metric is a modified version of the precision metric: a custom subroutine that postprocesses the quantum data into a form compatible with the optimizer.

For evaluating model performance on imbalanced data, precision is a more reliable metric than accuracy, so we optimize for precision. In fraud detection, incorrectly predicting a fraudulent transaction as valid (false negative) can have serious financial consequences and risks, but that error is measured by recall. Precision evaluates the proportion of fraud alerts that are true positives, so optimizing it minimizes costly false alarms.
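The difference between precision and recall can be seen in a few lines of NumPy. This is a plain implementation on toy labels, not the custom_metric mentioned above, whose code is not shown in this post; the function name precision_recall is hypothetical.

```python
import numpy as np

def precision_recall(y_true, y_pred):
    """Return (precision, recall) for the positive class (1 = fraud)."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = np.sum((y_pred == 1) & (y_true == 1))  # fraud correctly flagged
    fp = np.sum((y_pred == 1) & (y_true == 0))  # valid transactions flagged as fraud
    fn = np.sum((y_pred == 0) & (y_true == 1))  # fraud that slipped through
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return float(precision), float(recall)

y_true = [0, 0, 0, 0, 1, 1, 1, 0, 0, 1]
y_pred = [0, 0, 1, 0, 1, 1, 0, 0, 0, 1]

precision, recall = precision_recall(y_true, y_pred)
print(precision, recall)
```

False positives lower precision and false negatives lower recall, which is why the two metrics answer different questions about a fraud model.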

Finally, we fit the model.

At each epoch, the weights of both the classic and quantum layers are updated in order to reach higher accuracy. At the end of the training, the network showed a loss of 0.0353 on the training set and 0.0119 on the validation set. When the fit is complete, the trained model is saved in .h5 format.

Model results and analysis

Evaluating the model is vital to gauge its capabilities and limitations, providing insights into the predictive quality and value derived from the quantum techniques.

To test the model, we make predictions on the test set.

Because the neural network is a regression model, it yields for each record of x_test a 2-D array, where each component can assume values between 0 and 1. Because we're essentially dealing with a binary classification problem, the outputs should be as follows:

- [1,0] – No fraud

- [0,1] – Fraud

To convert the continuous values into a binary classification, a threshold is necessary. Predictions that are equal to or above the threshold are assigned 1, and those below the threshold are assigned 0.

To align with our goal of optimizing precision, we chose the threshold value that results in the highest precision.
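The thresholding and selection step can be sketched as follows. The scores and labels are made up for illustration, and applying the threshold to the second (fraud) component of each output is an assumption about how the two-component predictions are reduced to a single decision.

```python
import numpy as np

# Hypothetical model outputs: column 0 = "no fraud" score, column 1 = "fraud" score.
scores = np.array([
    [0.95, 0.05], [0.40, 0.60], [0.28, 0.72],
    [0.10, 0.90], [0.32, 0.68], [0.20, 0.80],
])
y_true = np.array([0, 0, 1, 1, 0, 1])

def precision_at(threshold):
    """Precision of the fraud class when flagging scores at or above the threshold."""
    y_pred = (scores[:, 1] >= threshold).astype(int)
    flagged = y_pred.sum()
    return float((y_pred & y_true).sum() / flagged) if flagged else 0.0

candidates = (0.65, 0.70, 0.75)
for t in candidates:
    print(t, precision_at(t))

# Pick the candidate with the highest precision (the first one wins on ties).
best_threshold = max(candidates, key=precision_at)
print(best_threshold)
```

Raising the threshold flags fewer transactions, which tends to raise precision at the expense of recall, so the highest-precision threshold is not necessarily the best choice if missed fraud is also a concern.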

The following table summarizes the mapping between various threshold values and the precision.

| Class | Threshold = 0.65 | Threshold = 0.70 | Threshold = 0.75 |
| --- | --- | --- | --- |
| No Fraud | 1.00 | 1.00 | 1.00 |
| Fraud | 0.87 | 0.89 | 0.92 |

The model demonstrates almost flawless performance on the predominant non-fraud class, with precision and recall scores close to a perfect 1. Despite far less data, the model achieves a precision of 0.87 for detecting the minority fraud class at a 0.65 threshold, underscoring its performance even on sparse data. To efficiently identify fraud while minimizing incorrect fraud reports, we decided to prioritize precision over recall.

We also wanted to compare this model with a classic neural network only model to see if we're exploiting the gains coming from the quantum application. We built and trained an identical model in which the quantum layer is replaced by a classical layer.

Within the final epoch, the loss was 0.0119 and the validation loss was 0.0051.

The following table summarizes the mapping between various threshold values and the precision for the classic neural network model.

| Class | Threshold = 0.65 | Threshold = 0.70 | Threshold = 0.75 |
| --- | --- | --- | --- |
| No Fraud | 1.00 | 1.00 | 1.00 |
| Fraud | 0.83 | 0.84 | 0.86 |

Like the quantum hybrid model, the classic model's performance is almost perfect for the majority class and very good for the minority class.

The hybrid neural network has 1,296 parameters, whereas the classic one has 1,329. When comparing precision values, we can observe how the quantum solution provides better results. The hybrid model, inheriting the properties of high-dimensional space exploration and non-linearity from the quantum layer, is able to generalize the problem better using fewer parameters, resulting in better performance.

Challenges of a quantum solution

Although the adoption of quantum technology shows promise in providing organizations numerous benefits, practical implementation on large-scale, fault-tolerant quantum computers is a complex task and is an active area of research. Therefore, we should be mindful of the challenges that it poses:

- Sensitivity to noise – Quantum computers are extremely sensitive to external factors (such as atmospheric temperature) and require more attention and maintenance than traditional computers, and their behavior can drift over time. One way to minimize the effects of drift is by taking advantage of parametric compilation, which is the ability to compile a parametric circuit such as the one used here only one time and feed it fresh parameters at runtime, avoiding repeated compilation steps. Braket automatically does this for you.

- Dimensional complexity – The inherent nature of qubits, the fundamental units of quantum computing, introduces a higher level of intricacy compared to the traditional binary bits employed in conventional computers. By harnessing the principles of superposition and entanglement, qubits possess an elevated degree of complexity in their design. This intricate architecture makes the evaluation of computational capacity a formidable challenge, because the multidimensional aspects of qubits demand a more nuanced approach to assessing their computational power.

- Computational errors – Increased calculation errors are intrinsic to quantum computing's probabilistic nature during the sampling phase. These errors could impact the accuracy and reliability of the results obtained through quantum sampling. Techniques such as error mitigation and error suppression are actively being developed in order to minimize the effects of errors resulting from noisy qubits. To learn more about error mitigation, see Enabling state-of-the-art quantum algorithms with Qedma's error mitigation and IonQ, using Braket Direct.

Conclusion

The results discussed in this post suggest that quantum computing holds substantial promise for fraud detection in the financial services industry. The hybrid quantum neural network demonstrated superior performance in accurately identifying fraudulent transactions, highlighting the potential gains offered by quantum technology. As quantum computing continues to advance, its role in revolutionizing fraud detection and other critical financial processes will become increasingly evident. You can extend the results of the simulation by using real qubits and testing various outcomes on real hardware available on Braket, such as those from IQM, IonQ, and Rigetti, all on demand, with pay-as-you-go pricing and no upfront commitments.

To prepare for the future of quantum computing, organizations must stay informed on the latest developments in quantum technology. Adopting quantum-ready cloud solutions now is a strategic priority, allowing a smooth transition to quantum when hardware reaches commercial viability. This forward-thinking approach will provide both a technological edge and rapid adaptation to quantum computing's transformative potential across industries. With an integrated cloud strategy, businesses can proactively get quantum-ready, primed to capitalize on quantum capabilities at the right moment. To accelerate your learning journey and earn a digital badge in quantum computing fundamentals, see Introducing the Amazon Braket Learning Plan and Digital Badge.

Connect with Deloitte to pilot this solution for your enterprise on AWS.

About the authors

Federica Marini is a Manager in the Deloitte Italy AI & Data practice with strong experience as a business advisor and technical expert in the field of AI, Gen AI, ML, and Data. She addresses research and customer business needs with tailored data-driven solutions providing meaningful results. She is passionate about innovation and believes digital disruption will require a human-centered approach to achieve full potential.

Matteo Capozi is a Data and AI expert in Deloitte Italy, specializing in the design and implementation of advanced AI and GenAI models and quantum computing solutions. With a strong background in cutting-edge technologies, Matteo excels in helping organizations harness the power of AI to drive innovation and solve complex problems. His expertise spans across industries, where he collaborates closely with executive stakeholders to achieve strategic goals and performance improvements.

Kasi Muthu is a senior partner solutions architect focusing on generative AI and data at AWS, based out of Dallas, TX. He is passionate about helping partners and customers accelerate their cloud journey. He is a trusted advisor in this field and has plenty of experience architecting and building scalable, resilient, and performant workloads in the cloud. Outside of work, he enjoys spending time with his family.

Kuldeep Singh is a Principal Global AI/ML leader at AWS with over 20 years in tech. He skillfully combines his sales and entrepreneurship expertise with a deep understanding of AI, ML, and cybersecurity. He excels in forging strategic global partnerships, driving transformative solutions and strategies across various industries with a focus on generative AI and GSIs.
