This post is cowritten with Ethan Handel and Zhiyuan He from Indeed.com.

Indeed is the world’s #1 job site¹ and a leading global job matching and hiring marketplace. Our mission is to help people get jobs. At Indeed, we serve over 350 million global Unique Visitors monthly² across more than 60 countries, powering millions of connections to new job opportunities every day. Since our founding nearly 20 years ago, machine learning (ML) and artificial intelligence (AI) have been at the heart of building data-driven products that better match job seekers with the right roles and get people hired.

On the Core AI team at Indeed, we embody this legacy of AI innovation by investing heavily in HR domain research and development. We provide teams across the company with production-ready, fine-tuned large language models (LLMs) based on state-of-the-art open source architectures. In this post, we describe how using the capabilities of Amazon SageMaker has accelerated Indeed’s AI research, development velocity, flexibility, and overall value in our pursuit of applying advanced LLMs to Indeed’s unique and vast data.

Infrastructure challenges

Indeed’s business is fundamentally text-based. Indeed generates 320 terabytes of data daily³, which is uniquely valuable because of its breadth and the ability to connect elements like job descriptions and resumes and match them to the actions and behaviors that drive the key company metric: a successful hire. LLMs represent a significant opportunity to improve how job seekers and employers interact in Indeed’s marketplace, with use cases such as match explanations, job description generation, match labeling, resume or job description skill extraction, and career guides, among others.

Last year, the Core AI team evaluated whether Indeed’s HR domain-specific data could be used to fine-tune open source LLMs to enhance performance on particular tasks or domains. We chose the fine-tuning approach to best incorporate Indeed’s unique knowledge and vocabulary around mapping the world of jobs. Other strategies, such as prompt tuning, Retrieval Augmented Generation (RAG), and pre-training models, were initially less appropriate because of context window limitations and cost-benefit trade-offs.

The Core AI team’s objective was to explore solutions that addressed the specific needs of Indeed’s environment by providing high performance for fine-tuning, minimal effort for iterative development, and a pathway for future cost-effective production inference. Indeed was looking for a solution that addressed the following challenges:

- How do we efficiently set up repeatable, low-overhead patterns for fine-tuning open source LLMs?

- How can we provide production LLM inference at Indeed’s scale with favorable latency and costs?

- How do we efficiently onboard early products with different request and inference patterns?

The following sections discuss how we addressed each challenge.

Solution overview

Ultimately, Indeed’s Core AI team converged on the decision to use Amazon SageMaker to solve the aforementioned challenges and meet the following requirements:

- Accelerate fine-tuning using Amazon SageMaker

- Serve production traffic quickly using Amazon SageMaker inference

- Enable Indeed to serve a variety of production use cases with flexibility using Amazon SageMaker generative AI inference capabilities (inference components)

Accelerate fine-tuning using Amazon SageMaker

One of the primary challenges that we faced was achieving efficient fine-tuning. Initially, the Core AI team’s setup involved manually provisioning raw Amazon Elastic Compute Cloud (Amazon EC2) instances and configuring training environments. Scientists had to manage personal development accounts and GPU schedules, leading to development overhead and resource under-utilization. To address these challenges, we used Amazon SageMaker to initiate and manage training jobs efficiently. Transitioning to Amazon SageMaker provided several advantages:

- Resource optimization – Amazon SageMaker offered better instance availability and billed only for the actual training time, reducing costs associated with idle resources

- Ease of setup – We no longer needed to worry about the setup required for running training jobs, simplifying the process significantly

- Scalability – The Amazon SageMaker infrastructure allowed us to scale our training jobs efficiently, accommodating the growing demands of our LLM fine-tuning efforts
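To make the managed training job pattern concrete, the following is a minimal sketch of how a fine-tuning job request could be assembled for the SageMaker `CreateTrainingJob` API. It is not Indeed's actual configuration: the function name, default instance type, and every argument value are hypothetical, and the sketch only builds the request dictionary so that it runs without AWS credentials.

```python
from typing import Dict, Optional


def build_training_job_request(
    job_name: str,
    image_uri: str,
    role_arn: str,
    train_s3_uri: str,
    output_s3_uri: str,
    instance_type: str = "ml.p4d.24xlarge",  # assumption: any GPU training instance works here
    instance_count: int = 1,
    max_runtime_seconds: int = 24 * 60 * 60,
    hyperparameters: Optional[Dict[str, object]] = None,
) -> dict:
    """Build keyword arguments for the SageMaker CreateTrainingJob API (hypothetical helper)."""
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {
            "TrainingImage": image_uri,
            "TrainingInputMode": "File",
        },
        "RoleArn": role_arn,
        "InputDataConfig": [
            {
                "ChannelName": "train",
                "DataSource": {
                    "S3DataSource": {
                        "S3DataType": "S3Prefix",
                        "S3Uri": train_s3_uri,
                        "S3DataDistributionType": "FullyReplicated",
                    }
                },
            }
        ],
        "OutputDataConfig": {"S3OutputPath": output_s3_uri},
        "ResourceConfig": {
            "InstanceType": instance_type,
            "InstanceCount": instance_count,
            "VolumeSizeInGB": 500,
        },
        # The job is stopped, and billing ends, once this limit is reached.
        "StoppingCondition": {"MaxRuntimeInSeconds": max_runtime_seconds},
        # The API expects hyperparameter values to be strings.
        "HyperParameters": {k: str(v) for k, v in (hyperparameters or {}).items()},
    }
```

With boto3 installed and credentials configured, the dictionary could be passed as `boto3.client("sagemaker").create_training_job(**request)`. Instances are provisioned for the job and released when it finishes, which is what removes the manual GPU scheduling described above.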

Smoothly serve production traffic using Amazon SageMaker inference

To better serve Indeed users with LLMs, we standardized the request and response formats across different models by using open source software as an abstraction layer. This layer converted the interactions into a standardized OpenAI format, simplifying integration with various services and providing consistency in model interactions.

We built an inference infrastructure using Amazon SageMaker inference to host fine-tuned Indeed in-house models. The Amazon SageMaker infrastructure provided a robust service for deploying and managing models at scale. We deployed different specialized models on Amazon SageMaker inference endpoints. Amazon SageMaker supports various inference frameworks; we chose the Text Generation Inference (TGI) framework from Hugging Face for flexibility in access to the latest open source models.
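As an illustration of what such an abstraction layer does, the following sketch translates an OpenAI-style chat request into a TGI-style generation payload and wraps the result back into an OpenAI-style response. It is not the open source software Indeed used. The function names and the plain-text prompt template are hypothetical, and real chat templates are model-specific.

```python
from typing import Any, Dict, List, Union


def messages_to_prompt(messages: List[Dict[str, str]]) -> str:
    """Flatten chat messages into one prompt (hypothetical template)."""
    lines = [f"{m['role']}: {m['content']}" for m in messages]
    lines.append("assistant:")
    return "\n".join(lines)


def openai_chat_to_tgi(request: Dict[str, Any]) -> Dict[str, Any]:
    """Map an OpenAI-style chat request to a TGI-style payload."""
    parameters: Dict[str, Any] = {"max_new_tokens": request.get("max_tokens", 256)}
    if "temperature" in request:
        parameters["temperature"] = request["temperature"]
    return {"inputs": messages_to_prompt(request["messages"]), "parameters": parameters}


def tgi_to_openai_chat(
    tgi_response: Union[List[Dict[str, Any]], Dict[str, Any]], model: str
) -> Dict[str, Any]:
    """Wrap generated text in an OpenAI-style chat completion response."""
    # Assumption: the container returns either a dict or a one-element list of dicts,
    # each with a "generated_text" field.
    first = tgi_response[0] if isinstance(tgi_response, list) else tgi_response
    return {
        "object": "chat.completion",
        "model": model,
        "choices": [
            {
                "index": 0,
                "message": {"role": "assistant", "content": first["generated_text"]},
                "finish_reason": "stop",
            }
        ],
    }
```

Because callers only ever see the OpenAI-style shape, a model behind the endpoint can be swapped without changing client code, which is the consistency benefit described above.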

The setup on Amazon SageMaker inference has enabled rapid iteration, allowing Indeed to experiment with over 20 different models in a month. Furthermore, the robust infrastructure is capable of hosting dynamic production traffic, handling up to 3 million requests per day.

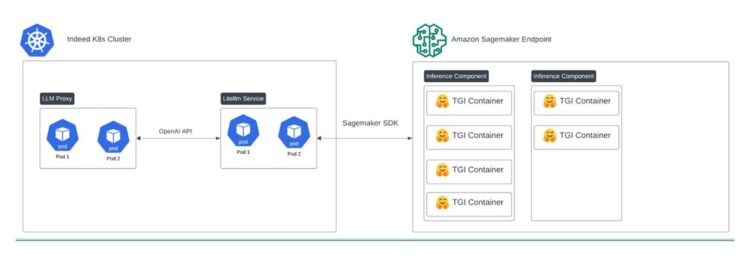

The next structure diagram showcases the interplay between Certainly’s utility and Amazon SageMaker inference endpoints.

Serve a variety of production use cases with flexibility using Amazon SageMaker generative AI inference components

Results from LLM fine-tuning revealed performance benefits. The final challenge was quickly implementing the capability to serve production traffic to support real, high-volume production use cases. Given the applicability of our models to use cases across the HR domain, our team hosted multiple specialty models for various purposes. Most models didn’t need the extensive resources of an 8-GPU p4d instance but still required the latency benefits of A100 GPUs.

Amazon SageMaker recently introduced a new feature called inference components that significantly enhances the efficiency of deploying multiple ML models to a single endpoint. This capability allows for the optimal placement and packing of models onto ML instances, resulting in an average cost savings of up to 50%. The inference components abstraction enables users to assign specific compute resources, such as CPUs, GPUs, or AWS Neuron accelerators, to each individual model. This granular control allows for more efficient utilization of computing power, because Amazon SageMaker can now dynamically scale each model up or down based on the configured scaling policies. Furthermore, the intelligent scaling offered by this capability automatically adds or removes instances as needed, making sure that capacity is met while minimizing idle compute resources. This flexibility extends to scaling a model down to zero copies, freeing up valuable resources when demand is low. This feature helps teams running generative AI and LLM inference optimize their model deployment costs, reduce latency, and manage multiple models with greater agility and precision. By decoupling the models from the underlying infrastructure, inference components offer a more efficient and cost-effective way to use the full potential of Amazon SageMaker inference.
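The per-model resource assignment described above can be sketched as a request for the SageMaker `CreateInferenceComponent` API. This is a minimal illustration under stated assumptions, not Indeed's deployment code: the helper names, component names, GPU counts, and memory sizes are hypothetical, and the packing check is simple arithmetic rather than the placement logic SageMaker itself applies.

```python
from typing import Dict, List


def build_inference_component_request(
    component_name: str,
    endpoint_name: str,
    model_name: str,
    gpus_per_copy: int,
    copies: int,
    min_memory_mb: int,
    variant_name: str = "AllTraffic",  # assumption: a single production variant
) -> Dict:
    """Build keyword arguments for CreateInferenceComponent (hypothetical helper)."""
    return {
        "InferenceComponentName": component_name,
        "EndpointName": endpoint_name,
        "VariantName": variant_name,
        "Specification": {
            "ModelName": model_name,
            # Compute reserved for EACH copy of this model.
            "ComputeResourceRequirements": {
                "NumberOfAcceleratorDevicesRequired": gpus_per_copy,
                "MinMemoryRequiredInMb": min_memory_mb,
            },
        },
        "RuntimeConfig": {"CopyCount": copies},
    }


def gpus_requested(requests: List[Dict]) -> int:
    """Total accelerators needed across all components and their copies."""
    return sum(
        r["Specification"]["ComputeResourceRequirements"]["NumberOfAcceleratorDevicesRequired"]
        * r["RuntimeConfig"]["CopyCount"]
        for r in requests
    )


def fits_on_instance(requests: List[Dict], gpus_available: int = 8) -> bool:
    """Rough check that the components fit on one 8-GPU instance."""
    return gpus_requested(requests) <= gpus_available
```

For example, a hypothetical skill-extraction model needing two GPUs per copy with two copies, plus a match-explanation model needing one GPU per copy with three copies, requests seven of the eight GPUs, so both can share one instance instead of each occupying its own.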

Amazon SageMaker inference components allowed Indeed’s Core AI team to deploy different models to the same instance with the desired number of copies of each model, optimizing resource utilization. By consolidating multiple models on a single instance, we created the most cost-effective LLM solution available to Indeed product teams. Furthermore, with inference components now supporting dynamic auto scaling, we could optimize the deployment strategy. This feature automatically adjusts the number of model copies based on demand, providing even greater efficiency and cost savings, even compared to third-party LLM providers.
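Copy-level auto scaling of this kind is configured through Application Auto Scaling. The following sketch builds the two requests involved, a scalable target and a target tracking policy, for one inference component. The helper name, policy name, component name, and numeric targets are hypothetical and are not taken from Indeed's setup.

```python
from typing import Dict, Tuple


def build_copy_scaling_requests(
    component_name: str,
    min_copies: int,
    max_copies: int,
    target_invocations_per_copy: float,
) -> Tuple[Dict, Dict]:
    """Build RegisterScalableTarget and PutScalingPolicy arguments (hypothetical helper)."""
    if min_copies < 0 or max_copies < min_copies:
        raise ValueError("expected 0 <= min_copies <= max_copies")

    resource_id = f"inference-component/{component_name}"
    dimension = "sagemaker:inference-component:DesiredCopyCount"

    scalable_target = {
        "ServiceNamespace": "sagemaker",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "MinCapacity": min_copies,  # 0 lets the model scale down to zero copies
        "MaxCapacity": max_copies,
    }
    scaling_policy = {
        "PolicyName": f"{component_name}-invocations-per-copy",  # hypothetical naming scheme
        "ServiceNamespace": "sagemaker",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerInferenceComponentInvocationsPerCopy"
            },
            "TargetValue": target_invocations_per_copy,
        },
    }
    return scalable_target, scaling_policy
```

With boto3, the two dictionaries could be passed to `register_scalable_target(**scalable_target)` and `put_scaling_policy(**scaling_policy)` on an `application-autoscaling` client. Scaling copies rather than whole endpoints is what lets several models share an instance while each follows its own demand.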

Since integrating inference components into the inference design, Indeed’s Core AI team has built and validated LLMs that have served over 6.5 million production requests.

The following figure illustrates the internals of Core AI’s LLM server.

The simplicity of our Amazon SageMaker setup significantly improves setup speed and flexibility. Today, we deploy Amazon SageMaker models using the Hugging Face TGI image in a custom Docker container, giving Indeed instant access to over 18 open source model families.

The following diagram illustrates Indeed’s Core AI flywheel.

Core AI’s business value from Amazon SageMaker

The seamless integration of Amazon SageMaker inference components, coupled with our team’s iterative enhancements, has accelerated our path to value. We can now swiftly deploy and fine-tune our models while benefiting from robust scalability and cost-efficiency, a significant advantage in our pursuit of delivering cutting-edge HR solutions to our customers.

Maximize performance

High-velocity research enables Indeed to iterate on fine-tuning approaches to maximize performance. We have fine-tuned over 75 models to advance research and production objectives.

We can quickly validate and improve our fine-tuning methodology with many open source LLMs. For instance, we moved from fine-tuning base foundation models (FMs) with third-party instruction data to fine-tuning instruction-tuned FMs based on empirical performance improvements.

For our unique purposes, our portfolio of LLMs performs at parity with or better than the most popular general third-party models across 15 HR domain-specific tasks. For specific HR domain tasks like extracting skill attributes from resumes, we see a 4–5 times performance improvement from fine-tuning over general domain third-party models and a notable increase in HR marketplace functionality.

The following figure illustrates Indeed’s inference continuous integration and delivery (CI/CD) workflow.

The following figure presents some task examples.

High flexibility

Flexibility allows Indeed to be on the frontier of LLM technology. We can deploy and test the latest state-of-the-art open science models on our scalable Amazon SageMaker inference infrastructure immediately upon availability. When Meta released the Llama 3 model family in April 2024, these FMs were deployed within the day, enabling Indeed to start research and provide early testing for teams across Indeed. Within weeks, we fine-tuned our best-performing model to date and released it. The following figure illustrates an example task.

Production scale

LLMs developed by Core AI have already served 6.5 million live production requests with a single p4d instance and a p99 latency of under 7 seconds.
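For readers less familiar with the metric, p99 latency is the value that 99% of requests complete within. The following sketch computes it with the nearest-rank method over a list of request latencies. The function names are hypothetical, the 7-second threshold is the figure quoted above, and any sample data passed in would be illustrative rather than Indeed's measurements.

```python
from typing import Sequence


def percentile_nearest_rank(values: Sequence[float], percent: int) -> float:
    """Return the nearest-rank percentile (percent is an integer from 1 to 100)."""
    if not values:
        raise ValueError("values must not be empty")
    if not 1 <= percent <= 100:
        raise ValueError("percent must be between 1 and 100")
    ordered = sorted(values)
    # Integer ceiling of percent * n / 100 avoids floating point rounding surprises.
    rank = (percent * len(ordered) + 99) // 100
    return ordered[rank - 1]


def meets_latency_target(latencies_s: Sequence[float], target_s: float = 7.0) -> bool:
    """Check whether the p99 latency is under the target, in seconds."""
    return percentile_nearest_rank(latencies_s, 99) < target_s
```

Tracking p99 rather than the mean matters for LLM serving because a small share of long generations can dominate the experience of the slowest requests.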

Cost-efficiency

Each LLM request through Amazon SageMaker is on average 67% cheaper than the prevailing third-party vendor model’s on-demand pricing in early 2024, creating the potential for significant cost savings.

Indeed’s contributions to Amazon SageMaker inference: Enhancing generative AI inference capabilities

Building on the success of its use case, Indeed has been instrumental in partnering with the Amazon SageMaker inference team to help AWS build and enhance key generative AI capabilities within Amazon SageMaker. Since the early days of the engagement, Indeed has provided the Amazon SageMaker inference team with valuable input to improve its offerings. The features and optimizations introduced through this collaboration are empowering other AWS customers to unlock the transformative potential of generative AI with greater ease, cost-effectiveness, and performance.

“Amazon SageMaker inference has enabled Indeed to rapidly deploy high-performing HR domain generative AI models, powering millions of users searching for new job opportunities every day. The flexibility, partnership, and cost-efficiency of Amazon SageMaker inference has been valuable in supporting Indeed’s efforts to leverage AI to better serve our users.”

– Ethan Handel, Senior Product Manager at Indeed.

Conclusion

Indeed’s implementation of Amazon SageMaker inference components has been instrumental in solidifying the company’s position as an AI leader in the HR industry. Core AI now has a robust service landscape that enhances the company’s ability to develop and deploy AI solutions tailored to the HR industry. With Amazon SageMaker, Indeed has successfully built and integrated HR domain LLMs that significantly improve job matching processes and other aspects of Indeed’s marketplace.

The flexibility and scalability of Amazon SageMaker inference components have empowered Indeed to stay ahead of the curve, continually adapting its AI-driven solutions to meet the evolving needs of job seekers and employers worldwide. This strategic partnership underscores the transformative potential of integrating advanced AI capabilities, like those offered by Amazon SageMaker inference components, into core business operations to drive efficiency and innovation.

¹Comscore, Unique Visitors, June 2024

²Indeed Internal Data, average monthly Unique Visitors October 2023 – March 2024

³Indeed data

About the Authors

Ethan Handel is a Senior Product Manager at Indeed, based in Austin, TX. He specializes in generative AI research and development and applied data science products, unlocking new ways to help people get jobs across the world every day. He loves solving big problems and innovating with how Indeed gets value from data. Ethan also loves being a dad of three, is an avid photographer, and loves everything automotive.

Zhiyuan He is a Staff Software Engineer at Indeed, based in Seattle, WA. He leads a dynamic team that focuses on all aspects of using LLMs at Indeed, including fine-tuning, evaluation, and inferencing, enhancing the job search experience for millions globally. Zhiyuan is passionate about tackling complex challenges and exploring creative approaches.

Alak Eswaradass is a Principal Solutions Architect at AWS based in Chicago, IL. She is passionate about helping customers design cloud architectures using AWS services to solve business challenges and is enthusiastic about solving a variety of ML use cases for AWS customers. When she’s not working, Alak enjoys spending time with her daughters and exploring the outdoors with her dogs.

Saurabh Trikande is a Senior Product Manager for Amazon SageMaker Inference. He’s passionate about working with customers and is motivated by the goal of democratizing AI. He focuses on core challenges related to deploying complex AI applications, multi-tenant models, cost optimizations, and making deployment of generative AI models more accessible. In his spare time, Saurabh enjoys hiking, learning about innovative technologies, following TechCrunch, and spending time with his family.

Brett Seib is a Senior Solutions Architect, based in Austin, Texas. He’s passionate about innovating and using technology to solve business challenges for customers. Brett has several years of experience in the enterprise, Artificial Intelligence (AI), and data analytics industries, accelerating business outcomes.
