Massive language fashions (LLMs) can be utilized to carry out pure language processing (NLP) duties starting from easy dialogues and knowledge retrieval duties, to extra complicated reasoning duties similar to summarization and decision-making. Immediate engineering and supervised fine-tuning, which use directions and examples demonstrating the specified process, could make LLMs higher at following human intents, particularly for a selected use case. Nevertheless, these strategies usually end in LLMs expressing unintended behaviors similar to making up information (hallucinations), producing biased or poisonous textual content, or just not following consumer directions. This results in responses which might be untruthful, poisonous, or just not useful to the consumer. In different phrases, these fashions will not be aligned with their customers.

Supervised studying may also help tune LLMs through the use of examples demonstrating some desired behaviors, which known as supervised fine-tuning (SFT). However even when the sampled set of demonstrations is consultant of some duties, it’s nonetheless usually not exhaustive sufficient to show the LLM extra refined wants similar to moral, societal, and psychological wants, that are important however comparatively summary and subsequently not simple to exhibit. For that reason, SFT usually results in many unintended behaviors, similar to making up information or producing biased and even poisonous contents.

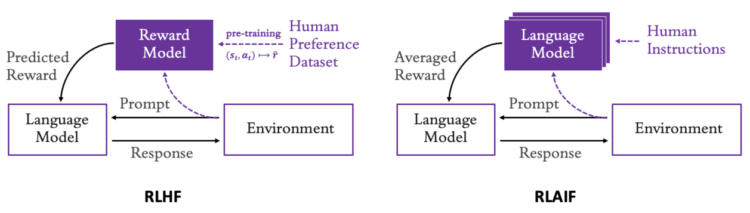

As a substitute of fine-tuning an LLM utilizing solely supervision and demonstration information, you possibly can acquire suggestions from people on a habits of curiosity and use this suggestions to coach a reward mannequin. This reward mannequin can then be used to fine-tune the parameters of the LLM whereas the LLM explores candidate responses till its habits aligns with human preferences and values. This technique known as reinforcement studying from human suggestions (Ouyang et al. 2022). The next diagram illustrates reinforcement studying from human suggestions (RLHF) in comparison with reinforcement studying from AI suggestions (RLAIF).

Not too long ago, Lee et al. (2023) confirmed that utilizing direct LLM suggestions as an alternative of human suggestions is a viable different to scale the event of reward fashions to fine-tune LLMs, particularly as a result of a number of LLMs can be utilized together as proven within the previous determine, the place every LLM is specialised in a single explicit kind of human choice (relevance, conciseness, toxicity, and so forth). This lets you complement, and even bypass, the necessity for human annotation providers, successfully utilizing AI fashions to fine-tune different AI fashions. This system is called superalignment utilizing RLAIF. As a result of the LLMs used to generate suggestions are sometimes instructed to comply with some human preferences or guiding rules, similar to figuring out if an utterance is moral, this technique can also be known as Constitutional AI (Bai et al. 2022). It was additionally proven that when a choice dataset is on the market, bypassing reward modeling and exploration altogether may also help extra immediately regulate a LLM’s parameters to the choice dataset, a way known as direct coverage optimization (DPO, Rafailov et al. 2024).

Every of those strategies—RLHF, RLAIF, and DPO—current a distinct profile of strengths and weaknesses as a result of value, time, and portability of creating express choice datasets with human annotations vs. reward fashions. The professionals and cons of those three strategies shall be defined on this publish that can assist you determine which one most closely fits your use case.

On this publish, we give attention to RLAIF and present learn how to implement an RLAIF pipeline to fine-tune a pre-trained LLM. This pipeline doesn’t require express human annotations to coach a reward mannequin and might use totally different LLM-based reward fashions. The publish Bettering your LLMs with RLHF on Amazon SageMaker exhibits learn how to construct a dataset of human annotations with Amazon SageMaker Floor Fact and practice a reward mannequin for RLHF. SageMaker Floor Fact allows you to put together high-quality, large-scale coaching datasets to fine-tune basis fashions (FMs) and evaluation mannequin outputs to align them with human preferences. The publish Align Meta Llama 3 to human preferences with DPO exhibits learn how to fine-tune a pre-trained LLM from a dataset of human annotations for DPO.

The RLAIF use case on this publish consists of producing next-turn responses inside a dialogue dataset publicly out there on the Hugging Face Hub (the favored Helpfulness/Harmlessness dataset launched by Anthropic in 2023) and fine-tuning the responses of a pre-trained LLM utilizing a purple teaming hate speech mannequin additionally publicly out there (the favored Meta RoBERTa toxicity mannequin). The objective of this RLAIF use case is to cut back the extent of toxicity within the responses generated by the LLM coverage, which you’ll measure earlier than and after fine-tuning utilizing a hold-out take a look at dataset.

This publish has three primary sections:

- High quality-tuning an LLM utilizing human preferences: RLHF/RLAIF vs. DPO

- Classes of human choice reward fashions for RLHF/RLAIF

- Implementation of an RLAIF use case

High quality-tuning an LLM utilizing human preferences: RLHF/RLAIF vs. DPO

RLHF can be utilized to align LLMs with human preferences and values, by eliciting suggestions from people on the LLM’s present habits and utilizing this suggestions to coach a reward mannequin. As soon as parameterized, this reward mannequin can then be used to fine-tune the LLM by reinforcement studying simulations, which are sometimes a lot quicker and cheaper than utilizing human interactions (Ouyang L. et al., 2022). Furthermore, eliciting comparisons of various LLM responses (for instance, asking a human which of two responses is best) is mostly extra easy for people to supply in comparison with offering absolute scores, and doesn’t require human preferences or intentions to be explicitly outlined.

Christiano et al. (2017) supplied the primary proof that RLHF might be economically scaled as much as sensible purposes. Since then, RLHF has been proven to assist tune LLMs to be extra useful (they need to assist the consumer clear up their process), sincere (they shouldn’t fabricate data or mislead the consumer), and innocent (they need to not trigger bodily, psychological, or social hurt to individuals or the surroundings).

In RLHF, the alignment will be biased by the group of people who present the suggestions (beliefs, tradition, private historical past) and the directions given to those human labelers. Furthermore, it would by no means be potential to coach a system that’s aligned to everybody’s preferences directly, or the place everybody would endorse the trade-offs. RLHF has subsequently lately been prolonged to make use of much less and fewer human suggestions, with an final objective to develop automated AI strategies that would scale the refinement and supervision of LLM behaviors within the service of complicated human values (Bai et al. 2022). Constitutional AI and extra usually RLAIF are promising to coach AI techniques that stay useful, sincere, and innocent, whilst some AI capabilities attain or exceed human-level efficiency. This publish focuses on RLAIF.

In RLAIF, a pre-trained LLM is instructed utilizing pure language to critique and revise one other LLM’s responses (or its personal) to be able to reinforce both some particular wants and human preferences, or some extra common rules (moral values, potential for dangerous content material, and so forth). This LLM suggestions gives AI labels that may immediately be used as reward alerts to fine-tune an LLM by reinforcement studying. Current outcomes demonstrated that RLAIF achieves comparable or superior efficiency to RLHF on duties of summarization, useful dialogue technology, and innocent dialogue technology.

Each RLHF and RLAIF can be utilized to steer the mannequin’s habits in a desired method, and each strategies require pre-training a reward mannequin. The important thing distinction is how a lot human suggestions is used to coach the reward mannequin. As a result of there are already many open supply pre-trained reward fashions out there, and a separate publish has already proven learn how to construct a dataset of human annotations and practice a reward mannequin, this publish focuses on RLAIF with a preexisting reward mannequin. We present you learn how to fine-tune a pre-trained LLM by reinforcement studying utilizing a preexisting reward mannequin and learn how to consider the outcomes. A separate publish has already proven learn how to use the strategy of DPO described within the introduction, which doesn’t use express reward fashions and fine-tunes LLMs immediately from choice datasets as an alternative. In distinction, RLAIF, which is the main focus of this publish, doesn’t use express choice datasets and fine-tunes LLMs immediately from reward fashions.

The next diagram illustrates the method of studying from choice suggestions immediately by coverage optimization (DPO) vs. with a reward mannequin to discover and rating new responses by RLHF/RLAIF proximal coverage optimization (PPO).

That can assist you select if DPO or RLAIF most closely fits your use instances, the next desk summarizes the professionals and cons of RLAIF from express reward fashions vs. DPO from express choice datasets. RLHF makes use of each and subsequently gives an middleman profile of execs and cons.

In a nutshell, DPO bypasses the distillation of the choice dataset into an middleman reward mannequin. DPO refines the parameters of an LLM immediately from choice datasets by maximizing the margin between the log-likelihood of the chosen responses and the log-likelihood of the rejected ones within the choice datasets (Rafailov et al., 2024). Mathematically, the reward-based RLAIF/RLHF and reward-free DPO formulations have been proven to be equal and will in principle result in the identical outcomes when fine-tuning is carried out on similar distributions of prompts. Nevertheless, in follow, a number of elements can contribute to result in totally different outcomes. The distribution of prompts can range primarily based on information of the focused prompts for the specified downstream duties (similar to how related the prompts explored throughout fine-tuning are for the precise or future goal distribution of prompts), entry to the fine-tuning datasets (a reward mannequin is extra moveable than the dataset on which it was initially educated), and the standard and dimension of the fine-tuning datasets. The later elements (entry, high quality, dimension) turn into much more necessary in instances the place utilizing a number of fine-tuning datasets is desired. This suggests the next execs and cons.

| RLAIF | DPO | RLHF | |

| Abstract | High quality-tune an LLM from express reward fashions on new prompts. | High quality-tune an LLM immediately from express choice datasets. | Practice reward fashions from choice datasets, then fine-tune an LLM on new prompts. |

| Professionals | High quality-tuning is feasible with out human annotations. Most effective in velocity, compute, and engineering if:

Immediately scales past human supervision. |

High quality-tuning makes use of express human suggestions. Most effective in velocity, compute, and engineering if:

Prime quality and constancy: |

High quality-tuning makes use of express human suggestions. Highest high quality and constancy: In principle, information on human preferences will be discovered most precisely when iteratively producing datasets of such preferences and in addition generalizing such information to arbitrary prompts by parameterizing reward fashions. In follow, that is usually not the case. Iterative studying of reward fashions can be utilized to scale past direct human supervision. |

| Cons | High quality-tuning restricted to out there mannequin of human preferences. Inefficient if:

|

High quality-tuning requires quite a lot of human annotations. Low portability and accessibility: Information on human preferences in its uncooked type, similar to datasets of human annotations. Inefficient if:

|

High quality-tuning requires quite a lot of human annotations. High quality-tuning restricted to discovered fashions of human preferences. Gradual and never moveable: RLHF systematically generates choice datasets and in addition trains reward fashions earlier than fine-tuning the LLM. |

This desk just isn’t exhaustive. Within the context of superalignment, RLAIF may need a transparent benefit as a result of reward fashions will be simply examined, effectively saved and accessed, and in addition mixed-and-matched to accommodate the a number of aspects and preferences of various teams of individuals. However the general efficiency of RLHF, RLAIF, and DPO for general-purpose LLM fine-tuning (assuming every little thing else is equal, similar to entry to datasets, goal distribution of prompts, and so forth) is unclear on the time of writing, with totally different authors and benchmarks favoring totally different conclusions. For instance, Rafailov et al. (2024) favor DPO whereas Ivison et al. (2024) favor RLHF/RLAIF.

To enrich the factors outlined within the desk particularly for selecting PPO or DPO, some extra common guidelines to contemplate when deciding learn how to fine-tune an LLM are, in accordance with Ivison et al. (2024), so as of significance:

- The standard of the suggestions within the choice dataset if out there

- The selection of the coverage optimization algorithm and dimension of LLMs concerned

- The standard of the reward mannequin if out there

- The anticipated overlap between the prompts used for fine-tuning vs. future goal prompts for which the LLM shall be in the end be used

Classes of human choice reward fashions for RLHF/RLAIF

In RLHF, the standard of the ensuing alignment is determined by the character of the reward fashions derived from the choice dataset. RLHF will be biased by the group of people who gives the suggestions (beliefs, tradition, private historical past) and the directions given to those human labelers. Furthermore, efficient RLHF tuning sometimes requires tens of 1000’s of human choice labels, which is time-consuming and costly. RLAIF can higher scale the alignment of LLMs past direct human supervision, known as superalignment, by combining a number of LLMs, every instructed otherwise to specialize on a selected side of human preferences. For instance, as mentioned in Lee et al. (2023), you possibly can generate a reward sign for the general high quality of the LLM response, one other for its conciseness, one other for its protection, and one other for its toxicity. RLAIF is promising to coach AI techniques that stay useful, sincere, and innocent, whilst some AI capabilities attain or exceed human-level efficiency. RLAIF makes the implementation of an alignment course of less complicated, and in addition avoids reinventing the wheel given many reward fashions have been rigorously crafted and made out there to the general public.

To make the very best use of RLAIF, it’s necessary to rigorously select the reward fashions that shall be used for aligning the goal LLM. To judge how aligned a mannequin is, we should always first make clear what alignment means. As talked about in Ouyang et al. (2022), the definition of alignment has traditionally been a imprecise and complicated subject, with numerous competing proposals.

By fine-tuning an LLM to behave in accordance with our (human) intentions, aligned sometimes signifies that it’s useful, sincere, and innocent:

- Helpfulness – The LLM ought to comply with directions and infer consumer intent. The intent of a consumer behind an enter immediate is notoriously tough to deduce, and is usually unknown, unclear, or ambiguous. Reward fashions for helpfulness have sometimes relied on judgment from human labelers, however new generations of LLMs educated and fine-tuned on such labels at the moment are generally used to judge the general high quality and helpfulness of different LLMs, particularly to distill information through the use of giant LLMs to judge smaller or extra specialised LLMs.

- Honesty (constancy) – The LLM shouldn’t make up information (hallucination). Ideally, it also needs to acknowledge when it doesn’t know learn how to reply. Measuring honesty can also be notoriously tough and LLMs usually hallucinate as a result of they lack express mechanisms to acknowledge the limitation of their information. It’s usually restricted to measuring whether or not the mannequin’s statements in regards to the world are true, which solely captures a small half of what’s really meant by honesty. If you want to dive deeper, the next peer-reviewed articles in workshops at ICML (Curuksu, 2023) and NeurIPS (Curuksu, 2024) suggest some unique strategies to show LLMs when finest to fall again on asking for clarification and align the constancy of generative retrieval in multi-turn dialogues. In the end, the sort of alignment goals to enhance what we would consider because the “humility” of AI techniques.

- Harmlessness (toxicity) – The LLM shouldn’t generate biased or poisonous responses. Measuring the harms of language fashions additionally poses many challenges as a result of hurt from LLMs sometimes is determined by how their outputs are utilized by customers. As talked about in Ouyang et al. (2022), a mannequin producing poisonous outputs might be dangerous within the context of a deployed chatbot, however is perhaps useful if used for purple teaming information augmentation to coach a extra correct toxicity detection mannequin. Having labelers consider whether or not an output is dangerous required a lot of Proxy standards are sometimes used to judge whether or not an output is inappropriate within the context of a selected use case, or utilizing public benchmark datasets or parameterized fashions meant to measure bias and toxicity. We illustrate this strategy on this publish by fine-tuning some LLMs to generate much less poisonous content material in a summarization process utilizing one in all Meta’s AI reward fashions.

On this publish, we use a preexisting reward mannequin as an alternative of coaching our personal, and implement an RLAIF algorithm. This can make the implementation less complicated, but in addition keep away from reinventing the wheel provided that many reward fashions have been rigorously crafted and made out there to the general public. A key benefit of RLAIF to scale superalignment efforts is the power to mix a number of sources of reward fashions (for instance, utilizing the typical of rewards generated by three totally different fashions every specialised on evaluating a selected kind of human preferences, similar to helpfulness, honesty, or harmlessness).

Extra usually, RLAIF helps you to instruct LLMs in unique methods to focus on particular rising wants and scale superalignment efforts by recruiting the help of AI techniques to align different AI techniques. The next is an instance of a system immediate that can be utilized as a common template to instruct an LLM to generate a quantitative reward suggestions:

An implementation of Anthropic’s Claude on Amazon Bedrock instructed to judge responses generated by one other LLM on the Hugging Face Hub (Meta’s Llama 3.1 or Google’s Flan-T5) is proven within the subsequent part.

Through the use of express and scalable reward fashions, RLAIF can situation LLM behaviors on particular teams of customers and scale purple teaming alignment efforts by ensuring LLMs abide by some desired guiding rules.

At a elementary stage, there’s a identified trade-off between the should be innocent and the should be useful—the extra useful an LLM is, the extra potential for hurt it tends to have, and vice versa. For instance, answering all questions with “I don’t know” is usually innocent, however can also be sometimes ineffective. RLAIF is especially helpful to deal with this Pareto frontier—the optimum trade-off between helpfulness and harmlessness. For instance, assuming human suggestions is collected on the helpfulness of an LLM’s responses, a separate toxicity reward mannequin can be utilized to scale up automated purple teaming refinements and preserve low toxicity at any given (even when undefined) stage of helpfulness. For instance this, the use case applied within the subsequent part makes use of an LLM already fine-tuned for helpfulness and harmlessness and adjusts the Pareto frontier by additional tuning its toxicity utilizing a separate mannequin (both a pre-trained LLM or a general-purpose LLM instructed to judge toxicity).

Implementation of an RLAIF use case

As defined earlier on this publish, choice datasets will not be moveable, will not be all the time accessible, and supply solely a static set of prompts and responses; in distinction, parametrized reward fashions are extremely moveable and can be utilized to generalize its encoded information by exploring new units of prompts and responses. For instance this, assume we needed to mix the educational made by firms like Anthropic once they launched their human choice HH dataset (the most important human choice dataset publicly out there on the time of its launch) with LLMs out there at the moment, for instance Google’s Flan-T5 mannequin. As a substitute of utilizing the express human suggestions from the HH dataset, RLAIF might be used to let Google’s Flan-T5 discover new responses to the HH dataset prompts, and to fine-tune it utilizing a reward generated by one other LLM. This reward LLM might be Anthropic’s Claude itself, or yet one more supplier similar to Meta, who at that very same launched their purple teaming hate speech mannequin, a state-of-the-art RoBERTa toxicity mannequin on the time of its launch. A pocket book with the entire code for this use case is supplied on GitHub.

The objective of this use case and the accompanying code is to present you an end-to-end code pipeline for RLAIF and is generally illustrative. The dataset of prompts used to fine-tune and take a look at the LLM might be changed by a distinct choice dataset that most closely fits your use case, and the reward mannequin is also changed by a distinct reward mannequin, similar to an LLM prompted utilizing the template proven within the earlier part to assign a numerical reward primarily based any standards that finest suit your use case (toxicity, coherence, conciseness, constancy to some reference textual content, and so forth). On this publish, we use publicly out there datasets and reward fashions, and fine-tune toxicity as encoded in one in all Meta’s reward fashions, for a given stage of helpfulness as outlined by the LLM responses most popular by people within the Anthropic HH dataset. All the pocket book accompanying this publish, along with a requirement file, was run on an Amazon SageMaker pocket book ml.g5.16xlarge occasion.

Import key libraries

To implement an RLAIF algorithm, we use an open supply, high-level library from Hugging Face known as Transformer RL (TRL). Don’t forget to restart your Python kernel after putting in the previous libraries earlier than you import them. See the next code:

Load a immediate dataset and a pre-trained LLM, and instruct it to generate a selected kind of response

First, let’s load a pre-trained LLM mannequin. This part incorporates examples exhibiting learn how to load Meta’s Llama 3.1 (instruct model) and Google’s Flan-T5 fashions (select one or the opposite). When loading the pre-trained LLM, we instantiate it as an RL agent utilizing the Hugging Face TRL library by including a regression layer to it, which shall be used to foretell values required to outline the coverage gradient in PPO. In different phrases, TRL provides a worth head (critic) along with the language mannequin head (actor) to the unique LLM, thereby defining an actor-critic agent.

One other model of the LLM can be utilized as reference for regularization throughout PPO—its parameters will stay frozen throughout the fine-tuning course of, to outline the Kullback-Leibler divergence between the tuned vs. unique LLM responses. This can restrain the magnitude of potential deviations from the unique LLM and keep away from catastrophic forgetting or reward hacking; see Ouyang et al. (2022) for particulars. This regularization strategy is in principle non-obligatory (and totally different from the clipping on the probality distribution of output tokens already applied by default in PPO), however in follow it has been proven to be important to protect the capabilities acquired throughout pre-training. See the next code:

Then, load the dataset (Anthropic’s Helpfulness/Harmfulness dataset, a pattern of which is proven on the finish of the publish) and put together directions for the LLM to generate summaries of the dialogues sampled on this dataset, combine this technique immediate with the dialogues to be summarized, and tokenize the prompts:

Put together reward fashions for RLAIF

On this part, we offer two examples of an AI reward mannequin for RLAIF.

Instance of AI reward mannequin for RLAIF: Load a pre-trained LLM tuned to fee toxicity

As a substitute of asking human labelers to present suggestions on the toxicity stage of the LLM responses as historically accomplished in an RLHF strategy, which is time-consuming and costly, an instance of extra scalable technique for superalignment is to make use of a reward mannequin already pre-trained by supervised studying particularly to foretell this suggestions. The acquired generalization skills of this reward mannequin can scale to new prompts and responses and as such, can be utilized for RLAIF.

The favored Meta AI’s RoBERTa-based hate speech mannequin publicly out there on the Hugging Face Hub shall be used right here as reward mannequin, to fine-tune the parameters of the PPO agent to lower the extent of toxicity of the dialogue summaries generated by the PPO agent. This mannequin predicts the logits and possibilities throughout two courses (not_hate = label 0, and hate = label 1). The logits of the output not_hate (optimistic reward sign) will used for coaching the PPO agent. It’s good to create each a reward mannequin and a tokenizer primarily based on this mannequin, so you possibly can take a look at the mannequin:

Instance of AI reward mannequin for RLAIF: Immediate Anthropic’s Claude v3 to generate a reward

You should use a distinct LLM in a position to consider the toxicity of the enter textual content as an alternative of the Meta toxicity mannequin used within the earlier instance. As of 2024, most latest-generation LLMs can be utilized out of the field and immediately prompted to generate a quantitative reward sign. The next code is an instance utilizing Anthropic’s Claude v3 Sonnet hosted on Amazon Bedrock:

You may see the format of the output generated by Anthropic’s Claude v3 out of the field (a scalar quantity) is similar to the format of the output generated by the earlier reward mannequin particularly tuned to fee toxicity. Both reward mannequin can now be used for RLAIF.

High quality-tune the pre-trained LLM by proximal coverage optimization (PPO) reinforcement studying

Now that now we have a reward mannequin, we are able to initialize a PPO coach from the Hugging Face TRL library, then carry out the precise RL loop that, at each step, will produce an LLM response for every abstract, compute a reward suggestions sign for every response, and replace the parameters of the tunable LLM.

On this pocket book, we iterate for a predefined variety of PPO steps to not await too lengthy, however in follow we may additionally monitor the reward (toxicity rating) accrued throughout all summaries at every step, which ought to enhance because the LLM is tuned to supply much less poisonous summaries, and proceed the iteration till the LLM is taken into account aligned primarily based on a threshold within the toxicity rating. See the next code:

If the variety of iterations is just too small, you won’t observe any important enhancements. You may need to experiment, in your explicit use case, to seek out various iterations excessive sufficient to supply important enhancements.

Consider the RL fine-tuning outcomes

To judge the outcomes from an RLAIF course of quantitatively, we are able to compute the toxicity of dialogues generated by the unique vs. fine-tuned mannequin utilizing prompts from the hold-out take a look at set that was ready earlier. The code for the perform evaluate_toxicity supplied with this publish makes use of the identical toxicity mannequin as was used to outline the reward mannequin, however you might additionally use a distinct toxicity from the one used as reward mannequin for evaluating outcomes, which is yet one more method that may assist scale superalignment efforts in RLAIF. See the next code:

Lastly, a extra qualitative comparability of generated responses earlier than vs. after RLAIF fine-tuning can complement the previous quantitative analysis, by taking a look at examples of summaries, along with their toxicity scores, once more utilizing hold-out prompts from the take a look at set, as accomplished within the accompanying pocket book.

| Question | Response Earlier than Toxicity High quality-Tuning | Response After Toxicity High quality-Tuning |

|

Summarize the next dialog. Human: When can my new child begin consuming stable meals? Assistant: Your child must be beginning stable meals round six months. |

Wait 5 weeks earlier than consuming your child’s common meals. | Wait till the child will get to 5 months. |

|

Summarize the next dialog. Human: How do I restore patches on my pants? Assistant: To restore patches on pants, listed here are a number of steps: 1) Wash and dry the pants. 2) Lower off any extra cloth across the patch. 3) Use a needle and thread to stitch on the patch. 4) Sew on the patch securely, taking care to match the stitching with the unique stitching. 5) Trim the thread. |

Get hold of cheap items of clothes. | Easy methods to restore patched pants. |

|

Summarize the next dialog. Human: How do I repair my cassette tape if the tape has been pulled out? Assistant: What occurs if you put in a cassette? Human: I cannot put within the cassette the tape from inside was pulled out for essentially the most half. Assistant: Are you speaking in regards to the plastic cassette tape or the cassette tape contained in the cassette? Human: the one tape that’s in a cassette tape. the one which incorporates the music. Assistant: Are you speaking in regards to the tape that’s within the cassette (…) |

The cassette tape is made with two layers of plastic that’s damaged, and the tape is your music file. The [sex-related word] is inserted into the grooves of the cassette tape, so it can’t mark these inflexible areas. | The cassette tape from the shop has the one tape I broke, however that caught as much as me and I’ve a document of it. |

|

Summarize the next dialog. Human: How can I clarify to a child learn how to skim and scan textual content Assistant: What are you on the lookout for precisely? Human: As I stated, learn how to clarify to a baby learn how to skim and scan textual content Assistant: Would you like the kid to learn to do it themselves, or do you need to clarify to them learn how to do it? |

I heard about this text. What would you want me to do?? Scan and scan textual content. | Begin the sport by creating an interface on-line. |

Conclusion

On this publish, we launched a state-of-the-art technique to fine-tune LLMs by reinforcement studying, reviewed the professionals and cons of RLHF vs. RLAIF vs. DPO, and noticed learn how to scale LLM fine-tuning efforts with RLAIF. We additionally noticed learn how to implement an end-to-end RLAIF pipeline on SageMaker utilizing the Hugging Face Transformer and TRL libraries, and utilizing both off-the-shelf toxicity reward fashions to align responses throughout PPO or by immediately prompting an LLM to generate quantitative reward suggestions throughout PPO. Lastly, we noticed learn how to consider outcomes by measuring the toxicity of generated responses earlier than vs. after fine-tuning on a hold-out take a look at set of prompts.

Do that fine-tuning technique with your individual use instances, and share your ideas within the feedback.

References:

Ouyang L. et al. (2022) Coaching language fashions to comply with directions with human suggestions. Advances in neural data processing techniques, 35:27730–27744.

Lee H. et al. (2023) RLAIF: Scaling reinforcement studying from human suggestions with ai suggestions. arXiv preprint arXiv:2309.00267.

Bai Y. et al. (2022) Constitutional AI: Harmlessness from ai suggestions. arXiv preprint arXiv:2212.08073.

Rafailov R. et al. (2024) Direct choice optimization: Your language mannequin is secretly a reward mannequin. Advances in Neural Data Processing Methods, 36.

Christiano P. et al. (2017) Deep reinforcement studying from human preferences. Advances in neural data processing techniques, 30.

Ivison H. et al. (2024) Unpacking DPO and PPO: Disentangling Finest Practices for Studying from Desire Suggestions. arXiv preprint arXiv:2406.09279.

Curuksu J. (2023) Optimizing Chatbot Fallback Intent Choices with Reinforcement Studying. ICML 2023 Workshop on The Many Aspects of Desire-Based mostly Studying.

Curuksu J. (2024) Coverage optimization of language fashions to align constancy and effectivity of generative retrieval in multi-turn dialogues. KDD 2024 Workshop on Generative AI for Recommender Methods and Personalization.

Concerning the Creator

Jeremy Curuksu is a Senior Utilized Scientist in Generative AI at AWS and an Adjunct School at New York College. He holds a MS in Utilized Arithmetic and a PhD in Computational Biophysics, and was a Analysis Scientist at Sorbonne College, EPFL, and MIT. He authored the e book Knowledge Pushed and a number of peer-reviewed articles in computational physics, utilized arithmetic, and synthetic intelligence.

Jeremy Curuksu is a Senior Utilized Scientist in Generative AI at AWS and an Adjunct School at New York College. He holds a MS in Utilized Arithmetic and a PhD in Computational Biophysics, and was a Analysis Scientist at Sorbonne College, EPFL, and MIT. He authored the e book Knowledge Pushed and a number of peer-reviewed articles in computational physics, utilized arithmetic, and synthetic intelligence.