Data science teams often face challenges when transitioning models from the development environment to production. These include difficulties integrating the data science team’s models into the IT team’s production environment, the need to retrofit data science code to meet enterprise security and governance standards, gaining access to production-grade data, and maintaining repeatability and reproducibility in machine learning (ML) pipelines, which can be difficult without a proper platform infrastructure and standardized templates.

This post, part of the “Governing the ML lifecycle at scale” series (Part 1, Part 2, Part 3), explains how to set up and govern a multi-account ML platform that addresses these challenges. The platform provides self-service provisioning of secure environments for ML teams, accelerated model development with predefined templates, a centralized model registry for collaboration and reuse, and standardized model approval and deployment processes.

An enterprise might have the following roles involved in the ML lifecycle. The functions for each role can vary from company to company. In this post, we assign the functions in terms of the ML lifecycle to each role as follows:

- Lead data scientist – Provision accounts for ML development teams, govern access to the accounts and resources, and promote a standardized model development and approval process to eliminate repeated engineering effort. Usually, there is one lead data scientist for a data science group in a business unit, such as marketing.

- Data scientists – Perform data analysis, model development, and model evaluation, and register the models in a model registry.

- ML engineers – Develop model deployment pipelines and control the model deployment processes.

- Governance officer – Review the model’s performance including documentation, accuracy, bias, and access, and provide final approval for models to be deployed.

- Platform engineers – Define a standardized process for creating development accounts that conform to the company’s security, monitoring, and governance standards; create templates for model development; and manage the infrastructure and mechanisms for sharing model artifacts.

This ML platform provides several key benefits. First, it enables every step in the ML lifecycle to conform to the organization’s security, monitoring, and governance standards, reducing overall risk. Second, the platform gives data science teams the autonomy to create accounts and to provision and access ML resources as needed, reducing resource constraints that often hinder their work.

Additionally, the platform automates many of the repetitive manual steps in the ML lifecycle, allowing data scientists to focus their time and efforts on building ML models and discovering insights from the data rather than managing infrastructure. The centralized model registry also promotes collaboration across teams and enables centralized model governance, increasing visibility into models developed throughout the organization and reducing duplicated work.

Finally, the platform standardizes the process for business stakeholders to review and consume models, smoothing the collaboration between the data science and business teams. This makes sure models can be quickly tested, approved, and deployed to production to deliver value to the organization.

Overall, this holistic approach to governing the ML lifecycle at scale provides significant benefits in terms of security, agility, efficiency, and cross-functional alignment.

In the next section, we provide an overview of the multi-account ML platform and how the different roles collaborate to scale MLOps.

Answer overview

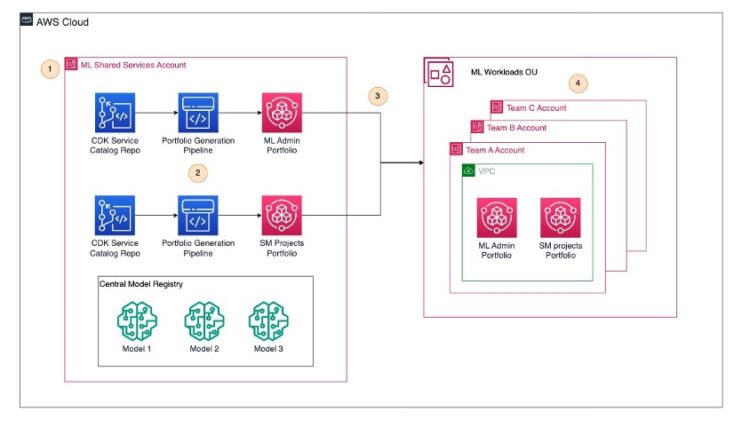

The next structure diagram illustrates the options for a multi-account ML platform and the way completely different personas collaborate inside this platform.

There are five accounts illustrated in the diagram:

- ML Shared Services Account – This is the central hub of the platform. This account manages templates for setting up new ML Dev Accounts, as well as SageMaker Projects templates for model development and deployment, in AWS Service Catalog. It also hosts a model registry to store ML models developed by data science teams, and provides a single location to approve models for deployment.

- ML Dev Account – This is where data scientists perform their work. In this account, data scientists can create new SageMaker notebooks based on their needs, connect to data sources such as Amazon Simple Storage Service (Amazon S3) buckets, analyze data, build models and create model artifacts (for example, a container image), and more. The SageMaker projects, provisioned using the templates in the ML Shared Services Account, can speed up the model development process because they have steps (such as connecting to an S3 bucket) already configured. The diagram shows one ML Dev Account, but there can be multiple ML Dev Accounts in an organization.

- ML Test Account – This is the test environment for new ML models, where stakeholders can review and approve models before deployment to production.

- ML Prod Account – This is the production account for new ML models. After the stakeholders approve the models in the ML Test Account, the models are automatically deployed to this production account.

- Data Governance Account – This account hosts data governance services for the data lake, central feature store, and fine-grained data access.

Key activities and actions are numbered in the preceding diagram. Some of these activities are performed by various personas, whereas others are automatically triggered by AWS services.

- ML engineers create the pipelines in GitHub repositories, and the platform engineer converts them into two different Service Catalog portfolios: ML Admin Portfolio and SageMaker Project Portfolio. The ML Admin Portfolio will be used by the lead data scientist to create AWS resources (for example, SageMaker domains). The SageMaker Project Portfolio has SageMaker projects that data scientists and ML engineers can use to accelerate model training and deployment.

- The platform engineer shares the two Service Catalog portfolios with workload accounts in the organization.

- The data engineer prepares and governs datasets using services such as Amazon S3, AWS Lake Formation, and Amazon DataZone for ML.

- The lead data scientist uses the ML Admin Portfolio to set up SageMaker domains and the SageMaker Project Portfolio to set up SageMaker projects for their teams.

- Data scientists subscribe to datasets, and use SageMaker notebooks to analyze data and develop models.

- Data scientists use the SageMaker projects to build model training pipelines. These SageMaker projects automatically register the models in the model registry.

- The lead data scientist approves the model locally in the ML Dev Account.

- This step consists of the following sub-steps:

  - After the model is approved, it triggers an event bus in Amazon EventBridge that ships the event to the ML Shared Services Account.

  - The event in EventBridge triggers the AWS Lambda function that copies model artifacts (managed by SageMaker, or Docker images) from the ML Dev Account into the ML Shared Services Account, creates a model package in the ML Shared Services Account, and registers the new model in the model registry in the ML Shared Services Account.

- ML engineers review and approve the new model in the ML Shared Services Account for testing and deployment. This action triggers a pipeline that was set up using a SageMaker project.

- The approved models are first deployed to the ML Test Account. Integration tests are run and the endpoint is validated before the model is approved for production deployment.

- After testing, the governance officer approves the new model in CodePipeline.

- After the model is approved, the pipeline continues to deploy the new model into the ML Prod Account and creates a SageMaker endpoint.
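To make the cross-account approval flow more concrete, the following is a minimal sketch of an EventBridge event pattern that matches approved model packages, together with a helper that builds the ARN of a target event bus in the ML Shared Services Account. It is an illustration under stated assumptions, not the rule shipped with the sample code: the function names, the `churn-models` group name, the account ID, and the use of the `default` bus are all hypothetical.

```python
import json


def approved_model_event_pattern(model_package_group=None):
    """Build an event pattern for SageMaker model packages that become Approved.

    Optionally narrows the match to one model package group.
    """
    detail = {"ModelApprovalStatus": ["Approved"]}
    if model_package_group is not None:
        detail["ModelPackageGroupName"] = [model_package_group]
    return {
        "source": ["aws.sagemaker"],
        "detail-type": ["SageMaker Model Package State Change"],
        "detail": detail,
    }


def shared_services_bus_arn(region, account_id, bus_name="default"):
    """ARN of the event bus in the ML Shared Services Account (bus name is an assumption)."""
    return f"arn:aws:events:{region}:{account_id}:event-bus/{bus_name}"


# Hypothetical values, for illustration only.
pattern = approved_model_event_pattern("churn-models")
target_arn = shared_services_bus_arn("us-east-1", "111122223333")
print(json.dumps(pattern, indent=2))
print(target_arn)
```

A rule in the ML Dev Account could use this pattern with the bus ARN as its target, provided the receiving bus has a resource policy that allows events from the sending account.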

The following sections provide details on the key components of this diagram, how to set them up, and sample code.

Set up the ML Shared Services Account

The ML Shared Services Account helps the organization standardize management of artifacts and resources across data science teams. This standardization also helps enforce controls across resources consumed by data science teams.

The ML Shared Services Account has the following features:

- Service Catalog portfolios – This includes the following portfolios:

  - ML Admin Portfolio – This is intended to be used by the project admins of the workload accounts. It’s used to create AWS resources for their teams. These resources can include SageMaker domains, Amazon Redshift clusters, and more.

  - SageMaker Projects Portfolio – This portfolio contains the SageMaker products to be used by the ML teams to accelerate their ML models’ development while complying with the organization’s best practices.

- Central model registry – This is the centralized place for ML models developed and approved by different teams. For details on setting this up, refer to Part 2 of this series.

The following diagram illustrates this architecture.

As the first step, the cloud admin sets up the ML Shared Services Account by using one of the blueprints for customizations in AWS Control Tower account vending, as described in Part 1.

In the following sections, we walk through how to set up the ML Admin Portfolio. The same steps can be used to set up the SageMaker Projects Portfolio.

Bootstrap the infrastructure for two portfolios

After the ML Shared Services Account has been set up, the ML platform admin can bootstrap the infrastructure for the ML Admin Portfolio using sample code in the GitHub repository. The code contains AWS CloudFormation templates that can later be deployed to create the SageMaker Projects Portfolio.

Complete the following steps:

- Clone the GitHub repo to a local directory:

- Change the directory to the portfolio directory:

- Install dependencies in a separate Python environment using your preferred Python package manager:

- Bootstrap your deployment target account using the following command:

If you already have a role and AWS Region from the account set up, you can use the following command instead:

- Finally, deploy the stack:

When it’s ready, you can see the MLAdminServicesCatalogPipeline pipeline in AWS CloudFormation.

Navigate to AWS CodeStar Connections on the Service Catalog page, where you can see a connection named “codeconnection-service-catalog”. If you choose the connection, you’ll notice that it must be connected to GitHub before you can integrate it with your pipelines and start pushing code. Choose Update pending connection to integrate with your GitHub account.

Once that’s done, you need to create empty GitHub repositories to push code to. For example, you can create a repository called “ml-admin-portfolio-repo”. Every project you deploy needs a repository created in GitHub beforehand.

Trigger CodePipeline to deploy the ML Admin Portfolio

Complete the following steps to trigger the pipeline to deploy the ML Admin Portfolio. We recommend creating a separate folder for the different repositories that will be created in the platform.

- Leave the cloned repository and create a parallel folder called platform-repositories:

- Clone the empty repository you created and fill it with the portfolio code:

- Push the code to the GitHub repository to create the Service Catalog portfolio:

After it’s pushed, the GitHub repository we created earlier is no longer empty. The new code push triggers the pipeline named cdk-service-catalog-pipeline to build and deploy artifacts to Service Catalog.

It takes about 10 minutes for the pipeline to finish running. When it’s complete, you can find a portfolio named ML Admin Portfolio on the Portfolios page on the Service Catalog console.

Repeat the same steps to set up the SageMaker Projects Portfolio. Make sure you’re using the sample code (sagemaker-projects-portfolio), and create a new code repository (with a name such as sm-projects-service-catalog-repo).

Share the portfolios with workload accounts

You can share the portfolios with workload accounts in Service Catalog. Again, we use the ML Admin Portfolio as an example.

- On the Service Catalog console, choose Portfolios in the navigation pane.

- Choose the ML Admin Portfolio.

- On the Share tab, choose Share.

- In the Account info section, provide the following information:

  - For Select how to share, select Organization node.

  - Choose Organizational Unit, then enter the organizational unit (OU) ID of the workloads OU.

- In the Share settings section, select Principal sharing.

- Choose Share.

Selecting the Principal sharing option allows you to specify the AWS Identity and Access Management (IAM) roles, users, or groups by name for which you want to grant permissions in the shared accounts.

- On the portfolio details page, on the Access tab, choose Grant access.

- For Select how to grant access, select Principal Name.

- In the Principal Name section, choose role/ for Type and enter the name of the role that the ML admin will assume in the workload accounts for Name.

- Choose Grant access.

- Repeat these steps to share the SageMaker Projects Portfolio with workload accounts.
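The console steps above can also be scripted. The following is a minimal sketch, assuming a boto3 Service Catalog client, of the two calls involved: `create_portfolio_share` with an organizational unit node and principal sharing enabled, and `associate_principal_with_portfolio` with a name-based (`IAM_PATTERN`) principal. The helper names, portfolio ID, OU ID, and role name are hypothetical placeholders.

```python
def portfolio_share_request(portfolio_id, ou_id):
    """Arguments for sharing a portfolio with an OU, with principal sharing enabled."""
    return {
        "PortfolioId": portfolio_id,
        "OrganizationNode": {"Type": "ORGANIZATIONAL_UNIT", "Value": ou_id},
        "SharePrincipals": True,
    }


def principal_association_request(portfolio_id, role_name):
    """Arguments for granting access by role name.

    IAM_PATTERN principals omit the account ID so the same role name
    resolves in every account the portfolio is shared with.
    """
    return {
        "PortfolioId": portfolio_id,
        "PrincipalARN": f"arn:aws:iam:::role/{role_name}",
        "PrincipalType": "IAM_PATTERN",
    }


def share_portfolio(client, portfolio_id, ou_id, role_name):
    """Share the portfolio and grant access; `client` is a Service Catalog client."""
    client.create_portfolio_share(**portfolio_share_request(portfolio_id, ou_id))
    client.associate_principal_with_portfolio(
        **principal_association_request(portfolio_id, role_name)
    )


# Example (requires boto3 and credentials in the ML Shared Services Account):
#   import boto3
#   share_portfolio(boto3.client("servicecatalog"),
#                   "port-examplexample", "ou-exam-pleexamp", "MLAdminRole")
```

Calling `share_portfolio` once per portfolio covers both the ML Admin Portfolio and the SageMaker Projects Portfolio.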

Confirm available portfolios in workload accounts

If the sharing was successful, you should see both portfolios available on the Service Catalog console, on the Portfolios page under Imported portfolios.

Now that the service catalogs in the ML Shared Services Account have been shared with the workloads OU, the data science team can provision resources such as SageMaker domains using the templates and set up SageMaker projects to accelerate ML models’ development while complying with the organization’s best practices.

We demonstrated how to create and share portfolios with workload accounts. However, the journey doesn’t stop here. The ML engineer can continue to evolve existing products and develop new ones based on the organization’s requirements.

The following sections describe the processes involved in setting up ML Development Accounts and running ML experiments.

Set up the ML Development Account

The ML Development Account setup consists of the following tasks and stakeholders:

- The team lead requests the cloud admin to provision the ML Development Account.

- The cloud admin provisions the account.

- The team lead uses the shared Service Catalog portfolios to provision SageMaker domains, set up IAM roles and give access, and get access to data in Amazon S3, Amazon DataZone, AWS Lake Formation, or a central feature group, depending on which solution the organization decides to use.

Run ML experiments

Part 3 in this series described multiple ways to share data across the organization. The current architecture allows data access using the following methods:

- Option 1: Train a model using Amazon DataZone – If the organization has Amazon DataZone in the central governance account or data hub, a data publisher can create an Amazon DataZone project to publish the data. Then the data scientist can subscribe to the Amazon DataZone published datasets from Amazon SageMaker Studio, and use the dataset to build an ML model. Refer to the sample code for details on how to use subscribed data to train an ML model.

- Option 2: Train a model using Amazon S3 – Make sure the user has access to the dataset in the S3 bucket. Follow the sample code to run an ML experiment pipeline using data stored in an S3 bucket.

- Option 3: Train a model using a data lake with Athena – Part 2 introduced how to set up a data lake. Follow the sample code to run an ML experiment pipeline using data stored in a data lake with Amazon Athena.

- Option 4: Train a model using a central feature group – Part 2 introduced how to set up a central feature group. Follow the sample code to run an ML experiment pipeline using data stored in a central feature group.

You can choose which option to use depending on your setup. For options 2, 3, and 4, the SageMaker Projects Portfolio provides project templates to run ML experiment pipelines, with steps that include data ingestion, model training, and registering the model in the model registry.

In the following example, we use option 2 to demonstrate how to build and run an ML pipeline using a SageMaker project that was shared from the ML Shared Services Account.

- On the SageMaker Studio domain, under Deployments in the navigation pane, choose Projects.

- Choose Create project.

- There is a list of projects that serve various purposes. Because we want to access data stored in an S3 bucket for training the ML model, choose the project that uses data in an S3 bucket on the Organization templates tab.

- Follow the steps to provide the necessary information, such as Name, Tooling Account (the ML Shared Services Account ID), and S3 bucket (for MLOps), and then create the project.

It takes a few minutes to create the project.

After the project is created, a SageMaker pipeline is triggered to perform the steps specified in the SageMaker project. Choose Pipelines in the navigation pane to see the pipeline. You can choose the pipeline to see its directed acyclic graph (DAG). When you choose a step, its details appear in the right pane.

The last step of the pipeline is registering the model in the current account’s model registry. As the next step, the lead data scientist reviews the models in the model registry and decides whether a model should be approved for promotion to the ML Shared Services Account.

Approve ML models

The lead data scientist should review the trained ML models and approve the candidate model in the model registry of the development account. After an ML model is approved, it triggers a local event, the event buses in EventBridge send the model approval event to the ML Shared Services Account, and the model artifacts are copied to the central model registry. A model card is created for the model if it’s a new one; otherwise, the existing model card is updated with the new version.
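Approval can be done in SageMaker Studio, but it can also be done programmatically. The following is a minimal sketch, assuming a boto3 SageMaker client, that builds the arguments for `update_model_package` to set the approval status of a model package version. The helper names and the example ARN are hypothetical; changing the status to Approved is the kind of state change that the approval event described above is based on.

```python
def approval_request(model_package_arn, approved, comment=""):
    """Arguments for SageMaker update_model_package to approve or reject a version."""
    request = {
        "ModelPackageArn": model_package_arn,
        "ModelApprovalStatus": "Approved" if approved else "Rejected",
    }
    if comment:
        # Optional free-text note recorded with the approval decision.
        request["ApprovalDescription"] = comment
    return request


def set_model_approval(client, model_package_arn, approved, comment=""):
    """Apply the decision; `client` is a SageMaker client in the ML Dev Account."""
    return client.update_model_package(
        **approval_request(model_package_arn, approved, comment)
    )


# Example (requires boto3 and credentials; the ARN is a placeholder):
#   import boto3
#   set_model_approval(
#       boto3.client("sagemaker"),
#       "arn:aws:sagemaker:us-east-1:111122223333:model-package/churn-models/3",
#       approved=True,
#       comment="Reviewed evaluation metrics",
#   )
```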

The following architecture diagram shows the flow of model approval and model promotion.

Model deployment

After the previous step, the model is available in the central model registry in the ML Shared Services Account. ML engineers can now deploy the model.

If you used the sample code to bootstrap the SageMaker Projects Portfolio, you can use the Deploy real-time endpoint from ModelRegistry – Cross account, test and prod option in SageMaker Projects to set up a project that creates a pipeline to deploy the model to the target test account and production account.

- On the SageMaker Studio console, choose Projects in the navigation pane.

- Choose Create project.

- On the Organization templates tab, you can view the templates that were populated earlier from Service Catalog when the domain was created.

- Select the template Deploy real-time endpoint from ModelRegistry – Cross account, test and prod and choose Select project template.

- Fill in the template:

  - The SageMakerModelPackageGroupName is the model group name of the model promoted from the ML Dev Account in the previous step.

  - Enter the Deployments Test Account ID for PreProdAccount, and the Deployments Prod Account ID for ProdAccount.
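The same project could in principle be created through the SageMaker `create_project` API instead of the console. The following is a sketch under assumptions: the parameter keys `SageMakerModelPackageGroupName`, `PreProdAccount`, and `ProdAccount` come from the template fields named above, but the product ID, provisioning artifact ID, and all example values are hypothetical, and the actual template may require additional parameters.

```python
def deployment_project_request(project_name, product_id, artifact_id,
                               model_package_group, test_account_id, prod_account_id):
    """Arguments for SageMaker create_project using a Service Catalog product."""
    template_parameters = {
        "SageMakerModelPackageGroupName": model_package_group,
        "PreProdAccount": test_account_id,
        "ProdAccount": prod_account_id,
    }
    return {
        "ProjectName": project_name,
        "ServiceCatalogProvisioningDetails": {
            "ProductId": product_id,
            "ProvisioningArtifactId": artifact_id,
            # Service Catalog expects parameters as a list of Key/Value pairs.
            "ProvisioningParameters": [
                {"Key": key, "Value": value}
                for key, value in template_parameters.items()
            ],
        },
    }


# Hypothetical identifiers, for illustration only.
request = deployment_project_request(
    "churn-deploy", "prod-examplexample", "pa-examplexample",
    "churn-models", "222233334444", "333344445555",
)
# With boto3: boto3.client("sagemaker").create_project(**request)
```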

The pipeline for deployment is ready. The ML engineer reviews the newly promoted model in the ML Shared Services Account. If the ML engineer approves the model, it triggers the deployment pipeline. You can see the pipeline on the CodePipeline console.

The pipeline first deploys the model to the test account, and then pauses for manual approval before deploying to the production account. The ML engineer can test the performance and the governance officer can validate the model results in the test account. If the results are satisfactory, the governance officer can approve the deployment in CodePipeline to deploy the model to the production account.
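The manual approval can be given on the CodePipeline console or through the API. The following is a minimal sketch, assuming a boto3 CodePipeline client, that finds the pending approval token in a `get_pipeline_state` response and builds the arguments for `put_approval_result`. The pipeline, stage, and action names are hypothetical; the names in the deployed pipeline depend on the project template.

```python
def find_approval_token(pipeline_state, stage_name, action_name):
    """Return the token of the pending approval action, or None if not found."""
    for stage in pipeline_state.get("stageStates", []):
        if stage.get("stageName") != stage_name:
            continue
        for action in stage.get("actionStates", []):
            if action.get("actionName") == action_name:
                return action.get("latestExecution", {}).get("token")
    return None


def approval_result_request(pipeline_name, stage_name, action_name,
                            token, approve, summary):
    """Arguments for CodePipeline put_approval_result."""
    return {
        "pipelineName": pipeline_name,
        "stageName": stage_name,
        "actionName": action_name,
        "result": {
            "summary": summary,
            "status": "Approved" if approve else "Rejected",
        },
        "token": token,
    }


# Example (requires boto3 and credentials; names are placeholders):
#   import boto3
#   cp = boto3.client("codepipeline")
#   state = cp.get_pipeline_state(name="model-deploy-pipeline")
#   token = find_approval_token(state, "ApproveProd", "ManualApproval")
#   cp.put_approval_result(**approval_result_request(
#       "model-deploy-pipeline", "ApproveProd", "ManualApproval",
#       token, True, "Validated in the test account"))
```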

Conclusion

This post provided detailed steps for setting up the key components of a multi-account ML platform. This includes configuring the ML Shared Services Account, which manages the central templates, model registry, and deployment pipelines; sharing the ML Admin and SageMaker Projects Portfolios from the central Service Catalog; and setting up the individual ML Development Accounts where data scientists can build and train models.

The post also covered the process of running ML experiments using the SageMaker Projects templates, as well as the model approval and deployment workflows. Data scientists can use the standardized templates to speed up their model development, and ML engineers and stakeholders can review, test, and approve the new models before promoting them to production.

This multi-account ML platform design follows a federated model, with a centralized ML Shared Services Account providing governance and reusable components, and a set of development accounts managed by individual lines of business. This approach gives data science teams the autonomy they need to innovate, while providing enterprise-wide security, governance, and collaboration.

We encourage you to test this solution by following the AWS Multi-Account Data & ML Governance Workshop to see the platform in action and learn how to implement it in your own organization.

About the authors

Jia (Vivian) Li is a Senior Solutions Architect at AWS, with specialization in AI/ML. She currently supports customers in the financial industry. Prior to joining AWS in 2022, she had 7 years of experience helping enterprise customers use AI/ML in the cloud to drive business outcomes. Vivian has a BS from Peking University and a PhD from the University of Southern California. In her spare time, she enjoys all kinds of water activities and hiking in the beautiful mountains of her home state, Colorado.

Ram Vittal is a Principal ML Solutions Architect at AWS. He has over 3 decades of experience architecting and building distributed, hybrid, and cloud applications. He is passionate about building secure, scalable, reliable AI/ML and big data solutions to help enterprise customers with their cloud adoption and optimization journey to improve their business outcomes. In his spare time, he enjoys riding his motorcycle and walking with his dog.

Dr. Alessandro Cerè is a GenAI Evaluation Specialist and Solutions Architect at AWS. He assists customers across industries and regions in operationalizing and governing their generative AI systems at scale, ensuring they meet the highest standards of performance, safety, and ethical considerations. Bringing a unique perspective to the field of AI, Alessandro has a background in quantum physics and research experience in quantum communications and quantum memories. In his spare time, he pursues his passion for landscape and underwater photography.

Alberto Menendez is a DevOps Consultant in Professional Services at AWS. He helps accelerate customers’ journeys to the cloud and achieve their digital transformation goals. In his free time, he enjoys playing sports, especially basketball and padel, spending time with family and friends, and learning about technology.

Sovik Kumar Nath is an AI/ML and Generative AI senior solution architect with AWS. He has extensive experience designing end-to-end machine learning and business analytics solutions in finance, operations, marketing, healthcare, supply chain management, and IoT. He has double masters degrees from the University of South Florida and the University of Fribourg, Switzerland, and a bachelors degree from the Indian Institute of Technology, Kharagpur. Outside of work, Sovik enjoys traveling, taking ferry rides, and watching movies.

Viktor Malesevic is a Senior Machine Learning Engineer within AWS Professional Services, leading teams to build advanced machine learning solutions in the cloud. He’s passionate about making AI impactful, overseeing the entire process from modeling to production. In his spare time, he enjoys surfing, cycling, and traveling.
