Data is the lifeblood of modern applications, driving everything from application testing to machine learning (ML) model training and evaluation. As data demands continue to surge, the emergence of generative AI models presents an innovative solution. These large language models (LLMs), trained on expansive data corpora, possess the remarkable capability to generate new content across multiple media formats (text, audio, and video) and across various business domains, based on provided prompts and inputs.

In this post, we explore how you can use these LLMs with advanced Retrieval Augmented Generation (RAG) to generate high-quality synthetic data for a finance domain use case. You can use the same technique to generate synthetic data for other business domain use cases as well. For this post, we demonstrate how to generate counterparty risk (CR) data, which would be beneficial for over-the-counter (OTC) derivatives that are traded directly between two parties, without going through a formal exchange.

Solution overview

OTC derivatives are typically customized contracts between counterparties and include a variety of financial instruments, such as forwards, options, swaps, and other structured products. A counterparty is the other party involved in a financial transaction. In the context of OTC derivatives, the counterparty refers to the entity (such as a bank, financial institution, corporation, or individual) with whom a derivative contract is made.

For example, in an OTC swap or option contract, one entity agrees to terms with another party, and each entity becomes the counterparty to the other. The responsibilities, obligations, and risks (such as credit risk) are shared between these two entities according to the contract.

As financial institutions continue to navigate the complex landscape of CR, the need for accurate and reliable risk assessment models has become paramount. For our use case, ABC Bank, a fictional financial services organization, has taken on the challenge of developing an ML model to assess the risk of a given counterparty based on their exposure to OTC derivative data.

Building such a model presents numerous challenges. Although ABC Bank has gathered a large dataset from various sources and in different formats, the data may be biased, skewed, or lack the diversity needed to train a highly accurate model. The primary challenge lies in collecting and preprocessing the data to make it suitable for training an ML model. Deploying a poorly suited model could result in misinformed decisions and significant financial losses.

We propose a generative AI solution that uses the RAG approach. RAG is a widely used approach that enhances LLMs by supplying additional information from external data sources not included in their original training. The entire solution can be broadly divided into three steps: indexing, data generation, and validation.

Data indexing

In the indexing step, we parse, chunk, and convert the representative CR data into vector format using the Amazon Titan Text Embeddings V2 model and store this information in a Chroma vector database. Chroma is an open source vector database known for its ease of use, efficient similarity search, and support for multimodal data and metadata. It offers both in-memory and persistent storage options, integrates well with popular ML frameworks, and is suitable for a wide range of AI applications. It’s particularly beneficial for small to medium-sized datasets and projects requiring local deployment or low resource usage. The following diagram illustrates this architecture.

Here are the steps for data indexing:

- The sample CR data is segmented into smaller, manageable chunks to optimize it for embedding generation.

- These segmented data chunks are then passed to a method responsible for both generating embeddings and storing them efficiently.

- The Amazon Titan Text Embeddings V2 API is called to generate high-quality embeddings from the prepared data chunks.

- The resulting embeddings are then stored in the Chroma vector database, providing efficient retrieval and similarity searches for future use.
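The indexing steps above can be sketched without any cloud dependencies. The following is a minimal illustration, not the notebook's code: `toy_embed` is a hypothetical stand-in for the Amazon Titan Text Embeddings V2 call (it only builds normalized word counts), `InMemoryIndex` is a hypothetical stand-in for the Chroma collection, and the sample text is made up. Only the chunk, embed, store, and search flow mirrors the solution.

```python
import math
from collections import Counter


def chunk_text(text, chunk_size):
    """Split text into chunks of at most chunk_size characters, breaking on spaces."""
    chunks, current = [], ""
    for word in text.split():
        candidate = f"{current} {word}".strip()
        if len(candidate) > chunk_size and current:
            chunks.append(current)
            current = word
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


def toy_embed(text):
    """Hypothetical stand-in for an embedding model: L2-normalized word counts."""
    counts = Counter(text.lower().split())
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {word: c / norm for word, c in counts.items()}


class InMemoryIndex:
    """Hypothetical stand-in for a vector store: keeps (embedding, chunk) pairs."""

    def __init__(self):
        self.entries = []

    def add(self, chunks):
        for chunk in chunks:
            self.entries.append((toy_embed(chunk), chunk))

    def similarity_search(self, query, k=1):
        query_vec = toy_embed(query)
        scored = []
        for vec, chunk in self.entries:
            # Vectors are normalized, so the dot product is the cosine similarity.
            score = sum(w * vec.get(word, 0.0) for word, w in query_vec.items())
            scored.append((score, chunk))
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [chunk for _, chunk in scored[:k]]


# Made-up sample text for illustration only.
sample = (
    "counterparty alpha has high exposure to interest rate swaps "
    "counterparty beta trades currency forwards with low settlement risk"
)
index = InMemoryIndex()
index.add(chunk_text(sample, chunk_size=70))
top_match = index.similarity_search("currency forwards settlement risk", k=1)[0]
print(top_match)
```

A real embedding model captures meaning rather than exact word overlap, so this toy version is useful only for seeing how the pieces connect.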

Data generation

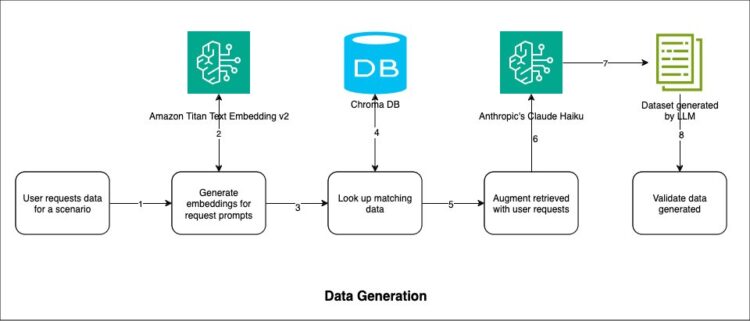

When the user requests data for a certain scenario, the request is converted into vector format and then looked up in the Chroma database to find matches with the stored data. The retrieved data is combined with the user request and additional prompts, and then passed to Anthropic’s Claude Haiku on Amazon Bedrock. Anthropic’s Claude Haiku was chosen primarily for its speed, processing over 21,000 tokens per second, which significantly outpaces its peers. Moreover, Anthropic’s Claude Haiku’s efficiency in data generation is remarkable, with a 1:5 input-to-output token ratio. This means it can generate a large volume of data from a relatively small amount of input or context. This capability not only enhances the model’s effectiveness, but also makes it cost-efficient for our application, where we need to generate numerous data samples from a limited set of examples. Anthropic’s Claude Haiku LLM is invoked iteratively to efficiently manage token consumption and help prevent reaching the maximum token limit. The following diagram illustrates this workflow.
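Iterative invocation amounts to splitting the requested record count into batches small enough that each response stays under the model's output limit. The sketch below shows one way to plan those batches; `plan_batches` and the numbers used are illustrative assumptions, not values taken from the notebook.

```python
def plan_batches(total_records, batch_size):
    """Return (start_index, count) pairs that cover total_records in order."""
    if total_records < 0 or batch_size <= 0:
        raise ValueError("total_records must be >= 0 and batch_size must be > 0")
    batches = []
    start_index = 0
    while start_index < total_records:
        # The final batch may be smaller than batch_size.
        count = min(batch_size, total_records - start_index)
        batches.append((start_index, count))
        start_index += count
    return batches


# Example: 25 records in batches of 10 need three model calls.
batches = plan_batches(total_records=25, batch_size=10)
print(batches)
```

Each pair maps to one model call: the start index tells the model where to continue, and the count bounds the size of the response.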

Here are the steps for data generation:

- The user initiates a request to generate new synthetic counterparty risk data based on specific criteria.

- The Amazon Titan Text Embeddings V2 model is employed to create embeddings for the user’s request prompts, transforming them into a machine-interpretable format.

- These newly generated embeddings are then forwarded to a specialized module designed to identify matching stored data.

- The Chroma vector database, which houses previously stored embeddings, is queried to find data that closely matches the user’s request.

- The identified matching data and the original user prompts are then passed to a module responsible for generating new synthetic data.

- Anthropic’s Claude 3 Haiku model is invoked, using both the matching data and user prompts as input to create high-quality synthetic data.

- The generated synthetic data is then parsed and formatted into a .csv file using the Pydantic library, providing a structured and validated output.

- To verify the quality of the generated data, several statistical methods are applied, including quantile-quantile (Q-Q) plots and correlation heat maps of key attributes, providing a comprehensive validation process.
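The generation steps above can be traced end to end with a stubbed model. In the following sketch, `fake_llm` is a hypothetical placeholder for the Amazon Bedrock call, the `cp_name` field and all values are invented for illustration, and plain dictionaries stand in for the Pydantic models used in the notebook. It shows only the flow: combine the retrieved context with the request, extract the JSON from the response, and write the records as CSV.

```python
import csv
import io
import json


def build_prompt(scenario, context_chunks, count):
    """Combine the user's scenario with retrieved examples into one prompt."""
    context = json.dumps(context_chunks)
    return (
        f"Generate {count} records for the scenario: {scenario}\n"
        f"Use these examples for reference only: {context}\n"
        "Return only JSON."
    )


def fake_llm(prompt):
    """Hypothetical stand-in for the model call; returns JSON wrapped in extra text."""
    return (
        'Here is the data: {"records": ['
        '{"cp_name": "company-1", "cp_exposure": 120.5}, '
        '{"cp_name": "company-2", "cp_exposure": 80.0}]} Done.'
    )


def extract_json(text):
    """Parse the outermost JSON object, ignoring any text around it."""
    start = text.index("{")
    end = text.rindex("}") + 1
    return json.loads(text[start:end])


def records_to_csv(records):
    """Serialize a list of flat dictionaries to CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(records[0].keys()))
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


prompt = build_prompt("high exposure to swaps", ["example record"], count=2)
records = extract_json(fake_llm(prompt))["records"]
csv_text = records_to_csv(records)
print(csv_text)
```

Slicing from the first opening brace to the last closing brace tolerates stray text around the JSON, which is why the same idea appears in the custom parser later in this post.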

Data validation

When validating the synthetic CR data generated by the LLM, we employed Q-Q plots and correlation heat maps focusing on key attributes such as cp_exposure, cp_replacement_cost, and cp_settlement_risk. These statistical tools serve crucial roles in promoting the quality and representativeness of the synthetic data. By using the Q-Q plots, we can assess whether these attributes follow a normal distribution, which is often expected in many clinical and financial variables. By comparing the quantiles of our synthetic data against theoretical normal distributions, we can identify significant deviations that might indicate bias or unrealistic data generation.

Simultaneously, the correlation heat maps provide a visual representation of the relationships between these attributes and others in the dataset. This is particularly important because it helps verify that the LLM has maintained the complex interdependencies typically observed in real CR data. For instance, we would expect certain correlations between exposure and replacement cost, or between replacement cost and settlement risk. By making sure these correlations are preserved in our synthetic data, we can be more confident that analyses or models built on this data will yield insights that are applicable to real-world scenarios. This rigorous validation process helps to mitigate the risk of introducing artificial patterns or biases, thereby enhancing the reliability and utility of our synthetic CR dataset for subsequent research or modeling tasks.

We’ve created a Jupyter notebook containing three parts to implement the key components of the solution. We provide code snippets from the notebook for better understanding.

Prerequisites

To set up the solution and generate test data, you should have the following prerequisites:

- Python 3 must be installed on your machine

- We recommend installing an integrated development environment (IDE) that can run Jupyter notebooks

- You can also create a Jupyter notebook instance using Amazon SageMaker from the AWS console and develop the code there.

- You need an AWS account with access to Amazon Bedrock and the following models enabled (be careful not to share the AWS account credentials):

  - Amazon Titan Text Embeddings V2

  - Anthropic’s Claude 3 Haiku

Setup

Here are the steps to set up the environment.

    import sys
    !{sys.executable} -m pip install -r requirements.txt

The content of requirements.txt is given here.

    boto3
    langchain
    langchain-community
    streamlit
    chromadb==0.4.15
    numpy
    jq
    langchain-aws
    seaborn
    matplotlib
    scipy

The following code snippet performs all the necessary imports.

    from pprint import pprint
    from uuid import uuid4
    import chromadb
    from langchain_community.document_loaders import JSONLoader
    from langchain_community.embeddings import BedrockEmbeddings
    from langchain_community.vectorstores import Chroma
    from langchain_text_splitters import RecursiveCharacterTextSplitter

Index data in the Chroma database

In this section, we show how data is indexed in a Chroma database, which serves as a locally maintained open source vector store. This indexed data is used as context for generating data.

The following code snippet shows the preprocessing steps of loading the JSON data from a file and splitting it into smaller chunks:

    def load_using_jsonloaer(path):
        # Load every element of the top-level JSON array as a separate document
        loader = JSONLoader(path,
                            jq_schema=".[]",
                            text_content=False)
        documents = loader.load()
        return documents

    def split_documents(documents):
        doc_list = [item for item in documents]
        # Split into chunks of at most 1,200 characters with no overlap
        text_splitter = RecursiveCharacterTextSplitter(chunk_size=1200, chunk_overlap=0)
        texts = text_splitter.split_documents(doc_list)
        return texts

The following snippet shows how an Amazon Bedrock embedding instance is created. We used the Amazon Titan Text Embeddings V2 model:

    def get_bedrock_embeddings():
        aws_region = "us-east-1"
        model_id = "amazon.titan-embed-text-v2:0"  # look for the latest version of the model
        bedrock_embeddings = BedrockEmbeddings(model_id=model_id, region_name=aws_region)
        return bedrock_embeddings

The following code shows how the embeddings are created and then loaded in the Chroma database:

    persistent_client = chromadb.PersistentClient(path="../data/chroma_index")
    collection = persistent_client.get_or_create_collection("test_124")
    print(collection)

    # Wrap the persistent collection in a LangChain vector store
    vector_store_with_persistent_client = Chroma(collection_name="test_124",
                                                 persist_directory="../data/chroma_index",
                                                 embedding_function=get_bedrock_embeddings(),
                                                 client=persistent_client)

    # load_json_and_index is defined in the notebook and is not shown in this post
    load_json_and_index(vector_store_with_persistent_client)

Generate data

The following code snippet shows the configuration used during the LLM invocation using Amazon Bedrock APIs. The LLM used is Anthropic’s Claude 3 Haiku:

    import boto3
    from botocore.config import Config
    from langchain_aws import ChatBedrock

    config = Config(
        region_name="us-east-1",
        signature_version='v4',
        retries={
            'max_attempts': 2,
            'mode': 'standard'
        }
    )
    bedrock_runtime = boto3.client('bedrock-runtime', config=config)
    model_id = "anthropic.claude-3-haiku-20240307-v1:0"  # look for the latest version of the model
    model_kwrgs = {
        "temperature": 0,
        "max_tokens": 8000,
        "top_p": 1.0,
        "top_k": 25,
        "stop_sequences": ["company-1000"],
    }

    # Initialize the language model
    llm = ChatBedrock(
        model_id=model_id,
        model_kwargs=model_kwrgs,
        client=bedrock_runtime,
    )

The following code shows how the context is fetched by looking up the Chroma database (where the data was indexed) for matching embeddings. We use the same Amazon Titan model to generate the embeddings:

    import json

    def get_context(scenario):
        region_name = "us-east-1"
        credential_profile_name = "default"
        titan_model_id = "amazon.titan-embed-text-v2:0"
        kb_context = []
        be = BedrockEmbeddings(region_name=region_name,
                               credentials_profile_name=credential_profile_name,
                               model_id=titan_model_id)
        vector_store = Chroma(collection_name="test_124", persist_directory="../data/chroma_index",
                              embedding_function=be)
        # Retrieve the three stored chunks most similar to the scenario
        search_results = vector_store.similarity_search(scenario, k=3)
        for doc in search_results:
            kb_context.append(doc.page_content)
        return json.dumps(kb_context)

The following snippet shows how we formulated the detailed prompt that was passed to the LLM. We provided examples for the context, scenario, start index, end index, record count, and other parameters. The prompt is subjective and can be adjusted for experimentation.

    from langchain_core.prompts import ChatPromptTemplate

    # Create a prompt template
    prompt_template = ChatPromptTemplate.from_template(
        "You are a financial data expert tasked with generating records "
        "representing company OTC derivative data and "
        "should be good enough for investor and lending ML model to take decisions "
        "and data should accurately represent the scenario: {scenario} \n "
        "and as per examples given in context: "
        "and context is {context} "
        "the examples given in context is for reference only, do not use same values while generating dataset. "
        "generate dataset with the diverse set of samples but record should be able to represent the given scenario accurately. "
        "Please ensure that the generated data meets the following criteria: "
        "The data should be diverse and realistic, reflecting various industries, "
        "company sizes, financial metrics. "
        "Ensure that the generated data follows logical relationships and correlations between features "
        "(e.g., higher revenue typically corresponds to more employees, "
        "better credit ratings, and lower risk). "
        "And Generate {count} records starting from index {start_index}. "
        "generate just JSON as per schema and do not include any text or message before or after JSON. "
        "{format_instruction} \n"
        "If continuing, start after this record: {last_record}\n"
        "If stopping, do not include this record in the output. "
        "Please ensure that the generated data is well-formatted and consistent."
    )

The following code snippet shows the process for generating the synthetic data. You can call this method iteratively to generate more records. The input parameters include scenario, context, count, start_index, and last_record. The response data is formatted into CSV format using the instructions provided by the following:

output_parser.get_format_instructions():

    def generate_records(start_index, count, scenario, context, last_record=""):
        try:
            response = chain.invoke({
                "count": count,
                "start_index": start_index,
                "scenario": scenario,
                "context": context,
                "last_record": last_record,
                "format_instruction": output_parser.get_format_instructions(),
                "data_set_class_schema": DataSet.schema_json()
            })
            return response
        except Exception as e:
            print(f"Error in generate_records: {e}")
            raise

Parsing the output generated by the LLM and representing it in CSV was quite challenging. We used a Pydantic parser to parse the JSON output generated by the LLM, as shown in the following code snippet:

    class CustomPydanticOutputParser(PydanticOutputParser):
        def parse(self, text: str) -> BaseModel:
            # Extract JSON from the text
            try:
                # Find the first occurrence of '{'
                start = text.index('{')
                # Find the last occurrence of '}'
                end = text.rindex('}') + 1
                json_str = text[start:end]
                # Parse the JSON string
                parsed_json = json.loads(json_str)
                # Use the parent class to convert to a Pydantic object
                return super().parse_with_cls(parsed_json)
            except (ValueError, json.JSONDecodeError) as e:
                raise ValueError(f"Failed to parse output: {e}") from e

The following code snippet shows how the records are generated iteratively, with 10 records in each invocation to the LLM:

    import time

    def generate_full_dataset(total_records, batch_size, scenario, context):
        dataset = []
        total_generated = 0
        last_record = ""
        batch: DataSet = generate_records(total_generated,
                                          min(batch_size, total_records - total_generated),
                                          scenario, context, last_record)
        # print(f"batch: {type(batch)}")
        total_generated = len(batch.records)
        dataset.extend(batch.records)
        while total_generated < total_records:
            try:
                # Continue after the last record generated so far; reading it from
                # dataset keeps the retry path working after an empty batch
                last_record = dataset[-1].json() if dataset else ""
                batch = generate_records(total_generated,
                                         min(batch_size, total_records - total_generated),
                                         scenario, context, last_record)
                processed_batch = batch.records
                if processed_batch:
                    dataset.extend(processed_batch)
                    total_generated += len(processed_batch)
                    print(f"Generated {total_generated} records.")
                else:
                    print("Generated an empty or invalid batch. Retrying...")
                    time.sleep(10)
            except Exception as e:
                print(f"Error occurred: {e}. Retrying...")
                time.sleep(5)
        return dataset[:total_records]  # Ensure exactly the requested number of records

Verify the statistical properties of the generated data

We generated Q-Q plots for key attributes of the generated data: cp_exposure, cp_replacement_cost, and cp_settlement_risk, as shown in the following screenshots. The Q-Q plots compare the quantiles of the data distribution with the quantiles of a normal distribution. If the data isn’t skewed, the points should approximately follow the diagonal line.
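To make the Q-Q comparison concrete, the following sketch computes the points a Q-Q plot would draw, using only the Python standard library. The function name `qq_points`, the plotting positions (i - 0.5) / n, and the sample values are assumptions chosen for illustration; they are not taken from the notebook.

```python
from statistics import NormalDist, mean, stdev


def qq_points(values):
    """Pair each sorted sample value with the matching normal quantile."""
    ordered = sorted(values)
    n = len(ordered)
    # Reference normal distribution with the sample's mean and standard deviation.
    reference = NormalDist(mu=mean(ordered), sigma=stdev(ordered))
    points = []
    for i, observed in enumerate(ordered, start=1):
        probability = (i - 0.5) / n  # plotting position strictly inside (0, 1)
        points.append((reference.inv_cdf(probability), observed))
    return points


# Illustrative values only, not data from this post.
cp_exposure = [98.0, 101.5, 99.2, 103.1, 100.4, 97.3, 102.2, 100.0]
points = qq_points(cp_exposure)
for theoretical, observed in points:
    print(f"{theoretical:8.2f} {observed:8.2f}")
```

When the two columns stay close to each other, the plotted points sit near the diagonal; a heavy tail or skew shows up as points that drift away from it at one end.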

As the next step of verification, we created a correlation heat map of the following attributes: cp_exposure, cp_replacement_cost, cp_settlement_risk, and risk. The plot is symmetric about the diagonal, and the diagonal elements show a value of 1, which indicates that each column is perfectly correlated with itself. The following screenshot is the correlation heat map.
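The numbers behind a correlation heat map are pairwise Pearson coefficients. The sketch below computes that matrix by hand for a few made-up rows; the values are illustrative assumptions, and in practice a library such as seaborn (listed in requirements.txt) would render the matrix as a heat map.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def correlation_matrix(columns):
    """Return {row_name: {col_name: r}} for every pair of columns."""
    names = list(columns)
    return {
        a: {b: pearson(columns[a], columns[b]) for b in names}
        for a in names
    }


# Made-up values for illustration only.
data = {
    "cp_exposure": [10.0, 20.0, 30.0, 40.0],
    "cp_replacement_cost": [1.0, 2.1, 2.9, 4.2],
    "cp_settlement_risk": [0.9, 0.7, 0.4, 0.2],
}
matrix = correlation_matrix(data)
for name, row in matrix.items():
    print(name, [round(r, 2) for r in row.values()])
```

The diagonal is 1 by construction, so the informative cells are the off-diagonal ones, which should have the sign and rough strength expected from real CR data.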

Clean up

It’s a best practice to clean up the resources you created as part of this post to prevent unnecessary costs and potential security risks from leaving resources running. If you created the Jupyter notebook instance in SageMaker, complete the following steps:

- Save and shut down the notebook:

      # First save your work
      # Then close all open notebooks by clicking File -> Close and Halt

- Clear the output (if needed before saving):

      # Option 1: Using the notebook menu
      # Kernel -> Restart & Clear Output
      # Option 2: Using code
      from IPython.display import clear_output
      clear_output()

- Stop and delete the Jupyter notebook instance created in SageMaker:

      # Option 1: Using the AWS CLI
      # Stop the notebook instance when not in use
      aws sagemaker stop-notebook-instance --notebook-instance-name
      # If you no longer need the notebook instance
      aws sagemaker delete-notebook-instance --notebook-instance-name
      # Option 2: Using the SageMaker console
      # Amazon SageMaker -> Notebooks
      # Select the notebook, choose Stop from the Actions drop-down, then choose Delete from the Actions drop-down

Responsible use of AI

Responsible AI use and data privacy are paramount when using AI in financial applications. Although synthetic data generation can be a powerful tool, it’s crucial to make sure that no real customer information is used without proper authorization and thorough anonymization. Organizations must prioritize data protection, implement robust security measures, and adhere to relevant regulations. Additionally, when developing and deploying AI models, it’s essential to consider ethical implications, potential biases, and the broader societal impact. Responsible AI practices include regular audits, transparency in decision-making processes, and ongoing monitoring to help prevent unintended consequences. By balancing innovation with ethical considerations, financial institutions can harness the benefits of AI while maintaining trust and protecting individual privacy.

Conclusion

In this post, we showed how to generate a well-balanced synthetic dataset representing various aspects of counterparty data, using RAG-based prompt engineering with LLMs. Counterparty data analysis is imperative for making OTC transactions between two counterparties. Because actual business data in this domain isn’t easily available, you can use this approach to generate synthetic training data for your ML models at minimal cost, often within minutes. After you train the model, you can use it to make intelligent decisions before entering into an OTC derivative transaction.

About the Authors

Santosh Kulkarni is a Senior Moderation Architect with over 16 years of experience, specialized in developing serverless, container-based, and data architectures for clients across various domains. Santosh’s expertise extends to machine learning, as a certified AWS ML specialist. He is currently engaged in multiple initiatives leveraging Amazon Bedrock and hosted foundation models.

Joyanta Banerjee is a Senior Modernization Architect with AWS ProServe and specializes in building secure and scalable cloud native applications for customers from different industry domains. He has developed an interest in the AI/ML space, particularly in leveraging the generative AI capabilities available on Amazon Bedrock.

Mallik Panchumarthy is a Senior Specialist Solutions Architect for generative AI and machine learning at AWS. Mallik works with customers to help them architect efficient, secure, and scalable AI and machine learning applications. Mallik specializes in the generative AI services Amazon Bedrock and Amazon SageMaker.
