As generative artificial intelligence (AI) continues to revolutionize every industry, effective prompt optimization through prompt engineering techniques has become key to efficiently balancing the quality of outputs, response time, and costs. Prompt engineering refers to the practice of crafting and optimizing inputs to the models by selecting appropriate words, phrases, sentences, punctuation, and separator characters to effectively use foundation models (FMs) or large language models (LLMs) for a wide variety of applications. A high-quality prompt maximizes the chances of getting a good response from the generative AI models.

A fundamental part of the optimization process is the evaluation, and there are multiple elements involved in the evaluation of a generative AI application. Beyond the most common evaluation of FMs, prompt evaluation is a critical, yet often challenging, aspect of developing high-quality AI-powered solutions. Many organizations struggle to consistently create and effectively evaluate their prompts across their various applications, leading to inconsistent performance and user experiences and undesired responses from the models.

In this post, we demonstrate how to implement an automated prompt evaluation system using Amazon Bedrock so you can streamline your prompt development process and improve the overall quality of your AI-generated content. For this, we use Amazon Bedrock Prompt Management and Amazon Bedrock Prompt Flows to systematically evaluate prompts for your generative AI applications at scale.

The importance of prompt evaluation

Before we explain the technical implementation, let’s briefly discuss why prompt evaluation is crucial. The key aspects to consider when building and optimizing a prompt are typically:

- Quality assurance – Evaluating prompts helps make sure that your AI applications consistently produce high-quality, relevant outputs for the selected model.

- Performance optimization – By identifying and refining effective prompts, you can improve the overall performance of your generative AI models in terms of lower latency and ultimately higher throughput.

- Cost efficiency – Better prompts can lead to more efficient use of AI resources, potentially reducing costs associated with model inference. A good prompt allows for the use of smaller and lower-cost models, which would not give good results with a poor-quality prompt.

- User experience – Improved prompts result in more accurate, personalized, and helpful AI-generated content, enhancing the end user experience in your applications.

Optimizing prompts for these aspects is an iterative process that requires an evaluation to drive the adjustments in the prompts. In other words, evaluation is a way to understand how good a given prompt and model combination is at achieving the desired answers.

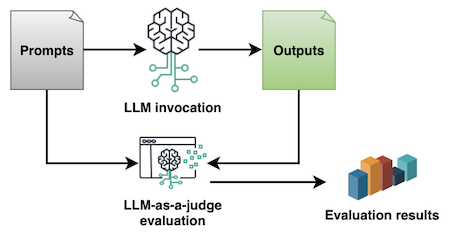

In our example, we implement a method known as LLM-as-a-judge, where an LLM is used for evaluating the prompts based on the answers they produced with a certain model, according to predefined criteria. The evaluation of prompts and their answers for a given LLM is a subjective task by nature, but a systematic prompt evaluation using LLM-as-a-judge allows you to quantify it with an evaluation metric expressed as a numerical score. This helps to standardize and automate the prompting lifecycle in your organization and is one of the reasons why this method is among the most common approaches for prompt evaluation in the industry.
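The two steps of LLM-as-a-judge can be sketched in plain Python. This is a minimal illustration, not the implementation used in this post: `judge_prompt`, `fake_generate`, and `fake_judge` are hypothetical names, and the stand-in functions replace real model calls so the sketch runs without any model access.

```python
from typing import Callable, Dict


def judge_prompt(
    prompt: str,
    generate: Callable[[str], str],
    judge: Callable[[str, str], float],
) -> Dict[str, object]:
    """Run one LLM-as-a-judge pass: generate an answer, then score the pair."""
    answer = generate(prompt)      # model under evaluation produces the answer
    score = judge(prompt, answer)  # judge model applies the predefined criteria
    return {"prompt": prompt, "answer": answer, "score": score}


# Stand-in functions (assumptions) so the sketch runs without model access.
def fake_generate(prompt: str) -> str:
    return f"Answer to: {prompt}"


def fake_judge(prompt: str, answer: str) -> float:
    # Toy criterion: full score when the answer echoes the prompt text.
    return 100.0 if prompt in answer else 0.0


result = judge_prompt("What is cloud computing?", fake_generate, fake_judge)
```

Keeping the generator and the judge as separate callables mirrors the idea that the model being evaluated and the model doing the evaluation can be chosen independently.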

Let’s explore a sample solution for evaluating prompts with LLM-as-a-judge with Amazon Bedrock. You can also find the complete code example in amazon-bedrock-samples.

Prerequisites

For this example, you need the following:

Set up the evaluation prompt

To create an evaluation prompt using Amazon Bedrock Prompt Management, follow these steps:

- On the Amazon Bedrock console, in the navigation pane, choose Prompt management and then choose Create prompt.

- Enter a Name for your prompt such as `prompt-evaluator` and a Description such as “Prompt template for evaluating prompt responses with LLM-as-a-judge.” Choose Create.

- In the Prompt field, write your prompt evaluation template. In the example, you can use a template like the following or adjust it according to your specific evaluation requirements.

- Under Configurations, select a model to use for running evaluations with the prompt. In our example we selected Anthropic Claude Sonnet. The quality of the evaluation will depend on the model you select in this step. Make sure you balance the quality, response time, and cost accordingly in your decision.

- Set the Inference parameters for the model. We recommend that you keep Temperature at 0 to make the evaluation factual and to avoid hallucinations.

  You can test your evaluation prompt with sample inputs and outputs using the Test variables and Test window panels.

- Now that you have a draft of your prompt, you can also create versions of it. Versions allow you to quickly switch between different configurations for your prompt and update your application with the most appropriate version for your use case. To create a version, choose Create version at the top.

The following screenshot shows the Prompt builder page.
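To see how an evaluation template with variables behaves before you paste it into the console, you can render it locally. The following is a minimal sketch under stated assumptions: the template wording in `EVAL_TEMPLATE` is hypothetical and is not the template from this post, and `render` is an illustrative helper rather than part of any Amazon Bedrock API. It only assumes the double-curly-brace variables `{{input}}` and `{{output}}` that the evaluation flow passes to the prompt.

```python
import re

# Hypothetical evaluation template; the wording is an assumption for illustration.
EVAL_TEMPLATE = (
    "You are evaluating a prompt and the answer a model produced for it.\n"
    "Prompt: {{input}}\n"
    "Answer: {{output}}\n"
    "Return a JSON object with a score and a justification."
)


def render(template, variables):
    """Replace each {{name}} placeholder with its value; fail on missing names."""

    def substitute(match):
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"missing template variable: {name}")
        return str(variables[name])

    return re.sub(r"\{\{\s*(\w+)\s*\}\}", substitute, template)


rendered = render(
    EVAL_TEMPLATE,
    {"input": "What is cloud computing?", "output": "On-demand IT resources."},
)
```

Failing loudly on a missing variable is a deliberate choice here: a silently unfilled placeholder would reach the judge model as literal text and distort the score.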

Set up the evaluation flow

Next, you need to build an evaluation flow using Amazon Bedrock Prompt Flows. In our example, we use prompt nodes. For more information on the types of nodes supported, check the Node types in prompt flow documentation. To build an evaluation flow, follow these steps:

- On the Amazon Bedrock console, under Prompt flows, choose Create prompt flow.

- Enter a Name such as `prompt-eval-flow`. Enter a Description such as “Prompt Flow for evaluating prompts with LLM-as-a-judge.” Choose Use an existing service role to select a role from the dropdown. Choose Create.

- This will open the Prompt flow builder. Drag two Prompts nodes to the canvas and configure the nodes as per the following parameters:

  - Flow input
    - Output:
      - Name: `document`, Type: String
  - Invoke (Prompts)
    - Node name: `Invoke`
    - Define in node
    - Select model: A preferred model to be evaluated with your prompts
    - Message: `{{input}}`
    - Inference configurations: As per your preferences
    - Input:
      - Name: `input`, Type: String, Expression: `$.data`
    - Output:
      - Name: `modelCompletion`, Type: String
  - Evaluate (Prompts)
    - Node name: `Evaluate`
    - Use a prompt from your Prompt Management
    - Prompt: `prompt-evaluator`
    - Version: Version 1 (or your preferred version)
    - Select model: Your preferred model to evaluate your prompts with
    - Inference configurations: As set in your prompt
    - Input:
      - Name: `input`, Type: String, Expression: `$.data`
      - Name: `output`, Type: String, Expression: `$.data`
    - Output:
      - Name: `modelCompletion`, Type: String
  - Flow output
    - Node name: `End`
    - Input:
      - Name: `document`, Type: String, Expression: `$.data`

- To connect the nodes, drag the connecting dots, as shown in the following diagram.

You can test your prompt evaluation flow by using the Test prompt flow panel. Pass an input, such as the question, “What is cloud computing in a single paragraph?” It should return a JSON with the result of the evaluation similar to the following example. In the code example notebook, amazon-bedrock-samples, we also added information about the models used for invocation and evaluation to our result JSON.

As the example shows, we asked the FM to evaluate, with separate scores, the prompt and the answer the FM generated from that prompt. We asked it to provide a justification for the score and some recommendations to further improve the prompts. All this information is valuable for a prompt engineer because it helps guide the optimization experiments and supports more informed decisions during the prompt life cycle.
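Because the judge returns its evaluation as JSON text, downstream tooling usually needs to parse and validate it. The following is a minimal sketch, assuming hypothetical field names (`prompt-score`, `answer-score`, `justification`, `prompt-recommendations`); adjust them to whatever keys your own evaluation template asks the model to return.

```python
import json
from dataclasses import dataclass


@dataclass
class Evaluation:
    prompt_score: float
    answer_score: float
    justification: str
    recommendations: str


def parse_evaluation(raw: str) -> Evaluation:
    """Parse the JSON object spanning the outermost braces of the judge's reply."""
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in judge output")
    data = json.loads(raw[start : end + 1])
    # Field names below are assumptions; a missing key raises KeyError.
    return Evaluation(
        prompt_score=float(data["prompt-score"]),
        answer_score=float(data["answer-score"]),
        justification=str(data["justification"]),
        recommendations=str(data["prompt-recommendations"]),
    )


# Made-up judge reply, used only to exercise the parser.
sample = (
    'Here is my evaluation: {"prompt-score": 80, "answer-score": 90, '
    '"justification": "Clear question.", '
    '"prompt-recommendations": "Specify the audience."}'
)
evaluation = parse_evaluation(sample)
```

Slicing between the outermost braces tolerates a model that adds a sentence before or after the JSON, which can happen even at temperature 0.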

Implementing prompt evaluation at scale

So far, we’ve explored how to evaluate a single prompt. Often, medium to large organizations work with tens, hundreds, or even thousands of prompt variations for their multiple applications, making it a perfect opportunity for automation at scale. For this, you can run the flow on full datasets of prompts stored in files, as shown in the example notebook.
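A file-based batch run can be sketched as a simple loop. This is an illustration under stated assumptions, not the notebook's code: the one-prompt-per-line file format, `load_prompts`, `evaluate_dataset`, and `stub_flow` are all hypothetical. In practice, the `run_flow` callable would wrap a call to your evaluation flow; here a stub stands in so the sketch runs offline.

```python
import tempfile
from pathlib import Path
from typing import Callable, Dict, List


def load_prompts(path: Path) -> List[str]:
    """Read one prompt per non-empty line (assumed file format)."""
    lines = path.read_text(encoding="utf-8").splitlines()
    return [line.strip() for line in lines if line.strip()]


def evaluate_dataset(prompts: List[str], run_flow: Callable[[str], dict]) -> List[Dict]:
    """Run the flow on every prompt, recording failures instead of stopping."""
    results = []
    for prompt in prompts:
        try:
            results.append({"prompt": prompt, "result": run_flow(prompt), "error": None})
        except Exception as exc:  # one failed prompt should not abort the batch
            results.append({"prompt": prompt, "result": None, "error": str(exc)})
    return results


# Stub standing in for the real evaluation flow (assumption).
def stub_flow(prompt: str) -> dict:
    if "fail" in prompt:
        raise RuntimeError("simulated flow error")
    return {"prompt-score": min(100, 10 * len(prompt.split()))}


with tempfile.TemporaryDirectory() as tmp:
    dataset = Path(tmp) / "prompts.txt"
    dataset.write_text("What is cloud computing?\n\nfail on purpose\n", encoding="utf-8")
    batch = evaluate_dataset(load_prompts(dataset), stub_flow)
```

Recording errors per prompt keeps a long batch useful even when a few invocations are throttled or time out.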

Alternatively, you can also rely on other node types in Amazon Bedrock Prompt Flows for reading from and storing to Amazon Simple Storage Service (Amazon S3) files and implementing iterator and collector based flows. The following diagram shows this type of flow. Once you have established a file-based mechanism for running the prompt evaluation flow on datasets at scale, you can also automate the whole process by connecting it to your preferred continuous integration and continuous delivery (CI/CD) tools. The details for these are out of the scope of this post.

Best practices and recommendations

Based on our evaluation process, here are some best practices for prompt refinement:

- Iterative improvement – Use the evaluation feedback to continuously refine your prompts. Prompt optimization is ultimately an iterative process.

- Context is key – Make sure your prompts provide sufficient context for the AI model to generate accurate responses. Depending on the complexity of the tasks or questions that your prompt will answer, you might need to use different prompt engineering techniques. You can check the Prompt engineering guidelines in the Amazon Bedrock documentation and other resources on the topic provided by the model providers.

- Specificity matters – Be as specific as possible in your prompts and evaluation criteria. Specificity guides the models towards desired outputs.

- Test edge cases – Evaluate your prompts with a variety of inputs to verify robustness. You might also want to run multiple evaluations on the same prompt to compare and test output consistency, which might be important depending on your use case.
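The consistency check mentioned in the last practice can be sketched as a small summary over repeated scores for the same prompt. This is a minimal illustration: `consistency_report` and the `max_spread` threshold of 5 points are assumptions, and the scores are made-up values rather than results from this post.

```python
from statistics import mean, pstdev
from typing import Dict, Sequence


def consistency_report(scores: Sequence[float], max_spread: float = 5.0) -> Dict[str, object]:
    """Summarize repeated scores for one prompt and flag unstable results."""
    if not scores:
        raise ValueError("at least one score is required")
    spread = pstdev(scores)  # population standard deviation across the runs
    return {
        "mean": mean(scores),
        "stdev": spread,
        "consistent": spread <= max_spread,  # threshold is an assumed tolerance
    }


# Made-up scores from four hypothetical evaluation runs of the same prompt.
report = consistency_report([90, 92, 88, 90])
unstable = consistency_report([60, 95, 80])
```

A prompt whose scores vary widely across runs is a candidate for more specific wording or tighter evaluation criteria before you compare it against other variants.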

Conclusion and next steps

By using the LLM-as-a-judge method with Amazon Bedrock Prompt Management and Amazon Bedrock Prompt Flows, you can implement a systematic approach to prompt evaluation and optimization. This not only improves the quality and consistency of your AI-generated content but also streamlines your development process, potentially reducing costs and improving user experiences.

We encourage you to explore these features further and adapt the evaluation process to your specific use cases. As you continue to refine your prompts, you’ll be able to unlock the full potential of generative AI in your applications. To get started, check out the full notebook with the code samples used in this post. We’re excited to see how you’ll use these tools to enhance your AI-powered solutions!

For more information on Amazon Bedrock and its features, visit the Amazon Bedrock documentation.

About the Author

Antonio Rodriguez is a Sr. Generative AI Specialist Solutions Architect at Amazon Web Services. He helps companies of all sizes solve their challenges, embrace innovation, and create new business opportunities with Amazon Bedrock. Apart from work, he loves to spend time with his family and play sports with his friends.