This weblog put up is co-written with Renuka Kumar and Thomas Matthew from Cisco.

Enterprise knowledge by its very nature spans various knowledge domains, akin to safety, finance, product, and HR. Information throughout these domains is commonly maintained throughout disparate knowledge environments (akin to Amazon Aurora, Oracle, and Teradata), with every managing lots of or maybe hundreds of tables to symbolize and persist enterprise knowledge. These tables home advanced domain-specific schemas, with situations of nested tables and multi-dimensional knowledge that require advanced database queries and domain-specific information for knowledge retrieval.

Current advances in generative AI have led to the speedy evolution of pure language to SQL (NL2SQL) know-how, which makes use of pre-trained massive language fashions (LLMs) and pure language to generate database queries within the second. Though this know-how guarantees simplicity and ease of use for knowledge entry, changing pure language queries to advanced database queries with accuracy and at enterprise scale has remained a big problem. For enterprise knowledge, a serious problem stems from the widespread case of database tables having embedded constructions that require particular information or extremely nuanced processing (for instance, an embedded XML formatted string). Because of this, NL2SQL options for enterprise knowledge are sometimes incomplete or inaccurate.

This put up describes a sample that AWS and Cisco groups have developed and deployed that’s viable at scale and addresses a broad set of difficult enterprise use circumstances. The methodology permits for the usage of easier, and due to this fact more cost effective and decrease latency, generative fashions by lowering the processing required for SQL technology.

Particular challenges for enterprise-scale NL2SQL

Generative accuracy is paramount for NL2SQL use circumstances; inaccurate SQL queries may end in a delicate enterprise knowledge leak, or result in inaccurate outcomes impacting essential enterprise choices. Enterprise-scale knowledge presents particular challenges for NL2SQL, together with the next:

- Advanced schemas optimized for storage (and never retrieval) – Enterprise databases are sometimes distributed in nature and optimized for storage and never for retrieval. Because of this, the desk schemas are advanced, involving nested tables and multi-dimensional knowledge constructions (for instance, a cell containing an array of information). As an extra outcome, creating queries for retrieval from these knowledge shops requires particular experience and entails advanced filtering and joins.

- Numerous and sophisticated pure language queries – The consumer’s pure language enter may also be advanced as a result of they may consult with a listing of entities of curiosity or date ranges. Changing the logical which means of those consumer queries right into a database question can result in overly lengthy and sophisticated SQL queries as a result of authentic design of the info schema.

- LLM information hole – NL2SQL language fashions are sometimes skilled on knowledge schemas which are publicly obtainable for schooling functions and won’t have the mandatory information complexity required of enormous, distributed databases in manufacturing environments. Consequently, when confronted with advanced enterprise desk schemas or advanced consumer queries, LLMs have problem producing appropriate question statements as a result of they’ve problem understanding interrelationships between the values and entities of the schema.

- LLM consideration burden and latency – Queries containing multi-dimensional knowledge typically contain multi-level filtering over every cell of the info. To generate queries for circumstances akin to these, the generative mannequin requires extra consideration to assist attending to the rise in related tables, columns, and values; analyzing the patterns; and producing extra tokens. This will increase the LLM’s question technology latency, and the probability of question technology errors, due to the LLM misunderstanding knowledge relationships and producing incorrect filter statements.

- Effective-tuning problem – One widespread method to realize larger accuracy with question technology is to fine-tune the mannequin with extra SQL question samples. Nevertheless, it’s non-trivial to craft coaching knowledge for producing SQL for embedded constructions inside columns (for instance, JSON, or XML), to deal with units of identifiers, and so forth, to get baseline efficiency (which is the issue we are attempting to resolve within the first place). This additionally introduces a slowdown within the improvement cycle.

Answer design and methodology

The answer described on this put up offers a set of optimizations that remedy the aforementioned challenges whereas lowering the quantity of labor that needs to be carried out by an LLM for producing correct output. This work extends upon the put up Producing worth from enterprise knowledge: Finest practices for Text2SQL and generative AI. That put up has many helpful suggestions for producing high-quality SQL, and the rules outlined could be ample in your wants, relying on the inherent complexity of the database schemas.

To attain generative accuracy for advanced eventualities, the answer breaks down NL2SQL technology right into a sequence of centered steps and sub-problems, narrowing the generative focus to the suitable knowledge area. Utilizing knowledge abstractions for advanced joins and knowledge construction, this method permits the usage of smaller and extra inexpensive LLMs for the duty. This method ends in lowered immediate dimension and complexity for inference, lowered response latency, and improved accuracy, whereas enabling the usage of off-the-shelf pre-trained fashions.

Narrowing scope to particular knowledge domains

The answer workflow narrows down the general schema area into the info area focused by the consumer’s question. Every knowledge area corresponds to the set of database knowledge constructions (tables, views, and so forth) which are generally used collectively to reply a set of associated consumer queries, for an software or enterprise area. The answer makes use of the info area to assemble immediate inputs for the generative LLM.

This sample consists of the next components:

- Mapping enter queries to domains – This entails mapping every consumer question to the info area that’s applicable for producing the response for NL2SQL at runtime. This mapping is analogous in nature to intent classification, and permits the development of an LLM immediate that’s scoped for every enter question (described subsequent).

- Scoping knowledge area for centered immediate building – This can be a divide-and-conquer sample. By specializing in the info area of the enter question, redundant data, akin to schemas for different knowledge domains within the enterprise knowledge retailer, will be excluded. This could be thought-about as a type of immediate pruning; nevertheless, it gives greater than immediate discount alone. Lowering the immediate context to the in-focus knowledge area permits larger scope for few-shot studying examples, declaration of particular enterprise guidelines, and extra.

- Augmenting SQL DDL definitions with metadata to reinforce LLM inference – This entails enhancing the LLM immediate context by augmenting the SQL DDL for the info area with descriptions of tables, columns, and guidelines for use by the LLM as steering on its technology. That is described in additional element later on this put up.

- Decide question dialect and connection data – For every knowledge area, the database server metadata (such because the SQL dialect and connection URI) is captured throughout use case onboarding and made obtainable at runtime to be mechanically included within the immediate for SQL technology and subsequent question execution. This allows scalability by means of decoupling the pure language question from the particular queried knowledge supply. Collectively, the SQL dialect and connectivity abstractions permit for the answer to be knowledge supply agnostic; knowledge sources could be distributed inside or throughout totally different clouds, or offered by totally different distributors. This modularity permits scalable addition of recent knowledge sources and knowledge domains, as a result of every is impartial.

Managing identifiers for SQL technology (useful resource IDs)

Resolving identifiers entails extracting the named sources, as named entities, from the consumer’s question and mapping the values to distinctive IDs applicable for the goal knowledge supply previous to NL2SQL technology. This may be applied utilizing pure language processing (NLP) or LLMs to use named entity recognition (NER) capabilities to drive the decision course of. This optionally available step has probably the most worth when there are lots of named sources and the lookup course of is advanced. For example, in a consumer question akin to “In what video games did Isabelle Werth, Nedo Nadi, and Allyson Felix compete?” there are named sources: ‘allyson felix’, ‘isabelle werth’, and ‘nedo nadi’. This step permits for speedy and exact suggestions to the consumer when a useful resource can’t be resolved to an identifier (for instance, as a consequence of ambiguity).

This optionally available technique of dealing with many or paired identifiers is included to dump the burden on LLMs for consumer queries with difficult units of identifiers to be integrated, akin to those who may are available pairs (akin to ID-type, ID-value), or the place there are lots of identifiers. Moderately than having the generative LLM insert every distinctive ID into the SQL straight, the identifiers are made obtainable by defining a brief knowledge construction (akin to a brief desk) and a set of corresponding insert statements. The LLM is prompted with few-shot studying examples to generate SQL for the consumer question by becoming a member of with the short-term knowledge construction, reasonably than try id injection. This ends in a less complicated and extra constant question sample for circumstances when there are one, many, or pairs of identifiers.

Dealing with advanced knowledge constructions: Abstracting area knowledge constructions

This step is geared toward simplifying advanced knowledge constructions right into a type that may be understood by the language mannequin with out having to decipher advanced inter-data relationships. Advanced knowledge constructions may seem as nested tables or lists inside a desk column, as an illustration.

We are able to outline short-term knowledge constructions (akin to views and tables) that summary advanced multi-table joins, nested constructions, and extra. These higher-level abstractions present simplified knowledge constructions for question technology and execution. The highest-level definitions of those abstractions are included as a part of the immediate context for question technology, and the complete definitions are offered to the SQL execution engine, together with the generated question. The ensuing queries from this course of can use easy set operations (akin to IN, versus advanced joins) that LLMs are effectively skilled on, thereby assuaging the necessity for nested joins and filters over advanced knowledge constructions.

Augmenting knowledge with knowledge definitions for immediate building

A number of of the optimizations famous earlier require making a number of the specifics of the info area specific. Luckily, this solely needs to be finished when schemas and use circumstances are onboarded or up to date. The profit is larger generative accuracy, lowered generative latency and value, and the power to assist arbitrarily advanced question necessities.

To seize the semantics of a knowledge area, the next components are outlined:

- The usual tables and views in knowledge schema, together with feedback to explain the tables and columns.

- Be a part of hints for the tables and views, akin to when to make use of outer joins.

- Information domain-specific guidelines, akin to which columns won’t seem in a last choose assertion.

- The set of few-shot examples of consumer queries and corresponding SQL statements. A very good set of examples would come with all kinds of consumer queries for that area.

- Definitions of the info schemas for any short-term tables and views used within the answer.

- A website-specific system immediate that specifies the position and experience that the LLM has, the SQL dialect, and the scope of its operation.

- A website-specific consumer immediate.

- Moreover, if short-term tables or views are used for the info area, a SQL script is required that, when executed, creates the specified short-term knowledge constructions must be outlined. Relying on the use case, this could be a static or dynamically generated script.

Accordingly, the immediate for producing the SQL is dynamic and constructed primarily based on the info area of the enter query, with a set of particular definitions of information construction and guidelines applicable for the enter question. We consult with this set of components because the knowledge area context. The aim of the info area context is to supply the mandatory immediate metadata for the generative LLM. Examples of this, and the strategies described within the earlier sections, are included within the GitHub repository. There may be one context for every knowledge area, as illustrated within the following determine.

Bringing all of it collectively: The execution move

This part describes the execution move of the answer. An instance implementation of this sample is out there within the GitHub repository. Entry the repository to comply with together with the code.

As an instance the execution move, we use an instance database with knowledge about Olympics statistics and one other with the corporate’s worker trip schedule. We comply with the execution move for the area relating to Olympics statistics utilizing the consumer question “In what video games did Isabelle Werth, Nedo Nadi, and Allyson Felix compete?” to point out the inputs and outputs of the steps within the execution move, as illustrated within the following determine.

Preprocess the request

Step one of the NL2SQL move is to preprocess the request. The principle goal of this step is to categorise the consumer question into a site. As defined earlier, this narrows down the scope of the issue to the suitable knowledge area for SQL technology. Moreover, this step identifies and extracts the referenced named sources within the consumer question. These are then used to name the id service within the subsequent step to get the database identifiers for these named sources.

Utilizing the sooner talked about instance, the inputs and outputs of this step are as follows:

Resolve identifiers (to database IDs)

This step processes the named sources’ strings extracted within the earlier step and resolves them to be identifiers that can be utilized in database queries. As talked about earlier, the named sources (for instance, “group22”, “user123”, and “I”) are regarded up utilizing solution-specific means, such by means of database lookups or an ID service.

The next code exhibits the execution of this step in our operating instance:

Put together the request

This step is pivotal on this sample. Having obtained the area and the named sources together with their looked-up IDs, we use the corresponding context for that area to generate the next:

- A immediate for the LLM to generate a SQL question equivalent to the consumer question

- A SQL script to create the domain-specific schema

To create the immediate for the LLM, this step assembles the system immediate, the consumer immediate, and the obtained consumer question from the enter, together with the domain-specific schema definition, together with new short-term tables created in addition to any be part of hints, and eventually the few-shot examples for the area. Aside from the consumer question that’s obtained as in enter, different elements are primarily based on the values offered within the context for that area.

A SQL script for creating required domain-specific short-term constructions (akin to views and tables) is constructed from the knowledge within the context. The domain-specific schema within the LLM immediate, be part of hints, and the few-shot examples are aligned with the schema that will get generated by operating this script. In our instance, this step is proven within the following code. The output is a dictionary with two keys, llm_prompt and sql_preamble. The worth strings for these have been clipped right here; the complete output will be seen within the Jupyter pocket book.

Generate SQL

Now that the immediate has been ready together with any data essential to supply the right context to the LLM, we offer that data to the SQL-generating LLM on this step. The aim is to have the LLM output SQL with the right be part of construction, filters, and columns. See the next code:

Execute the SQL

After the SQL question is generated by the LLM, we are able to ship it off to the following step. At this step, the SQL preamble and the generated SQL are merged to create an entire SQL script for execution. The whole SQL script is then executed in opposition to the info retailer, a response is fetched, after which the response is handed again to the consumer or end-user. See the next code:

Answer advantages

General, our checks have proven a number of advantages, akin to:

- Excessive accuracy – That is measured by a string matching of the generated question with the goal SQL question for every check case. In our checks, we noticed over 95% accuracy for 100 queries, spanning three knowledge domains.

- Excessive consistency – That is measured by way of the identical SQL generated being generated throughout a number of runs. We noticed over 95% consistency for 100 queries, spanning three knowledge domains. With the check configuration, the queries had been correct more often than not; a small quantity often produced inconsistent outcomes.

- Low value and latency – The method helps the usage of small, low-cost, low-latency LLMs. We noticed SQL technology within the 1–3 second vary utilizing fashions Meta’s Code Llama 13B and Anthropic’s Claude Haiku 3.

- Scalability – The strategies that we employed by way of knowledge abstractions facilitate scaling impartial of the variety of entities or identifiers within the knowledge for a given use case. For example, in our checks consisting of a listing of 200 totally different named sources per row of a desk, and over 10,000 such rows, we measured a latency vary of two–5 seconds for SQL technology and three.5–4.0 seconds for SQL execution.

- Fixing complexity – Utilizing the info abstractions for simplifying complexity enabled the correct technology of arbitrarily advanced enterprise queries, which just about definitely wouldn’t be doable in any other case.

We attribute the success of the answer with these wonderful however light-weight fashions (in comparison with a Meta Llama 70B variant or Anthropic’s Claude Sonnet) to the factors famous earlier, with the lowered LLM process complexity being the driving power. The implementation code demonstrates how that is achieved. General, by utilizing the optimizations outlined on this put up, pure language SQL technology for enterprise knowledge is way more possible than could be in any other case.

AWS answer structure

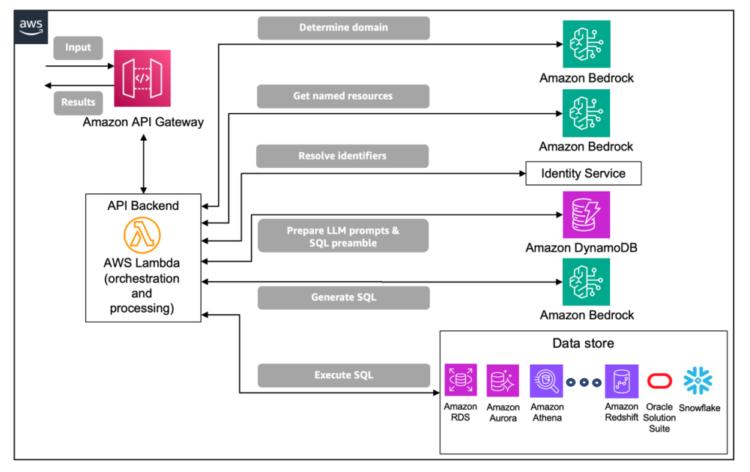

On this part, we illustrate the way you may implement the structure on AWS. The tip-user sends their pure language queries to the NL2SQL answer utilizing a REST API. Amazon API Gateway is used to provision the REST API, which will be secured by Amazon Cognito. The API is linked to an AWS Lambda perform, which implements and orchestrates the processing steps described earlier utilizing a programming language of the consumer’s alternative (akin to Python) in a serverless method. On this instance implementation, the place Amazon Bedrock is famous, the answer makes use of Anthropic’s Claude Haiku 3.

Briefly, the processing steps are as follows:

- Decide the area by invoking an LLM on Amazon Bedrock for classification.

- Invoke Amazon Bedrock to extract related named sources from the request.

- After the named sources are decided, this step calls a service (the Identification Service) that returns identifier specifics related to the named sources for the duty at hand. The Identification Service is logically a key/worth lookup service, which could assist for a number of domains.

- This step runs on Lambda to create the LLM immediate to generate the SQL, and to outline short-term SQL constructions that will likely be executed by the SQL engine together with the SQL generated by the LLM (within the subsequent step).

- Given the ready immediate, this step invokes an LLM operating on Amazon Bedrock to generate the SQL statements that correspond to the enter pure language question.

- This step executes the generated SQL question in opposition to the goal database. In our instance implementation, we used an SQLite database for illustration functions, however you would use one other database server.

The ultimate result’s obtained by operating the previous pipeline on Lambda. When the workflow is full, the result’s offered as a response to the REST API request.

The next diagram illustrates the answer structure.

Conclusion

On this put up, the AWS and Cisco groups unveiled a brand new methodical method that addresses the challenges of enterprise-grade SQL technology. The groups had been in a position to scale back the complexity of the NL2SQL course of whereas delivering larger accuracy and higher general efficiency.

Although we’ve walked you thru an instance use case centered on answering questions on Olympic athletes, this versatile sample will be seamlessly tailored to a variety of enterprise purposes and use circumstances. The demo code is out there within the GitHub repository. We invite you to go away any questions and suggestions within the feedback.

In regards to the authors

Renuka Kumar is a Senior Engineering Technical Lead at Cisco, the place she has architected and led the event of Cisco’s Cloud Safety BU’s AI/ML capabilities within the final 2 years, together with launching first-to-market improvements on this area. She has over 20 years of expertise in a number of cutting-edge domains, with over a decade in safety and privateness. She holds a PhD from the College of Michigan in Pc Science and Engineering.

Toby Fotherby is a Senior AI and ML Specialist Options Architect at AWS, serving to prospects use the newest advances in AI/ML and generative AI to scale their improvements. He has over a decade of cross-industry experience main strategic initiatives and grasp’s levels in AI and Information Science. Toby additionally leads a program coaching the following technology of AI Options Architects.

Shweta Keshavanarayana is a Senior Buyer Options Supervisor at AWS. She works with AWS Strategic Prospects and helps them of their cloud migration and modernization journey. Shweta is captivated with fixing advanced buyer challenges utilizing inventive options. She holds an undergraduate diploma in Pc Science & Engineering. Past her skilled life, she volunteers as a staff supervisor for her sons’ U9 cricket staff, whereas additionally mentoring ladies in tech and serving the local people.

Thomas Matthew is an AL/ML Engineer at Cisco. Over the previous decade, he has labored on making use of strategies from graph idea and time collection evaluation to resolve detection and exfiltration issues present in Community safety. He has introduced his analysis and work at Blackhat and DevCon. At present, he helps combine generative AI know-how into Cisco’s Cloud Safety product choices.

Thomas Matthew is an AL/ML Engineer at Cisco. Over the previous decade, he has labored on making use of strategies from graph idea and time collection evaluation to resolve detection and exfiltration issues present in Community safety. He has introduced his analysis and work at Blackhat and DevCon. At present, he helps combine generative AI know-how into Cisco’s Cloud Safety product choices.

Daniel Vaquero is a Senior AI/ML Specialist Options Architect at AWS. He helps prospects remedy enterprise challenges utilizing synthetic intelligence and machine studying, creating options starting from conventional ML approaches to generative AI. Daniel has greater than 12 years of {industry} expertise engaged on pc imaginative and prescient, computational images, machine studying, and knowledge science, and he holds a PhD in Pc Science from UCSB.

Daniel Vaquero is a Senior AI/ML Specialist Options Architect at AWS. He helps prospects remedy enterprise challenges utilizing synthetic intelligence and machine studying, creating options starting from conventional ML approaches to generative AI. Daniel has greater than 12 years of {industry} expertise engaged on pc imaginative and prescient, computational images, machine studying, and knowledge science, and he holds a PhD in Pc Science from UCSB.

Atul Varshneya is a former Principal AI/ML Specialist Options Architect with AWS. He at the moment focuses on growing options within the areas of AI/ML, notably in generative AI. In his profession of 4 many years, Atul has labored because the know-how R&D chief in a number of massive firms and startups.

Atul Varshneya is a former Principal AI/ML Specialist Options Architect with AWS. He at the moment focuses on growing options within the areas of AI/ML, notably in generative AI. In his profession of 4 many years, Atul has labored because the know-how R&D chief in a number of massive firms and startups.

Jessica Wu is an Affiliate Options Architect at AWS. She helps prospects construct extremely performant, resilient, fault-tolerant, cost-optimized, and sustainable architectures.

Jessica Wu is an Affiliate Options Architect at AWS. She helps prospects construct extremely performant, resilient, fault-tolerant, cost-optimized, and sustainable architectures.