Amazon operations span the globe, touching the lives of millions of customers, employees, and vendors every day. From the vast logistics network to the cutting-edge technology infrastructure, this scale is a testament to the company's ability to innovate and serve its customers. With this scale comes a responsibility to manage risks and address claims, whether they involve workers' compensation, transportation incidents, or other insurance-related matters. Risk managers oversee claims against Amazon throughout their lifecycle. Claim documents from various sources grow as the claims mature, with a single claim consisting of 75 documents on average. Risk managers are required to strictly follow the relevant standard operating procedure (SOP) and review the evolution of dozens of claim aspects to assess severity and take proper actions, reviewing and addressing each claim fairly and efficiently. But as Amazon continues to grow, how can risk managers keep up with the growing number of claims?

In December 2024, an internal technology team at Amazon built and deployed an AI-powered solution that processes data related to claims against the company. This solution generates structured summaries of claims, under 500 words, across various categories, improving efficiency while maintaining the accuracy of the claims review process. However, the team faced challenges with high inference costs and long processing times (3–5 minutes per claim), particularly as new documents are added. Because the team plans to extend this technology to other business lines, they explored Amazon Nova foundation models as potential alternatives to address cost and latency concerns.

The following graphs show performance compared with latency, and performance compared with cost, for various foundation models on the claim dataset.

The evaluation of the claims summarization use case showed that Amazon Nova foundation models (FMs) are a strong alternative to other frontier large language models (LLMs), achieving comparable performance at significantly lower cost and higher overall speed. The Amazon Nova Lite model demonstrates strong summarization capabilities on long, diverse, and messy documents.
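Reading the performance, cost, and latency comparisons together amounts to a simple selection rule. The sketch below is one hypothetical way to express it: among models that fit an optional latency budget, keep those whose quality is within a tolerance of the best, then take the cheapest. The `ModelScore` fields, the `tolerance` default, and the selection rule itself are assumptions for illustration, not the team's documented method.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ModelScore:
    name: str
    quality: float  # higher is better, e.g. an evaluation score on the claim dataset
    cost_per_claim: float  # USD per summarized claim
    latency_s: float  # seconds per summarized claim


def pick_model(
    scores: List[ModelScore],
    tolerance: float = 0.02,  # hypothetical acceptable quality drop
    max_latency_s: Optional[float] = None,
) -> ModelScore:
    """Return the cheapest model whose quality is within `tolerance` of the best."""
    # Apply the latency budget first, if one is given.
    candidates = [s for s in scores if max_latency_s is None or s.latency_s <= max_latency_s]
    if not candidates:
        raise ValueError("no model satisfies the latency budget")
    best_quality = max(s.quality for s in candidates)
    acceptable = [s for s in candidates if s.quality >= best_quality - tolerance]
    # Prefer lower cost; break cost ties with lower latency.
    return min(acceptable, key=lambda s: (s.cost_per_claim, s.latency_s))
```

A tolerance of zero always returns the highest-quality model. Widening it trades a small, explicit quality margin for cost.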

Solution overview

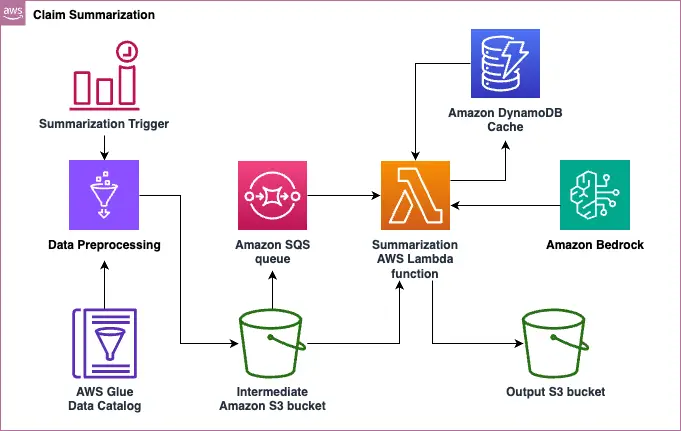

The summarization pipeline begins by processing uncooked declare information utilizing AWS Glue jobs. It shops information into intermediate Amazon Easy Storage Service (Amazon S3) buckets, and makes use of Amazon Easy Queue Service (Amazon SQS) to handle summarization jobs. Declare summaries are generated by AWS Lambda utilizing basis fashions hosted in Amazon Bedrock. We first filter the irrelevant declare information utilizing an LLM-based classification mannequin based mostly on Nova Lite and summarize solely the related declare information to cut back the context window. Contemplating relevance and summarization requires completely different ranges of intelligence, we choose the suitable fashions to optimize value whereas sustaining efficiency. As a result of claims are summarized upon arrival of latest data, we additionally cache the intermediate outcomes and summaries utilizing Amazon DynamoDB to cut back duplicate inference and cut back value. The next picture reveals a high-level structure of the declare summarization use case resolution.
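The filter-then-summarize flow with caching can be sketched in a few lines. This is a minimal sketch, not the team's implementation: the prompts, the YES/NO relevance protocol, the SHA-256 cache keys, and the `ClaimSummarizer` class are all hypothetical, and an in-memory dict stands in for the DynamoDB table. Glue, S3, SQS, and Lambda are omitted. The optional `bedrock_invoker` helper uses the Amazon Bedrock Converse API through boto3 and needs AWS credentials plus a Nova model ID or inference profile ID (for example, `amazon.nova-lite-v1:0`).

```python
import hashlib
from typing import Callable, Dict, List

# Hypothetical invoker signature: prompt text in, model reply text out.
Invoker = Callable[[str], str]


def bedrock_invoker(model_id: str) -> Invoker:
    """Wrap the Bedrock Converse API (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the rest of the sketch runs without AWS

    client = boto3.client("bedrock-runtime")

    def invoke(prompt: str) -> str:
        response = client.converse(
            modelId=model_id,
            messages=[{"role": "user", "content": [{"text": prompt}]}],
            inferenceConfig={"maxTokens": 1024, "temperature": 0.0},
        )
        return response["output"]["message"]["content"][0]["text"]

    return invoke


class ClaimSummarizer:
    """Classify documents for relevance, then summarize only the relevant ones."""

    def __init__(self, classify: Invoker, summarize: Invoker):
        self.classify = classify  # e.g. a cheaper model for relevance filtering
        self.summarize = summarize  # e.g. a model chosen for summary quality
        self.cache: Dict[str, str] = {}  # stand-in for a DynamoDB table

    def _cached(self, kind: str, text: str, fn: Invoker, prompt: str) -> str:
        # Key on the input content so unchanged inputs never trigger a second model call.
        key = kind + ":" + hashlib.sha256(text.encode("utf-8")).hexdigest()
        if key not in self.cache:
            self.cache[key] = fn(prompt)
        return self.cache[key]

    def is_relevant(self, document: str) -> bool:
        prompt = "Answer YES or NO: is this document relevant to the claim review?\n\n" + document
        answer = self._cached("relevance", document, self.classify, prompt)
        return answer.strip().upper().startswith("YES")

    def summarize_claim(self, documents: List[str]) -> str:
        relevant = [d for d in documents if self.is_relevant(d)]
        context = "\n\n---\n\n".join(relevant)
        prompt = "Summarize this claim in under 500 words:\n\n" + context
        return self._cached("summary", context, self.summarize, prompt)
```

When a new document arrives for an existing claim, only that document is classified. The summary model is called again only if the set of relevant documents actually changed.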

Although the Amazon Nova team has published performance benchmarks across several categories, claims summarization is a distinctive use case because of its diverse inputs and long context windows. This prompted the technology team that owns the claims solution to run its own benchmarking study. To assess the performance, speed, and cost of Amazon Nova models for this specific use case, the team curated a benchmark dataset of 95 pairs of claim documents and verified aspect summaries. Claim documents range from 1,000 to 60,000 words, with most around 13,000 words (median 10,100). The verified summaries of these documents are usually brief, containing fewer than 100 words. Inputs to the models include diverse types of documents, and the summaries cover a variety of aspects seen in production.
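A benchmark like this one boils down to running each candidate model over the same (document, verified summary) pairs and recording latency, cost, and quality. The sketch below assumes a hypothetical `ModelCall` wrapper that returns the summary with its input and output token counts. Per-1K-token prices are caller-supplied inputs, not actual Amazon Nova pricing. The post does not say how summary quality was scored, so `word_overlap_f1` is only a placeholder metric; swap in whatever scorer fits your use case.

```python
import statistics
import time
from collections import Counter
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical model wrapper: document -> (summary, input_tokens, output_tokens).
ModelCall = Callable[[str], Tuple[str, int, int]]


@dataclass
class BenchmarkResult:
    mean_latency_s: float
    mean_cost_usd: float
    mean_quality: float


def word_overlap_f1(summary: str, reference: str) -> float:
    """Placeholder quality metric: word-overlap F1 against the verified summary."""
    pred, ref = summary.lower().split(), reference.lower().split()
    common = sum((Counter(pred) & Counter(ref)).values())
    if common == 0:
        return 0.0
    precision, recall = common / len(pred), common / len(ref)
    return 2 * precision * recall / (precision + recall)


def run_benchmark(
    call: ModelCall,
    pairs: List[Tuple[str, str]],
    usd_per_1k_input: float,
    usd_per_1k_output: float,
    score: Callable[[str, str], float] = word_overlap_f1,
) -> BenchmarkResult:
    """Run one model over (claim document, verified summary) pairs."""
    latencies, costs, scores = [], [], []
    for document, reference in pairs:
        start = time.perf_counter()
        summary, tokens_in, tokens_out = call(document)
        latencies.append(time.perf_counter() - start)
        costs.append(tokens_in / 1000 * usd_per_1k_input + tokens_out / 1000 * usd_per_1k_output)
        scores.append(score(summary, reference))
    return BenchmarkResult(statistics.mean(latencies), statistics.mean(costs), statistics.mean(scores))


def compare(baseline: BenchmarkResult, candidate: BenchmarkResult) -> Tuple[float, float]:
    """Return (speedup factor, percent cost reduction) of candidate relative to baseline."""
    speedup = baseline.mean_latency_s / candidate.mean_latency_s
    cost_reduction_pct = 100.0 * (1.0 - candidate.mean_cost_usd / baseline.mean_cost_usd)
    return speedup, cost_reduction_pct
```

Running every model against the same pairs keeps the comparison fair. `compare` yields headline figures of the form "N times as fast" and "X% less cost".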

In the benchmark tests, the team observed that Amazon Nova Lite is twice as fast as their current model and costs 98% less. Amazon Nova Micro is even more efficient, running four times faster and costing 99% less. These substantial cost and latency improvements offer more flexibility for designing a sophisticated model and scaling up test-time compute to improve summary quality. The team also observed that the latency gap between Amazon Nova models and the next best model widened for long context windows and long outputs, making Amazon Nova a stronger alternative for long documents when optimizing for latency. Additionally, the team ran this benchmarking study with the same prompt as the current in-production solution, without tailoring it to Amazon Nova. Even so, Amazon Nova models successfully followed instructions and generated the desired format for post-processing, demonstrating seamless prompt portability. Based on the benchmarking and evaluation results, the team adopted Amazon Nova Lite for both classification and summarization.
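Checking that a model "generated the desired format for post-processing" usually means validating each reply before it enters downstream systems. The post does not describe the actual output format. This sketch assumes a JSON object keyed by category, with hypothetical category names, and enforces the under-500-word limit mentioned earlier. It also tolerates replies wrapped in a Markdown code fence, a common deviation when a prompt is ported between models.

```python
import json
from typing import Dict, List

# Hypothetical category names; the real summary categories are not listed in the post.
REQUIRED_CATEGORIES = ["incident", "injuries", "liability", "status"]
MAX_WORDS = 500  # summaries must stay under this many words
FENCE = "`" * 3  # Markdown code-fence marker


def parse_summary(
    raw: str, required: List[str] = REQUIRED_CATEGORIES, max_words: int = MAX_WORDS
) -> Dict[str, str]:
    """Validate a model reply and return it as {category: text}, or raise ValueError."""
    text = raw.strip()
    if text.startswith(FENCE):
        # Drop the opening fence line (for example, a json fence) and a trailing fence if present.
        lines = text.splitlines()[1:]
        if lines and lines[-1].strip().startswith(FENCE):
            lines = lines[:-1]
        text = "\n".join(lines)
    try:
        data = json.loads(text)
    except json.JSONDecodeError as err:
        raise ValueError(f"reply is not valid JSON: {err}") from err
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    missing = [c for c in required if c not in data]
    if missing:
        raise ValueError(f"missing categories: {missing}")
    total_words = sum(len(str(v).split()) for v in data.values())
    if total_words >= max_words:
        raise ValueError(f"summary has {total_words} words; limit is under {max_words}")
    return {k: str(v) for k, v in data.items()}
```

Rejected replies can be retried or routed for manual review rather than silently passed downstream.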

Conclusion

In this post, we shared how an internal technology team at Amazon evaluated Amazon Nova models, achieving notable improvements in inference speed and cost-efficiency. Looking back on the initiative, the team identified several factors that offered key advantages:

- Access to a diverse model portfolio – The availability of a wide array of models, including compact yet powerful options such as Amazon Nova Micro and Amazon Nova Lite, enabled the team to quickly experiment with and integrate the most suitable models for their needs.

- Scalability and flexibility – The cost and latency improvements of the Amazon Nova models allow more flexibility in designing sophisticated models and scaling up test-time compute to improve summary quality. This scalability is particularly valuable for organizations handling large volumes of data or complex workflows.

- Ease of integration and migration – The models' ability to follow instructions and generate outputs in the desired format simplifies post-processing and integration into existing systems.

If your organization has a similar large-scale document processing use case that is costly and time-consuming, this evaluation shows that Amazon Nova Lite and Amazon Nova Micro can be game-changing. These models excel at handling large volumes of diverse documents and long context windows, making them well suited to complex data processing environments. What makes this particularly compelling is the models' ability to maintain high performance while significantly reducing operational costs. It's important to evaluate new models against all three pillars: quality, cost, and speed. Benchmark these models with your own use case and datasets.

You can get started with Amazon Nova on the Amazon Bedrock console. Learn more on the Amazon Nova product page.

About the authors

Aitzaz Ahmad is an Applied Science Manager at Amazon, where he leads a team of scientists building various applications of machine learning and generative AI in finance. His research interests are in natural language processing (NLP), generative AI, and LLM agents. He received his PhD in electrical engineering from Texas A&M University.

Stephen Lau is a Senior Manager of Software Development at Amazon, where he leads teams of scientists and engineers. His team develops powerful fraud detection and prevention applications, saving Amazon billions annually. They also build Treasury applications that optimize Amazon global liquidity while managing risks, significantly impacting the financial security and efficiency of Amazon.

Yong Xie is an applied scientist in Amazon FinTech. He focuses on developing large language models and generative AI applications for finance.

Kristen Henkels is a Sr. Product Manager – Technical in Amazon FinTech, where she focuses on helping internal teams improve their productivity by using ML and AI solutions. She holds an MBA from Columbia Business School and is passionate about empowering teams with the right technology to enable strategic, high-value work.

Shivansh Singh is a Principal Solutions Architect at Amazon. He is passionate about driving business outcomes through innovative, cost-effective, and resilient solutions, with a focus on machine learning, generative AI, and serverless technologies. He is a technical leader and strategic advisor to large-scale games, media, and entertainment customers. He has over 16 years of experience transforming businesses through technological innovation and building large-scale enterprise solutions.

Dushan Tharmal is a Principal Product Manager – Technical on the Amazon Artificial General Intelligence team, responsible for the Amazon Nova foundation models. He earned his bachelor's in mathematics at the University of Waterloo and has over 10 years of technical product leadership experience across financial services and loyalty. In his spare time, he enjoys wine, hikes, and philosophy.

Anupam Dewan is a Senior Solutions Architect with a passion for generative AI and its real-world applications. He and his team enable Amazon builders who build customer-facing applications using generative AI. He lives in the Seattle area, and outside of work, he loves to go hiking and enjoy nature.