This article was co-authored by Sebastian Humberg and Morris Stallmann.

Introduction

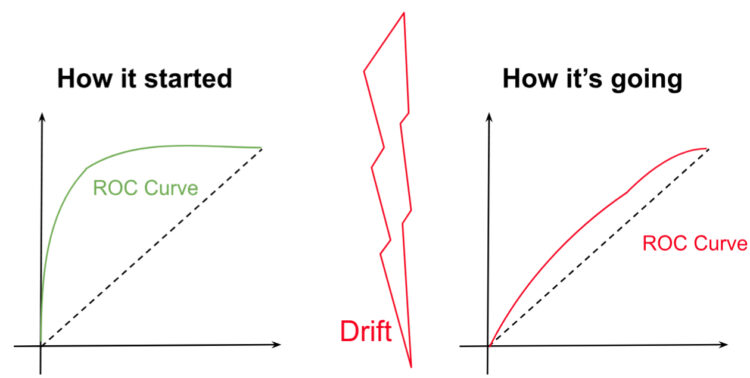

Machine learning (ML) models are designed to make accurate predictions based on patterns in historical data. But what if these patterns change overnight? For instance, in credit card fraud detection, today's legitimate transaction patterns might look suspicious tomorrow as criminals evolve their tactics and honest customers change their habits. Or picture an e-commerce recommender system: what worked for summer shoppers may suddenly flop as winter holidays sweep in new trends. This subtle, yet relentless, shifting of data, known as drift, can quietly erode your model's performance, turning yesterday's accurate predictions into today's costly errors.

In this article, we'll lay the foundation for understanding drift: what it is, why it matters, and how it can sneak up on even the best machine learning systems. We'll break down the two main types of drift: data drift and concept drift. Then, we move from theory to practice by outlining robust frameworks and statistical tools for detecting drift before it derails your models. Finally, you'll get a glimpse into what to do against drift, so your machine learning systems remain resilient in a constantly evolving world.

What is drift?

Drift refers to unexpected changes in the data distribution over time, which can negatively impact the performance of predictive models. ML models solve prediction tasks by applying patterns that the model learned from historical data. More formally, in supervised ML, the model learns a joint distribution of some set of feature vectors X and target values y from all data available at time \(t_0\):

\[P_{t_{0}}(X, y) = P_{t_{0}}(X) \times P_{t_{0}}(y|X)\]

After training and deployment, the model will be applied to new data \(X_t\) to predict \(y_t\) under the assumption that the new data follows the same joint distribution. However, if that assumption is violated, then the model's predictions may no longer be reliable, since the patterns in the training data may have become irrelevant. The violation of that assumption, namely the change of the joint distribution, is called drift. Formally, we say drift has occurred if:

\[P_{t_0}(X,y) \ne P_{t}(X,y)\]

for some \(t > t_0\).

The Main Types of Drift: Data Drift and Concept Drift

Generally, drift occurs when the joint probability P(X, y) changes over time. But if we look more closely, we notice there are different sources of drift with different implications for the ML system. In this section, we introduce the notions of data drift and concept drift.

Recall that the joint probability can be decomposed as follows:

\[P(X,y) = P(X) \times P(y|X).\]

Depending on which part of the joint distribution changes, we either talk about data drift or concept drift.

Data Drift

If the distribution of the features changes, then we speak of data drift:

\[P_{t_0}(X) \ne P_{t}(X), \quad t > t_0.\]

Note that data drift does not necessarily mean that the relationship between the target values y and the features X has changed. Hence, it is possible that the machine learning model still performs reliably even after the occurrence of data drift.

In practice, however, data drift often coincides with concept drift and can be a good early indicator of model performance degradation. Especially in scenarios where ground truth labels are not (immediately) available, detecting data drift can be an important component of a drift warning system. For example, think of the COVID-19 pandemic, where the input data distribution of patients, such as symptoms, changed for models trying to predict clinical outcomes. This change in clinical outcomes was a drift in concept and would only be observable after a while. To avoid incorrect treatment based on outdated model predictions, it is important to detect and signal data drift that can be observed immediately.

Moreover, drift can also occur in unsupervised ML systems where target values y are not of interest at all. In such unsupervised systems, only data drift is defined.

Concept Drift

Concept drift is the change in the relationship between target values and features over time:

\[P_{t_0}(y|X) \ne P_{t}(y|X), \quad t > t_0.\]

Usually, performance is negatively impacted if concept drift occurs.

In practice, the ground truth label y often only becomes available with a delay (or never). Hence, observing \(P_t(y|X)\) may also only be possible with a delay. Therefore, in many scenarios, detecting concept drift in a timely and reliable manner can be much more involved or even impossible. In such cases, we may need to rely on data drift as an indicator of concept drift.
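To make the distinction between the two drift types concrete, here is a minimal synthetic sketch. Everything in it is an assumption made for illustration: a single Gaussian feature, a noise-free labelling rule, and a stand-in "model" that has learned the original rule perfectly. It only shows that a shift in P(X) alone can leave accuracy untouched, while a shift in P(y|X) does not.

```python
import random


def model_predict(x: float) -> int:
    """Stand-in for a trained model that learned the original concept 'y = 1 if x > 0'."""
    return 1 if x > 0 else 0


def accuracy(xs, ys) -> float:
    """Share of samples for which the fixed model predicts the true label."""
    hits = sum(model_predict(x) == y for x, y in zip(xs, ys))
    return hits / len(xs)


rng = random.Random(0)
n = 5000

# Reference period: X ~ N(0, 1), concept y = 1 if x > 0.
x_ref = [rng.gauss(0.0, 1.0) for _ in range(n)]
y_ref = [1 if x > 0 else 0 for x in x_ref]

# Data drift only: P(X) shifts to N(2, 1), P(y|X) is unchanged.
x_data = [rng.gauss(2.0, 1.0) for _ in range(n)]
y_data = [1 if x > 0 else 0 for x in x_data]

# Concept drift only: P(X) is unchanged, but the labelling rule moves to x > 1.
x_conc = [rng.gauss(0.0, 1.0) for _ in range(n)]
y_conc = [1 if x > 1 else 0 for x in x_conc]

acc_ref = accuracy(x_ref, y_ref)
acc_data_drift = accuracy(x_data, y_data)
acc_concept_drift = accuracy(x_conc, y_conc)
print(acc_ref, acc_data_drift, acc_concept_drift)
```

In this toy setup the model stays perfectly accurate under pure data drift, whereas under concept drift roughly a third of the predictions become wrong (the samples with 0 < x <= 1). Real data is rarely this clean, which is why the text treats data drift as a warning signal rather than as harmless.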

How Drift Can Evolve Over Time

Concept and data drift can take different forms, and these forms may have varying implications for drift detection and drift handling strategies.

Drift may occur suddenly with abrupt distribution changes. For example, purchasing behavior may change overnight with the introduction of a new product or promotion.

In other cases, drift may occur more gradually or incrementally over a longer period of time. For instance, if a digital platform introduces a new feature, this may affect user behavior on that platform. While at first only a few users adopt the new feature, more and more users may adopt it in the long run.

Finally, drift may be recurring and driven by seasonality. Imagine a clothing company. While in the summer the company's top-selling products may be T-shirts and shorts, those are unlikely to sell equally well in winter, when customers may be more interested in coats and other warmer clothing items.
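The three temporal patterns can be sketched as simple functions that return the mean of a feature at time step t. The function names, the change points, and the 12-step period are hypothetical choices for illustration, not part of any library.

```python
import math


def sudden(t: int, change_point: int = 50) -> float:
    """Mean jumps from 0 to 1 at the change point."""
    return 0.0 if t < change_point else 1.0


def incremental(t: int, start: int = 30, end: int = 70) -> float:
    """Mean moves linearly from 0 to 1 between start and end."""
    if t <= start:
        return 0.0
    if t >= end:
        return 1.0
    return (t - start) / (end - start)


def recurring(t: int, period: int = 12) -> float:
    """Mean oscillates between 0 and 1 with a fixed period (e.g., 12 months)."""
    return 0.5 * (1.0 - math.cos(2.0 * math.pi * t / period))
```

Such generators are handy for testing a drift detector: a detector tuned for abrupt jumps may react late to the incremental pattern, and a fixed reference window will keep raising alarms on the recurring one.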

How to Identify Drift

Before drift can be handled, it must be detected. To discuss drift detection effectively, we introduce a mental framework borrowed from the excellent read "Learning under Concept Drift: A Review" (see reference list). A drift detection framework can be described in three stages:

- Data Collection and Modelling: The data retrieval logic specifies the data and time periods to be compared. Moreover, the data is prepared for the next steps by applying a data model. This model could be a machine learning model, histograms, or even no model at all. We will see examples in subsequent sections.

- Test Statistic Calculation: The test statistic defines how we measure (dis)similarity between historical and new data. For example, by comparing model performance on historical and new data, or by measuring how different the data chunks' histograms are.

- Hypothesis Testing: Finally, we apply a hypothesis test to decide whether we want the system to signal drift. We formulate a null hypothesis and a decision criterion (such as a significance level for the p-value).
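The three stages above can be sketched as one small function. This is a hedged skeleton rather than a reference implementation: `detect_drift`, its parameters, and the plain threshold used in place of a full hypothesis test are all assumptions made for illustration.

```python
from statistics import fmean
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def detect_drift(
    reference: Sequence[float],
    new: Sequence[float],
    data_model: Callable[[Sequence[float]], T],
    statistic: Callable[[T, T], float],
    threshold: float,
) -> bool:
    """Run the three stages on one pair of data chunks."""
    # Stage 1: data collection and modelling (the chunks are given, the data model prepares them).
    ref_repr = data_model(reference)
    new_repr = data_model(new)
    # Stage 2: test statistic calculation.
    dis = statistic(ref_repr, new_repr)
    # Stage 3: decision rule. A threshold stands in for the critical value of a hypothesis test.
    return dis > threshold


def mean_shift(a: float, b: float) -> float:
    """Absolute difference of two summary values."""
    return abs(a - b)


print(detect_drift([1.0, 2.0, 3.0], [4.0, 5.0, 6.0], fmean, mean_shift, threshold=1.0))  # True
```

Keeping the data model and the statistic as separate arguments mirrors the framework: the same skeleton can wrap a histogram plus PSI, or a trained model plus a performance difference.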

Data Collection and Modelling

In this stage, we define exactly which chunks of data will be compared in subsequent steps. First, the time windows of our reference and comparison (i.e., new) data need to be defined. The reference data could strictly be the historical training data (see figure below), or change over time as defined by a sliding window. Similarly, the comparison data can strictly be the newest batches of data, or it can extend the historical data over time, where both time windows can be sliding.

Once the data is available, it needs to be prepared for the test statistic calculation. Depending on the statistic, it might need to be fed through a machine learning model (e.g., when calculating performance metrics), transformed into histograms, or not be processed at all.
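Two of the windowing strategies described above can be sketched as generators over a time-ordered sequence. The function names and the choice of non-overlapping batches are assumptions for illustration; overlapping windows or time-based (rather than count-based) windows are equally valid.

```python
from typing import Iterator, List, Sequence, Tuple

Window = Tuple[List[float], List[float]]


def fixed_reference_windows(
    data: Sequence[float], ref_size: int, window_size: int
) -> Iterator[Window]:
    """Reference stays the first ref_size points; the comparison batch moves forward."""
    reference = list(data[:ref_size])
    for start in range(ref_size, len(data) - window_size + 1, window_size):
        yield reference, list(data[start:start + window_size])


def sliding_reference_windows(data: Sequence[float], window_size: int) -> Iterator[Window]:
    """Reference is always the batch directly before the comparison batch."""
    for start in range(window_size, len(data) - window_size + 1, window_size):
        yield list(data[start - window_size:start]), list(data[start:start + window_size])
```

A fixed reference makes slow, incremental drift visible because the gap to the training period keeps growing, while a sliding reference mostly reacts to abrupt changes between neighbouring batches.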

Drift Detection Methods

One can identify drift by applying certain detection methods. These methods monitor the performance of a model (concept drift detection) or directly analyse incoming data (data drift detection). By applying various statistical tests or monitoring metrics, drift detection methods help to keep your model reliable. Whether through simple threshold-based approaches or more advanced techniques, these methods help maintain the robustness and adaptivity of your machine learning system.

Observing Concept Drift Through Performance Metrics

The most direct way to spot concept drift (or its consequences) is by monitoring the model's performance over time. Given two time windows \([t_0, t_1]\) and \([t_2, t_3]\), we calculate the performance \(p_{[t_0, t_1]}\) and \(p_{[t_2, t_3]}\). Then, the test statistic can be defined as the difference (or dissimilarity) of performance:

\[dis = |p_{[t_0, t_1]} - p_{[t_2, t_3]}|.\]

Performance can be any metric of interest, such as accuracy, precision, recall, F1-score (in classification tasks), or mean squared error, mean absolute percentage error, R-squared, etc. (in regression problems).
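As a minimal sketch of the performance-based statistic, the snippet below uses accuracy as the metric p and computes the absolute difference between two windows. The function names are hypothetical, and any other metric from the list above could be swapped in.

```python
from typing import Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the ground truth labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def performance_dissimilarity(
    ref_true: Sequence[int],
    ref_pred: Sequence[int],
    new_true: Sequence[int],
    new_pred: Sequence[int],
) -> float:
    """dis = |p_ref - p_new| with accuracy as the performance metric p."""
    return abs(accuracy(ref_true, ref_pred) - accuracy(new_true, new_pred))


# Reference window: 4 of 5 predictions correct. New window: 2 of 5 correct.
dis = performance_dissimilarity(
    [1, 0, 1, 1, 0], [1, 0, 1, 1, 1],
    [1, 0, 1, 1, 0], [0, 1, 1, 1, 1],
)
print(round(dis, 3))
```

Whether a drop of this size counts as drift depends on the window sizes and on how much the metric normally fluctuates, which is what the hypothesis-testing stage has to account for.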

Calculating performance metrics often requires ground truth labels that may only become available with a delay, or may never become available.

To detect drift in a timely manner even in such cases, proxy performance metrics can sometimes be derived. For example, in a spam detection system, we might never know whether an email was actually spam or not, so we cannot calculate the accuracy of the model on live data. However, we might be able to observe a proxy metric: the percentage of emails that were moved to the spam folder. If the rate changes significantly over time, this might indicate concept drift.

If such proxy metrics are not available either, we can base the detection framework on data distribution-based metrics, which we introduce in the next section.

Data Distribution-Based Methods

Methods in this category quantify how dissimilar the data distributions of reference data \(X_{[t_0,t_1]}\) and new data \(X_{[t_2,t_3]}\) are without requiring ground truth labels.

How can the dissimilarity between two distributions be quantified? In the next subsections, we will introduce some popular univariate and multivariate metrics.

Univariate Metrics

Let's start with a very simple univariate approach:

First, calculate the means of the i-th feature in the reference and new data. Then, define the difference of means as the dissimilarity measure

\[dis_i = |mean_{i}^{[t_0,t_1]} - mean_{i}^{[t_2,t_3]}|.\]

Finally, signal drift if \(dis_i\) is unexpectedly large, that is, whenever we observe an unexpected change in a feature's mean over time. Other similar simple statistics include the minimum, maximum, quantiles, and the ratio of null values in a column. These are simple to calculate and are a good starting point for building drift detection systems.

However, these approaches can be overly simplistic. For example, calculating the mean misses changes in the tails of the distribution, as would other simple statistics. This is why we need slightly more involved data drift detection methods.
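The limitation described above is easy to reproduce. The sketch below computes the mean-based dissimilarity for two hand-made comparison samples: one whose mean is shifted, and one whose mean is unchanged but whose spread is much wider. The data and the function name are made up for illustration.

```python
from statistics import fmean
from typing import Sequence


def mean_dissimilarity(reference: Sequence[float], new: Sequence[float]) -> float:
    """dis_i = |mean of reference - mean of new| for a single feature."""
    return abs(fmean(reference) - fmean(new))


reference = [-1.0, 0.0, 1.0] * 100
shifted = [x + 0.5 for x in reference]   # the whole distribution moves
wider = [-4.0, 0.0, 4.0] * 100           # same mean, much heavier tails

print(mean_dissimilarity(reference, shifted))  # 0.5: the shift is visible
print(mean_dissimilarity(reference, wider))    # 0.0: the change in the tails is invisible
```

The second comparison is exactly the kind of change that distribution-level tests are meant to catch.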

Kolmogorov-Smirnov (K-S) Test

Another popular univariate method is the Kolmogorov-Smirnov (K-S) test. The K-S test examines the entire distribution of a single feature and calculates the cumulative distribution function (CDF) of \(X(i)_{[t_0,t_1]}\) and \(X(i)_{[t_2,t_3]}\). Then, the test statistic is calculated as the maximum difference between the two distributions:

\[dis_i = \sup |CDF(X(i)_{[t_0,t_1]}) - CDF(X(i)_{[t_2,t_3]})|,\]

and can detect differences in the mean and the tails of the distribution.

The null hypothesis is that all samples are drawn from the same distribution. Hence, if the p-value is less than a predefined value of \(\alpha\) (e.g., 0.05), then we reject the null hypothesis and conclude drift. To determine the critical value for a given \(\alpha\), we need to consult a two-sample K-S table. Or, if the sample sizes n (number of reference samples) and m (number of new samples) are large, the critical value \(cv_{\alpha}\) is calculated according to

\[cv_{\alpha} = c(\alpha)\sqrt{\frac{n+m}{n \cdot m}},\]

where \(c(\alpha)\) can be found on the Wikipedia page of the Kolmogorov-Smirnov test (see reference list) for common values. In general, \(c(\alpha) = \sqrt{-\ln(\alpha/2) \cdot \frac{1}{2}}\), which gives \(c(0.05) \approx 1.36\).

The K-S test is widely used in drift detection and is relatively robust against extreme values. Nevertheless, be aware that even a small number of extreme outliers can disproportionately affect the dissimilarity measure and lead to false positive alarms.
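A dependency-free sketch of the two-sample K-S statistic and the large-sample critical value is shown below. The function names are hypothetical, and the decision rule compares the statistic against the critical value instead of computing a p-value. In practice, a tested implementation such as `scipy.stats.ks_2samp` is usually preferable.

```python
import math
from bisect import bisect_right
from typing import Sequence


def ks_statistic(reference: Sequence[float], new: Sequence[float]) -> float:
    """Maximum absolute difference between the two empirical CDFs."""
    ref_sorted, new_sorted = sorted(reference), sorted(new)
    n, m = len(ref_sorted), len(new_sorted)
    d = 0.0
    # The empirical CDFs only change at observed values, so checking those is enough.
    for x in ref_sorted + new_sorted:
        cdf_ref = bisect_right(ref_sorted, x) / n
        cdf_new = bisect_right(new_sorted, x) / m
        d = max(d, abs(cdf_ref - cdf_new))
    return d


def ks_critical_value(n: int, m: int, alpha: float = 0.05) -> float:
    """Large-sample critical value c(alpha) * sqrt((n + m) / (n * m))."""
    c_alpha = math.sqrt(-0.5 * math.log(alpha / 2.0))
    return c_alpha * math.sqrt((n + m) / (n * m))


def ks_drift(reference: Sequence[float], new: Sequence[float], alpha: float = 0.05) -> bool:
    """Signal drift if the statistic exceeds the critical value."""
    return ks_statistic(reference, new) > ks_critical_value(len(reference), len(new), alpha)


reference = [float(i) for i in range(100)]
new = [x + 50.0 for x in reference]  # same shape, shifted by half the range
print(ks_statistic(reference, new), ks_critical_value(100, 100), ks_drift(reference, new))
```

With 100 samples on each side, the critical value at \(\alpha = 0.05\) is about 0.19, so the shifted sample, whose statistic is 0.5, is flagged.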

Population Stability Index

An even less sensitive alternative (or complement) is the population stability index (PSI). Instead of using cumulative distribution functions, the PSI involves dividing the range of observations into bins b and calculating frequencies for each bin, effectively producing histograms of the reference and new data. We compare the histograms, and if they appear to have changed unexpectedly, the system signals drift. Formally, the dissimilarity is calculated according to:

\[dis = \sum_{b \in B} (ratio(b^{new}) - ratio(b^{ref}))\ln\left(\frac{ratio(b^{new})}{ratio(b^{ref})}\right) = \sum_{b \in B} PSI_{b},\]

where \(ratio(b^{new})\) is the ratio of data points falling into bin b in the new dataset, \(ratio(b^{ref})\) is the ratio of data points falling into bin b in the reference dataset, and B is the set of all bins. The smaller the difference between \(ratio(b^{new})\) and \(ratio(b^{ref})\), the smaller the PSI. Hence, if a large PSI is observed, then a drift detection system would signal drift. In practice, a threshold of 0.2 or 0.25 is often applied as a rule of thumb. That is, if the PSI > 0.25, the system signals drift.
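The PSI formula can be sketched in a few lines. Several details below are assumptions that the formula itself leaves open: bins are equal-width and derived from the reference range, new values outside that range are clipped into the edge bins, and empty bins are floored at a small `eps` so the logarithm stays defined.

```python
import math
from typing import List, Sequence


def psi(reference: Sequence[float], new: Sequence[float], n_bins: int = 10, eps: float = 1e-6) -> float:
    """Population stability index of one numerical feature."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins or 1.0  # fall back to 1.0 for a constant feature

    def ratios(values: Sequence[float]) -> List[float]:
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), n_bins - 1)  # clip out-of-range values into the edge bins
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]  # floor empty bins at eps

    ref_r, new_r = ratios(reference), ratios(new)
    return sum((q - p) * math.log(q / p) for p, q in zip(ref_r, new_r))


reference = [float(i) for i in range(100)]
new = [x + 50.0 for x in reference]
print(psi(reference, reference), psi(reference, new) > 0.25)
```

The value of `eps` and the number of bins both influence the result noticeably, so they should be fixed once and kept constant when PSI values are compared over time.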

Chi-Squared Test

Finally, we introduce a univariate drift detection method that can be applied to categorical features. All previous methods only work with numerical features.

So, let x be a categorical feature with n categories. Calculating the chi-squared test statistic is somewhat similar to calculating the PSI from the previous section. Rather than calculating the histogram of a continuous feature, we now consider the (relative) counts per category i. With these counts, we define the dissimilarity as the (normalized) sum of squared frequency differences in the reference and new data:

\[dis = \sum_{i=1}^{n} \frac{(count_{i}^{new}-count_{i}^{ref})^{2}}{count_{i}^{ref}}.\]

Note that in practice you may need to resort to relative counts if the cardinalities of new and reference data are different.

To decide whether an observed dissimilarity is significant (at some predefined significance level), a table of chi-squared values is consulted, e.g., on Wikipedia. For a feature with n categories, the relevant row is the one with n - 1 degrees of freedom, which reduces to one degree of freedom for a binary feature.
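The statistic can be sketched as follows. The function names are hypothetical, reference counts are rescaled to the size of the new sample to handle different cardinalities, and the small table of critical values at a significance level of 0.05 is copied from a standard chi-squared table. For real use, `scipy.stats.chisquare` provides the statistic together with a p-value.

```python
from collections import Counter
from typing import Hashable, Sequence

# Critical values of the chi-squared distribution at alpha = 0.05, keyed by degrees of freedom.
CRITICAL_VALUES_05 = {1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488}


def chi_squared_statistic(reference: Sequence[Hashable], new: Sequence[Hashable]) -> float:
    """Sum over categories of (observed - expected)^2 / expected."""
    ref_counts, new_counts = Counter(reference), Counter(new)
    unseen = set(new_counts) - set(ref_counts)
    if unseen:
        # A category with an expected count of zero makes the statistic undefined.
        raise ValueError(f"categories absent from the reference data: {sorted(map(str, unseen))}")
    scale = len(new) / len(reference)  # rescale reference counts to the size of the new data
    return sum(
        (new_counts[c] - ref_counts[c] * scale) ** 2 / (ref_counts[c] * scale)
        for c in ref_counts
    )


def chi_squared_drift(reference: Sequence[Hashable], new: Sequence[Hashable]) -> bool:
    """Signal drift at alpha = 0.05 using n - 1 degrees of freedom."""
    df = len(set(reference)) - 1
    if df not in CRITICAL_VALUES_05:
        raise ValueError("extend CRITICAL_VALUES_05 for this number of categories")
    return chi_squared_statistic(reference, new) > CRITICAL_VALUES_05[df]


reference = ["a"] * 50 + ["b"] * 50
new = ["a"] * 80 + ["b"] * 20
print(chi_squared_statistic(reference, new), chi_squared_drift(reference, new))
```

A category that appears only in the new data is itself a strong hint of drift. The sketch raises an error in that case so the caller has to decide explicitly how to treat it.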

Multivariate Tests

In many cases, each feature's distribution individually may not be affected by drift according to the univariate tests in the previous section, but the overall distribution X may still be affected. For example, the correlation between \(x_1\) and \(x_2\) may change while the histograms of both (and, hence, the univariate PSI) appear to be stable. Clearly, such changes in feature interactions can severely impact machine learning model performance and must be detected. Therefore, we introduce a multivariate test that can complement the univariate tests of the previous sections.

Reconstruction-Error Based Test

This approach is based on self-supervised autoencoders that can be trained without labels. Such models consist of an encoder and a decoder part, where the encoder maps the data to a, typically low-dimensional, latent space and the decoder learns to reconstruct the original data from the latent space representation. The learning objective is to minimize the reconstruction error, i.e., the difference between the original and reconstructed data.

How can such autoencoders be used for drift detection? First, we train the autoencoder on the reference dataset, and store the mean reconstruction error. Then, using the same model, we calculate the reconstruction error on new data and use the difference as the dissimilarity metric:

\[dis = |error_{[t_0, t_1]} - error_{[t_2, t_3]}|.\]

Intuitively, if the new and reference data are similar, the original model should not have problems reconstructing the data. Hence, if the dissimilarity is greater than a predefined threshold, the system signals drift.

This approach can spot more subtle multivariate drift. Note that principal component analysis can be interpreted as a special case of autoencoders. NannyML demonstrates how PCA reconstructions can identify changes in feature correlations that univariate methods miss.
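The idea can be illustrated with a deliberately tiny stand-in for the autoencoder: PCA with a single component on two features, computed in closed form. This is a sketch under strong assumptions (two features only, a linear "encoder", hand-made data) and is not how NannyML or any other library implements it. The reference data has perfectly positively correlated features, while in the new data the correlation flips but each feature takes exactly the same values as before.

```python
import math
from statistics import fmean
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Model = Tuple[Point, Point]  # (mean, unit vector of the first principal component)


def fit_pca_2d(points: Sequence[Point]) -> Model:
    """Fit the mean and first principal direction of 2D points."""
    mx = fmean([p[0] for p in points])
    my = fmean([p[1] for p in points])
    sxx = fmean([(p[0] - mx) ** 2 for p in points])
    syy = fmean([(p[1] - my) ** 2 for p in points])
    sxy = fmean([(p[0] - mx) * (p[1] - my) for p in points])
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)  # angle of the leading eigenvector
    return (mx, my), (math.cos(theta), math.sin(theta))


def mean_reconstruction_error(points: Sequence[Point], model: Model) -> float:
    """Mean squared distance between points and their one-component reconstruction."""
    (mx, my), (ux, uy) = model
    errors: List[float] = []
    for x, y in points:
        dx, dy = x - mx, y - my
        latent = dx * ux + dy * uy         # encode: one latent coordinate
        rx, ry = latent * ux, latent * uy  # decode: back to two dimensions
        errors.append((dx - rx) ** 2 + (dy - ry) ** 2)
    return fmean(errors)


reference = [(float(x), float(x)) for x in range(-10, 11)]  # x2 = x1
new = [(float(x), float(-x)) for x in range(-10, 11)]       # x2 = -x1, same marginals

model = fit_pca_2d(reference)
error_ref = mean_reconstruction_error(reference, model)
error_new = mean_reconstruction_error(new, model)
dis = abs(error_ref - error_new)
print(error_ref, error_new, dis)
```

Because both features keep their individual value sets, a per-feature K-S test or PSI would report no change here, while the reconstruction error jumps from practically zero to a large value.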

Summary of Popular Drift Detection Methods

To conclude this section, we would like to summarize the drift detection methods in the following table:

| Name | Applied to | Test statistic | Drift if | Notes |
| --- | --- | --- | --- | --- |
| Statistical and threshold-based tests | Univariate, numerical data | Differences in simple statistics like mean, quantiles, counts, etc. | The difference is greater than a predefined threshold | May miss differences in tails of distributions; setting the threshold requires domain knowledge or gut feeling |
| Kolmogorov-Smirnov (K-S) | Univariate, numerical data | Maximum difference in the cumulative distribution function of reference and new data | p-value is small (e.g., p < 0.05) | Can be sensitive to outliers |
| Population Stability Index (PSI) | Univariate, numerical data | Differences in the histogram of reference and new data | PSI is greater than the predefined threshold (e.g., PSI > 0.25) | Choosing a threshold is often based on gut feeling |
| Chi-Squared Test | Univariate, categorical data | Differences in counts of observations per category in reference and new data | p-value is small (e.g., p < 0.05) | |
| Reconstruction-Error Test | Multivariate, numerical data | Difference in mean reconstruction error in reference and new data | The difference is greater than the predefined threshold | Defining a threshold can be hard; the method may be relatively complex to implement and maintain |

What to Do Against Drift

Even though the focus of this article is the detection of drift, we would also like to give an idea of what can be done against drift.

As a general rule, it is important to automate drift detection and mitigation as much as possible and to define clear responsibilities to ensure ML systems remain relevant.

First Line of Defense: Robust Modeling Techniques

The first line of defense is applied even before the model is deployed. Training data and model engineering decisions directly impact sensitivity to drift, and model developers should focus on robust modeling techniques or robust machine learning. For example, a machine learning model relying on many features may be more susceptible to the effects of drift. Naturally, more features mean a larger "attack surface", and some features may be more sensitive to drift than others (e.g., sensor measurements are subject to noise, while sociodemographic data may be more stable). Investing in robust feature selection is likely to pay off in the long run.

Additionally, including noisy or malicious data in the training dataset may make models more robust against smaller distributional changes. The field of adversarial machine learning is concerned with teaching ML models how to deal with adversarial inputs.

Second Line of Defense: Define a Fallback Strategy

Even the most carefully engineered model will likely experience drift at some point. When this happens, make sure to have a backup plan ready. To prepare such a plan, first, the consequences of failure must be understood. Recommending the wrong pair of shoes in an email newsletter has very different implications from misclassifying objects in autonomous driving systems. In the first case, it may be acceptable to wait for human feedback before sending the email if drift is detected. In the latter case, a much more immediate response is required. For example, a rule-based system or any other system not affected by drift may take over.
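A fallback can be as simple as a switch in the prediction path. The sketch below is hypothetical throughout: the function names, the toy model, and the rule-based fallback are placeholders for whatever the use case requires.

```python
from typing import Callable, Sequence

Predictor = Callable[[Sequence[float]], str]


def predict_with_fallback(
    features: Sequence[float],
    model: Predictor,
    fallback: Predictor,
    drift_detected: bool,
) -> str:
    """Route the request to the fallback while the monitoring system signals drift."""
    return fallback(features) if drift_detected else model(features)


def toy_model(features: Sequence[float]) -> str:
    """Placeholder for the deployed ML model."""
    return "sneaker" if features[0] > 0.5 else "boot"


def rule_based_fallback(features: Sequence[float]) -> str:
    """Placeholder for a conservative rule that does not depend on learned patterns."""
    return "hold_for_human_review"


print(predict_with_fallback([0.9], toy_model, rule_based_fallback, drift_detected=False))
print(predict_with_fallback([0.9], toy_model, rule_based_fallback, drift_detected=True))
```

Keeping the switch outside the model makes the fallback easy to test on its own and easy to trigger automatically from the drift detection system.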

Striking Back: Model Updates

After addressing the immediate effects of drift, you can work to restore the model's performance. The most obvious activity is retraining the model or updating model weights with the newest data. One of the challenges of retraining is defining a new training dataset. Should it include all available data? In the case of concept drift, this may harm convergence since the dataset may contain inconsistent training samples. If the dataset is too small, this may lead to catastrophic forgetting of previously learned patterns since the model may not be exposed to enough training samples.

To prevent catastrophic forgetting, methods from continual and active learning can be applied, e.g., by introducing memory systems.
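One possible reading of a "memory system" is a small replay buffer that keeps a uniform random sample of everything seen so far and is mixed into each retraining set. The sketch below uses reservoir sampling for this. The class and function names are hypothetical, and real continual-learning methods are considerably more elaborate.

```python
import random
from typing import Any, List, Sequence


class ReplayMemory:
    """Fixed-size uniform sample of all samples seen so far (reservoir sampling)."""

    def __init__(self, capacity: int, seed: int = 0) -> None:
        self.capacity = capacity
        self.items: List[Any] = []
        self.seen = 0
        self._rng = random.Random(seed)

    def add(self, sample: Any) -> None:
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(sample)
            return
        # Keep the new sample with probability capacity / seen, replacing a random slot.
        j = self._rng.randrange(self.seen)
        if j < self.capacity:
            self.items[j] = sample


def build_training_set(recent: Sequence[Any], memory: ReplayMemory) -> List[Any]:
    """Combine the newest data with remembered older samples."""
    return list(recent) + list(memory.items)


memory = ReplayMemory(capacity=3)
for sample in range(100):
    memory.add(sample)
training_set = build_training_set([100, 101], memory)
print(training_set)
```

Mixing a bounded number of older samples into the newest data is one way to balance the two risks named above: a dataset dominated by outdated patterns on one side and forgetting of still-valid patterns on the other.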

It is important to weigh different options, be aware of the trade-offs, and make a decision based on the impact on the use case.

Conclusion

In this article, we describe why drift detection is important if you care about the long-term success and robustness of machine learning systems. If drift occurs and is not taken care of, then machine learning models' performance will degrade, potentially harming revenue, eroding trust and reputation, or even having legal consequences.

We formally introduce concept and data drift as unexpected differences between training and inference data. Such unexpected changes can be detected by applying univariate tests like the Kolmogorov-Smirnov test, the Population Stability Index, and the Chi-Squared test, or multivariate tests like reconstruction-error-based tests. Finally, we briefly touch upon a few strategies for dealing with drift.

In the future, we plan to follow up with a hands-on guide building on the concepts introduced in this article. Finally, one last note: While the article introduces several increasingly complex methods and concepts, remember that any drift detection is always better than no drift detection. Depending on the use case, a very simple detection system can prove to be very effective.

- https://en.wikipedia.org/wiki/Catastrophic_interference

- J. Lu, A. Liu, F. Dong, F. Gu, J. Gama and G. Zhang, "Learning under Concept Drift: A Review," in IEEE Transactions on Knowledge and Data Engineering, vol. 31, no. 12, pp. 2346-2363, 1 Dec. 2019

- M. Stallmann, A. Wilbik and G. Weiss, "Towards Unsupervised Sudden Data Drift Detection in Federated Learning with Fuzzy Clustering," 2024 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE), Yokohama, Japan, 2024, pp. 1-8, doi: 10.1109/FUZZ-IEEE60900.2024.10611883

- https://www.evidentlyai.com/ml-in-production/concept-drift

- https://www.evidentlyai.com/ml-in-production/data-drift

- https://en.wikipedia.org/wiki/Kolmogorov%E2%80%93Smirnov_test

- https://stats.stackexchange.com/questions/471732/intuitive-explanation-of-kolmogorov-smirnov-test

- Yurdakul, Bilal, "Statistical Properties of Population Stability Index" (2018). Dissertations. 3208. https://scholarworks.wmich.edu/dissertations/3208

- https://en.wikipedia.org/wiki/Chi-squared_test

- https://www.nannyml.com/blog/hypothesis-testing-for-ml-performance#chi-2-test

- https://nannyml.readthedocs.io/en/main/how_it_works/multivariate_drift.html#how-multiv-drift

- https://en.wikipedia.org/wiki/Autoencoder