A paper from Konrad Körding’s Lab [1], “Does Object Binding Naturally Emerge in Large Pretrained Vision Transformers?”, provides insights into a foundational question in visual neuroscience: what is required to bind visual elements and textures together as objects? The goal of this article is to give you a background on this problem, review this NeurIPS paper, and hopefully give you insight into both artificial and biological neural networks. I will also review some self-supervised deep learning methods and vision transformers, while highlighting the differences between current deep learning systems and our brains.

1. Introduction

When we view a scene, our visual system doesn’t just hand our consciousness a high-level summary of the objects and composition; we also have conscious access to the entire visual hierarchy.

We can “grab” an object with our attention in the higher-level areas, like the Inferior Temporal (IT) cortex and Fusiform Face Area (FFA), and access all the contours and textures that are coded in the lower-level areas like V1 and V2.

If we lacked this capability to access our entire visual hierarchy, we would either not have conscious access to low-level details of the visual system, or the dimensionality would explode in the higher-level areas trying to convey all this information. This would require our brains to be considerably larger and consume more energy.

This distribution of information about the visual scene across the visual system means that the parts or objects of the scene must be bound together in some manner. For years, there were two main factions on how this is done: one faction argued that object binding used neural oscillations (or more generally, synchrony) to bind object parts together, and the other faction argued that increases in neural firing were sufficient to bind the attended objects. My academic background puts me firmly in the latter camp, under the tutelage of Rüdiger von der Heydt, Ernst Niebur, and Pieter Roelfsema.

Von der Malsburg and Schneider proposed the neural oscillation binding hypothesis in 1986 (see [2] for a review), in which each object has its own temporal tag.

In this framework, when you look at a picture with two puppies, all the neurons throughout the visual system encoding the first puppy would fire at one phase of the oscillation, while the neurons encoding the other puppy would fire at a different phase. Evidence for this type of binding was found in anesthetized cats; however, anesthesia increases oscillations in the brain.

In the firing rate framework, neurons encoding attended objects fire at a higher rate than those encoding unattended objects, and neurons encoding any object (attended or unattended) fire at a higher rate than those encoding the background. This has been shown repeatedly and robustly in awake animals [3].

Initially, there were more experiments supporting the neural synchrony or oscillation hypotheses, but over time there has been more evidence for the increased firing rate binding hypothesis.

The focus of Li’s paper is whether deep learning models exhibit object binding. They convincingly argue that ViT networks trained by self-supervised learning naturally learn to bind objects, but those trained via supervised classification (ImageNet) do not. The failure of supervised training to teach object binding, in my opinion, suggests that there is a fundamental weakness to a single backpropagated global loss. Without carefully tuning this training paradigm, you have a system that takes shortcuts and (for example) learns textures instead of objects, as shown by Geirhos et al. [4]. As an end result, you get models that are fragile to adversarial attacks and only learn something when it has a significant impact on the final loss function. Fortunately, self-supervised learning works quite well as it stands without my more radical takes, and it is able to reliably learn object binding.

2. Methods

2.1. The Architecture: Vision Transformers (ViT)

I’m going to review the Vision Transformer (ViT; [5]) in this section, so feel free to skip it if you don’t need to brush up on this architecture. After its introduction, there have been many additional visual transformer architectures, like the Swin transformer and various hybrid convolutional transformers, such as CoAtNet and the Convolutional Vision Transformer (CvT). However, the research community keeps coming back to ViT. Part of the reason is that ViT is well suited to current self-supervised approaches, such as Masked Auto-Encoding (MAE) and I-JEPA (Image Joint Embedding Predictive Architecture).

ViT splits the image into a grid of patches, which are converted into tokens. Tokens in ViT are simply feature vectors, whereas tokens in other transformers can be discrete. For Li’s paper, the authors resized the images to \(224 \times 224\) pixels and then split them into a grid of \(16 \times 16\) patches (\(14 \times 14\) pixels per patch). The patches are then converted to tokens by flattening each patch and passing it through a learned linear projection.
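To make the patch arithmetic concrete, here is a minimal sketch of the splitting and flattening step only. The helper `patchify` and the toy single-channel image are illustrative assumptions, not code from the paper; a real ViT would also multiply each flattened patch by a learned projection matrix, which is omitted here.

```python
def patchify(image, patch_size):
    """Split an H x W image (a list of rows) into flattened, non-overlapping patches.

    Patches are returned in row-major order over the patch grid.
    """
    height, width = len(image), len(image[0])
    assert height % patch_size == 0 and width % patch_size == 0
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Flatten one patch_size x patch_size block into a 1-D list.
            patch = [image[r][c]
                     for r in range(top, top + patch_size)
                     for c in range(left, left + patch_size)]
            patches.append(patch)
    return patches


# Toy single-channel 224 x 224 image; each pixel stores its own flat index.
image = [[r * 224 + c for c in range(224)] for r in range(224)]
patches = patchify(image, patch_size=14)

grid_side = 224 // 14
print(grid_side, len(patches), len(patches[0]))  # 16 256 196
```

With three color channels, each flattened patch would hold \(14 \times 14 \times 3 = 588\) values instead of 196.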

The positions of the patches in the image are added as positional embeddings using elementwise addition. For classification, the sequence of tokens is prepended with a special, learned classification token. So, if there are \(W \times H\) patches, then there are \(1 + W \times H\) input tokens. There are also \(1 + W \times H\) output tokens from the core ViT model. The first token of the output sequence, which corresponds to the classification token, is passed to the classification head to produce the classification. All the remaining output tokens are ignored for the classification task. Through training, the network learns to encode the global context of the image needed for classification into this token.
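The token bookkeeping can be sketched in a few lines. Everything here is a stand-in: the random vectors replace learned parameters and real patch tokens, and the tiny feature size `dim = 8` is chosen only for readability.

```python
import random

random.seed(0)
grid_w, grid_h, dim = 16, 16, 8  # dim is tiny here; real models use hundreds


def random_vector(size):
    """Stand-in for a learned or computed feature vector."""
    return [random.gauss(0.0, 0.02) for _ in range(size)]


patch_tokens = [random_vector(dim) for _ in range(grid_w * grid_h)]
cls_token = random_vector(dim)  # a learned parameter in a real model
pos_embed = [random_vector(dim) for _ in range(1 + grid_w * grid_h)]

# Prepend the classification token, then add positions elementwise.
sequence = [cls_token] + patch_tokens
inputs = [[t + p for t, p in zip(token, pos)]
          for token, pos in zip(sequence, pos_embed)]

print(len(inputs))  # 257
```

Index 0 of `inputs` is the classification token; indices 1 to 256 are the patch tokens in grid order.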

The tokens get passed through the encoder of the transformer while keeping the length of the sequence the same. There is an implied correspondence between an input token and the token at the same position throughout the network. While there is no guarantee of what the tokens in the middle of the network will be encoding, this can be influenced by the training method. A dense task, like MAE, enforces this correspondence between the \(i\)-th token of the input sequence and the \(i\)-th token of the output sequence. A task with a coarse signal, like classification, may not teach the network to keep this correspondence.

2.2. The Training Regimes: Self-Supervised Learning (SSL)

You don’t necessarily need to know the details of the self-supervised learning methods used in the Li et al. NeurIPS 2025 paper to appreciate the results. They argue that the results apply to all the SSL methods they tried: DINO, MAE, and CLIP.

DINOv2 was the first SSL method the authors tested and the one that they focused on. DINO works by degrading the image with cropping and data augmentations. The basic idea is that the model learns to extract the important information from the degraded image and match that to the full original image. There is some complexity in that there is a teacher network, which is an exponential moving average (EMA) of the student network. This is less likely to collapse than if the student network itself were used to generate the training signal.
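The EMA teacher can be illustrated with a toy update rule. The function `ema_update`, the three-element weight lists, and the momentum value of 0.996 are assumptions for illustration; this shows only the averaging step, not the DINO loss or augmentations.

```python
def ema_update(teacher, student, momentum=0.996):
    """Move each teacher weight a small step toward the matching student weight."""
    return [momentum * t + (1.0 - momentum) * s
            for t, s in zip(teacher, student)]


teacher = [0.0, 0.0, 0.0]
student = [1.0, -2.0, 0.5]  # held fixed here; in training it changes every step

for _ in range(1000):
    teacher = ema_update(teacher, student)

print(teacher)
```

Because the teacher only drifts slowly toward the student, its targets change smoothly from step to step, which is the stabilizing effect described above.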

MAE is a type of Masked Image Modelling (MIM). It drops a certain percentage of the tokens or patches from the input sequence. Since the tokens include positional encoding, this is easy to do. This reduced set of tokens is then passed through the encoder. The encoded tokens are then passed through a transformer decoder to try to “inpaint” the missing tokens. The loss signal comes from comparing the predicted tokens with the ground truth (the original input with all of its tokens).
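A minimal sketch of the token-dropping step follows. The helper `random_masking` and the 75% mask ratio are illustrative assumptions; the encoder, decoder, and loss are left out.

```python
import random


def random_masking(num_tokens, mask_ratio, rng):
    """Return sorted indices of the tokens to keep and the tokens to mask out."""
    num_keep = int(num_tokens * (1.0 - mask_ratio))
    shuffled = list(range(num_tokens))
    rng.shuffle(shuffled)
    keep = sorted(shuffled[:num_keep])
    masked = sorted(shuffled[num_keep:])
    return keep, masked


rng = random.Random(0)
keep, masked = random_masking(num_tokens=256, mask_ratio=0.75, rng=rng)
print(len(keep), len(masked))  # 64 192
```

Only the tokens at the `keep` indices would be fed to the encoder; because each token already carries its positional embedding, the encoder does not need the dropped tokens to know where the kept ones came from.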

CLIP relies on captioned images, such as those scraped from the web. It aligns a text encoder and an image encoder, training them simultaneously. I won’t spend a lot of time describing it here, but one thing to point out is that this training signal is coarse (based on the whole image and the whole caption). The training data is web-scale, rather than limited to ImageNet, and while the signal is coarse, the feature vectors are not sparse (e.g. one-hot encoded). So, while it is considered self-supervised, it does use a weakly supervised signal in the form of the captions.

2.3. Probes

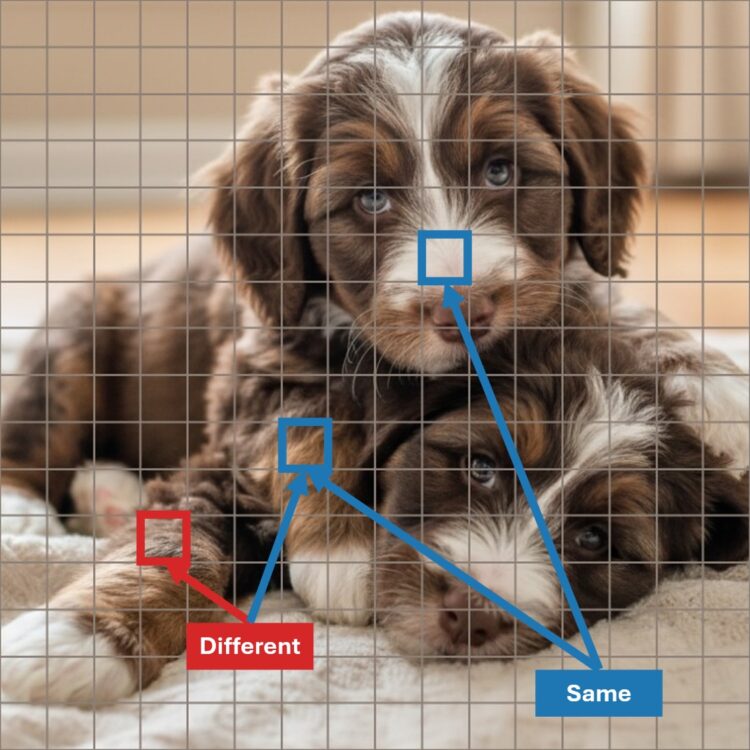

As shown in Figure 2, a probe or test that is able to discriminate object binding needs to determine whether the blue patches are from the same puppy and the pink and blue patches are from different puppies. So you might create a test like cosine similarity between the patches and find that this does pretty well on your test set. But… is it really detecting object binding and not low-level or class-based features? Most of the images probably aren’t as complex. So you need some probe that is like the cosine similarity test, but also some kind of strong baseline that is able to, for example, tell whether the patches belong to the same semantic class, but not necessarily whether they belong to the same instance.
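The worry about cosine similarity can be shown with made-up numbers. The three 3-D tokens below are hypothetical: the first two dimensions stand in for shared “puppy” class and texture features, and the last one for whatever distinguishes the two instances.

```python
import math


def cosine_similarity(x, y):
    """Dot product of the two vectors after L2-normalizing each of them."""
    dot = sum(a * b for a, b in zip(x, y))
    norm_x = math.sqrt(sum(a * a for a in x))
    norm_y = math.sqrt(sum(b * b for b in y))
    return dot / (norm_x * norm_y)


# Hypothetical patch tokens (not taken from any model).
puppy_a_patch_1 = [1.0, 0.2, 0.0]
puppy_a_patch_2 = [0.9, 0.3, 0.1]
puppy_b_patch_1 = [0.8, 0.1, 0.6]

same_instance = cosine_similarity(puppy_a_patch_1, puppy_a_patch_2)
cross_instance = cosine_similarity(puppy_a_patch_1, puppy_b_patch_1)
print(round(same_instance, 3), round(cross_instance, 3))
```

In this toy setup the cross-instance pair still scores high because most of the vector is shared class information, which is why a class-aware baseline is needed to tell binding apart from semantic similarity.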

The probes that they use that are most similar to cosine similarity are the diagonal quadratic probe and the quadratic probe, where the latter essentially adds another linear layer (kind of like a linear probe, but you have two linear probes that you then take the dot product of). These are the two probes that I would consider to have the potential to detect binding. They also have some object class-based probes that I would consider the strong baselines.

In their Figure 2 (my Figure 3), I would pay attention to the quadratic probe magenta curve and the overlapping object class orange curve. The quadratic curve doesn’t rise above the object class curves until around layers 10-11 of the 23 layers. The diagonal quadratic curve never rises above these curves (see the original figure in the paper), meaning that the binding information at least needs a linear layer to project it into an “IsSameObject” subspace.

I go into a little more detail on the probes in the appendix section, which I recommend skipping until/unless you read the paper.

3. The Central Claim: Li et al. (2025)

The main claim of their paper is that ViT models trained with self-supervised learning (SSL) naturally learn object binding, while ViT models trained with ImageNet supervised classification exhibit much weaker object binding. Overall, I find their arguments convincing, although, as with all papers, there are areas where they could have improved.

Their arguments are weakened by using the weak baseline of always guessing that two patches aren’t bound, as shown in Figure 2. Fortunately, they used a range of probes that includes stronger class-based baselines, and their quadratic probe still performs better than them. I do believe that it would be possible to create a better test and/or baselines, like adding positional awareness into the class-based methods. However, I think this is nitpicking and the object-based probes do make a pretty good baseline. Their Figure 4 gives additional reassurance that it is performing object binding, although probe distance could still be playing a role.
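To see why “always guess not bound” is a weak baseline, note that its accuracy is just the fraction of patch pairs that come from different objects. The labels below are invented for illustration and do not reflect the class balance in the paper.

```python
def always_negative_accuracy(labels):
    """Accuracy of predicting 'not the same object' for every patch pair."""
    return sum(1 for same in labels if not same) / len(labels)


# Hypothetical labels for 10 patch pairs: True means the pair shares an object.
labels = [False] * 8 + [True] * 2
print(always_negative_accuracy(labels))  # 0.8
```

A probe therefore has to beat the negative-pair rate of the dataset, not 50%, before its accuracy says anything about binding.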

Their supervised ViT model only achieved 3.7% higher accuracy than the weak baseline, which I would interpret as not having any object binding. There is one complication to this result: models trained with DINOv2 (and MAE) enforce a correspondence between the input tokens and output tokens, while ImageNet classification only trains on the first token, which corresponds to the learned “classify” task token; the remaining output tokens are ignored by this supervised training loss. So the probe is assuming that the \(i\)-th token at a given level corresponds to the \(i\)-th token of the input sequence, which is more likely to hold for the DINOv2-trained models than for the ImageNet-trained classification model.

I think it is an open question whether CLIP and MAE would have shown object binding if they had been compared to a stronger baseline. Figure 7 of their Appendix doesn’t make CLIP’s binding signal look that strong. Then again, CLIP, like supervised classification training, doesn’t enforce the token correspondence throughout the processing. Notably, in both supervised learning and CLIP, the layer with the peak accuracy on same-object prediction is earlier in the network (0.13 and 0.39 out of 1), while networks that preserve the token correspondence show a peak later in the network (0.65-1 out of 1).

Going back to mushy biological brains, one of the reasons why binding is an issue is that the representation of an object is distributed across the visual hierarchy. The ViT architecture is fundamentally different in that there is no bidirectionality of information; all the information flows in a single direction and the representation at lower levels is not needed once its information is passed on. Appendix A3 does show that the quadratic probe has a relatively high accuracy for estimating whether patches from layers 15 and 18 are bound, so it seems that this information is at least there, even if it is not a bidirectional, recurrent architecture.

4. Conclusion: A New Baseline for “Understanding”?

I think this paper is really pretty cool, as it is the first paper that I’m aware of that shows evidence of a deep learning model displaying the emergent property of object binding. It would be great if the results of the other SSL methods, like MAE, could be shown with the stronger baselines, but this paper at least shows strong evidence that ViTs trained with DINO exhibit object binding. Earlier work has suggested that this was not the case. The weakness (or absence) of the object binding signal from ViTs trained on ImageNet classification is also interesting, and it is consistent with the papers that suggest that CNNs trained with ImageNet classification are biased towards texture instead of object shape [4], although ViTs have less texture bias [6] and DINO self-supervision also reduces the texture bias (but possibly not MAE) [7].

There are always things that can be improved in papers, and that’s why science and research build on past research and expand and test previous findings. Discriminating object binding from other features is difficult and might require tests like artificial geometric stimuli to prove beyond doubt that object binding was found. However, the evidence presented is still quite strong.

Even if you are not interested in object binding per se, the difference in behavior between ViTs trained by unsupervised and supervised approaches is rather stark and gives us some insights into the training regimes. It suggests that the foundation models that we are building are learning in a way that is more like the gold standard of real intelligence: humans.

Appendix

Probe Details

I’m adding this section as an appendix because it might be useful if you are going into the paper in more detail. However, I suspect it will be too much detail for most people reading this post. One approach to determining whether two tokens are bound might be to calculate the cosine similarity of those tokens. This is simply taking the dot product of the L2-normalized token vectors. Unfortunately, in my opinion, they didn’t try L2-normalizing the token vectors, but they did try a weighted dot product, which they call the diagonal quadratic probe.

$$\phi_\text{diag}(x, y) = x^\top \mathrm{diag}(w)\, y$$

The weights \(w\) are learned, so the probe can learn to focus on the dimensions more relevant to binding. While they didn’t perform L2-normalization, they did apply layer normalization to the tokens, which centers each token and rescales it to unit variance across its feature dimensions.
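Written out, the diagonal quadratic probe is a dot product with one weight per dimension. The function name and the example vectors below are illustrative; in the paper the weights are learned rather than set by hand.

```python
def diag_quadratic_probe(x, y, w):
    """phi_diag(x, y) = x^T diag(w) y, a per-dimension weighted dot product."""
    return sum(wi * xi * yi for wi, xi, yi in zip(w, x, y))


x = [1.0, 2.0, 3.0]
y = [4.0, 5.0, 6.0]
print(diag_quadratic_probe(x, y, [1.0, 1.0, 1.0]))  # 32.0 (plain dot product)
print(diag_quadratic_probe(x, y, [0.0, 0.0, 1.0]))  # 18.0 (last dimension only)
```

The probe can reweight or ignore individual dimensions, but it cannot mix them, which is the limitation the full quadratic probe removes.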

There is no reason to believe that the object binding property would be neatly segregated in the feature vectors in their current form, so it would make sense to first project them into a new “IsSameObject” subspace and then take their dot product. This is the quadratic probe that they found works so well:

$$\begin{align}
\phi_\text{quad}(x, y) &= W x \cdot W y \\
&= \left( W x \right)^\top W y \\
&= x^\top W^\top W y
\end{align}
$$

where \(W \in \mathbb{R}^{k \times d}\), \(k \ll d\).
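As a quick numerical check of the two equivalent forms, here is a small sketch with \(k = 2\) and \(d = 4\). The matrix `W` and the vectors are arbitrary hand-picked values, and the helper names are my own.

```python
def matvec(W, x):
    """Multiply a k x d matrix (list of rows) by a length-d vector."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]


def quadratic_probe(x, y, W):
    """phi_quad(x, y) = (W x) . (W y): project both tokens, then take the dot product."""
    px, py = matvec(W, x), matvec(W, y)
    return sum(a * b for a, b in zip(px, py))


def quadratic_probe_gram(x, y, W):
    """Same score computed as x^T (W^T W) y with the d x d Gram matrix."""
    d = len(x)
    gram = [[sum(row[i] * row[j] for row in W) for j in range(d)] for i in range(d)]
    return sum(x[i] * gram[i][j] * y[j] for i in range(d) for j in range(d))


W = [[1.0, 0.0, 1.0, 0.0],
     [0.0, 1.0, 0.0, -1.0]]
x = [1.0, 2.0, 3.0, 4.0]
y = [0.5, 1.0, 0.0, 2.0]

print(quadratic_probe(x, y, W), quadratic_probe_gram(x, y, W))  # 4.0 4.0
```

The first form is cheaper when \(k \ll d\), since it never builds the \(d \times d\) matrix \(W^\top W\).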

The quadratic probe is much better at extracting the binding than the diagonal quadratic probe. In fact, I would argue that the quadratic probe is the only probe they show that can extract the information on whether the objects are bound or not, since it is the only one that exceeds the strong baseline of the object class-based probes.

I left out their linear probe, which is a probe that I feel they had to include in the paper, but that doesn’t really make any sense. For this, they applied a linear probe (an additional layer that they train separately) to both tokens, and then added the results. The addition is why I think the probe is a distraction. To compare the tokens, there needs to be a multiplication. The quadratic probe is a better equivalent to the linear probe when you are comparing two feature vectors.
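One way to see why addition cannot compare tokens: if the same linear map is applied to each token and the results are summed, the score of a pair is always the average of the two tokens’ self-pair scores. The form below is an assumption based on the description in this post, not necessarily the exact probe in the paper, and the weights are arbitrary.

```python
def additive_linear_probe(x, y, a, b):
    """Hypothetical additive probe: score(x, y) = a . x + a . y + b."""
    return (sum(ai * xi for ai, xi in zip(a, x))
            + sum(ai * yi for ai, yi in zip(a, y))
            + b)


a, b = [0.3, -1.2, 0.7], 0.1  # arbitrary weights; the identity holds for any choice
x = [1.0, 0.0, 2.0]
y = [-1.0, 3.0, 0.5]

s_xy = additive_linear_probe(x, y, a, b)
s_xx = additive_linear_probe(x, x, a, b)
s_yy = additive_linear_probe(y, y, a, b)

# The cross-pair score always sits exactly halfway between the self-pair scores.
print(s_xy, 0.5 * (s_xx + s_yy))
```

So an additive probe can never score a mismatched pair lower than both of the tokens paired with themselves, whereas a multiplicative probe like the quadratic one can.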

Bibliography

[1] Y. Li, S. Salehi, L. Ungar and K. P. Kording, Does Object Binding Naturally Emerge in Large Pretrained Vision Transformers? (2025), arXiv preprint arXiv:2510.24709

[2] P. R. Roelfsema, Solving the binding problem: Assemblies form when neurons enhance their firing rate - they don’t need to oscillate or synchronize (2023), Neuron, 111(7), 1003-1019

[3] J. R. Williford and R. von der Heydt, Border-ownership coding (2013), Scholarpedia journal, 8(10), 30040

[4] R. Geirhos, P. Rubisch, C. Michaelis, M. Bethge, F. A. Wichmann and W. Brendel, ImageNet-trained CNNs are biased towards texture; increasing shape bias improves accuracy and robustness (2018), International Conference on Learning Representations

[5] A. Dosovitskiy, L. Beyer, A. Kolesnikov, D. Weissenborn, X. Zhai, T. Unterthiner, et al., An image is worth 16×16 words: Transformers for image recognition at scale (2020), arXiv preprint arXiv:2010.11929

[6] M. M. Naseer, K. Ranasinghe, S. H. Khan, M. Hayat, F. Shahbaz Khan and M. H. Yang, Intriguing properties of vision transformers (2021), Advances in Neural Information Processing Systems, 34, 23296-23308

[7] N. Park, W. Kim, B. Heo, T. Kim and S. Yun, What do self-supervised vision transformers learn? (2023), arXiv preprint arXiv:2305.00729