Kubernetes is a popular orchestration platform for managing containers. Its scalability and load-balancing capabilities make it ideal for handling the variable workloads typical of machine learning (ML) applications. DevOps engineers often use Kubernetes to manage and scale ML applications, but before an ML model is available, it must be trained and evaluated and, if the quality of the obtained model is satisfactory, uploaded to a model registry.

Amazon SageMaker provides capabilities to remove the undifferentiated heavy lifting of building and deploying ML models. SageMaker simplifies the process of managing dependencies, container images, auto scaling, and monitoring. Specifically for the model building stage, Amazon SageMaker Pipelines automates the process by managing the infrastructure and resources needed to process data, train models, and run evaluation tests.

A challenge for DevOps engineers is the additional complexity that comes from using Kubernetes to manage the deployment stage while resorting to other tools (such as the AWS SDK or AWS CloudFormation) to manage the model building pipeline. One alternative that simplifies this process is to use AWS Controllers for Kubernetes (ACK) to manage and deploy a SageMaker training pipeline. ACK lets you take advantage of managed model building pipelines without needing to define resources outside of the Kubernetes cluster.

In this post, we introduce an example to help DevOps engineers manage the entire ML lifecycle, including training and inference, using the same toolkit.

Solution overview

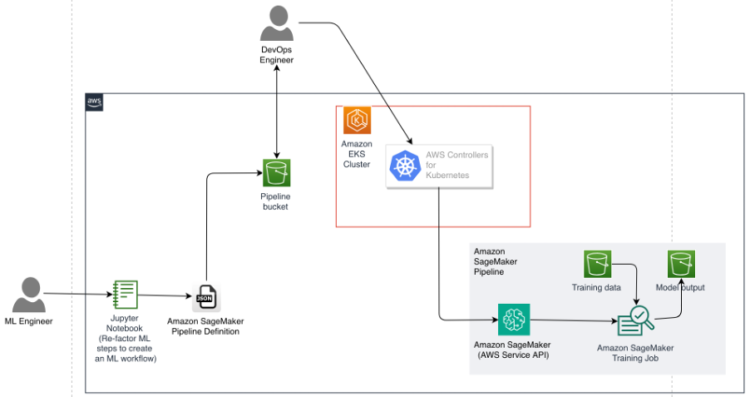

We consider a use case in which an ML engineer configures a SageMaker model building pipeline using a Jupyter notebook. This configuration takes the form of a Directed Acyclic Graph (DAG) represented as a JSON pipeline definition. The JSON document can be stored and versioned in an Amazon Simple Storage Service (Amazon S3) bucket. If encryption is required, it can be implemented using an AWS Key Management Service (AWS KMS) managed key for Amazon S3. A DevOps engineer with access to fetch this definition file from Amazon S3 can load the pipeline definition into an ACK service controller for SageMaker, which is running as part of an Amazon Elastic Kubernetes Service (Amazon EKS) cluster. The DevOps engineer can then use the Kubernetes APIs provided by ACK to submit the pipeline definition and initiate one or more pipeline runs in SageMaker. This entire workflow is shown in the solution diagram.

Prerequisites

To follow along, you should have the following prerequisites:

- An EKS cluster where the ML pipeline will be created.

- A user with access to an AWS Identity and Access Management (IAM) role that has IAM permissions (iam:CreateRole, iam:AttachRolePolicy, and iam:PutRolePolicy) to allow creating roles and attaching policies to roles.

- The command line tools used in this post (such as kubectl, Helm, and jq) on the local machine or cloud-based development environment used to access the Kubernetes cluster.

Install the SageMaker ACK service controller

The SageMaker ACK service controller makes it straightforward for DevOps engineers to use Kubernetes as their control plane to create and manage ML pipelines. To install the controller in your EKS cluster, complete the following steps:

- Configure IAM permissions to make sure the controller has access to the appropriate AWS resources.

- Install the controller using a SageMaker Helm Chart to make it available on the client machine.

The following tutorial provides step-by-step instructions with the required commands to install the ACK service controller for SageMaker.

Generate a pipeline JSON definition

In most companies, ML engineers are responsible for creating the ML pipeline in their organization. They often work with DevOps engineers to operate these pipelines. In SageMaker, ML engineers can use the SageMaker Python SDK to generate a pipeline definition in JSON format. A SageMaker pipeline definition must follow the provided schema, which includes the base images, dependencies, steps, and instance types and sizes needed to fully define the pipeline. The DevOps engineer then retrieves this definition to deploy and maintain the infrastructure needed for the pipeline.

The following is a sample pipeline definition with one training step:
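
As a hedged illustration of what such a definition can look like, the following Python sketch assembles a minimal one-step definition and prints it as JSON. The step name, instance type, and every ARN, image URI, and S3 path are placeholders we made up; the argument names are assumed to mirror the SageMaker CreateTrainingJob request, so validate the result against the pipeline definition schema before using it.

```python
import json


def build_pipeline_definition(role_arn, training_image, train_s3_uri, output_s3_uri):
    """Return a minimal pipeline definition (as a dict) with one training step."""
    return {
        "Version": "2020-12-01",
        "Steps": [
            {
                "Name": "TrainModel",  # hypothetical step name
                "Type": "Training",
                "Arguments": {
                    "RoleArn": role_arn,
                    "AlgorithmSpecification": {
                        "TrainingImage": training_image,
                        "TrainingInputMode": "File",
                    },
                    "InputDataConfig": [
                        {
                            "ChannelName": "train",
                            "DataSource": {
                                "S3DataSource": {
                                    "S3DataType": "S3Prefix",
                                    "S3Uri": train_s3_uri,
                                    "S3DataDistributionType": "FullyReplicated",
                                }
                            },
                        }
                    ],
                    "OutputDataConfig": {"S3OutputPath": output_s3_uri},
                    "ResourceConfig": {
                        "InstanceCount": 1,
                        "InstanceType": "ml.m5.xlarge",  # assumed instance type
                        "VolumeSizeInGB": 5,
                    },
                    "StoppingCondition": {"MaxRuntimeInSeconds": 3600},
                },
            }
        ],
    }


# All values below are placeholders, not real resources.
definition = build_pipeline_definition(
    role_arn="arn:aws:iam::111122223333:role/ExampleSageMakerRole",
    training_image="<TRAINING_IMAGE_URI>",
    train_s3_uri="s3://example-bucket/train/",
    output_s3_uri="s3://example-bucket/output/",
)
print(json.dumps(definition, indent=2))
```

In practice the ML engineer would normally export this JSON from the SageMaker Python SDK rather than write it by hand; the sketch only shows the overall shape the DevOps engineer receives.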

With SageMaker, ML model artifacts and other system artifacts are encrypted in transit and at rest. SageMaker encrypts these by default using the AWS managed key for Amazon S3. You can optionally specify a custom key using the KmsKeyId property of the OutputDataConfig argument. For more information on how SageMaker protects data, see Data Protection in Amazon SageMaker.
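
To make the KmsKeyId option concrete, the following Python sketch adds a custom key to the OutputDataConfig of a training step's arguments. The helper name, bucket, and key ARN are hypothetical, and the helper assumes the arguments are held in a plain dictionary.

```python
import copy


def with_output_kms_key(step_arguments, kms_key_id):
    """Return a copy of the step arguments with OutputDataConfig.KmsKeyId set."""
    updated = copy.deepcopy(step_arguments)  # leave the caller's dict untouched
    updated.setdefault("OutputDataConfig", {})["KmsKeyId"] = kms_key_id
    return updated


# Placeholder values; replace with your own bucket and KMS key.
arguments = {"OutputDataConfig": {"S3OutputPath": "s3://example-bucket/output/"}}
encrypted = with_output_kms_key(
    arguments,
    "arn:aws:kms:us-east-1:111122223333:key/EXAMPLE-KEY-ID",
)
print(encrypted["OutputDataConfig"])
```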

Additionally, we recommend restricting access to the pipeline artifacts, such as model outputs and training data, to a specific set of IAM roles created for data scientists and ML engineers. This can be achieved by attaching an appropriate bucket policy. For more information on best practices for securing data in Amazon S3, see Top 10 security best practices for securing data in Amazon S3.

Create and submit a pipeline YAML specification

In the Kubernetes world, objects are the persistent entities in the Kubernetes cluster used to represent the state of your cluster. When you create an object in Kubernetes, you must provide the object specification that describes its desired state, as well as some basic information about the object (such as a name). Then, using tools such as kubectl, you provide the information in a manifest file in YAML (or JSON) format to communicate with the Kubernetes API.

Refer to the following Kubernetes YAML specification for a SageMaker pipeline. DevOps engineers need to modify the .spec.pipelineDefinition key in the file and add the ML engineer-provided pipeline JSON definition. They then prepare and submit a separate pipeline execution YAML specification to run the pipeline in SageMaker. There are two ways to submit a pipeline YAML specification:

- Pass the pipeline definition inline as a JSON object to the pipeline YAML specification.

- Convert the JSON pipeline definition into String format using the command line utility jq. For example, you can use the following command to convert the pipeline definition to a JSON-encoded string:
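
If jq is not available, a comparable conversion can be sketched in Python with the standard json module. The function name is ours, and the result is only assumed to serve the same purpose; it is not guaranteed to be byte-identical to what jq produces.

```python
import json


def to_json_encoded_string(definition_text):
    """Parse a pipeline definition and re-serialize it as one compact line."""
    parsed = json.loads(definition_text)  # raises ValueError on malformed JSON
    return json.dumps(parsed, separators=(",", ":"))


# A tiny stand-in document; a real definition would contain steps.
sample = '{\n  "Version": "2020-12-01",\n  "Steps": []\n}'
print(to_json_encoded_string(sample))
# prints: {"Version":"2020-12-01","Steps":[]}
```

Parsing before re-serializing has the side benefit of rejecting malformed JSON early, before the definition ever reaches the cluster.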

In this post, we use the first option and prepare the YAML specification (my-pipeline.yaml) as follows:
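
As a rough sketch of such a specification, the following Python builds a Pipeline manifest and prints it. The apiVersion, kind, and spec field names reflect our understanding of the ACK SageMaker controller's Pipeline resource and should be checked against the controller's reference; the resource name and role ARN are placeholders. The manifest is serialized as JSON, which kubectl also accepts, so that no third-party YAML library is needed.

```python
import json

# Assumed API group and version for ACK SageMaker resources.
ACK_SAGEMAKER_API_VERSION = "sagemaker.services.k8s.aws/v1alpha1"


def build_pipeline_manifest(name, role_arn, definition):
    """Return a Kubernetes manifest (dict) describing a SageMaker pipeline."""
    return {
        "apiVersion": ACK_SAGEMAKER_API_VERSION,
        "kind": "Pipeline",
        "metadata": {"name": name},
        "spec": {
            "pipelineName": name,
            "pipelineDisplayName": name,
            "roleARN": role_arn,
            # The definition is carried as a string under .spec.pipelineDefinition.
            "pipelineDefinition": json.dumps(definition),
        },
    }


# Stand-in definition; use the ML engineer-provided JSON in practice.
definition = {"Version": "2020-12-01", "Steps": []}
manifest = build_pipeline_manifest(
    "my-kubernetes-pipeline",  # hypothetical resource name
    "arn:aws:iam::111122223333:role/ExampleSageMakerRole",  # placeholder ARN
    definition,
)
print(json.dumps(manifest, indent=2))
```

Saving the printed output as my-pipeline.yaml yields a file that can be applied like any other manifest.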

Submit the pipeline to SageMaker

To submit your prepared pipeline specification, apply the specification to your Kubernetes cluster as follows:
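
Applying a manifest is typically done with kubectl apply. The sketch below wraps that call in Python so it can be scripted; the helper names are ours, and run_kubectl assumes kubectl is installed and configured for the target cluster.

```python
import shlex
import subprocess


def kubectl_apply_args(manifest_path):
    """Build the argument list for applying a manifest file."""
    return ["kubectl", "apply", "-f", manifest_path]


def run_kubectl(args):
    """Run a kubectl command and return its stdout.

    Raises subprocess.CalledProcessError if kubectl exits with an error.
    """
    return subprocess.run(args, check=True, capture_output=True, text=True).stdout


args = kubectl_apply_args("my-pipeline.yaml")
print(shlex.join(args))
# prints: kubectl apply -f my-pipeline.yaml
```

Calling run_kubectl(args) against a live cluster submits the specification; the example only prints the command so it can be reviewed first.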

Create and submit a pipeline execution YAML specification

Refer to the following Kubernetes YAML specification for a SageMaker pipeline execution. Prepare the pipeline execution YAML specification (pipeline-execution.yaml) as follows:
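
A pipeline execution specification can be sketched the same way. The PipelineExecution kind and its spec fields are written here as we understand the ACK SageMaker controller to define them; the resource names and description are placeholders, and the output is JSON so that only the standard library is required.

```python
import json


def build_pipeline_execution_manifest(execution_name, pipeline_name, description):
    """Return a Kubernetes manifest (dict) that requests one pipeline run."""
    return {
        "apiVersion": "sagemaker.services.k8s.aws/v1alpha1",  # assumed API version
        "kind": "PipelineExecution",
        "metadata": {"name": execution_name},
        "spec": {
            # Must match the pipelineName of an existing Pipeline resource.
            "pipelineName": pipeline_name,
            "pipelineExecutionDescription": description,
        },
    }


manifest = build_pipeline_execution_manifest(
    "my-kubernetes-pipeline-execution",  # hypothetical resource name
    "my-kubernetes-pipeline",  # hypothetical pipeline name
    "Example pipeline run started from the EKS cluster.",
)
print(json.dumps(manifest, indent=2))
```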

To start a run of the pipeline, use the following code:

Review and troubleshoot the pipeline run

To list all pipelines created using the ACK controller, use the following command:

To list all pipeline runs, use the following command:

To get more details about the pipeline after it’s submitted, such as the status, errors, or parameters of the pipeline, use the following command:

To troubleshoot a pipeline run by reviewing more details about the run, use the following command:
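
The four review tasks above map naturally onto kubectl get and kubectl describe. The following Python sketch prints one plausible command for each; the resource type names pipeline and pipelineexecution are assumed to match the kinds registered by the ACK SageMaker controller, and the resource names are placeholders.

```python
import shlex


def review_commands(pipeline_name, execution_name):
    """Return kubectl argument lists for listing and inspecting pipelines and runs."""
    return {
        "list pipelines": ["kubectl", "get", "pipeline"],
        "list runs": ["kubectl", "get", "pipelineexecution"],
        "describe pipeline": ["kubectl", "describe", "pipeline", pipeline_name],
        "describe run": ["kubectl", "describe", "pipelineexecution", execution_name],
    }


commands = review_commands("my-kubernetes-pipeline", "my-kubernetes-pipeline-execution")
for label, args in commands.items():
    print(f"{label}: {shlex.join(args)}")
```

If another installed controller also registers a resource called pipeline, the fully qualified resource name may be needed to disambiguate.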

Clean up

Use the following command to delete any pipelines you created:

Use the following command to cancel any pipeline runs you started:
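
Cleanup can be expressed as kubectl delete calls on the same resources. In this sketch the resource names are placeholders, and the run is removed before the pipeline it belongs to, an ordering we chose as a precaution rather than a documented requirement.

```python
import shlex


def cleanup_commands(pipeline_name, execution_name):
    """Return kubectl argument lists that remove a run and then its pipeline."""
    return [
        ["kubectl", "delete", "pipelineexecution", execution_name],
        ["kubectl", "delete", "pipeline", pipeline_name],
    ]


for args in cleanup_commands("my-kubernetes-pipeline", "my-kubernetes-pipeline-execution"):
    print(shlex.join(args))
```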

Conclusion

In this post, we presented an example of how ML engineers familiar with Jupyter notebooks and SageMaker environments can efficiently work with DevOps engineers familiar with Kubernetes and related tools to design and maintain an ML pipeline with the right infrastructure for their organization. This lets DevOps engineers manage all the steps of the ML lifecycle with the same set of tools and environment they’re used to, which enables organizations to innovate faster and more efficiently.

Explore the GitHub repository for ACK and the SageMaker controller to start managing your ML operations with Kubernetes.

About the Authors

Pratik Yeole is a Senior Solutions Architect working with global customers, helping customers build value-driven solutions on AWS. He has expertise in MLOps and containers domains. Outside of work, he enjoys time with friends, family, music, and cricket.

Felipe Lopez is a Senior AI/ML Specialist Solutions Architect at AWS. Prior to joining AWS, Felipe worked with GE Digital and SLB, where he focused on modeling and optimization products for industrial applications.
