This post is cowritten by Laura Skylaki, Vaibhav Goswami, Ramdev Wudali and Sahar El Khoury from Thomson Reuters.

Thomson Reuters (TR) is a leading AI and technology company dedicated to delivering trusted content and workflow automation solutions. With over 150 years of expertise, TR provides essential solutions across the legal, tax, accounting, risk, trade, and media sectors in a fast-evolving world.

TR recognized early that AI adoption would fundamentally transform professional work. According to TR’s 2025 Future of Professionals Report, 80% of professionals anticipate AI significantly impacting their work within five years, with projected productivity gains of up to 12 hours per week by 2029. To unlock this potential, TR needed a solution to democratize AI creation across its organization.

In this blog post, we explore how TR addressed key business use cases with Open Arena, a highly scalable and flexible no-code AI solution powered by Amazon Bedrock and other AWS services such as Amazon OpenSearch Service, Amazon Simple Storage Service (Amazon S3), Amazon DynamoDB, and AWS Lambda. We explain how TR used AWS services to build this solution, including how the architecture was designed, the use cases it solves, and the business profiles that use it. The system demonstrates TR’s successful approach of using existing TR services for rapid launches while supporting thousands of users, showing how organizations can democratize AI access and help business profiles (for example, AI explorers and SMEs) create applications without coding expertise.

Introducing Open Arena: No-code AI for all

TR introduced Open Arena so that non-technical professionals can create their own customized AI solutions. With Open Arena, users can work with cutting-edge AI powered by Amazon Bedrock in a no-code environment, exemplifying TR’s commitment to democratizing AI access.

Today, Open Arena supports:

- High adoption: ~70% employee adoption, with 19,000 monthly active users.

- Custom solutions: Thousands of customized AI solutions created without coding, used for internal workflows or integrated into TR products for customers.

- Self-service functionality: 100% self-service functionality, so that users, regardless of technical background, can develop, evaluate, and deploy generative AI solutions.

The Open Arena journey: From prototype to enterprise solution

Conceived as a rapid prototype, Open Arena was developed in under six weeks at the onset of the generative AI boom in early 2023 by TR Labs, TR’s dedicated applied research division focused on the research, development, and application of AI and emerging trends in technology. The goal was to support internal exploration of large language models (LLMs) and to discover unique use cases by combining LLM capabilities with TR company data.

Open Arena’s introduction significantly increased AI awareness, fostered developer-SME collaboration on groundbreaking concepts, and accelerated AI capability development for TR products. Its rapid success and the demand for new features quickly highlighted Open Arena’s potential for AI democratization, so TR developed an enterprise version of Open Arena. Built on the TR AI Platform, the Open Arena enterprise version offers secure, scalable, and standardized services covering the entire AI development lifecycle, significantly accelerating time to production.

The Open Arena enterprise version uses existing platform capabilities for enhanced data access controls, standardized service access, and compliance with TR’s governance and ethical standards. This version introduced self-service capabilities so that every user, regardless of their technical ability, can create, evaluate, and deploy customized AI solutions in a no-code environment.

“The foundation of the AI Platform has always been about empowerment; in the early days it was about empowering Data Scientists, but with the rise of Gen AI, the platform adapted and evolved to empower users of any background to leverage and create AI Solutions.”

– Maria Apazoglou, Head of AI Engineering, CoCounsel

As of July 2025, the TR Enterprise AI Platform consists of 15 services spanning the entire AI development lifecycle and a range of user personas. Open Arena remains one of its most popular services, serving 19,000 users each month, with monthly usage still growing.

Addressing key enterprise AI challenges across user types

Using the TR Enterprise AI Platform, Open Arena has helped thousands of professionals transition to using generative AI. AI-powered innovation is now readily in the hands of everyone, not just AI scientists.

Open Arena successfully addresses four critical enterprise AI challenges:

- Enablement: Supports AI solution building with a consistent LLM and service provider experience, for diverse user personas, including non-technical users.

- Security and quality: Streamlines AI solution quality tracking using evaluation and monitoring services, while complying with data governance and ethics policies.

- Speed and reusability: Automates workflows and reuses existing AI solutions and prompts.

- Resources and cost management: Tracks and displays generative AI solution resource consumption, supporting transparency and efficiency.

The solution currently supports several AI experiences, including tech support, content creation, coding assistance, data extraction and analysis, proofreading, project management, content summarization, personal development, translation, and problem solving, catering to different user needs across the organization.

Figure 1. Examples of Open Arena use cases.

AI explorers use Open Arena to speed up day-to-day tasks, such as summarizing documents, engaging in LLM chat, building custom workflows, and evaluating AI models. AI creators and subject matter experts (SMEs) use Open Arena to build custom AI workflows and experiences and to evaluate solutions without requiring coding knowledge. Meanwhile, developers can rapidly develop and deploy new AI solutions: training models, creating new AI skills, and deploying AI capabilities.

Why Thomson Reuters selected AWS for Open Arena

TR strategically chose AWS as a primary cloud provider for Open Arena based on several critical factors:

- Comprehensive AI/ML capabilities: Amazon Bedrock offers easy access to a choice of high-performing foundation models from leading AI companies like AI21 Labs, Anthropic, Cohere, DeepSeek, Luma AI, Meta, Mistral AI, OpenAI, Qwen, Stability AI, TwelveLabs, Writer, and Amazon. It supports both simple chat and complex RAG workflows, and integrates seamlessly with TR’s existing Enterprise AI Platform.

- Enterprise-grade security and governance: Advanced security controls, model access governed by role-based access control (RBAC), data handling with enhanced security features, single sign-on (SSO), and clear separation of operational and user data across AWS accounts.

- Scalable infrastructure: Serverless architecture for automatic scaling, pay-per-use pricing for cost optimization, and global availability with low latency.

- Existing relationship and expertise: A strong, established relationship between TR and AWS, an existing Enterprise AI Platform on AWS, and deep AWS expertise within TR’s technical teams.
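
Simple chat, the most basic of these workflows, comes down to a single model call. As a minimal sketch (not TR’s implementation), the following sends one chat turn through the Amazon Bedrock Runtime Converse API. The helper name `chat_once` is hypothetical, and the client is passed in as a parameter so the function can be exercised without AWS credentials.

```python
from typing import Any, Dict, Tuple


def chat_once(client: Any, model_id: str, prompt: str,
              max_tokens: int = 512) -> Tuple[str, Dict[str, int]]:
    """Send one user message and return (reply_text, token_usage).

    `client` is expected to be a boto3 "bedrock-runtime" client, or any object
    exposing a compatible `converse` method (handy for testing).
    """
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": max_tokens},
    )
    # The assistant reply is a list of content blocks; join the text blocks.
    blocks = response["output"]["message"]["content"]
    text = "".join(block.get("text", "") for block in blocks)
    # `usage` carries inputTokens / outputTokens / totalTokens for the call.
    return text, response.get("usage", {})
```

In practice you would pass `boto3.client("bedrock-runtime")` and a model ID your account has been granted access to. The returned `usage` map is also the natural input for any per-solution cost tracking.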

“Our long-standing partnership with AWS and their robust, flexible and innovative services made them the natural choice to power Open Arena and accelerate our AI initiatives.”

– Maria Apazoglou, Head of AI Engineering, CoCounsel

Open Arena architecture: Scalability, extensibility, and security

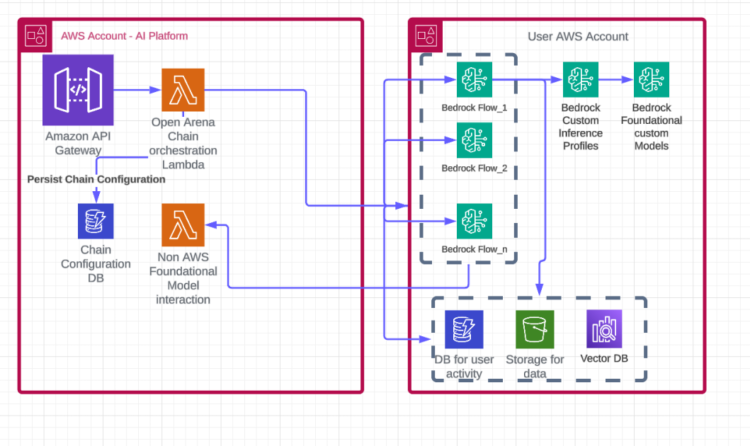

Designed for a broad enterprise audience, Open Arena prioritizes scalability, extensibility, and security while keeping it simple for non-technical users to create and deploy AI solutions. The following diagram illustrates the architecture of Open Arena.

Figure 2. Architecture design of Open Arena.

The architecture delivers enterprise-grade performance with a clear separation between capability and usage, aligning with TR’s enterprise cost and usage tracking requirements.
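
Usage and cost tracking of this kind can start from the token counts Amazon Bedrock returns with each model call. The following is a minimal sketch, assuming every invocation is tagged with a solution ID and a user ID (both hypothetical identifiers, not fields of any AWS API). `UsageLedger` aggregates those counts, and the prices passed to `estimated_cost` are caller-supplied placeholders rather than real Bedrock pricing.

```python
from collections import defaultdict
from typing import Dict, Tuple


class UsageLedger:
    """Accumulates token usage per (solution, user) pair. Illustrative only."""

    def __init__(self) -> None:
        # (solution_id, user_id) -> {"inputTokens": n, "outputTokens": m}
        self._totals: Dict[Tuple[str, str], Dict[str, int]] = defaultdict(
            lambda: {"inputTokens": 0, "outputTokens": 0})

    def record(self, solution_id: str, user_id: str, usage: Dict[str, int]) -> None:
        """Add one invocation's usage, e.g. the `usage` map returned by Converse."""
        bucket = self._totals[(solution_id, user_id)]
        bucket["inputTokens"] += usage.get("inputTokens", 0)
        bucket["outputTokens"] += usage.get("outputTokens", 0)

    def tokens_by_solution(self) -> Dict[str, int]:
        """Total tokens per solution, summed over all of its users."""
        out: Dict[str, int] = defaultdict(int)
        for (solution_id, _user_id), bucket in self._totals.items():
            out[solution_id] += bucket["inputTokens"] + bucket["outputTokens"]
        return dict(out)

    def estimated_cost(self, solution_id: str, usd_per_1k_input: float,
                       usd_per_1k_output: float) -> float:
        """Rough cost for one solution from caller-supplied per-1,000-token prices."""
        cost = 0.0
        for (sid, _user_id), bucket in self._totals.items():
            if sid == solution_id:
                cost += bucket["inputTokens"] / 1000 * usd_per_1k_input
                cost += bucket["outputTokens"] / 1000 * usd_per_1k_output
        return cost
```

Keying on the (solution, user) pair keeps both views cheap to produce: a per-solution roll-up for owners of shared solutions and a per-user view for chargeback.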

The following are key components of the solution architecture:

- No-code interface: Intuitive UI, visual workflow builder, pre-built templates, drag-and-drop functionality.

- Enterprise integration: Seamless integration with TR’s Enterprise AI Platform, SSO, data handling with enhanced security, clear data separation.

- Solution management: Searchable repository, public/private sharing, version control, usage analytics.

TR developed Open Arena using AWS services such as Amazon Bedrock, Amazon OpenSearch Service, Amazon DynamoDB, Amazon API Gateway, AWS Lambda, and AWS Step Functions. It uses Amazon Bedrock for foundation model interactions, supporting simple chat and complex Retrieval-Augmented Generation (RAG) tasks. Open Arena uses Amazon Bedrock Flows as the custom workflow builder, where users can drag and drop components such as prompts, agents, knowledge bases, and Lambda functions to create sophisticated AI workflows without coding. The system also integrates with Amazon OpenSearch Service for knowledge bases and with external APIs for advanced agent capabilities.
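
To make the Bedrock Flows piece concrete, here is a hedged Python sketch of running an already-deployed flow with the `bedrock-agent-runtime` `invoke_flow` API and collecting its outputs from the returned event stream. It assumes the flow’s input node keeps the default name `FlowInputNode` with a `document` output. `run_flow` is a hypothetical helper, not part of Open Arena.

```python
from typing import Any, List


def run_flow(client: Any, flow_id: str, alias_id: str, text: str) -> List[Any]:
    """Invoke a Bedrock flow with one text input and collect all flow outputs.

    `client` should be a boto3 "bedrock-agent-runtime" client (or a test double).
    """
    response = client.invoke_flow(
        flowIdentifier=flow_id,
        flowAliasIdentifier=alias_id,
        inputs=[{
            # Assumed default input-node names; adjust if your flow differs.
            "nodeName": "FlowInputNode",
            "nodeOutputName": "document",
            "content": {"document": text},
        }],
    )
    outputs: List[Any] = []
    # Results arrive as a stream of events: zero or more outputs, then completion.
    for event in response["responseStream"]:
        if "flowOutputEvent" in event:
            outputs.append(event["flowOutputEvent"]["content"]["document"])
        elif "flowCompletionEvent" in event:
            reason = event["flowCompletionEvent"]["completionReason"]
            if reason != "SUCCESS":
                raise RuntimeError(f"Flow ended with completion reason {reason}")
    return outputs
```

Because the whole workflow (prompts, knowledge base lookups, Lambda steps) lives inside the flow definition, the caller’s code stays the same however complex the user’s drag-and-drop workflow becomes.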

For data separation, orchestration is managed in the Enterprise AI Platform AWS account, which captures operational data. Flow instances and user-specific data reside in the user’s dedicated AWS account, stored in a database. Each user’s data and workflow executions are isolated within their respective AWS accounts, which is required to comply with Thomson Reuters data sovereignty and enterprise security policies with strict regional controls. The system integrates with the Thomson Reuters SSO solution to automatically identify users and grant secure, private access to foundation models.
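
One common way to implement this kind of cross-account separation, assumed here rather than taken from TR’s design, is for the central platform account to assume a narrowly scoped IAM role in each user’s account through AWS STS and work with short-lived credentials. The role name and the helper `user_account_session_kwargs` are hypothetical. The returned dictionary is meant to be passed to `boto3.Session(**kwargs)`.

```python
from typing import Any, Dict


def user_account_session_kwargs(sts_client: Any, account_id: str, role_name: str,
                                session_name: str, region: str) -> Dict[str, str]:
    """Assume a role in a user's AWS account and return kwargs for boto3.Session.

    `role_name` is a hypothetical execution role provisioned in every user
    account, whose trust policy allows the central platform account to assume it.
    """
    role_arn = f"arn:aws:iam::{account_id}:role/{role_name}"
    credentials = sts_client.assume_role(
        RoleArn=role_arn,
        RoleSessionName=session_name,  # recorded in CloudTrail for auditing
        DurationSeconds=900,           # shortest credential lifetime STS allows
    )["Credentials"]
    return {
        "aws_access_key_id": credentials["AccessKeyId"],
        "aws_secret_access_key": credentials["SecretAccessKey"],
        "aws_session_token": credentials["SessionToken"],
        # Pin the Region so calls stay where the user's data is allowed to live.
        "region_name": region,
    }
```

With this pattern, the central account never stores long-lived keys for user accounts, and the trust policy in each user account controls exactly which principal can act there.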

The orchestration layer, centrally hosted in the Enterprise AI Platform AWS account, manages AI workflow activities, including scheduling, deployment, resource provisioning, and governance across user environments.

The system provisions Amazon Bedrock Flows fully automatically, directly within each user’s AWS account, which avoids manual setup and accelerates time to value. Using AWS Lambda for serverless compute and DynamoDB for scalable, low-latency storage, the system dynamically allocates resources based on real-time demand. This architecture makes sure that prompt flows and supporting infrastructure are deployed and scaled to match workload fluctuations, optimizing performance, cost, and user experience.
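
Automated provisioning implies that the orchestration layer has to remember where each user’s flow was created. The following is a minimal sketch, assuming a hypothetical DynamoDB table keyed by `user_id` and `solution_id` whose items record the target account, Region, flow ID, and alias ID. A Lambda handler looks the record up with the low-level `get_item` call before an invocation is routed. The table layout, event shape, and `make_handler` factory are illustrative only.

```python
import json
from typing import Any, Callable, Dict


def make_handler(dynamodb: Any, table_name: str) -> Callable[[Dict, Any], Dict]:
    """Build a Lambda handler that resolves where a user's flow is deployed.

    `dynamodb` is a boto3 low-level "dynamodb" client (or a test double).
    """
    def handler(event: Dict, context: Any) -> Dict:
        result = dynamodb.get_item(
            TableName=table_name,
            Key={"user_id": {"S": event["user_id"]},
                 "solution_id": {"S": event["solution_id"]}},
            ConsistentRead=True,  # read the latest provisioning state
        )
        item = result.get("Item")
        if item is None:
            return {"statusCode": 404,
                    "body": json.dumps({"error": "flow not provisioned"})}
        # The low-level client returns typed attributes such as {"S": "value"}.
        target = {name: item[name]["S"]
                  for name in ("account_id", "region", "flow_id", "alias_id")}
        return {"statusCode": 200, "body": json.dumps(target)}

    return handler
```

In a deployed function you would typically build the handler once at module scope, for example `handler = make_handler(boto3.client("dynamodb"), "FlowRegistry")`, so that warm invocations reuse the client.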

“Our decision to adopt a cross-account architecture was driven by a commitment to enterprise security and operational excellence. By isolating orchestration from execution, we make sure that each user’s data remains private and secure within their own AWS account, while still delivering a seamless, centrally managed experience. This design empowers organizations to innovate rapidly without compromising compliance or control.”

– Thomson Reuters’ architecture team

Evolution of Open Arena: From classic to Amazon Bedrock Flows-powered Chain Builder

Open Arena has evolved to cater to varying levels of user sophistication:

- Open Arena v1 (Classic): Features a form-based interface for simple prompt customization and basic AI workflow deployment within a single AWS account. Its simplicity appeals to novice users with straightforward use cases, though its advanced capabilities are limited.

- Open Arena v2 (Chain Builder): Introduces a robust, visual workflow builder interface, enabling users to design complex, multi-step AI workflows using drag-and-drop components. With support for advanced node types, parallel execution, and seamless cross-account deployment, Chain Builder dramatically expands the system’s capabilities and accessibility for non-technical users.

Thomson Reuters uses Amazon Bedrock Flows as a core feature of Chain Builder. Users can define, customize, and deploy AI-driven workflows using Amazon Bedrock models. Bedrock Flows supports advanced workflows that combine multiple prompt nodes, incorporate AWS Lambda functions, and run sophisticated RAG pipelines. Working seamlessly across user AWS accounts, Bedrock Flows enables secure, scalable execution of personalized AI solutions, serving as the engine behind Chain Builder workflows and underpinning TR’s ability to deliver robust, enterprise-grade automation.
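
Under the hood, a visual builder such as Chain Builder ultimately has to emit a flow definition made of nodes and connections. As an illustration only, the following function builds the simplest such chain (an input node, N prompt nodes in sequence, and an output node) in the shape of the `definition` field accepted by the Amazon Bedrock Agents `CreateFlow` API as we understand it. Check the field names against the current API reference. `linear_prompt_chain` is a hypothetical helper, not TR’s code.

```python
from typing import Dict, List


def _data_link(source: str, source_output: str, target: str, target_input: str) -> Dict:
    """A data connection feeding one node's output into another node's input."""
    return {
        "name": f"{source}To{target}",
        "source": source, "target": target, "type": "Data",
        "configuration": {"data": {"sourceOutput": source_output,
                                   "targetInput": target_input}},
    }


def linear_prompt_chain(model_id: str, templates: List[str]) -> Dict:
    """Build Input -> Prompt1 -> ... -> PromptN -> Output.

    Each template should reference the variable {{input}}, which receives the
    previous node's output (the user's text for the first prompt).
    """
    if not templates:
        raise ValueError("need at least one prompt template")
    nodes: List[Dict] = [{
        "name": "FlowInputNode", "type": "Input", "configuration": {"input": {}},
        "outputs": [{"name": "document", "type": "String"}],
    }]
    connections: List[Dict] = []
    prev_node, prev_output = "FlowInputNode", "document"
    for i, template in enumerate(templates, start=1):
        name = f"Prompt{i}"
        nodes.append({
            "name": name, "type": "Prompt",
            "configuration": {"prompt": {"sourceConfiguration": {"inline": {
                "modelId": model_id,
                "templateType": "TEXT",
                "templateConfiguration": {"text": {
                    "text": template, "inputVariables": [{"name": "input"}]}},
            }}}},
            "inputs": [{"name": "input", "type": "String", "expression": "$.data"}],
            "outputs": [{"name": "modelCompletion", "type": "String"}],
        })
        connections.append(_data_link(prev_node, prev_output, name, "input"))
        prev_node, prev_output = name, "modelCompletion"
    nodes.append({
        "name": "FlowOutputNode", "type": "Output", "configuration": {"output": {}},
        "inputs": [{"name": "document", "type": "String", "expression": "$.data"}],
    })
    connections.append(_data_link(prev_node, prev_output, "FlowOutputNode", "document"))
    return {"nodes": nodes, "connections": connections}
```

The resulting dictionary could be passed as `definition` to `boto3.client("bedrock-agent").create_flow(...)` together with a name and an execution role ARN, followed by `prepare_flow` before invocation. Lambda function and knowledge base steps would be added the same way, as further node types wired in with data connections.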

What’s next?

TR continues to expand Open Arena’s capabilities through its strategic partnership with AWS, focusing on:

- Driving further adoption of Open Arena’s DIY capabilities.

- Enhancing flexibility for workflow creation in Chain Builder with custom components, such as inline scripts.

- Developing new templates that represent common tasks and workflows.

- Enhancing collaboration features within Open Arena.

- Extending multimodal capabilities and mannequin integration.

- Expanding into new use cases across the enterprise.

“From innovating new product ideas to reimagining daily tasks for Thomson Reuters employees, we continue to push the boundaries of what’s possible with Open Arena.”

– Maria Apazoglou, Head of AI Engineering, CoCounsel

Conclusion

In this blog post, we explored how Thomson Reuters’ Open Arena demonstrates the successful democratization of AI across an enterprise using AWS services, particularly Amazon Bedrock and Amazon Bedrock Flows. With 19,000 monthly active users and 70% employee adoption, the system shows that no-code AI solutions can deliver enterprise-scale impact while maintaining security and governance standards.

By combining the robust infrastructure of AWS with an innovative architecture design, TR has created a blueprint for AI democratization that empowers professionals across technical skill levels to harness generative AI in their daily work.

As Open Arena continues to evolve, it exemplifies how strategic cloud partnerships can accelerate AI adoption and transform how organizations approach innovation with generative AI.

About the authors

Laura Skylaki, PhD, leads the Enterprise AI Platform at Thomson Reuters, driving the development of GenAI services that accelerate the creation, testing, and deployment of AI solutions, enhancing product value. A recognized expert with a doctorate in stem cell bioinformatics, she has extensive experience in AI research and practical application spanning the legal, tax, and biotech domains. Her machine learning work is published in leading academic journals, and she is a frequent speaker on AI and machine learning.

Vaibhav Goswami is a Lead Software Engineer on the AI Platform team at Thomson Reuters, where he leads the development of the Generative AI Platform that empowers users to build and deploy generative AI solutions at scale. With expertise in building production-grade AI systems, he focuses on creating tools and infrastructure that democratize access to cutting-edge AI capabilities across the enterprise.

Ramdev Wudali is a Distinguished Engineer who helps architect and build the AI/ML Platform that enables enterprise users, data scientists, and researchers to develop generative AI and machine learning solutions by democratizing access to tools and LLMs. In his spare time, he likes folding paper to create origami tessellations and wearing irreverent T-shirts.

As the director of AI Platform Adoption and Training, Sahar El Khoury guides users to seamlessly onboard and successfully use the platform services, drawing on her experience in AI and data analysis across robotics (PhD), financial markets, and media.

Vu San Ha Huynh is a Solutions Architect at AWS with a PhD in Computer Science. He helps large enterprise customers drive innovation across different domains, with a focus on AI/ML and generative AI solutions.

Paul Wright is a Senior Technical Account Manager with over 20 years of experience in the IT industry and over 7 years of dedicated cloud focus. Paul has helped some of the largest enterprise customers grow their business and improve their operational excellence. In his spare time, Paul is a huge soccer and NFL fan.

Mike Bezak is a Senior Technical Account Manager in AWS Enterprise Support. He has over 20 years of experience in information technology, primarily in disaster recovery and systems administration. Mike’s current focus is helping customers streamline and optimize their AWS Cloud journey. Outside of AWS, Mike enjoys spending time with family and friends.
