Contemplate a rising social media platform that processes tens of millions of consumer posts every day. Their content material moderation group faces a well-recognized problem: their rule-based system flags a cooking video discussing “knife strategies” as violent content material, irritating customers, whereas concurrently lacking a veiled menace disguised as a restaurant evaluation. Once they strive a general-purpose AI moderation service, it struggles with their neighborhood’s gaming terminology, flagging discussions about “eliminating opponents” in technique video games whereas lacking precise harassment that makes use of coded language particular to their platform. The moderation group finds themselves caught between consumer complaints about over-moderation and advertiser considerations about dangerous content material slipping by means of—an issue that scales exponentially as their consumer base grows.

This situation illustrates the broader challenges that content material moderation at scale presents for patrons throughout industries. Conventional rule-based approaches and key phrase filters typically wrestle to catch nuanced coverage violations, rising dangerous content material patterns, or contextual violations that require deeper semantic understanding. In the meantime, the amount of user-generated content material continues to develop, making guide moderation more and more impractical and expensive. Clients want adaptable options that may scale with their content material wants whereas sustaining accuracy and reflecting their particular moderation insurance policies.

Whereas general-purpose AI content material moderation providers provide broad capabilities, they usually implement standardized insurance policies that may not align with a buyer’s distinctive necessities. These approaches typically wrestle with domain-specific terminology, complicated coverage edge instances, or culturally-specific content material analysis. Moreover, totally different clients may need various taxonomies for content material annotation and totally different thresholds or boundaries for a similar coverage classes. Consequently, many purchasers discover themselves managing trade-offs between detection capabilities and false positives.

On this publish, we introduce an strategy to content material moderation by means of Amazon Nova customization on Amazon SageMaker AI. With this answer, you’ll be able to fine-tune Amazon Nova for content material moderation duties tailor-made to your necessities. Through the use of domain-specific coaching knowledge and organization-specific moderation tips, this custom-made strategy can ship improved accuracy and coverage alignment in comparison with off-the-shelf options. Our analysis throughout three benchmarks exhibits that custom-made Nova fashions obtain a median enchancment of seven.3% in F1 scores in comparison with the baseline Nova Lite, with particular person enhancements starting from 4.2% to 9.2% throughout totally different content material moderation duties. The custom-made Nova mannequin can detect coverage violations, perceive contextual nuances, and adapt to content material patterns primarily based by yourself dataset.

Key benefits

With Nova customization, you’ll be able to construct textual content content material moderators that ship compelling benefits over various approaches together with coaching from scratch and utilizing a basic basis mannequin. Through the use of pre-trained Nova fashions as a basis, you’ll be able to obtain superior outcomes whereas decreasing complexity, price, and time-to-deployment.

When in comparison with constructing fashions solely from the bottom up, Nova customization supplies a number of key advantages to your group:

- Makes use of pre-existing data: Nova comes with prior data in textual content content material moderation, having been skilled on related datasets, offering a basis for personalization that achieves aggressive efficiency with simply 10,000 situations for SFT.

- Simplified workflow: As an alternative of constructing coaching infrastructure from scratch, you’ll be able to add formatted knowledge and submit a SageMaker coaching job, with coaching code and workflows supplied, finishing coaching in roughly one hour at a price of $55 (primarily based on US East Ohio Amazon EC2 P5 occasion pricing).

- Diminished time and value: Reduces the necessity for intensive computational sources and months of coaching time required for constructing fashions from the bottom up.

Whereas general-purpose basis fashions provide broad capabilities, Nova customization delivers extra focused advantages to your content material moderation use instances:

- Coverage-specific customization: Not like basis fashions skilled with broad datasets, Nova customization fine-tunes to your group’s particular moderation tips and edge instances, attaining 4.2% to 9.2% enhancements in F1 scores throughout totally different content material moderation duties.

- Constant efficiency: Reduces unpredictability from third-party API updates and coverage adjustments that may alter your content material moderation habits.

- Value effectivity: At $0.06 per 1 million enter tokens and $0.24 per 1 million output tokens, Nova Lite supplies important price benefits in comparison with different business basis fashions that spend about 10–100 occasions extra price, delivering substantial price financial savings.

Past particular comparisons, Nova customization provides inherent advantages that apply no matter your present strategy:

- Versatile coverage boundaries: Customized thresholds and coverage boundaries will be managed by means of prompts and taught to the mannequin throughout fine-tuning.

- Accommodates numerous taxonomies: The answer adapts to totally different annotation taxonomies and organizational content material moderation frameworks.

- Versatile knowledge necessities: You need to use your present coaching datasets with proprietary knowledge or use public coaching splits from established content material moderation benchmarks if you happen to don’t have your individual datasets.

Demonstrating content material moderation efficiency with Nova customization

To judge the effectiveness of Nova customization for content material moderation, we developed and evaluated three content material moderation fashions utilizing Amazon Nova Lite as our basis. Our strategy used each proprietary inner content material moderation datasets and established public benchmarks, coaching low-rank adaptation (LoRA) fashions with 10,000 fine-tuning situations—augmenting Nova Lite’s intensive base data with specialised content material moderation experience.

Coaching strategy and mannequin variants

We created three mannequin variants from Nova Lite, every optimized for various content material moderation eventualities that you simply may encounter in your individual implementation:

- NovaTextCM: Educated on our inner content material moderation dataset, optimized for organization-specific coverage enforcement

- NovaAegis: Superb-tuned utilizing Aegis-AI-Content material-Security-2.0 coaching break up, specialised for adversarial immediate detection

- NovaWildguard: Personalized with WildGuardMix coaching break up, designed for content material moderation throughout actual and artificial contents

This multi-variant strategy demonstrates the flexibleness of Nova customization in adapting to totally different content material moderation taxonomies and coverage frameworks which you can apply to your particular use instances.

Complete benchmark analysis

We evaluated our custom-made fashions in opposition to three established content material moderation benchmarks, every representing totally different features of the content material moderation challenges that you simply may encounter in your individual deployments. In our analysis, we computed F1 scores for binary classification, figuring out whether or not every occasion violates the given coverage or not. The F1 rating supplies a balanced measure of precision and recall, which is beneficial for content material moderation the place each false positives (incorrectly flagging protected content material) and false negatives (lacking dangerous content material) carry prices.

- Aegis-AI-Content material-Security-2.0 (2024): A dataset with 2,777 check samples (1,324 protected, 1,453 unsafe) for binary coverage violation classification. This dataset combines artificial LLM-generated and actual prompts from pink teaming datasets, that includes adversarial prompts designed to check mannequin robustness in opposition to bypass makes an attempt. Out there at Aegis-AI-Content material-Security-Dataset-2.0.

- WildGuardMix (2024): An analysis set with 3,408 check samples (2,370 protected, 1,038 unsafe) for binary coverage violation classification. The dataset consists largely of actual prompts with some LLM-generated responses, curated from a number of security datasets and human-labeled for analysis protection. Out there at wildguardmix.

- Jigsaw Poisonous Remark (2018): A benchmark with 63,978 check samples (57,888 protected, 6,090 unsafe) for binary poisonous content material classification. This dataset accommodates actual Wikipedia speak web page feedback and serves as a longtime benchmark within the content material moderation neighborhood, offering insights into mannequin efficiency on genuine user-generated content material. Out there at jigsaw-toxic-comment.

Efficiency achievements

Our outcomes present that Nova customization supplies significant efficiency enhancements throughout all benchmarks which you can count on when implementing this answer. The custom-made fashions achieved efficiency ranges similar to massive business language fashions (referred to right here as LLM-A and LLM-B) whereas utilizing solely a fraction of the coaching knowledge and computational sources.

The efficiency knowledge exhibits important F1 rating enhancements throughout all mannequin variants. NovaLite baseline achieved F1 scores of 0.7822 on Aegis, 0.54103 on Jigsaw, and 0.78901 on Wildguard. NovaTextCM improved to 0.8305 (+6.2%) on Aegis, 0.59098 (+9.2%) on Jigsaw, and 0.83871 (+6.3%) on Wildguard. NovaAegis achieved the very best Aegis efficiency at 0.85262 (+9.0%), with scores of 0.55129 on Jigsaw, and 0.81701 on Wildguard. NovaWildguard scored 0.848 on Aegis, 0.56439 on Jigsaw, and 0.82234 (+4.2%) on Wildguard.

As proven within the previous determine, the efficiency beneficial properties had been noticed throughout all three variants, with every mannequin exhibiting enhancements over the baseline Nova Lite throughout a number of analysis standards:

- NovaAegis achieved the very best efficiency on the Aegis benchmark (0.85262), representing a 9.0% enchancment over Nova Lite (0.7822)

- NovaTextCM confirmed constant enhancements throughout all benchmarks: Aegis (0.8305, +6.2%), Jigsaw (0.59098, +9.2%), and WildGuard (0.83871, +6.3%)

- NovaWildguard carried out nicely on JigSaw (0.56439, +2.3%) and WildGuard (0.82234, +4.2%)

- All three custom-made fashions confirmed beneficial properties throughout benchmarks in comparison with the baseline Nova Lite

These efficiency enhancements recommend that Nova customization can facilitate significant beneficial properties in content material moderation duties by means of focused fine-tuning. The constant enhancements throughout totally different benchmarks point out that custom-made Nova fashions have the potential to exceed the efficiency of business fashions in specialised purposes.

Value-effective large-scale deployment

Past efficiency enhancements, Nova Lite provides important price benefits for large-scale content material moderation deployments which you can benefit from to your group. With low-cost pricing for each enter and output tokens, Nova Lite supplies substantial price benefits in comparison with business basis fashions, delivering price financial savings whereas sustaining aggressive efficiency.

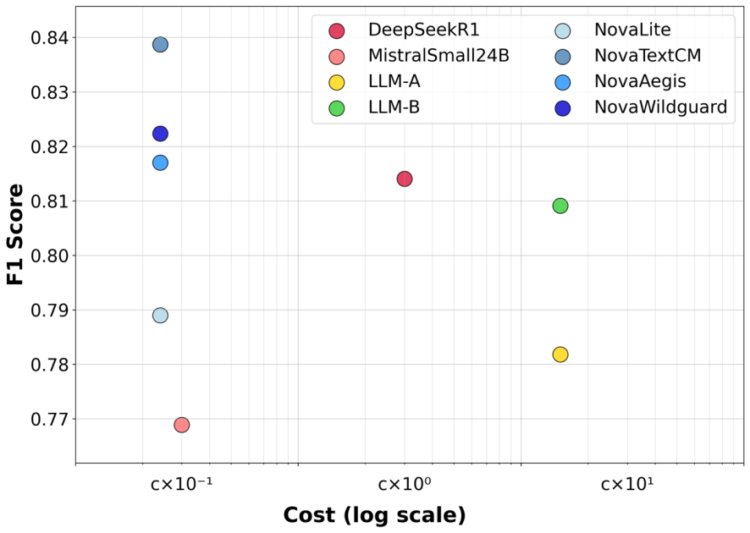

The price-performance evaluation on the WildGuard benchmark reveals compelling benefits for Nova customization which you can notice in your deployments. Your Nova variants obtain superior F1 scores in comparison with business basis fashions whereas working within the low-cost class. For instance, NovaTextCM achieves an F1 rating of 0.83871 on WildGuard whereas working at extraordinarily low price, outperforming LLM-B’s F1 rating of 0.80911 which operates at high-cost pricing—delivering higher efficiency at considerably decrease price.

This price effectivity turns into notably compelling at scale to your group. Once you’re moderating massive volumes of content material every day, the pricing benefit of Nova variants within the low-cost class can translate to substantial operational financial savings whereas delivering superior efficiency. The mixture of higher accuracy and dramatically decrease prices makes Nova customization an economically enticing answer to your enterprise content material moderation wants.

Key coaching insights

We noticed a number of necessary findings for Nova customization that may information your implementation strategy as follows.

- Extra knowledge isn’t essentially higher: We discovered that 10,000 coaching situations represents an acceptable quantity for LoRA adaptation. Once we elevated the coaching knowledge from 10,000 to twenty-eight,000 situations, we noticed proof of overfitting. This discovering means that when utilizing LoRA for fine-tuning, extra coaching situations can harm efficiency, indicating that the pre-existing content material moderation data inbuilt to Nova permits for studying with comparatively small, well-curated datasets.

- Format consistency is necessary: Efficiency degraded when coaching and analysis knowledge codecs had been inconsistent. This highlights the significance of sustaining constant knowledge formatting all through the customization pipeline.

- Activity-specific adaptation: Every mannequin variant carried out finest on benchmarks most just like their coaching knowledge, confirming that focused customization can ship improved outcomes in comparison with general-purpose approaches.

The way to prepare a mannequin with Nova customization

This part supplies a walkthrough for coaching your individual custom-made Nova mannequin for content material moderation. We’ll cowl the information preparation, configuration setup, and coaching execution utilizing SageMaker AI.

Stipulations and setup

Earlier than starting the coaching course of, guarantee you might have adopted the excellent directions in Superb-tuning Amazon Nova fashions utilizing SageMaker coaching jobs. The next examples display the particular configurations we used for our textual content content material moderation fashions.

Coaching knowledge format

Your coaching knowledge have to be formatted as a JSONL file and uploaded to an Amazon Easy Storage Service (Amazon S3) bucket. Every line ought to include an entire dialog following the Amazon Bedrock dialog schema. Right here’s an instance from our coaching dataset:

This format helps be certain that the mannequin learns each the enter construction (content material moderation directions and textual content to judge) and the anticipated output format (structured coverage violation responses).

Coaching configuration

The coaching recipe defines all of the hyperparameters and settings to your Nova customization. Save the next configuration as a YAML file (for instance, text_cm.yaml):

This configuration makes use of LoRA for environment friendly fine-tuning, which considerably reduces coaching time and computational necessities whereas sustaining excessive efficiency.

SageMaker AI coaching job setup

Use the next pocket book code to submit your coaching job to SageMaker AI. This implementation intently follows the pattern pocket book supplied within the official tips, with particular variations for content material moderation:

Essential configuration notes:

Coaching efficiency

With our configuration utilizing LoRA fine-tuning, coaching 10,000 situations on Nova Lite takes roughly one hour utilizing the previous setup. This environment friendly coaching time demonstrates the facility of parameter-efficient fine-tuning mixed with Nova’s pre-existing data base.The comparatively brief coaching period makes it sensible to iterate in your content material moderation insurance policies and retrain fashions as wanted, enabling speedy adaptation to evolving content material challenges.

The way to infer with a custom-made Nova mannequin

After your Nova mannequin has been efficiently skilled for content material moderation, this part guides you thru the analysis and inference course of. We’ll display the best way to benchmark your custom-made mannequin in opposition to established datasets and deploy it for manufacturing use.

Stipulations and setup

Earlier than continuing with mannequin analysis, guarantee you might have adopted the excellent directions in Evaluating your SageMaker AI-trained mannequin. The next examples present the particular configurations we used for benchmarking our content material moderation fashions in opposition to public datasets.

Check knowledge format

Your analysis knowledge ought to be formatted as a JSONL file and uploaded to an S3 bucket. Every line accommodates a query-response pair that represents the enter immediate and anticipated output for analysis. Right here’s an instance from our check dataset:

This format permits the analysis framework to check your mannequin’s generated responses in opposition to the anticipated floor fact labels, enabling correct efficiency measurement throughout totally different content material moderation benchmarks. Observe that the response subject was not used within the inference however included right here to ship the label within the inference output.

Analysis configuration

The analysis recipe defines the inference parameters and analysis settings to your custom-made Nova mannequin. Save the next configuration as a YAML file (for instance, recipe.yaml):

Key configuration notes:

- The

temperature: 0setting ensures deterministic outputs, which is essential for benchmarking

SageMaker analysis job setup

Use the next pocket book code to submit your analysis job to SageMaker. You need to use this setup to benchmark your custom-made mannequin in opposition to the identical datasets utilized in our efficiency analysis:

Essential setup notes:

Clear up

To keep away from incurring extra prices after following together with this publish, it is best to clear up the AWS sources that had been created through the coaching and deployment course of. Right here’s how one can systematically take away these sources:

Cease and delete coaching jobs

After your coaching job finishes, you’ll be able to clear up your coaching job utilizing the next AWS Command Line Interface (AWS CLI) command.

aws sagemaker list-training-jobsaws sagemaker stop-training-job --training-job-name

Delete endpoints, endpoint configs, fashions

These are the large price drivers if left working. It’s best to delete them on this particular order:aws sagemaker delete-endpoint --endpoint-name

aws sagemaker delete-endpoint-config --endpoint-config-name

aws sagemaker delete-model --model-name

Delete in that order:

- endpoint

- config

- mannequin.

Clear up storage and artifacts

Coaching output and checkpoints are saved in Amazon S3. Delete them if not wanted:

aws s3 rm s3://your-bucket-name/path/ --recursive

Extra storage concerns to your cleanup:

- FSx for Lustre (if you happen to connected it for coaching or HyperPod): delete the file system within the FSx console

- EBS volumes (if you happen to spun up notebooks or clusters with connected volumes): verify to substantiate that they aren’t lingering

Take away supporting sources

If you happen to constructed customized Docker photographs for coaching or inference, delete them:

aws ecr delete-repository --repository-name

Different supporting sources to contemplate:

- CloudWatch logs: These don’t often price a lot, however you’ll be able to clear them if desired

- IAM roles: If you happen to created momentary roles for jobs, detach or delete insurance policies if unused

If you happen to used HyperPod

For HyperPod deployments, you also needs to:

- Delete the HyperPod cluster (to the SageMaker console and select HyperPod)

- Take away related VPC endpoints, safety teams, and subnets if devoted

- Delete coaching job sources tied to HyperPod (identical because the earlier: endpoints, configs, fashions, FSx, and so forth)

Analysis efficiency and outcomes

With this analysis setup, processing 100,000 check situations utilizing the skilled Nova Lite mannequin takes roughly one hour utilizing a single p5.48xlarge occasion. This environment friendly inference time makes it sensible to recurrently consider your mannequin’s efficiency as you iterate on coaching knowledge or regulate moderation insurance policies.

Subsequent steps: Deploying your custom-made Nova mannequin

Able to deploy your custom-made Nova mannequin for manufacturing content material moderation? Right here’s the best way to deploy your mannequin utilizing Amazon Bedrock for on-demand inference:

Customized mannequin deployment workflow

After you’ve skilled or fine-tuned your Nova mannequin by means of SageMaker utilizing PEFT and LoRA strategies as demonstrated on this publish, you’ll be able to deploy it in Amazon Bedrock for inference. The deployment course of follows this workflow:

- Create your custom-made mannequin: Full the Nova customization coaching course of utilizing SageMaker together with your content material moderation dataset

- Deploy utilizing Bedrock: Arrange a customized mannequin deployment in Amazon Bedrock

- Use for inference: Use the deployment Amazon Useful resource Identify (ARN) because the mannequin ID for inference by means of the console, APIs, or SDKs

On-demand inference necessities

For on-demand (OD) inference deployment, guarantee your setup meets these necessities:

- Coaching methodology: If you happen to used SageMaker customization, on-demand inference is just supported for Parameter-Environment friendly Superb-Tuned (PEFT) fashions, together with Direct Choice Optimization, when hosted in Amazon Bedrock.

- Deployment platform: Your custom-made mannequin have to be hosted in Amazon Bedrock to make use of on-demand inference capabilities.

Implementation concerns

When deploying your custom-made Nova mannequin for content material moderation, take into account these elements:

- Scaling technique: Use the managed infrastructure of Amazon Bedrock to mechanically scale your content material moderation capability primarily based on demand.

- Value optimization: Reap the benefits of on-demand pricing to pay just for the inference requests you make, optimizing prices for variable content material moderation workloads.

- Integration strategy: Use the deployment ARN to combine your custom-made mannequin into present content material moderation workflows and purposes.

Conclusion

The quick inference velocity of Nova Lite—processing 100,000 situations per hour utilizing a single P5 occasion—supplies important benefits for large-scale content material moderation deployments. With this throughput, you’ll be able to reasonable excessive volumes of user-generated content material in real-time, making Nova customization notably well-suited for platforms with tens of millions of every day posts, feedback, or messages that require quick coverage enforcement.

With the deployment strategy and subsequent steps described on this publish, you’ll be able to seamlessly combine your custom-made Nova mannequin into manufacturing content material moderation programs, benefiting from each the efficiency enhancements demonstrated in our analysis and the managed infrastructure of Amazon Bedrock for dependable, scalable inference.

Concerning the authors

Yooju Shin is an Utilized Scientist on Amazon’s AGI Foundations RAI group. He focuses on auto-prompting for RAI coaching dataset and supervised fine-tuning (SFT) of multimodal fashions. He accomplished his Ph.D. from KAIST in 2023.

Yooju Shin is an Utilized Scientist on Amazon’s AGI Foundations RAI group. He focuses on auto-prompting for RAI coaching dataset and supervised fine-tuning (SFT) of multimodal fashions. He accomplished his Ph.D. from KAIST in 2023.

Chentao Ye is a Senior Utilized Scientist within the Amazon AGI Foundations RAI group, the place he leads key initiatives in post-training recipes and multimodal massive language fashions. His work focuses notably on RAI alignment. He brings deep experience in Generative AI, Multimodal AI, and Accountable AI.

Chentao Ye is a Senior Utilized Scientist within the Amazon AGI Foundations RAI group, the place he leads key initiatives in post-training recipes and multimodal massive language fashions. His work focuses notably on RAI alignment. He brings deep experience in Generative AI, Multimodal AI, and Accountable AI.

Fan Yang is a Senior Utilized Scientist on the Amazon AGI Foundations RAI group, the place he develops multimodal observers for accountable AI programs. He obtained a PhD in Pc Science from the College of Houston in 2020 with analysis centered on false info detection. Since becoming a member of Amazon, he has specialised in constructing and advancing multimodal fashions.

Fan Yang is a Senior Utilized Scientist on the Amazon AGI Foundations RAI group, the place he develops multimodal observers for accountable AI programs. He obtained a PhD in Pc Science from the College of Houston in 2020 with analysis centered on false info detection. Since becoming a member of Amazon, he has specialised in constructing and advancing multimodal fashions.

Weitong Ruan is an Utilized Science Manger on the Amazon AGI Foundations RAI group, the place he leads the event of RAI programs for Nova and bettering Nova’s RAI efficiency throughout SFT. Earlier than becoming a member of Amazon, he accomplished his Ph.D. in Electrical Engineering with specialization in Machine Studying from the Tufts College in Aug 2018.

Weitong Ruan is an Utilized Science Manger on the Amazon AGI Foundations RAI group, the place he leads the event of RAI programs for Nova and bettering Nova’s RAI efficiency throughout SFT. Earlier than becoming a member of Amazon, he accomplished his Ph.D. in Electrical Engineering with specialization in Machine Studying from the Tufts College in Aug 2018.

Rahul Gupta is a senior science supervisor on the Amazon Synthetic Normal Intelligence group heading initiatives on Accountable AI. Since becoming a member of Amazon, he has centered on designing NLU fashions for scalability and velocity. A few of his newer analysis focuses on Accountable AI with emphasis on privateness preserving strategies, equity and federated studying. He obtained his PhD from the College of Southern California in 2016 on decoding non-verbal communications in human interplay. He has printed a number of papers in avenues akin to EMNLP, ACL, NAACL, ACM Facct, IEEE-Transactions of affective computing, IEEE-Spoken language Understanding workshop, ICASSP, Interspeech and Elselvier pc speech and language journal. He’s additionally co-inventor on over twenty 5 patented/patent-pending applied sciences at Amazon.

Rahul Gupta is a senior science supervisor on the Amazon Synthetic Normal Intelligence group heading initiatives on Accountable AI. Since becoming a member of Amazon, he has centered on designing NLU fashions for scalability and velocity. A few of his newer analysis focuses on Accountable AI with emphasis on privateness preserving strategies, equity and federated studying. He obtained his PhD from the College of Southern California in 2016 on decoding non-verbal communications in human interplay. He has printed a number of papers in avenues akin to EMNLP, ACL, NAACL, ACM Facct, IEEE-Transactions of affective computing, IEEE-Spoken language Understanding workshop, ICASSP, Interspeech and Elselvier pc speech and language journal. He’s additionally co-inventor on over twenty 5 patented/patent-pending applied sciences at Amazon.