Analysis papers and engineering paperwork usually comprise a wealth of knowledge within the type of mathematical formulation, charts, and graphs. Navigating these unstructured paperwork to seek out related data is usually a tedious and time-consuming process, particularly when coping with giant volumes of knowledge. Nevertheless, by utilizing Anthropic’s Claude on Amazon Bedrock, researchers and engineers can now automate the indexing and tagging of those technical paperwork. This allows the environment friendly processing of content material, together with scientific formulation and information visualizations, and the inhabitants of Amazon Bedrock Data Bases with acceptable metadata.

Amazon Bedrock is a totally managed service that gives a single API to entry and use numerous high-performing basis fashions (FMs) from main AI corporations. It presents a broad set of capabilities to construct generative AI functions with safety, privateness, and accountable AI practices. Anthropic’s Claude 3 Sonnet presents best-in-class imaginative and prescient capabilities in comparison with different main fashions. It could precisely transcribe textual content from imperfect photos—a core functionality for retail, logistics, and monetary companies, the place AI would possibly glean extra insights from a picture, graphic, or illustration than from textual content alone. The most recent of Anthropic’s Claude fashions reveal a powerful aptitude for understanding a variety of visible codecs, together with photographs, charts, graphs and technical diagrams. With Anthropic’s Claude, you may extract extra insights from paperwork, course of internet UIs and numerous product documentation, generate picture catalog metadata, and extra.

On this submit, we discover how you should utilize these multi-modal generative AI fashions to streamline the administration of technical paperwork. By extracting and structuring the important thing data from the supply supplies, the fashions can create a searchable information base that permits you to shortly find the info, formulation, and visualizations you’ll want to help your work. With the doc content material organized in a information base, researchers and engineers can use superior search capabilities to floor probably the most related data for his or her particular wants. This may considerably speed up analysis and growth workflows, as a result of professionals not must manually sift by means of giant volumes of unstructured information to seek out the references they want.

Resolution overview

This resolution demonstrates the transformative potential of multi-modal generative AI when utilized to the challenges confronted by scientific and engineering communities. By automating the indexing and tagging of technical paperwork, these highly effective fashions can allow extra environment friendly information administration and speed up innovation throughout a wide range of industries.

Along with Anthropic’s Claude on Amazon Bedrock, the answer makes use of the next companies:

- Amazon SageMaker JupyterLab – The SageMakerJupyterLab utility is a web-based interactive growth surroundings (IDE) for notebooks, code, and information. JupyterLab utility’s versatile and in depth interface can be utilized to configure and organize machine studying (ML) workflows. We use JupyterLab to run the code for processing formulae and charts.

- Amazon Easy Storage Service (Amazon S3) – Amazon S3 is an object storage service constructed to retailer and shield any quantity of knowledge. We use Amazon S3 to retailer pattern paperwork which can be used on this resolution.

- AWS Lambda –AWS Lambda is a compute service that runs code in response to triggers resembling adjustments in information, adjustments in utility state, or person actions. As a result of companies resembling Amazon S3 and Amazon Easy Notification Service (Amazon SNS) can immediately set off a Lambda operate, you may construct a wide range of real-time serverless data-processing programs.

The answer workflow comprises the next steps:

- Cut up the PDF into particular person pages and save them as PNG information.

- With every web page:

- Extract the unique textual content.

- Render the formulation in LaTeX.

- Generate a semantic description of every system.

- Generate a proof of every system.

- Generate a semantic description of every graph.

- Generate an interpretation for every graph.

- Generate metadata for the web page.

- Generate metadata for the total doc.

- Add the content material and metadata to Amazon S3.

- Create an Amazon Bedrock information base.

The next diagram illustrates this workflow.

Stipulations

- In the event you’re new to AWS, you first have to create and arrange an AWS account.

- Moreover, in your account underneath Amazon Bedrock, request entry to

anthropic.claude-3-5-sonnet-20241022-v2:0in the event you don’t have it already.

Deploy the answer

Full the next steps to arrange the answer:

- Launch the AWS CloudFormation template by selecting Launch Stack (this creates the stack within the

us-east-1AWS Area):

- When the stack deployment is full, open the Amazon SageMaker AI

- Select Notebooks within the navigation pane.

- Find the pocket book

claude-scientific-docs-notebookand select Open JupyterLab.

- Within the pocket book, navigate to

notebooks/process_scientific_docs.ipynb.

- Select conda_python3 because the kernel, then select Choose.

- Stroll by means of the pattern code.

Rationalization of the pocket book code

On this part, we stroll by means of the pocket book code.

Load information

We use instance analysis papers from arXiv to reveal the potential outlined right here. arXiv is a free distribution service and an open-access archive for almost 2.4 million scholarly articles within the fields of physics, arithmetic, laptop science, quantitative biology, quantitative finance, statistics, electrical engineering and programs science, and economics.

We obtain the paperwork and retailer them underneath a samples folder domestically. Multi-modal generative AI fashions work properly with textual content extraction from picture information, so we begin by changing the PDF to a set of photos, one for every web page.

Get Metadata from formulation

After the picture paperwork can be found, you should utilize Anthropic’s Claude to extract formulation and metadata with the Amazon Bedrock Converse API. Moreover, you should utilize the Amazon Bedrock Converse API to acquire a proof of the extracted formulation in plain language. By combining the system and metadata extraction capabilities of Anthropic’s Claude with the conversational talents of the Amazon Bedrock Converse API, you may create a complete resolution for processing and understanding the knowledge contained inside the picture paperwork.

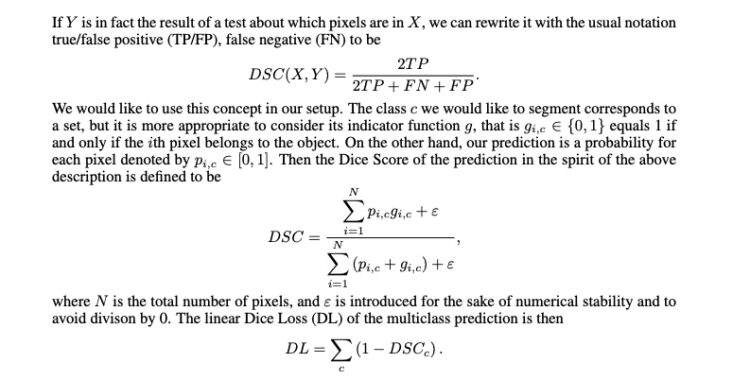

We begin with the next instance PNG file.

We use the next request immediate:

We get the next response, which reveals the extracted system transformed to LaTeX format and described in plain language, enclosed in double greenback indicators.

Get metadata from charts

One other helpful functionality of multi-modal generative AI fashions is the power to interpret graphs and generate summaries and metadata. The next is an instance of how one can acquire metadata of the charts and graphs utilizing easy pure language dialog with fashions. We use the next graph.

We offer the next request:

The response returned offers its interpretation of the graph explaining the color-coded traces and suggesting that general, the DSC mannequin is performing properly on the coaching information, reaching a excessive Cube coefficient of round 0.98. Nevertheless, the decrease and fluctuating validation Cube coefficient signifies potential overfitting and room for enchancment within the mannequin’s generalization efficiency.

Generate metadata

Utilizing pure language processing, you may generate metadata for the paper to help in searchability.

We use the next request:

We get the next response, together with system markdown and an outline.

Use your extracted information in a information base

Now that we’ve ready our information with formulation, analyzed charts, and metadata, we are going to create an Amazon Bedrock information base. It will make the knowledge searchable and allow question-answering capabilities.

Put together your Amazon Bedrock information base

To create a information base, first add the processed information and metadata to Amazon S3:

When your information have completed importing, full the next steps:

- Create an Amazon Bedrock information base.

- Create an Amazon S3 information supply on your information base, and specify hierarchical chunking because the chunking technique.

Hierarchical chunking entails organizing data into nested buildings of kid and father or mother chunks.

The hierarchical construction permits for quicker and extra focused retrieval of related data, first by performing semantic search on the kid chunk after which returning the father or mother chunk throughout retrieval. By changing the kids chunks with the father or mother chunk, we offer giant and complete context to the FM.

Hierarchical chunking is finest fitted to advanced paperwork which have a nested or hierarchical construction, resembling technical manuals, authorized paperwork, or tutorial papers with advanced formatting and nested tables.

Question the information base

You possibly can question the information base to retrieve data from the extracted system and graph metadata from the pattern paperwork. With a question, related chunks of textual content from the supply of knowledge are retrieved and a response is generated for the question, based mostly off the retrieved supply chunks. The response additionally cites sources which can be related to the question.

We use the customized immediate template function of information bases to format the output as markdown:

We get the next response, which offers data on when the Focal Tversky Loss is used.

Clear up

To wash up and keep away from incurring prices, run the cleanup steps within the pocket book to delete the information you uploaded to Amazon S3 together with the information base. Then, on the AWS CloudFormation console, find the stack claude-scientific-doc and delete it.

Conclusion

Extracting insights from advanced scientific paperwork is usually a daunting process. Nevertheless, the appearance of multi-modal generative AI has revolutionized this area. By harnessing the superior pure language understanding and visible notion capabilities of Anthropic’s Claude, now you can precisely extract formulation and information from charts, enabling quicker insights and knowledgeable decision-making.

Whether or not you’re a researcher, information scientist, or developer working with scientific literature, integrating Anthropic’s Claude into your workflow on Amazon Bedrock can considerably enhance your productiveness and accuracy. With the power to course of advanced paperwork at scale, you may concentrate on higher-level duties and uncover invaluable insights out of your information.

Embrace the way forward for AI-driven doc processing and unlock new potentialities on your group with Anthropic’s Claude on Amazon Bedrock. Take your scientific doc evaluation to the following stage and keep forward of the curve on this quickly evolving panorama.

For additional exploration and studying, we suggest testing the next sources:

Concerning the Authors

Erik Cordsen is a Options Architect at AWS serving prospects in Georgia. He’s obsessed with making use of cloud applied sciences and ML to unravel actual life issues. When he’s not designing cloud options, Erik enjoys journey, cooking, and biking.

Erik Cordsen is a Options Architect at AWS serving prospects in Georgia. He’s obsessed with making use of cloud applied sciences and ML to unravel actual life issues. When he’s not designing cloud options, Erik enjoys journey, cooking, and biking.

Renu Yadav is a Options Architect at Amazon Internet Companies (AWS), the place she works with enterprise-level AWS prospects offering them with technical steerage and assist them obtain their enterprise aims. Renu has a powerful ardour for studying together with her space of specialization in DevOps. She leverages her experience on this area to help AWS prospects in optimizing their cloud infrastructure and streamlining their software program growth and deployment processes.

Renu Yadav is a Options Architect at Amazon Internet Companies (AWS), the place she works with enterprise-level AWS prospects offering them with technical steerage and assist them obtain their enterprise aims. Renu has a powerful ardour for studying together with her space of specialization in DevOps. She leverages her experience on this area to help AWS prospects in optimizing their cloud infrastructure and streamlining their software program growth and deployment processes.

Venkata Moparthi is a Senior Options Architect at AWS who empowers monetary companies organizations and different industries to navigate cloud transformation with specialised experience in Cloud Migrations, Generative AI, and safe structure design. His customer-focused strategy combines technical innovation with sensible implementation, serving to companies speed up digital initiatives and obtain strategic outcomes by means of tailor-made AWS options that maximize cloud potential.

Venkata Moparthi is a Senior Options Architect at AWS who empowers monetary companies organizations and different industries to navigate cloud transformation with specialised experience in Cloud Migrations, Generative AI, and safe structure design. His customer-focused strategy combines technical innovation with sensible implementation, serving to companies speed up digital initiatives and obtain strategic outcomes by means of tailor-made AWS options that maximize cloud potential.