Context Engineering by now. This article covers the key ideas behind building LLM applications using Context Engineering principles, visually explains these workflows, and shares code snippets that apply these ideas in practice.

Don’t worry about copy-pasting the code from this article into your editor. At the end of this article, I’ll share the GitHub link to the open-source code repository and a link to my 1-hour 20-minute YouTube course that explains the concepts presented here in greater detail.

Unless otherwise mentioned, all images used in this article are produced by the author and are free to use.

Let’s start!

What’s Context Engineering?

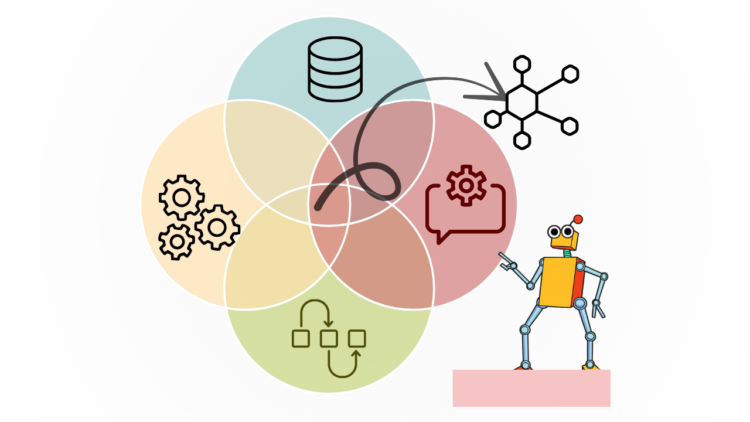

There’s a significant gap between writing simple prompts and building production-ready applications. Context Engineering is an umbrella term that refers to the delicate art and science of fitting information into the context window of an LLM as it works on a task.

The exact scope of where the definition of Context Engineering begins and ends is debatable, but according to this tweet from Andrej Karpathy, we can identify the following key points:

- It is not just atomic prompt engineering, where you ask the LLM one question and get a response.

- It’s a holistic approach that breaks up a larger problem into multiple subproblems.

- These subproblems can be solved by multiple LLMs (or agents) in isolation. Each agent is provided with the appropriate context to carry out its task.

- Each agent can be of appropriate capability and size depending on the complexity of the task.

- The context is not just the information we input. It also includes the intermediate steps each agent takes to complete the task, that is, the intermediate tokens the LLM sees during generation (e.g. reasoning steps, tool results, etc.).

- The agents are connected with control flows, and we orchestrate exactly how information flows through our system.

- The information available to the agents can come from multiple sources: external databases with Retrieval-Augmented Generation (RAG), tool calls (like web search), memory systems, or classic few-shot examples.

- Agents can take actions while generating responses. Each action the agent can take should be well-defined so the LLM can interact with it through reasoning and acting.

- Additionally, systems must be evaluated with metrics and maintained with observability. Monitoring token usage, latency, and cost relative to output quality is a key consideration.

Important: How this article is structured

Throughout this article, I will refer to the points above while providing examples of how they’re applied in building real applications. Whenever I do so, I’ll use a block quote like this:

> It’s a holistic approach that breaks up a larger problem into multiple subproblems

When you see a quote in this format, the example that follows will apply the quoted concept programmatically.

But before that, we must ask ourselves one question…

Why not pass everything into the LLM?

Research has shown that cramming every piece of information into the context of an LLM is far from ideal. Although many frontier models claim to support “long-context” windows, they still suffer from issues like context poisoning or context rot.

(Source: Chroma)

Too much unnecessary information in an LLM’s context can pollute the model’s understanding, lead to hallucinations, and result in poor performance.

This is why simply having a large context window isn’t enough. We need systematic approaches to context engineering.

Why DSPy

For this tutorial, I’ve chosen the DSPy framework. I’ll explain the reasoning for this choice shortly, but let me assure you that the concepts presented here apply to almost any prompting framework, including writing prompts in plain English.

DSPy is a declarative framework for building modular AI software. It neatly separates the two key aspects of any LLM task:

(a) the input and output contracts passed into a module,

and (b) the logic that governs how information flows.

Let’s see an example!

Imagine we want to use an LLM to write a joke. Specifically, we want it to generate a setup, a punchline, and the full delivery in a comedian’s voice.

Oh, and we also want the output in JSON format so that we can post-process individual fields of the dictionary after generation. For example, perhaps we want to print the punchline on a T-shirt (assume someone has already written a convenient function for that).

    import json
    import openai

    system_prompt = """
    You are a comedian who tells jokes, you are always funny.
    Generate the setup, punchline, and full delivery in the comedian's voice.
    Output in the following JSON format:
    {
    "setup": ,
    "punchline": ,
    "delivery":
    }
    Your response should be parsable without errors in Python using json.loads().
    """

    client = openai.Client()
    response = client.chat.completions.create(
        model="gpt-4o-mini",
        temperature=1,
        messages=[
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": "Write a joke about AI"},
        ],
    )

    joke = json.loads(response.choices[0].message.content)  # Hope for the best
    print_on_a_tshirt(joke["punchline"])  # helper assumed to exist elsewhere

Notice how we post-process the LLM’s response to extract the dictionary? What if something “bad” happened, like the LLM failing to generate the response in the desired format? Our whole program would fail and there would be no printing on any T-shirts!

The above code is also quite difficult to extend. For example, if we wanted the LLM to do chain-of-thought reasoning before generating the answer, we would need to write additional logic to parse that reasoning text correctly.

Furthermore, it can be difficult to look at plain English prompts like these and understand what the inputs and outputs of these systems are. DSPy solves all of the above. Let’s write the above example using DSPy.

    import dspy

    class JokeGenerator(dspy.Signature):
        """You're a comedian who tells jokes. You're always funny."""

        query: str = dspy.InputField()
        setup: str = dspy.OutputField()
        punchline: str = dspy.OutputField()
        delivery: str = dspy.OutputField()

    joke_gen = dspy.Predict(JokeGenerator)
    joke_gen.set_lm(lm=dspy.LM("openai/gpt-4.1-mini", temperature=1))

    result = joke_gen(query="Write a joke about AI")
    print(result)
    print_on_a_tshirt(result.punchline)

This approach gives you structured, predictable outputs that you can work with programmatically, eliminating the need for regex parsing or error-prone string manipulation.

DSPy Signatures make you explicitly define the inputs to the system (“query” in the above example) and the outputs of the system (setup, punchline, and delivery), as well as their data types. They also tell the LLM the order in which you want the outputs to be generated.

The dspy.Predict object is an example of a DSPy Module. With modules, you define how the LLM converts inputs into outputs. dspy.Predict is the most basic one: you pass the query to it, as in joke_gen(query="Write a joke about AI"), and it creates a basic prompt to send to the LLM. Internally, DSPy just creates a prompt, as you can see below.

Once the LLM responds, DSPy will create Pydantic BaseModel objects that perform automatic schema validation and send back the output. If errors occur during this validation process, DSPy automatically attempts to fix them by re-prompting the LLM, thereby significantly reducing the risk of a program crash.
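To make the validate-then-re-prompt idea concrete, here is a minimal sketch in plain Python. It is not DSPy's internal implementation: `parse_joke`, `generate_with_retries`, and the scripted `fake_llm` are hypothetical names invented for illustration, and the "model" is a stub that fails once before returning valid JSON.

```python
import json
from typing import Callable

REQUIRED_FIELDS = ("setup", "punchline", "delivery")

def parse_joke(raw: str) -> dict:
    """Parse the raw LLM text and check that every required field is a string."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for field in REQUIRED_FIELDS:
        if not isinstance(data.get(field), str):
            raise ValueError(f"missing or non-string field: {field}")
    return data

def generate_with_retries(llm: Callable[[str], str], prompt: str, max_retries: int = 2) -> dict:
    """Call the LLM, validate the output, and re-prompt with the error on failure."""
    current_prompt = prompt
    for _ in range(max_retries + 1):
        raw = llm(current_prompt)
        try:
            return parse_joke(raw)
        except ValueError as err:
            # Feed the validation error back so the next attempt can correct it
            current_prompt = f"{prompt}\n\nYour previous output was invalid ({err}). Return valid JSON only."
    raise RuntimeError(f"no valid output after {max_retries + 1} attempts")

# Hypothetical stand-in for a model: fails once, then returns valid JSON
_replies = iter([
    "Sure! Here is a joke...",
    '{"setup": "Why did the AI cross the road?", "punchline": "It was trained to.", "delivery": "So..."}',
])

def fake_llm(prompt: str) -> str:
    return next(_replies)

joke = generate_with_retries(fake_llm, "Write a joke about AI")
print(joke["punchline"])
```

The key design choice is that the error message becomes part of the next prompt, so the retry carries more context than the first attempt.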

Another common theme in context engineering is Chain of Thought. Here, we want the LLM to generate reasoning text before providing its final answer. This allows the LLM’s context to be populated with its self-generated reasoning before it generates the final output tokens.

To do that, you can simply replace dspy.Predict with dspy.ChainOfThought in the example above. The rest of the code stays the same. Now you can see that the LLM generates reasoning before the defined output fields.

Multi-Step Interactions and Agentic Workflows

The best part of DSPy’s approach is how it decouples system dependencies (Signatures) from control flows (Modules), which makes writing code for multi-step interactions trivial (and fun!). In this section, let’s see how we can build some simple agentic flows.

Sequential Processing

Let’s remind ourselves of one of the key components of Context Engineering.

> It’s a holistic approach that breaks up a larger problem into multiple subproblems

Let’s continue with our joke generation example. We can easily separate out two subproblems from it. Generating the idea is one, creating the joke is another.

Let’s have two agents, then: the first agent generates a joke idea (setup, contradiction, and punchline) from a query. A second agent then generates the joke from this idea.

> Each agent can be of appropriate capability and size depending on the complexity of the task

We’re also running the first agent with gpt-4.1-mini and the second agent with the more powerful gpt-4.1.

Notice how we wrote our own dspy.Module called JokeGenerator. Here we use two separate DSPy modules, query_to_idea and idea_to_joke, to convert our original query into a JokeIdea and subsequently into a joke (as pictured above).

    import dspy
    from pydantic import BaseModel

    class JokeIdea(BaseModel):
        setup: str
        contradiction: str
        punchline: str

    class QueryToIdea(dspy.Signature):
        """Generate a joke idea with setup, contradiction, and punchline."""

        query = dspy.InputField()
        joke_idea: JokeIdea = dspy.OutputField()

    class IdeaToJoke(dspy.Signature):
        """Convert a joke idea into a full comedian delivery."""

        joke_idea: JokeIdea = dspy.InputField()
        joke = dspy.OutputField()

    class JokeGenerator(dspy.Module):
        def __init__(self):
            self.query_to_idea = dspy.Predict(QueryToIdea)
            self.idea_to_joke = dspy.Predict(IdeaToJoke)

            # Smaller model for ideation, larger model for the final delivery
            self.query_to_idea.set_lm(lm=dspy.LM("openai/gpt-4.1-mini"))
            self.idea_to_joke.set_lm(lm=dspy.LM("openai/gpt-4.1"))

        def forward(self, query):
            idea = self.query_to_idea(query=query)
            joke = self.idea_to_joke(joke_idea=idea.joke_idea)
            return joke

Iterative Refinement

You can also implement iterative improvement, where the LLM reflects on and refines its outputs. For example, we can write a refinement module whose context is the output of a previous LM and which acts as a feedback provider. The first LM can take this feedback as input and iteratively improve its response.

[Figure: … iteratively improve the final joke. (Source: Author)]
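The refinement loop described in this section can be sketched without any framework. This is an illustration under stated assumptions rather than the code from the repository: `refine`, `stub_generate`, and `stub_critique` are hypothetical names, and the two stubs stand in for a generator LM and a feedback LM.

```python
from typing import Callable

def refine(
    generate: Callable[[str, str], str],         # (query, feedback) -> draft
    critique: Callable[[str], tuple[int, str]],  # draft -> (score, feedback)
    query: str,
    max_rounds: int = 3,
    target_score: int = 8,
) -> tuple[str, int]:
    """Alternate between drafting and critiquing until the score is good enough."""
    feedback = ""
    best_draft, best_score = "", -1
    for _ in range(max_rounds):
        draft = generate(query, feedback)
        score, feedback = critique(draft)
        if score > best_score:
            best_draft, best_score = draft, score
        if score >= target_score:
            break  # stop early once the critic is satisfied
    return best_draft, best_score

# Hypothetical stubs standing in for two LLM calls
def stub_generate(query: str, feedback: str) -> str:
    return f"{query} joke" + (" (punchier)" if feedback else "")

def stub_critique(draft: str) -> tuple[int, str]:
    return (9, "") if "punchier" in draft else (5, "Make it punchier")

final_joke, final_score = refine(stub_generate, stub_critique, "AI")
print(final_joke, final_score)
```

Capping the number of rounds and keeping the best draft seen so far guards against a critic that never gets satisfied.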

Conditional Branching and Multi-Output Systems

> The agents are connected with control flows, and we orchestrate exactly how information flows through our system

Sometimes you want your agent to output multiple variations and then select the best among them. Let’s look at an example of that.

Here we first define a joke judge: it takes multiple joke ideas as input and picks the index of the best one. That idea is then passed into the next stage.

    import asyncio

    num_samples = 5

    class JokeJudge(dspy.Signature):
        """Given a list of joke ideas, you must pick the best joke"""

        joke_ideas: list[JokeIdea] = dspy.InputField()
        best_idx: int = dspy.OutputField(
            le=num_samples,
            ge=1,
            description="The 1-based index of the funniest joke")

    class ConditionalJokeGenerator(dspy.Module):
        def __init__(self):
            self.query_to_idea = dspy.ChainOfThought(QueryToIdea)
            self.judge = dspy.ChainOfThought(JokeJudge)
            self.idea_to_joke = dspy.ChainOfThought(IdeaToJoke)

        async def forward(self, query):
            # Generate multiple ideas in parallel
            predictions = await asyncio.gather(*[
                self.query_to_idea.acall(query=query)
                for _ in range(num_samples)
            ])
            # Each prediction wraps one JokeIdea
            ideas = [pred.joke_idea for pred in predictions]

            # Judge and rank ideas
            best_idx = (await self.judge.acall(joke_ideas=ideas)).best_idx

            # Pick the best idea (the judge's index is 1-based, Python lists are 0-based)
            best_idea = ideas[best_idx - 1]

            # Convert from idea to joke
            return await self.idea_to_joke.acall(joke_idea=best_idea)

Tool Calling

LLM applications often need to interact with external systems. This is where tool calling steps in. You can think of a tool as any Python function. You just need two things to define a Python function as an LLM tool:

- A description of what the function does

- A list of inputs and their data types
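Both ingredients are already present on a well-written Python function: the docstring is the description, and the type hints are the input list. The sketch below shows one way to extract them with the standard `inspect` module. `describe_tool` and `get_weather` are hypothetical names for illustration, and the resulting dict is not the schema format of any particular framework.

```python
import inspect
from typing import Callable

def describe_tool(fn: Callable) -> dict:
    """Build a plain-dict tool description from a function's docstring and type hints."""
    signature = inspect.signature(fn)
    params = {
        name: getattr(param.annotation, "__name__", str(param.annotation))
        for name, param in signature.parameters.items()
    }
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": params,
    }

def get_weather(city: str, days: int) -> str:
    """Return a short weather forecast for a city."""
    return f"Sunny in {city} for {days} days"  # placeholder implementation

tool_spec = describe_tool(get_weather)
print(tool_spec)
```

This is why clear docstrings and type annotations matter for tools: they are the only context the LLM gets about what the function does.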

Let’s see an example of fetching news. We first write a simple Python function that uses Tavily. The function takes a search query as input and fetches recent news articles from the last 7 days.

    import os
    from tavily import TavilyClient

    tavily_client = TavilyClient(api_key=os.getenv("TAVILY_API_KEY"))

    def fetch_recent_news(query: str) -> list[str]:
        """Inputs a query string, searches for news and returns top results."""
        response = tavily_client.search(query, search_depth="advanced",
                                        topic="news", days=7, max_results=3)
        return [x["content"] for x in response["results"]]

Now let’s use dspy.ReAct (REasoning and ACTing). The module automatically reasons about the user’s query, decides when to call which tools, and incorporates the tool results into the final response. Doing this is quite easy:

    class HaikuGenerator(dspy.Signature):
        """
        Generates a haiku about the latest news on the query.
        Also create a simple file where you save the final summary.
        """

        query = dspy.InputField()
        summary = dspy.OutputField(desc="A summary of the latest news")
        haiku = dspy.OutputField()

    program = dspy.ReAct(signature=HaikuGenerator,
                         tools=[fetch_recent_news],
                         max_iters=2)
    program.set_lm(lm=dspy.LM("openai/gpt-4.1", temperature=0.7))

    pred = program(query="OpenAI")

When the above code runs, the LLM first reasons about what the user wants and which tool to call (if any). Then it generates the name of the function and the arguments to call it with.

We call the news function with the generated arguments and execute it to fetch the news data. This information is passed back into the LLM. The LLM then decides whether to call more tools or to “finish”. If the LLM reasons that it has enough information to answer the user’s original request, it chooses to finish and generates the answer.
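The reason, act, observe cycle described above can be written as a small loop. This is a simplified sketch, not how dspy.ReAct is implemented: `react_loop`, `search_news`, and `scripted_policy` are hypothetical names, and the scripted policy stands in for the LLM's decision at each step.

```python
from typing import Callable

def react_loop(
    decide: Callable[[list[dict]], dict],
    tools: dict[str, Callable[..., str]],
    query: str,
    max_iters: int = 3,
) -> tuple[str, list[dict]]:
    """Run a reason-act loop: the policy picks a tool or finishes; observations are fed back."""
    trajectory: list[dict] = [{"role": "user", "content": query}]
    for _ in range(max_iters):
        step = decide(trajectory)  # e.g. {"tool": "search_news", "args": {...}}
        if step["tool"] == "finish":
            return step["args"]["answer"], trajectory
        observation = tools[step["tool"]](**step["args"])
        # The tool result becomes part of the context for the next decision
        trajectory.append({"role": "tool", "name": step["tool"], "content": observation})
    return "Stopped: iteration budget exhausted", trajectory

# Hypothetical tool and scripted policy standing in for the LLM
def search_news(query: str) -> str:
    return f"Top headline about {query}"

def scripted_policy(trajectory: list[dict]) -> dict:
    if trajectory[-1]["role"] == "user":
        return {"tool": "search_news", "args": {"query": trajectory[-1]["content"]}}
    return {"tool": "finish", "args": {"answer": f"Summary: {trajectory[-1]['content']}"}}

answer, steps = react_loop(scripted_policy, {"search_news": search_news}, "OpenAI")
print(answer)
```

The iteration cap plays the same role as max_iters in the DSPy example: it bounds cost and latency if the model never decides to finish.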

> Agents can take actions while generating responses. Each action the agent can take should be well-defined so the LLM can interact with it through reasoning and acting.

Advanced Tool Usage: Scratchpad and File I/O

An evolving standard for modern applications is to give LLMs access to the file system, allowing them to read and write files, move between directories (with appropriate restrictions), grep and search text within files, and even run terminal commands!

This pattern opens up a ton of possibilities. It transforms the LLM from a passive text generator into an active agent capable of performing complex, multi-step tasks directly within a user’s environment. For example, just displaying the list of tools available to Gemini CLI reveals a short but incredibly powerful collection of tools.
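The “appropriate restrictions” part deserves a concrete shape. Below is a minimal sketch of file tools confined to one directory, assuming a hypothetical `Workspace` class that is not taken from the repository or from Gemini CLI. Every path is resolved and rejected if it escapes the root.

```python
import tempfile
from pathlib import Path

class Workspace:
    """File tools restricted to a single root directory."""

    def __init__(self, root: str):
        self.root = Path(root).resolve()

    def _resolve(self, relative_path: str) -> Path:
        path = (self.root / relative_path).resolve()
        # Reject paths that escape the workspace, e.g. "../secrets.txt"
        if path != self.root and self.root not in path.parents:
            raise PermissionError(f"path escapes workspace: {relative_path}")
        return path

    def write_file(self, relative_path: str, content: str) -> str:
        path = self._resolve(relative_path)
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(content, encoding="utf-8")
        return f"wrote {len(content)} characters to {relative_path}"

    def read_file(self, relative_path: str) -> str:
        return self._resolve(relative_path).read_text(encoding="utf-8")

workspace = Workspace(tempfile.mkdtemp())
print(workspace.write_file("notes/summary.txt", "OpenAI news summary"))
print(workspace.read_file("notes/summary.txt"))
```

Methods like `workspace.read_file` and `workspace.write_file` could then be handed to an agent as tools, with the sandboxing enforced in code rather than in the prompt.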

A quick word on MCP Servers

Another new paradigm in the space of agentic systems is MCP servers. MCPs need their own dedicated article, so I won’t go over them in detail in this one.

MCP has quickly become the industry-standard way to serve specialized tools to LLMs. It follows the classic client-server architecture, where the LLM (a client) sends a request to the MCP server, and the MCP server carries out the requested action and returns a result to the LLM for downstream processing. MCPs are a good fit for context engineering because you can declare system prompt formats, resources, restricted database access, and so on for your application.

This repository has a great list of MCP servers that you can study to connect your LLM applications with a wide variety of applications.

Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation has become a cornerstone of modern AI application development. It’s an architectural approach that injects external, up-to-date information that is relevant to the user’s query into the context of a Large Language Model (LLM).

RAG pipelines consist of a preprocessing phase and an inference-time phase. During preprocessing, we process the reference data corpus and save it in a queryable format. In the inference phase, we process the user query, retrieve relevant documents from our database, and pass them into the LLM to generate a response.
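The two phases can be sketched with nothing but the standard library. This toy version uses a keyword inverted index in place of a vector database, which is a deliberate simplification. `build_index`, `retrieve`, and `build_prompt` are hypothetical helper names, and the final LLM call is left out.

```python
import re
from collections import defaultdict

def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def build_index(corpus: list[str]) -> dict[str, set[int]]:
    """Preprocessing phase: map each token to the ids of the chunks containing it."""
    index: dict[str, set[int]] = defaultdict(set)
    for chunk_id, chunk in enumerate(corpus):
        for token in tokenize(chunk):
            index[token].add(chunk_id)
    return index

def retrieve(query: str, corpus: list[str], index: dict[str, set[int]], k: int = 2) -> list[str]:
    """Inference phase: rank chunks by the number of query tokens they share."""
    scores: dict[int, int] = defaultdict(int)
    for token in tokenize(query):
        for chunk_id in index.get(token, ()):
            scores[chunk_id] += 1
    ranked = sorted(scores, key=lambda cid: (-scores[cid], cid))
    return [corpus[cid] for cid in ranked[:k]]

def build_prompt(query: str, documents: list[str]) -> str:
    """Place the retrieved documents into the context ahead of the question."""
    context = "\n".join(f"- {doc}" for doc in documents)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

corpus = [
    "DSPy separates signatures from modules.",
    "BM25 is a keyword-based ranking function.",
    "Haikus have three lines.",
]
index = build_index(corpus)
docs = retrieve("What is BM25 ranking?", corpus, index, k=1)
print(build_prompt("What is BM25 ranking?", docs))
```

The index is built once, offline, while retrieval and prompt assembly run per query. A production system would swap the token overlap score for embeddings, BM25, or both.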

> The information available to the agents can come from multiple sources: external databases with Retrieval-Augmented Generation (RAG), tool calls (like web search), memory systems, or classic few-shot examples.

Building RAG systems is difficult, and a lot of great research and engineering optimizations have made life easier. I made a 17-minute video that covers all the aspects of building a reliable RAG pipeline.

Some practical tips for good RAG

- When preprocessing, generate additional metadata per chunk. This can be as simple as “questions this chunk answers”. When saving the chunks to your database, also save the generated metadata!

      class ChunkAnnotator(dspy.Signature):
          chunk: str = dspy.InputField()
          possible_questions: list[str] = dspy.OutputField(
              description="list of questions that this chunk answers"
          )

- Query Rewriting: Directly using the user’s query to do RAG retrieval is often a bad idea. Users write quite random things, which may not match the distribution of text in your corpus. Query rewriting does what it says: it “rewrites” the query, perhaps fixing grammar and spelling errors, contextualizing it with the past conversation, or even adding extra keywords that make querying easier.

      class QueryRewriting(dspy.Signature):
          user_query: str = dspy.InputField()
          conversation: str = dspy.InputField(
              description="The conversation so far")
          modified_query: str = dspy.OutputField(
              description="a query contextualizing the user query with the conversation's context and optimized for retrieval search"
          )

- HyDE, or Hypothetical Document Embedding, is a type of query rewriting system. In HyDE, we generate an artificial (or hypothetical) answer from the LLM’s internal knowledge. This response often contains important keywords that directly match the answers database. Vanilla query rewriting is great for searching a database of questions, and HyDE is great for searching a database of answers.

- Hybrid search is almost always better than purely semantic or purely keyword-based search. For semantic search, I’d use cosine-similarity nearest-neighbor search with vector embeddings. For keyword search, use BM25.

- RRF: You can choose multiple strategies to retrieve documents, and then use reciprocal rank fusion to combine them into one unified list!

- Multi-Hop Search is an option to consider as well if you can afford additional latency. Here, you pass the retrieved documents back into the LLM to generate new queries, which are used to conduct additional searches on the database.

      class MultiHopHyDESearch(dspy.Module):
          def __init__(self, retriever):
              # QueryGeneration is a signature assumed to be defined elsewhere
              self.generate_queries = dspy.ChainOfThought(QueryGeneration)
              self.retriever = retriever

          def forward(self, query, n_hops=3):
              results = []
              for hop in range(n_hops):  # Notice we loop multiple times
                  # Generate optimized search queries, conditioned on what was retrieved so far
                  search_queries = self.generate_queries(
                      query=query,
                      previous_jokes=results
                  )

                  # Retrieve using both semantic and keyword search
                  semantic_results = self.retriever.semantic_search(
                      search_queries.semantic_query
                  )
                  bm25_results = self.retriever.bm25_search(
                      search_queries.bm25_query
                  )

                  # Fuse results (reciprocal_rank_fusion is assumed to be defined elsewhere)
                  hop_results = reciprocal_rank_fusion([
                      semantic_results, bm25_results
                  ])
                  results.extend(hop_results)

              return results

- Citations: When asking an LLM to generate responses from the retrieved documents, we can also ask it to cite references to the documents it found useful. This allows the LLM to first generate a plan of how it’s going to use the retrieved content.

- Memory: If you are building a chatbot, it is important to figure out the question of memory. You can think of memory as a combination of retrieval and tool calling. A well-known system is Mem0. The LLM observes new data and calls tools to decide if it needs to add or modify its existing memories. During question answering, it retrieves relevant memories using RAG to generate answers.
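Since reciprocal rank fusion comes up in both the RRF tip and the multi-hop example, here is a minimal sketch of what such a helper might look like. It is not necessarily the implementation in the repository. Each document receives a score of 1 / (k + rank) from every list it appears in, and k = 60 is a commonly used default that I am assuming here.

```python
from collections import defaultdict

def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse ranked lists: each document scores sum(1 / (k + rank)) over the lists it appears in."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by fused score (descending); break ties by document id for determinism
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))

# Hypothetical document ids returned by two different retrievers
semantic_results = ["doc_a", "doc_b", "doc_c"]
bm25_results = ["doc_b", "doc_d", "doc_a"]

fused = reciprocal_rank_fusion([semantic_results, bm25_results])
print(fused)
```

RRF only needs ranks, not raw scores, which is what makes it convenient for combining retrievers whose scores live on different scales (cosine similarity versus BM25).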

Best Practices and Production Considerations

This section is not directly about Context Engineering, but more about best practices for building LLM apps for production.

> Additionally, systems must be evaluated with metrics and maintained with observability. Monitoring token usage, latency, and cost relative to output quality is a key consideration.

1. Design Evaluation First

Before building features, decide how you’ll measure success. This helps scope your application and guides optimization decisions.

- If you can design verifiable or objective rewards, that’s the best. (Example: classification tasks where you have a validation dataset.)

- If not, can you define functions that heuristically evaluate LLM responses for your use case? (Example: the number of times a specific chunk is retrieved given a question.)

- If not, can you get humans to annotate your LLM’s responses?

- If nothing works, use an LLM as a judge to evaluate responses. In most cases, you want to set up your evaluation task as a comparison study, where the judge receives multiple responses produced using different hyperparameters/prompts and must rank which ones are the best.
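For the first and best case, an objective metric over a validation set, the evaluation harness can be very small. The sketch below assumes a hypothetical sentiment classification task. `accuracy`, `validation_set`, and `stub_classifier` are illustrative names, and the rule-based classifier stands in for an LLM-backed module.

```python
from typing import Callable

def accuracy(predict: Callable[[str], str], dataset: list[tuple[str, str]]) -> float:
    """Fraction of validation examples where the prediction matches the label."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    correct = sum(predict(text).strip().lower() == label for text, label in dataset)
    return correct / len(dataset)

# Hypothetical validation set and a rule-based stand-in for an LLM classifier
validation_set = [
    ("I loved this joke", "positive"),
    ("That was painfully unfunny", "negative"),
    ("Great punchline", "positive"),
    ("I hated the delivery", "negative"),
]

def stub_classifier(text: str) -> str:
    return "positive" if any(w in text.lower() for w in ("loved", "great")) else "negative"

print(accuracy(stub_classifier, validation_set))
```

Because `predict` is just a callable, the same harness can compare different prompts, models, or modules on identical data before you commit to one.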

3. Use Structured Outputs Almost Everywhere

Always prefer structured outputs over free-form text. It makes your system more reliable and easier to debug. You can add validation and retries as well!

4. Design for Failure

When designing prompts or DSPy modules, make sure you always consider “what happens if things go wrong?”

As with any good software, cutting down error states and failing with swagger is the ideal scenario.
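One simple way to cut down error states is to wrap a pipeline in a chain of fallbacks. This is a sketch under stated assumptions, not a pattern taken from DSPy: `with_fallback`, `flaky_pipeline`, and `simple_pipeline` are hypothetical names, and both pipelines are stubs.

```python
from typing import Callable

def with_fallback(
    primary: Callable[[str], str],
    fallback: Callable[[str], str],
    default: str = "Sorry, I could not produce an answer.",
) -> Callable[[str], str]:
    """Try the primary pipeline, then a simpler fallback, then a safe default."""
    def run(query: str) -> str:
        for step in (primary, fallback):
            try:
                answer = step(query)
                if answer.strip():  # treat empty output as a failure too
                    return answer
            except Exception:
                continue  # in a real app, log the exception here
        return default
    return run

# Hypothetical pipelines: the primary one always times out
def flaky_pipeline(query: str) -> str:
    raise TimeoutError("model did not respond")

def simple_pipeline(query: str) -> str:
    return f"Short answer about {query}"

answer_fn = with_fallback(flaky_pipeline, simple_pipeline)
print(answer_fn("context engineering"))
```

The user always gets a response, and the failure is contained at one well-defined boundary instead of crashing the whole program.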

5. Monitor Everything

DSPy integrates with MLflow to track:

- Individual prompts passed into the LLM and their responses

- Token usage and costs

- Latency per module

- Success/failure rates

- Model performance over time

Langfuse and Logfire are equally great alternatives.
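To show what these tools record on your behalf, here is a hand-rolled sketch of per-module monitoring. It does not use MLflow, Langfuse, or Logfire. `monitored`, `call_log`, and `stub_module` are hypothetical names, and word counts are only a crude stand-in for real token counts.

```python
import time
from functools import wraps

call_log: list[dict] = []

def monitored(name: str):
    """Decorator that records latency, outcome, and rough input/output size per call."""
    def decorator(fn):
        @wraps(fn)
        def wrapper(prompt: str) -> str:
            start = time.perf_counter()
            record = {"module": name, "prompt_words": len(prompt.split()), "ok": False}
            try:
                output = fn(prompt)
                record["ok"] = True
                record["output_words"] = len(output.split())
                return output
            finally:
                # Runs on success and on failure, so failed calls are logged too
                record["latency_s"] = time.perf_counter() - start
                call_log.append(record)
        return wrapper
    return decorator

@monitored("joke_generator")
def stub_module(prompt: str) -> str:
    return "Why did the AI cross the road? It was trained to."  # stand-in for an LLM call

stub_module("Write a joke about AI")
print(call_log[-1])
```

In practice you would let a dedicated observability tool collect this, but the record above is roughly the minimum worth tracking for each module.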

Outro

Context engineering represents a paradigm shift from simple prompt engineering to building comprehensive and modular LLM applications.

The DSPy framework provides the tools and abstractions needed to implement these patterns systematically. As LLM capabilities continue to evolve, context engineering will become increasingly crucial for building applications that effectively leverage the power of large language models.

To watch the full video course on which this article is based, please visit this YouTube link.

To access the full GitHub repo, visit:

https://github.com/avbiswas/context-engineering-dspy

References

Author’s YouTube channel: https://www.youtube.com/@avb_fj

Author’s Patreon: www.patreon.com/NeuralBreakdownwithAVB

Author’s Twitter (X) account: https://x.com/neural_avb

Full Context Engineering video course: https://youtu.be/5Bym0ffALaU

GitHub link: https://github.com/avbiswas/context-engineering-dspy