The backbone of this application is the agents and their interactions. Overall, we had two different types of agents:

- User Agents: Agents attached to each user, primarily tasked with translating incoming messages into the user’s preferred language

- Aya Agents: Various agents associated with Aya, each with its own specific role/job

User Agents

The UserAgent class defines the agent that is associated with each user in the chat room. Some of the functions performed by the UserAgent class:

1. Translate incoming messages into the user’s preferred language

2. Activate/invoke the graph when a user sends a message

3. Maintain a chat history that provides context to the translation task, allowing for ‘context-aware’ translation

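```python
from langchain_core.messages import AIMessage, BaseMessage, HumanMessage
from langchain_core.prompts import ChatPromptTemplate

# ChatMessage (a project-specific wrapper holding a message and its sender)
# and USER_SYSTEM_PROMPT2 are defined elsewhere in the project.


class UserAgent:
    def __init__(self, llm, userid, user_language):
        self.llm = llm
        self.userid = userid
        self.user_language = user_language
        self.chat_history = []
        self.graph = None  # attached later via set_graph()

        # Translation chain: prompt template piped into the LLM
        prompt = ChatPromptTemplate.from_template(USER_SYSTEM_PROMPT2)
        self.chain = prompt | llm

    def set_graph(self, graph):
        self.graph = graph

    def send_text(self, text: str, debug=False):
        # Wrap the user's text with its sender and push it through the graph
        message = ChatMessage(message=HumanMessage(content=text), sender=self.userid)
        inputs = {"messages": [message]}
        output = self.graph.invoke(inputs, debug=debug)
        return output

    def display_chat_history(self, content_only=False):
        for i in self.chat_history:
            if content_only:
                print(f"{i.sender} : {i.content}")
            else:
                print(i)

    def invoke(self, message: BaseMessage) -> AIMessage:
        # Translate an incoming message into this user's preferred language
        output = self.chain.invoke(
            {"message": message.content, "user_language": self.user_language}
        )
        return output
```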

For the most part, the implementation of UserAgent is fairly standard LangChain/LangGraph code:

- Define a LangChain chain (a prompt template + LLM) that is responsible for doing the actual translation.

- Define a send_text function that is used to invoke the graph whenever a user wants to send a new message.

Since translation is this agent’s primary objective, its performance depends largely on the translation quality of the LLM. LLM translation quality can vary significantly, especially depending on the languages involved. Certain low-resource languages are poorly represented in the training data of some models, and this does degrade translation quality for those languages.
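The `invoke` method above passes only the current message and the target language to the chain. One way the chat history could feed ‘context-aware’ translation is to render the most recent messages into an extra prompt variable. This is a minimal sketch under assumptions: the `history` prompt variable, the `Msg` record, and `build_translation_inputs` are hypothetical and not part of the original code.

```python
from dataclasses import dataclass


@dataclass
class Msg:
    # Hypothetical stand-in for an entry in UserAgent.chat_history
    sender: str
    content: str


def build_translation_inputs(history, message, user_language, window=3):
    """Assemble prompt variables for a context-aware translation call.

    Only the last `window` history entries are rendered, to keep the prompt short.
    The 'history' key assumes the prompt template declares a matching
    {history} placeholder (hypothetical).
    """
    # Guard window == 0: history[-0:] would return the whole list
    recent = history[-window:] if window > 0 else []
    rendered = "\n".join(f"{m.sender}: {m.content}" for m in recent)
    return {
        "message": message,
        "user_language": user_language,
        "history": rendered,
    }


history = [
    Msg("alice", "Shall we meet on Friday?"),
    Msg("bob", "Friday works."),
    Msg("alice", "Great, 10am then."),
    Msg("bob", "See you."),
]
inputs = build_translation_inputs(history, "Can we move it to 11?", "French", window=2)
print(inputs["history"])
```

The resulting dict could be passed to the chain in place of the two-key dict, so an ambiguous message like "Can we move it to 11?" is translated with the meeting context in view. Keeping the window small bounds the prompt size.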

Aya Agents

For Aya, we actually have a system of separate agents that each contribute towards the overall assistant. Specifically, we have:

- AyaSupervisor: Control agent that supervises the operation of the other Aya agents.

- AyaQuery: Agent for running RAG-based question answering

- AyaSummarizer: Agent for generating chat summaries and identifying tasks

- AyaTranslator: Agent for translating messages to English

```python
from langchain_core.messages import AIMessage
from langchain_core.prompts import ChatPromptTemplate

# The AYA_*_PROMPT templates and the format_docs helper are defined elsewhere
# in the project; UserAgent is the class shown above.


class AyaTranslator:
    def __init__(self, llm) -> None:
        self.llm = llm
        prompt = ChatPromptTemplate.from_template(AYA_TRANSLATE_PROMPT)
        self.chain = prompt | llm

    def invoke(self, message: str) -> AIMessage:
        # Translate an incoming message to English
        output = self.chain.invoke({"message": message})
        return output


class AyaQuery:
    def __init__(self, llm, store, retriever) -> None:
        self.llm = llm
        self.retriever = retriever
        self.store = store
        qa_prompt = ChatPromptTemplate.from_template(AYA_AGENT_PROMPT)
        self.chain = qa_prompt | llm

    def invoke(self, question: str) -> AIMessage:
        # RAG: retrieve relevant documents, then answer with them as context
        context = format_docs(self.retriever.invoke(question))
        rag_output = self.chain.invoke({"question": question, "context": context})
        return rag_output


class AyaSupervisor:
    def __init__(self, llm):
        prompt = ChatPromptTemplate.from_template(AYA_SUPERVISOR_PROMPT)
        self.chain = prompt | llm

    def invoke(self, message: str) -> str:
        output = self.chain.invoke(message)
        return output.content


class AyaSummarizer:
    def __init__(self, llm):
        # Step 1 chain: decide how many messages to summarize
        message_length_prompt = ChatPromptTemplate.from_template(AYA_SUMMARIZE_LENGTH_PROMPT)
        self.length_chain = message_length_prompt | llm
        # Step 2 chain: summarize the messages and list action items
        prompt = ChatPromptTemplate.from_template(AYA_SUMMARIZER_PROMPT)
        self.chain = prompt | llm

    def invoke(self, message: str, agent: UserAgent) -> str:
        length = self.length_chain.invoke(message)
        try:
            length = int(length.content.strip())
        except (AttributeError, ValueError):
            length = 0  # unparseable reply: summarize the whole history

        chat_history = agent.chat_history
        if length == 0:
            messages_to_summarize = [m.content for m in chat_history]
        else:
            messages_to_summarize = [m.content for m in chat_history[:length]]
        print(length)  # debug output
        print(messages_to_summarize)

        messages_to_summarize = "\n ".join(messages_to_summarize)
        output = self.chain.invoke(messages_to_summarize)
        output_content = output.content
        print(output_content)
        return output_content
```

Most of these agents share a similar structure: a LangChain chain made up of a custom prompt and an LLM. The exceptions are the AyaQuery agent, which adds a vector database retriever to implement RAG, and AyaSummarizer, which makes multiple LLM calls internally.
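AyaQuery calls a `format_docs` helper that is not shown. A common pattern, assumed here rather than confirmed by the original, is to join the `page_content` of each retrieved document into one context string. The sketch below swaps in a toy keyword retriever and a minimal `Doc` class (both hypothetical) for a real vector store, purely to show the retrieve, format, and prompt-filling flow.

```python
from dataclasses import dataclass


@dataclass
class Doc:
    # Minimal stand-in for a LangChain Document (only page_content is used)
    page_content: str


def format_docs(docs):
    # Assumed behaviour: separate retrieved chunks with blank lines
    return "\n\n".join(doc.page_content for doc in docs)


class KeywordRetriever:
    """Toy retriever: returns docs sharing at least one word with the question.

    A real AyaQuery would use a vector-store retriever instead.
    """

    def __init__(self, docs, k=2):
        self.docs = docs
        self.k = k

    def invoke(self, question):
        words = set(question.lower().split())
        hits = [d for d in self.docs if words & set(d.page_content.lower().split())]
        return hits[: self.k]


def build_rag_inputs(retriever, question):
    # Mirrors AyaQuery.invoke: retrieve, format, then fill the prompt variables
    context = format_docs(retriever.invoke(question))
    return {"question": question, "context": context}


docs = [Doc("aya supports many languages"), Doc("the office opens at 9"), Doc("lunch is at noon")]
inputs = build_rag_inputs(KeywordRetriever(docs), "when does the office open")
print(inputs["context"])
```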

Design considerations

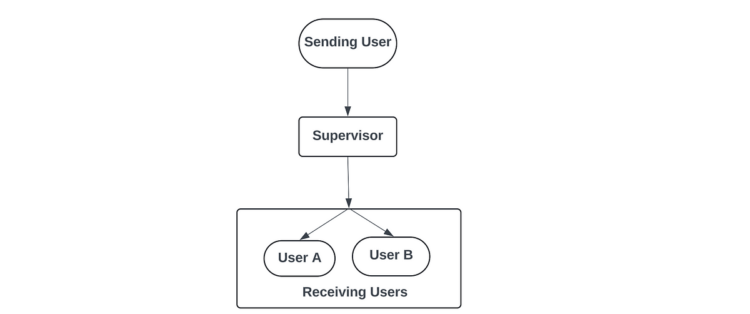

Role of the AyaSupervisor agent: In the design of the graph, we had a fixed edge going from the Supervisor node to the user nodes. This meant that all messages reaching the Supervisor node were pushed directly to the user nodes. So, in cases where Aya was being addressed, we had to ensure that only a single final output from Aya was pushed to the users; we did not want intermediate messages, if any, to reach them. For this reason, the AyaSupervisor agent acted as the single point of contact for the Aya agents. It was primarily responsible for interpreting the intent of the incoming message, directing the message to the appropriate task-specific agent, and then outputting the final message to be shared with the users.
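The supervisor’s routing step can be sketched as a dispatch table. This assumes the supervisor prompt makes the LLM reply with a single agent label such as `query` or `summarize`; the labels, the fallback policy, and the stub agents are hypothetical and do not reflect the original prompt’s output format. Only the string returned by the selected agent is handed back, which is what keeps intermediate messages away from the user nodes.

```python
def route(label, message, agents, default="query"):
    """Send `message` to the agent named by the supervisor's `label`.

    `agents` maps labels to callables that return the final reply text.
    Unknown labels fall back to `default` (an assumed policy).
    """
    key = label.strip().lower()
    handler = agents.get(key, agents[default])
    return handler(message)


# Stub agents standing in for AyaQuery and AyaSummarizer
agents = {
    "query": lambda m: f"[answer] {m}",
    "summarize": lambda m: f"[summary] {m}",
}

print(route(" Summarize\n", "last 5 messages", agents))
print(route("unknown", "what is RAG?", agents))
```

Normalizing the label with `strip().lower()` tolerates the stray whitespace and capitalization that LLM replies often contain.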

Design of AyaSummarizer: The AyaSummarizer agent is slightly more complex than the other Aya agents because it carries out a two-step process. In the first step, the agent determines the number of messages that need to be summarized, which is an LLM call with its own prompt. In the second step, once we know how many messages to summarize, we collate those messages and pass them to the LLM to generate the actual summary. In this same step, the LLM also identifies any action items present in the messages and lists them separately.

So broadly there were three tasks: determining how many messages to summarize, summarizing the messages, and identifying action items. However, since the first task proved difficult for the LLM without explicit examples, I chose to make it a separate LLM call and combine the last two tasks into their own LLM call.
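The two-step flow can be sketched with both LLM calls replaced by plain functions: `count_fn` stands in for the length-estimation call and `summarize_fn` for the summary-plus-action-items call (both hypothetical stubs). As in the AyaSummarizer code, a reply that is not an integer falls back to 0, meaning "summarize the whole history". One deliberate difference: this sketch takes the *most recent* `n` messages, on the assumption that a request like "summarize the last 2 messages" refers to the end of the history.

```python
def parse_length(raw):
    # Step 1 output: the LLM is expected to reply with an integer; anything else -> 0
    try:
        return max(int(raw.strip()), 0)
    except ValueError:
        return 0


def summarize_chat(request, history, count_fn, summarize_fn):
    """Two-step summarization: decide how many messages, then summarize them.

    count_fn(request) -> str    stand-in for the length-estimation LLM call
    summarize_fn(text) -> str   stand-in for the summary + action-items call
    """
    n = parse_length(count_fn(request))
    # n == 0 means "summarize everything"; otherwise take the n most recent messages
    selected = history if n == 0 else history[-n:]
    return summarize_fn("\n".join(selected))


history = ["hi all", "deadline is Monday", "Bob will draft the report"]
result = summarize_chat(
    "summarize the last 2 messages",
    history,
    count_fn=lambda req: " 2 ",
    summarize_fn=lambda text: "SUMMARY:\n" + text,
)
print(result)
```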

It may be possible to eliminate the additional LLM call and combine all three tasks in a single call. Potential options include:

- Providing very detailed examples that cover all three tasks in a single step

- Generating a large set of examples to fine-tune an LLM so that it performs well on this task
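If all three tasks were folded into one call, the reply would need to carry the message count, the summary, and the action items together. A minimal sketch, assuming a hypothetical prompt that asks the model for JSON with `num_messages`, `summary`, and `action_items` fields; the field names and the fallback for malformed replies are illustrative choices, not part of the original design.

```python
import json

# Hypothetical single-call prompt; a real one would add the detailed examples
SINGLE_CALL_PROMPT = """You will receive a request and the chat history.
Reply ONLY with JSON of the form:
{{"num_messages": <int, 0 = all>, "summary": "<text>", "action_items": ["<item>", ...]}}

Request: {request}
History:
{history}"""


def parse_single_call(raw):
    """Parse the model's JSON reply, tolerating missing or malformed fields."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        # Not JSON at all: keep the text as the summary
        return {"num_messages": 0, "summary": raw.strip(), "action_items": []}
    if not isinstance(data, dict):
        data = {}
    try:
        n = int(data.get("num_messages", 0))
    except (TypeError, ValueError):
        n = 0
    items = data.get("action_items") or []
    return {
        "num_messages": n,
        "summary": str(data.get("summary", "")),
        "action_items": [str(i) for i in items] if isinstance(items, list) else [],
    }


prompt = SINGLE_CALL_PROMPT.format(request="summarize the last 2 messages", history="a\nb")
reply = '{"num_messages": 2, "summary": "Report due Monday.", "action_items": ["Bob drafts report"]}'
print(parse_single_call(reply))
```

The trade-off is that one prompt now has to teach the model the hardest subtask (choosing the message count) alongside the others, which is exactly where the detailed examples or fine-tuning would come in.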

Role of AyaTranslator: One of our goals for Aya was to make it a multilingual AI assistant that could communicate in the user’s preferred language. However, handling different languages internally within the Aya agents would be difficult. Specifically, if the Aya agents’ prompts are in English and the user message is in a different language, this could create issues. To avoid such situations, we added a filtering step that translated any incoming user message addressed to Aya into English. As a result, all of the internal work within the Aya group of agents was done in English, including the output. We did not need to translate Aya’s output back to the original language, because when the message reaches the users, each User agent takes care of translating it into its assigned language.
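The language flow described above (English inside Aya, per-user translation on the way out) can be sketched with stub translators. `translate` is a toy phrase lookup standing in for both AyaTranslator and each UserAgent’s chain, and `aya_respond` stands in for the supervisor and task agents; the phrase table, user ids, and function names are all hypothetical.

```python
# Toy phrase table standing in for LLM translation (hypothetical data)
PHRASES = {
    ("fr", "en"): {"bonjour aya": "hello aya"},
    ("en", "fr"): {"hello! how can i help?": "bonjour ! comment puis-je aider ?"},
    ("en", "es"): {"hello! how can i help?": "¡hola! ¿cómo puedo ayudar?"},
}


def translate(text, src, dst):
    # Stand-in for an LLM translation call; unknown phrases pass through unchanged
    if src == dst:
        return text
    return PHRASES.get((src, dst), {}).get(text.lower(), text)


def aya_respond(english_text):
    # Stand-in for the supervisor + task agents, which work only in English
    return "Hello! How can I help?" if "hello" in english_text else "Sorry?"


def handle_aya_message(text, sender_lang, user_langs):
    """Translate the request to English, let Aya answer in English,
    then let each user's agent translate the reply into its own language."""
    english_in = translate(text, sender_lang, "en")  # AyaTranslator step
    english_out = aya_respond(english_in)            # all internal work in English
    return {user: translate(english_out, "en", lang) for user, lang in user_langs.items()}


replies = handle_aya_message("Bonjour Aya", "fr", {"marie": "fr", "juan": "es", "sam": "en"})
print(replies)
```

Because the fan-out to each user’s language happens in the user agents, Aya itself never needs a translate-back step.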