Enterprises—particularly within the insurance coverage business—face rising challenges in processing huge quantities of unstructured knowledge from various codecs, together with PDFs, spreadsheets, photos, movies, and audio information. These would possibly embrace claims doc packages, crash occasion movies, chat transcripts, or coverage paperwork. All comprise crucial data throughout the claims processing lifecycle.

Conventional knowledge preprocessing strategies, although practical, may need limitations in accuracy and consistency. This would possibly have an effect on metadata extraction completeness, workflow velocity, and the extent of information utilization for AI-driven insights (similar to fraud detection or threat evaluation). To handle these challenges, this submit introduces a multi‐agent collaboration pipeline: a set of specialised brokers for classification, conversion, metadata extraction, and area‐particular duties. By orchestrating these brokers, you may automate the ingestion and transformation of a variety of multimodal unstructured knowledge—boosting accuracy and enabling finish‐to‐finish insights.

For groups processing a small quantity of uniform paperwork, a single-agent setup could be extra simple to implement and adequate for primary automation. Nevertheless, in case your knowledge spans various domains and codecs—similar to claims doc packages, collision footage, chat transcripts, or audio information—a multi-agent structure affords distinct benefits. Specialised brokers enable for focused immediate engineering, higher debugging, and extra correct extraction, every tuned to a particular knowledge kind.

As quantity and selection develop, this modular design scales extra gracefully, permitting you to plug in new domain-aware brokers or refine particular person prompts and enterprise logic—with out disrupting the broader pipeline. Suggestions from area consultants within the human-in-the-loop part may also be mapped again to particular brokers, supporting steady enchancment.

To help this adaptive structure, you should utilize Amazon Bedrock, a totally managed service that makes it simple to construct and scale generative AI purposes utilizing basis fashions (FMs) from main AI firms like AI21 Labs, Anthropic, Cohere, DeepSeek, Luma, Meta, Mistral AI, poolside (coming quickly), Stability AI, and Amazon by a single API. A robust characteristic of Amazon Bedrock—Amazon Bedrock Brokers—permits the creation of clever, domain-aware brokers that may retrieve context from Amazon Bedrock Data Bases, name APIs, and orchestrate multi-step duties. These brokers present the pliability and flexibility wanted to course of unstructured knowledge at scale, and might evolve alongside your group’s knowledge and enterprise workflows.

Resolution overview

Our pipeline capabilities as an insurance coverage unstructured knowledge preprocessing hub with the next options:

- Classification of incoming unstructured knowledge based mostly on area guidelines

- Metadata extraction for declare numbers, dates, and extra

- Conversion of paperwork into uniform codecs (similar to PDF or transcripts)

- Conversion of audio/video knowledge into structured markup format

- Human validation for unsure or lacking fields

Enriched outputs and related metadata will in the end land in a metadata‐wealthy unstructured knowledge lake, forming the muse for fraud detection, superior analytics, and 360‐diploma buyer views.

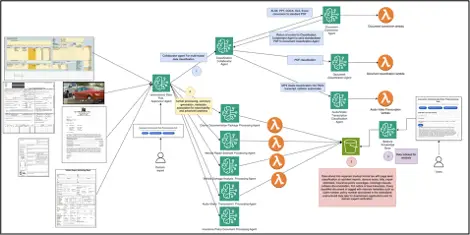

The next diagram illustrates the answer structure.

The tip-to-end workflow incorporates a supervisor agent on the middle, classification and conversion brokers branching off, a human‐in‐the‐loop step, and Amazon Easy Storage Service (Amazon S3) as the ultimate unstructured knowledge lake vacation spot.

Multi‐agent collaboration pipeline

This pipeline consists of a number of specialised brokers, every dealing with a definite perform similar to classification, conversion, metadata extraction, and domain-specific evaluation. In contrast to a single monolithic agent that makes an attempt to handle all duties, this modular design promotes scalability, maintainability, and reuse. Particular person brokers might be independently up to date, swapped, or prolonged to accommodate new doc varieties or evolving enterprise guidelines with out impacting the general system. This separation of issues improves fault tolerance and permits parallel processing, leading to sooner and extra dependable knowledge transformation workflows.

Multi-agent collaboration affords the next metrics and effectivity features:

- Discount in human validation time – Centered prompts tailor-made to particular brokers will result in cleaner outputs and easier verification, offering effectivity in validation time.

- Sooner iteration cycles and regression isolation – Adjustments to prompts or logic are scoped to particular person brokers, minimizing the world of impact of updates and considerably lowering regression testing effort throughout tuning or enhancement phases.

- Improved metadata extraction accuracy, particularly on edge circumstances – Specialised brokers scale back immediate overload and permit deeper area alignment, which improves field-level accuracy—particularly when processing blended doc varieties like crash movies vs. claims doc packages.

- Scalable effectivity features with automated problem resolver brokers – As automated problem resolver brokers are added over time, processing time per doc is predicted to enhance significantly, lowering guide touchpoints. These brokers might be designed to make use of human-in-the-loop suggestions mappings and clever knowledge lake lookups to automate recurring fixes.

Unstructured Knowledge Hub Supervisor Agent

The Supervisor Agent orchestrates the workflow, delegates duties, and invokes specialised downstream brokers. It has the next key duties:

- Obtain incoming multimodal knowledge and processing directions from the person portal (multimodal claims doc packages, car harm photos, audio transcripts, or restore estimates).

- Ahead every unstructured knowledge kind to the Classification Collaborator Agent to find out whether or not a conversion step is required or direct classification is feasible.

- Coordinate specialised area processing by invoking the suitable agent for every knowledge kind—for instance, a claims paperwork bundle is dealt with by the Claims Documentation Package deal Processing Agent, and restore estimates go to the Car Restore Estimate Processing Agent.

- Be sure that each incoming knowledge finally lands, together with its metadata, within the S3 knowledge lake.

Classification Collaborator Agent

The Classification Collaborator Agent determines every file’s kind utilizing area‐particular guidelines and makes positive it’s both transformed (if wanted) or instantly categorized. This consists of the next steps:

- Determine the file extension. If it’s DOCX, PPT, or XLS, it routes the file to the Doc Conversion Agent first.

- Output a unified classification consequence for every standardized doc—specifying the class, confidence, extracted metadata, and subsequent steps.

Doc Conversion Agent

The Doc Conversion Agent converts non‐PDF information into PDF and extracts preliminary metadata (creation date, file dimension, and so forth). This consists of the next steps:

- Remodel DOCX, PPT, XLS, and XLSX into PDF.

- Seize embedded metadata.

- Return the brand new PDF to the Classification Collaborator Agent for remaining classification.

Specialised classification brokers

Every agent handles particular modalities of information:

- Doc Classification Agent:

- Processes textual content‐heavy codecs like claims doc packages, commonplace working process paperwork (SOPs), and coverage paperwork

- Extracts declare numbers, coverage numbers, coverage holder particulars, protection dates, and expense quantities as metadata

- Identifies lacking gadgets (for instance, lacking coverage holder data, lacking dates)

- Transcription Classification Agent:

- Focuses on audio or video transcripts, similar to First Discover of Misplaced (FNOL) calls or adjuster comply with‐ups

- Classifies transcripts into enterprise classes (similar to first‐get together declare or third‐get together dialog) and extracts related metadata

- Picture Classification Agent:

- Analyzes car harm photographs and collision movies for particulars like harm severity, car identification, or location

- Generates structured metadata that may be fed into downstream harm evaluation methods

Moreover, we’ve got outlined specialised downstream brokers:

- Claims Doc Package deal Processing Agent

- Car Restore Estimate Processing Agent

- Car Injury Evaluation Processing Agent

- Audio Video Transcription Processing Agent

- Insurance coverage Coverage Doc Processing Agent

After the excessive‐stage classification identifies a file as, for instance, a claims doc bundle or restore estimate, the Supervisor Agent invokes the suitable specialised agent to carry out deeper area‐particular transformation and extraction.

Metadata extraction and human-in-the-loop

Metadata is crucial for automated workflows. With out correct metadata fields—like declare numbers, coverage numbers, protection dates, loss dates, or claimant names—downstream analytics lack context. This a part of the answer handles knowledge extraction, error dealing with, and restoration by the next options:

- Automated extraction – Massive language fashions (LLMs) and area‐particular guidelines parse crucial knowledge from unstructured content material, determine key metadata fields, and flag anomalies early.

- Knowledge staging for evaluate – The pipeline extracts metadata fields and levels every file for human evaluate. This course of presents the extracted fields—highlighting lacking or incorrect values for human evaluate.

- Human-in-the-loop – Area consultants step in to validate and proper metadata through the human-in-the-loop part, offering accuracy and context for key fields similar to declare numbers, policyholder particulars, and occasion timelines. These interventions not solely function a point-in-time error restoration mechanism but additionally lay the muse for steady enchancment of the pipeline’s domain-specific guidelines, conversion logic, and classification prompts.

Ultimately, automated problem resolver brokers might be launched in iterations to deal with an rising share of information fixes, additional lowering the necessity for guide evaluate. A number of methods might be launched to allow this development to enhance resilience and flexibility over time:

- Persisting suggestions – Corrections made by area consultants might be captured and mapped to the varieties of points they resolve. These structured mappings assist refine immediate templates, replace enterprise logic, and generate focused directions to information the design of automated problem resolver brokers to emulate related fixes in future workflows.

- Contextual metadata lookups – Because the unstructured knowledge lake turns into more and more metadata-rich—with deeper connections throughout coverage numbers, declare IDs, car data, and supporting paperwork— problem resolver brokers with acceptable prompts might be launched to carry out clever dynamic lookups. For instance, if a media file lacks a coverage quantity however features a declare quantity and car data, a problem resolver agent can retrieve lacking metadata by querying associated listed paperwork like claims doc packages or restore estimates.

By combining these methods, the pipeline turns into more and more adaptive—regularly bettering knowledge high quality and enabling scalable, metadata-driven insights throughout the enterprise.

Metadata‐wealthy unstructured knowledge lake

After every unstructured knowledge kind is transformed and categorized, each the standardized content material

and metadata JSON information are saved in an unstructured knowledge lake (Amazon S3). This repository unifies completely different knowledge varieties (photos, transcripts, paperwork) by shared metadata, enabling the next:

- Fraud detection by cross‐referencing repeated claimants or contradictory particulars

- Buyer 360-degree profiles by linking claims, calls, and repair data

- Superior analytics and actual‐time queries

Multi‐modal, multi‐agentic sample

In our AWS CloudFormation template, every multimodal knowledge kind follows a specialised movement:

- Knowledge conversion and classification:

- The Supervisor Agent receives uploads and passes them to the Classification Collaborator Agent.

- If wanted, the Doc Conversion Agent would possibly step in to standardize the file.

- The Classification Collaborator Agent’s classification step organizes the uploads into classes—FNOL calls, claims doc packages, collision movies, and so forth.

- Doc processing:

- The Doc Classification Agent and different specialised brokers apply area guidelines to extract metadata like declare numbers, protection dates, and extra.

- The pipeline presents the extracted in addition to lacking data to the area skilled for correction or updating.

- Audio/video evaluation:

- The Transcription Classification Agent handles FNOL calls and third‐get together dialog transcripts.

- The Audio Video Transcription Processing Agent or the Car Injury Evaluation Processing Agent additional parses collision movies or harm photographs, linking spoken occasions to visible proof.

- Markup textual content conversion:

- Specialised processing brokers create markup textual content from the totally categorized and corrected metadata. This manner, the information is reworked right into a metadata-rich format prepared for consumption by data bases, Retrieval Augmented Technology (RAG) pipelines, or graph queries.

Human-in-the-loop and future enhancements

The human‐in‐the‐loop part is vital for verifying and including lacking metadata and fixing incorrect categorization of information. Nevertheless, the pipeline is designed to evolve as follows:

- Refined LLM prompts – Each correction from area consultants helps refine LLM prompts, lowering future guide steps and bettering metadata consistency

- Problem resolver brokers – As metadata consistency improves over time, specialised fixers can deal with metadata and classification errors with minimal person enter

- Cross referencing – Problem resolver brokers can cross‐reference present knowledge within the metadata-rich S3 knowledge lake to mechanically fill in lacking metadata

The pipeline evolves towards full automation, minimizing human oversight apart from probably the most complicated circumstances.

Conditions

Earlier than deploying this resolution, just remember to have the next in place:

- An AWS account. When you don’t have an AWS account, enroll for one.

- Entry as an AWS Id and Entry Administration (IAM) administrator or an IAM person that has permissions for:

- Entry to Amazon Bedrock. Make sure that Amazon Bedrock is obtainable in your AWS Area, and you’ve got explicitly enabled the FMs you intend to make use of (for instance, Anthropic’s Claude or Cohere). Seek advice from Add or take away entry to Amazon Bedrock basis fashions for steering on enabling fashions in your AWS account. This resolution was examined in us-west-2. Just be sure you have enabled the required FMs:

- claude-3-5-haiku-20241022-v1:0

- claude-3-5-sonnet-20241022-v2:0

- claude-3-haiku-20240307-v1:0

- titan-embed-text-v2:0

- Set the API Gateway integration timeout from the default 29 seconds to 180 seconds, as launched in this announcement, in your AWS account by submitting a service quota improve for API Gateway integration timeout.

Deploy the answer with AWS CloudFormation

Full the next steps to arrange the answer sources:

- Register to the AWS Administration Console as an IAM administrator or acceptable IAM person.

- Select Launch Stack to deploy the CloudFormation template.

- Present the mandatory parameters and create the stack.

For this setup, we use us-west-2 as our Area, Anthropic’s Claude 3.5 Haiku mannequin for orchestrating the movement between the completely different brokers, and Anthropic’s Claude 3.5 Sonnet V2 mannequin for conversion, categorization, and processing of multimodal knowledge.

If you wish to use different fashions on Amazon Bedrock, you are able to do so by making acceptable adjustments within the CloudFormation template. Test for acceptable mannequin help within the Area and the options which can be supported by the fashions.

It’s going to take about half-hour to deploy the answer. After the stack is deployed, you may view the assorted outputs of the CloudFormation stack on the Outputs tab, as proven within the following screenshot.

The supplied CloudFormation template creates a number of S3 buckets (similar to DocumentUploadBucket, SampleDataBucket, and KnowledgeBaseDataBucket) for uncooked uploads, pattern information, Amazon Bedrock Data Bases references, and extra. Every specialised Amazon Bedrock agent or Lambda perform makes use of these buckets to retailer intermediate or remaining artifacts.

The next screenshot is an illustration of the Amazon Bedrock brokers which can be deployed within the AWS account.

The following part outlines the right way to take a look at the unstructured knowledge processing workflow.

Take a look at the unstructured knowledge processing workflow

On this part, we current completely different use circumstances to reveal the answer. Earlier than you start, full the next steps:

- Find the

APIGatewayInvokeURLworth from the CloudFormation stack’s outputs. This URL launches the Insurance coverage Unstructured Knowledge Preprocessing Hub in your browser.

- Obtain the pattern knowledge information from the designated S3 bucket (

SampleDataBucketName) to your native machine. The next screenshots present the bucket particulars from CloudFormation stack’s outputs and the contents of the pattern knowledge bucket.

With these particulars, now you can take a look at the pipeline by importing the next pattern multimodal information by the Insurance coverage Unstructured Knowledge Preprocessing Hub Portal:

- Claims doc bundle (

ClaimDemandPackage.pdf) - Car restore estimate (

collision_center_estimate.xlsx) - Collision video with supported audio (

carcollision.mp4) - First discover of loss audio transcript (

fnol.mp4) - Insurance coverage coverage doc (

ABC_Insurance_Policy.docx)

Every multimodal knowledge kind might be processed by a collection of brokers:

- Supervisor Agent – Initiates the processing

- Classification Collaborator Agent – Categorizes the multimodal knowledge

- Specialised processing brokers – Deal with domain-specific processing

Lastly, the processed information, together with their enriched metadata, are saved within the S3 knowledge lake. Now, let’s proceed to the precise use circumstances.

Use Case 1: Claims doc bundle

This use case demonstrates the entire workflow for processing a multimodal claims doc bundle. By importing a PDF doc to the pipeline, the system mechanically classifies the doc kind, extracts important metadata, and categorizes every web page into particular parts.

- Select Add File within the UI and select the pdf file.

The file add would possibly take a while relying on the doc dimension.

- When the add is full, you may affirm that the extracted metadata values are follows:

- Declare Quantity: 0112233445

- Coverage Quantity: SF9988776655

- Date of Loss: 2025-01-01

- Claimant Title: Jane Doe

The Classification Collaborator Agent identifies the doc as a Claims Doc Package deal. Metadata (similar to declare ID and incident date) is mechanically extracted and displayed for evaluate.

- For this use case, no adjustments are made—merely select Proceed Preprocessing to proceed.

The processing stage would possibly take as much as quarter-hour to finish. Quite than manually checking the S3 bucket (recognized within the CloudFormation stack outputs as KnowledgeBaseDataBucket) to confirm that 72 information—one for every web page and its corresponding metadata JSON—have been generated, you may monitor the progress by periodically selecting Test Queue Standing. This allows you to view the present state of the processing queue in actual time.

The pipeline additional categorizes every web page into particular varieties (for instance, lawyer letter, police report, medical payments, physician’s report, well being types, x-rays). It additionally generates corresponding markup textual content information and metadata JSON information.

Lastly, the processed textual content and metadata JSON information are saved within the unstructured S3 knowledge lake.

The next diagram illustrates the entire workflow.

Use Case 2: Collision middle workbook for car restore estimate

On this use case, we add a collision middle workbook to set off the workflow that converts the file, extracts restore estimate particulars, and levels the information for evaluate earlier than remaining storage.

- Select Add File and select the xlsx workbook.

- Await the add to finish and ensure that the extracted metadata is correct:

- Declare Quantity: CLM20250215

- Coverage Quantity: SF9988776655

- Claimant Title: John Smith

- Car: Truck

The Doc Conversion Agent converts the file to PDF if wanted, or the Classification Collaborator Agent identifies it as a restore estimate. The Car Restore Estimate Processing Agent extracts value traces, half numbers, and labor hours.

- Evaluate and replace the displayed metadata as obligatory, then select Proceed Preprocessing to set off remaining storage.

The finalized file and metadata are saved in Amazon S3.

The next diagram illustrates this workflow.

Use Case 3: Collision video with audio transcript

For this use case, we add a video displaying the accident scene to set off a workflow that analyzes each visible and audio knowledge, extracts key frames for collision severity, and levels metadata for evaluate earlier than remaining storage.

- Select Add File and select the mp4 video.

- Wait till the add is full, then evaluate the collision state of affairs and alter the displayed metadata to right omissions or inaccuracies as follows:

- Declare Quantity: 0112233445

- Coverage Quantity: SF9988776655

- Date of Loss: 01-01-2025

- Claimant Title: Jane Doe

- Coverage Holder Title: John Smith

The Classification Collaborator Agent directs the video to both the Audio/Video Transcript or Car Injury Evaluation agent. Key frames are analyzed to find out collision severity.

- Evaluate and replace the displayed metadata (for instance, coverage quantity, location), then select Proceed Preprocessing to provoke remaining storage.

Ultimate transcripts and metadata are saved in Amazon S3, prepared for superior analytics similar to verifying story consistency.

The next diagram illustrates this workflow.

Use Case 4: Audio transcript between claimant and customer support affiliate

Subsequent, we add a video that captures the claimant reporting an accident to set off the workflow that extracts an audio transcript and identifies key metadata for evaluate earlier than remaining storage.

- Select Add File and select mp4.

- Wait till the add is full, then evaluate the decision state of affairs and alter the displayed metadata to right any omissions or inaccuracies as follows:

- Declare Quantity: Not Assigned But

- Coverage Quantity: SF9988776655

- Claimant Title: Jane Doe

- Coverage Holder Title: John Smith

- Date Of Loss: January 1, 2025 8:30 AM

The Classification Collaborator Agent routes the file to the Audio/Video Transcript Agent for processing. Key metadata attributes are mechanically recognized from the decision.

- Evaluate and proper any incomplete metadata, then select Proceed Preprocessing to proceed.

Ultimate transcripts and metadata are saved in Amazon S3, prepared for superior analytics (for instance, verifying story consistency).

The next diagram illustrates this workflow.

Use Case 5: Auto insurance coverage coverage doc

For our remaining use case, we add an insurance coverage coverage doc to set off the workflow that converts and classifies the doc, extracts key metadata for evaluate, and shops the finalized output in Amazon S3.

- Select Add File and select docx.

- Wait till the add is full, and ensure that the extracted metadata values are as follows:

- Coverage Quantity: SF9988776655

- Coverage kind: Auto Insurance coverage

- Efficient Date: 12/12/2024

- Coverage Holder Title: John Smith

The Doc Conversion Agent transforms the doc right into a standardized PDF format if required. The Classification Collaborator Agent then routes it to the Doc Classification Agent for categorization as an Auto Insurance coverage Coverage Doc. Key metadata attributes are mechanically recognized and offered for person evaluate.

- Evaluate and proper incomplete metadata, then select Proceed Preprocessing to set off remaining storage.

The finalized coverage doc in markup format, together with its metadata, is saved in Amazon S3—prepared for superior analytics similar to verifying story consistency.

The next diagram illustrates this workflow.

Comparable workflows might be utilized to different varieties of insurance coverage multimodal knowledge and paperwork by importing them on the Knowledge Preprocessing Hub Portal. At any time when wanted, this course of might be enhanced by introducing specialised downstream Amazon Bedrock brokers that collaborate with the present Supervisor Agent, Classification Agent, and Conversion Brokers.

Amazon Bedrock Data Bases integration

To make use of the newly processed knowledge within the knowledge lake, full the next steps to ingest the information in Amazon Bedrock Data Bases and work together with the information lake utilizing a structured workflow. This integration permits for dynamic querying throughout completely different doc varieties, enabling deeper insights from multimodal knowledge.

- Select Chat with Your Paperwork to open the chat interface.

- Select Sync Data Base to provoke the job that ingests and indexes the newly processed information and the out there metadata into the Amazon Bedrock data base.

- After the sync is full (which could take a few minutes), enter your queries within the textual content field. For instance, set Coverage Quantity to SF9988776655 and check out asking:

- “Retrieve particulars of all claims filed towards the coverage quantity by a number of claimants.”

- “What’s the nature of Jane Doe’s declare, and what paperwork have been submitted?”

- “Has the policyholder John Smith submitted any claims for car repairs, and are there any estimates on file?”

- Select Ship and evaluate the system’s response.

This integration permits cross-document evaluation, so you may question throughout multimodal knowledge varieties like transcripts, photos, claims doc packages, restore estimates, and declare data to disclose buyer 360-degree insights out of your domain-aware multi-agent pipeline. By synthesizing knowledge from a number of sources, the system can correlate data, uncover hidden patterns, and determine relationships that may not have been evident in remoted paperwork.

A key enabler of this intelligence is the wealthy metadata layer generated throughout preprocessing. Area consultants actively validate and refine this metadata, offering accuracy and consistency throughout various doc varieties. By reviewing key attributes—similar to declare numbers, policyholder particulars, and occasion timelines—area consultants improve the metadata basis, making it extra dependable for downstream AI-driven evaluation.

With wealthy metadata in place, the system can now infer relationships between paperwork extra successfully, enabling use circumstances similar to:

- Figuring out a number of claims tied to a single coverage

- Detecting inconsistencies in submitted paperwork

- Monitoring the entire lifecycle of a declare from FNOL to decision

By repeatedly bettering metadata by human validation, the system turns into extra adaptive, paving the way in which for future automation, the place problem resolver brokers can proactively determine and self-correct lacking and inconsistent metadata with minimal guide intervention through the knowledge ingestion course of.

Clear up

To keep away from surprising fees, full the next steps to scrub up your sources:

- Delete the contents from the S3 buckets talked about within the outputs of the CloudFormation stack.

- Delete the deployed stack utilizing the AWS CloudFormation console.

Conclusion

By reworking unstructured insurance coverage knowledge into metadata‐wealthy outputs, you may accomplish the next:

- Speed up fraud detection by cross‐referencing multimodal knowledge

- Improve buyer 360-degree insights by uniting claims, calls, and repair data

- Assist actual‐time selections by AI‐assisted search and analytics

As this multi‐agent collaboration pipeline matures, specialised problem resolver brokers and refined LLM prompts can additional scale back human involvement—unlocking finish‐to‐finish automation and improved determination‐making. Finally, this area‐conscious strategy future‐proofs your claims processing workflows by harnessing uncooked, unstructured knowledge as actionable enterprise intelligence.

To get began with this resolution, take the next subsequent steps:

- Deploy the CloudFormation stack and experiment with the pattern knowledge.

- Refine area guidelines or agent prompts based mostly in your workforce’s suggestions.

- Use the metadata in your S3 knowledge lake for superior analytics like actual‐time threat evaluation or fraud detection.

- Join an Amazon Bedrock data base to

KnowledgeBaseDataBucketfor superior Q&A and RAG.

With a multi‐agent structure in place, your insurance coverage knowledge ceases to be a scattered legal responsibility, turning into as an alternative a unified supply of excessive‐worth insights.

Seek advice from the next extra sources to discover additional:

Concerning the Creator

Piyali Kamra is a seasoned enterprise architect and a hands-on technologist who has over 20 years of expertise constructing and executing giant scale enterprise IT initiatives throughout geographies. She believes that constructing giant scale enterprise methods will not be an actual science however extra like an artwork, the place you may’t all the time select the perfect expertise that comes to 1’s thoughts however moderately instruments and applied sciences should be rigorously chosen based mostly on the workforce’s tradition , strengths, weaknesses and dangers, in tandem with having a futuristic imaginative and prescient as to the way you wish to form your product a number of years down the highway.

Piyali Kamra is a seasoned enterprise architect and a hands-on technologist who has over 20 years of expertise constructing and executing giant scale enterprise IT initiatives throughout geographies. She believes that constructing giant scale enterprise methods will not be an actual science however extra like an artwork, the place you may’t all the time select the perfect expertise that comes to 1’s thoughts however moderately instruments and applied sciences should be rigorously chosen based mostly on the workforce’s tradition , strengths, weaknesses and dangers, in tandem with having a futuristic imaginative and prescient as to the way you wish to form your product a number of years down the highway.