AI agents continue to gain momentum, as businesses use the power of generative AI to reinvent customer experiences and automate complex workflows. We're seeing Amazon Bedrock Agents used in investment research, insurance claims processing, root cause analysis, advertising campaigns, and much more. Agents use the reasoning capability of foundation models (FMs) to break down user-requested tasks into multiple steps. They use developer-provided instructions to create an orchestration plan and carry out that plan by securely invoking company APIs and accessing knowledge bases using Retrieval Augmented Generation (RAG) to accurately handle the user's request.

Although organizations see the benefit of agents that are defined, configured, and tested as managed resources, we've increasingly seen the need for an additional, more dynamic way to invoke agents. Organizations need solutions that adjust on the fly, whether to test new approaches, respond to changing business rules, or customize solutions for different clients. This is where the new inline agents capability in Amazon Bedrock Agents becomes transformative. It allows you to dynamically adjust your agent's behavior at runtime by changing its instructions, tools, guardrails, knowledge bases, prompts, and even the FMs it uses, all without redeploying your application.

In this post, we explore how to build an application using Amazon Bedrock inline agents, demonstrating how a single AI assistant can adapt its capabilities dynamically based on user roles.

Inline agents in Amazon Bedrock Agents

The runtime flexibility that inline agents provide opens powerful new possibilities, such as:

- Rapid prototyping – Inline agents minimize the time-consuming create/update/prepare cycles traditionally required for agent configuration changes. Developers can instantly test different combinations of models, tools, and knowledge bases, dramatically accelerating the development process.

- A/B testing and experimentation – Data science teams can systematically evaluate different model-tool combinations, measure performance metrics, and analyze response patterns in controlled environments. This empirical approach enables quantitative comparison of configurations before production deployment.

- Subscription-based personalization – Software companies can adapt features based on each customer's subscription level, providing more advanced tools for premium users.

- Persona-based data source integration – Institutions can adjust content complexity and tone based on the user's profile, providing persona-appropriate explanations and resources by changing the knowledge bases associated with the agent on the fly.

- Dynamic tool selection – Developers can build applications with hundreds of APIs and still carry out tasks quickly and accurately by dynamically choosing a small subset of APIs for the agent to consider for a given request. This is particularly helpful for large software as a service (SaaS) platforms needing multi-tenant scaling.
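To make dynamic tool selection concrete, here is a minimal sketch that picks a small subset of tools from a larger catalog for a single request. It scores each tool by simple keyword overlap between the request and the tool's description, a deliberately naive stand-in for whatever retrieval method a production system would use (embedding similarity, for example). The catalog entries and the `select_tools` helper are hypothetical and are not part of any AWS API.

```python
import re

# Hypothetical catalog: tool name -> short description.
TOOL_CATALOG = {
    "apply_vacation": "request vacation time off and check remaining leave balance",
    "submit_expense": "submit an expense report with receipts for reimbursement",
    "policy_lookup": "search company policy documents and guidelines",
    "book_travel": "create a business travel reservation for flights and hotels",
    "compensation_review": "review employee compensation and request a base pay raise",
}


def _words(text: str) -> set[str]:
    """Lowercase a string and split it into a set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def select_tools(request: str, catalog: dict[str, str], k: int = 2) -> list[str]:
    """Return up to k tool names whose descriptions share the most words with the request.

    Tools with zero overlap are never selected.
    """
    request_words = _words(request)
    scored = []
    for name, description in catalog.items():
        overlap = len(request_words & _words(description))
        if overlap > 0:
            scored.append((overlap, name))
    # Highest overlap first; ties broken alphabetically by name for determinism.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [name for _, name in scored[:k]]


print(select_tools("I want to request two days of vacation time", TOOL_CATALOG, k=1))
# ['apply_vacation']
```

Only the selected tools would then be passed to the agent for that request. With the default `k=2`, the same request also picks `compensation_review` because it shares the word "request", which illustrates why real systems use a stronger relevance signal.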

Inline agents expand your options for building and deploying agentic solutions with Amazon Bedrock Agents. For workloads needing managed and versioned agent resources with a predetermined and tested configuration (specific model, instructions, tools, and so on), developers can continue to use InvokeAgent on resources created with CreateAgent. For workloads that need dynamic runtime behavior changes for each agent invocation, you can use the new InvokeInlineAgent API. With either approach, your agents will be secure and scalable, with configurable guardrails, a flexible set of model inference options, native access to knowledge bases, code interpretation, session memory, and more.
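The following is a minimal sketch of what an InvokeInlineAgent call can look like from Python, using the AWS SDK for Python (Boto3) `bedrock-agent-runtime` client and its `invoke_inline_agent` method. The request fields shown (`sessionId`, `foundationModel`, `instruction`, `inputText`, `enableTrace`) and the streamed `completion` response reflect our reading of the Boto3 reference; confirm them against the current API documentation before relying on them. The helper names are our own, and any model ID you pass is your choice.

```python
import uuid
from typing import Optional


def build_inline_request(
    instruction: str,
    input_text: str,
    model_id: str,
    session_id: Optional[str] = None,
) -> dict:
    """Assemble keyword arguments for bedrock-agent-runtime invoke_inline_agent.

    Everything the agent needs is supplied per call; no agent resource is created first.
    """
    return {
        "sessionId": session_id or str(uuid.uuid4()),
        "foundationModel": model_id,
        "instruction": instruction,
        "inputText": input_text,
        "enableTrace": False,
    }


def invoke_inline(request: dict, region: str = "us-east-1") -> str:
    """Call invoke_inline_agent and join the streamed text chunks into one string."""
    import boto3  # imported here so the request builder above works without boto3 installed

    client = boto3.client("bedrock-agent-runtime", region_name=region)
    response = client.invoke_inline_agent(**request)
    parts = []
    # The "completion" field is an event stream; text arrives in "chunk" events as bytes.
    for event in response["completion"]:
        if "chunk" in event:
            parts.append(event["chunk"]["bytes"].decode("utf-8"))
    return "".join(parts)
```

With credentials that have Amazon Bedrock access, `invoke_inline(build_inline_request(...))` returns the agent's answer as a single string. Contrast this with InvokeAgent, which takes an `agentId` and `agentAliasId` pointing at a resource you created and prepared beforehand.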

Solution overview

Our HR assistant example shows how to build a single AI assistant that adapts to different user roles using the new inline agent capabilities in Amazon Bedrock Agents. When users interact with the assistant, the assistant dynamically configures agent capabilities (such as model, instructions, knowledge bases, action groups, and guardrails) based on the user's role and their specific selections. This approach creates a flexible system that adjusts its functionality in real time, making it more efficient than creating separate agents for each user role or tool combination. The complete code for this HR assistant example is available on our GitHub repo.

This dynamic tool selection enables a personalized experience. When an employee without direct reports logs in, they see a set of tools that they have access to based on their role. They can select from options like requesting vacation time, checking company policies using the knowledge base, using a code interpreter for data analysis, or submitting expense reports. The inline agent assistant is then configured with only these selected tools, allowing it to assist the employee with their chosen tasks. In a real-world application, the user wouldn't need to make the selection, because the application would make that decision and automatically configure the agent invocation at runtime. We make the selection explicit in this application so that we can demonstrate the impact.

Similarly, when a manager logs in to the same system, they see an extended set of tools reflecting their additional permissions. In addition to the employee-level tools, managers have access to capabilities like running performance reviews. They can select which tools they want to use for their current session, instantly configuring the inline agent with their choices.

The knowledge bases the agent can use are also adjusted based on the user's role. Employees and managers see different levels of company policy information, with managers getting additional access to confidential data like performance review and compensation details. For this demo, we've implemented metadata filtering to retrieve only the documents appropriate to the user's access level, further enhancing efficiency and security.
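One way to implement this role-based filtering is sketched below. It assumes each policy document in the knowledge base's Amazon S3 data source has a companion `<file>.metadata.json` file containing a `metadataAttributes` object. The attribute name `access_level`, its values, and the role-to-level mapping are hypothetical. The filter uses the `in` operator from the knowledge base retrieval filter syntax, and the `knowledgeBases` entry shape reflects our reading of the InvokeInlineAgent reference, so verify it before use.

```python
import json

# Hypothetical mapping: which document access levels each role may retrieve.
ROLE_ACCESS_LEVELS = {
    "employee": ["general"],
    "manager": ["general", "confidential"],
}


def metadata_file_content(access_level: str) -> str:
    """JSON body for a document's companion .metadata.json file."""
    return json.dumps({"metadataAttributes": {"access_level": access_level}})


def knowledge_base_config(kb_id: str, role: str, num_results: int = 5) -> dict:
    """Knowledge base entry for an inline agent request, filtered to the role's access levels."""
    if role not in ROLE_ACCESS_LEVELS:
        raise ValueError(f"unknown role: {role}")
    return {
        "knowledgeBaseId": kb_id,
        "description": "Company HR policies and guidelines",
        "retrievalConfiguration": {
            "vectorSearchConfiguration": {
                "numberOfResults": num_results,
                # Only chunks whose access_level is in the allowed list are retrieved.
                "filter": {"in": {"key": "access_level", "value": ROLE_ACCESS_LEVELS[role]}},
            }
        },
    }


print(metadata_file_content("confidential"))
# {"metadataAttributes": {"access_level": "confidential"}}
```

Because the filter is computed from the role on the server side, an employee's request never retrieves confidential chunks, regardless of what the user types into the chat.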

Let's look at how the interface adapts to different user roles.

The employee view provides access to essential HR functions like vacation requests, expense submissions, and company policy lookups. Users can select which of these tools they want to use for their current session.

The manager view extends these options to include managerial functions like compensation management, demonstrating how the inline agent dynamically adjusts its available tools based on user permissions. Without inline agents, we would need to build and maintain two separate agents.

As shown in the preceding screenshots, the same HR assistant offers different tool selections based on the user's role. An employee sees options like Knowledge Base, Apply Vacation Tool, and Submit Expense, whereas a manager has additional options like Performance Evaluation. Users can select which tools they want to add to the agent for their current interaction.

This flexibility allows for quick adaptation to user needs and preferences. For instance, if the company introduces a new policy for creating business travel requests, the tool catalog can be quickly updated to include a Create Business Travel Reservation tool. Employees can then choose to add this new tool to their agent configuration when they need to plan a business trip, or the application could automatically do so based on their role.

With Amazon Bedrock inline agents, you can create a catalog of actions that is dynamically selected by the application or by its users. This increases the flexibility and adaptability of your solutions, making them well suited to business operations whose requirements change frequently. Users have more control over their AI assistant's capabilities, and the system remains efficient by loading only the tools needed for each interaction.
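The role-based catalog behavior can be sketched as plain data plus a validation step on the application side. The role names, tool names, and the `allowed_tools` and `resolve_selection` helpers are hypothetical. The point of the sketch is that the application, not the model, decides which tools a user may add, and that introducing a tool such as Create Business Travel Reservation is a one-line catalog change.

```python
# Hypothetical catalog: tool name -> set of roles allowed to use it.
TOOL_PERMISSIONS = {
    "knowledge_base": {"employee", "manager"},
    "apply_vacation": {"employee", "manager"},
    "submit_expense": {"employee", "manager"},
    "code_interpreter": {"employee", "manager"},
    "performance_evaluation": {"manager"},
    "compensation_management": {"manager"},
}


def allowed_tools(role: str) -> list[str]:
    """Tools the UI should offer to a user with this role, in sorted order."""
    return sorted(name for name, roles in TOOL_PERMISSIONS.items() if role in roles)


def resolve_selection(role: str, selected: list[str]) -> list[str]:
    """Keep only the selected tools the role is permitted to use, preserving order."""
    permitted = set(allowed_tools(role))
    return [name for name in selected if name in permitted]


# Rolling out a new tool is a catalog update, not a new agent deployment.
TOOL_PERMISSIONS["create_business_travel_reservation"] = {"employee", "manager"}

print(resolve_selection("employee", ["apply_vacation", "compensation_management"]))
# ['apply_vacation']
```

Silently dropping a disallowed tool keeps the sketch short; a real application might instead reject the request or log the attempt.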

Technical foundation: Dynamic configuration and action selection

Inline agents allow dynamic configuration at runtime, enabling a single agent to effectively perform the work of many. By specifying action groups and modifying instructions on the fly, even within the same session, you can create versatile AI applications that adapt to various scenarios without multiple agent deployments.
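Because the configuration travels with each request, changing an agent's instructions or tools mid-conversation amounts to sending different fields while reusing the same `sessionId`. The sketch below shows this with a hypothetical `InlineSession` helper that only builds request dictionaries. The field names follow the InvokeInlineAgent request shape as we understand it, and the action group entries are trimmed to names only as placeholders; a real action group also needs an executor and a schema.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class InlineSession:
    """Hypothetical helper that builds invoke_inline_agent requests sharing one session ID."""

    foundation_model: str
    session_id: str = field(default_factory=lambda: str(uuid.uuid4()))

    def request(self, instruction: str, input_text: str, action_groups: list[dict]) -> dict:
        """Build one turn's request; instruction and tools can differ on every call."""
        return {
            "sessionId": self.session_id,
            "foundationModel": self.foundation_model,
            "instruction": instruction,
            "inputText": input_text,
            "actionGroups": action_groups,
        }


session = InlineSession(foundation_model="anthropic.claude-3-haiku-20240307-v1:0")
turn1 = session.request(
    "You are a friendly HR assistant. Help the employee plan time off.",
    "I'd like to take next Friday off.",
    [{"actionGroupName": "ApplyVacation"}],  # placeholder: name only
)
# Same conversation, new instruction and tool set: only the request fields change.
turn2 = session.request(
    "You are a friendly HR assistant. Help the employee file expenses.",
    "Now submit my taxi receipt from last week.",
    [{"actionGroupName": "SubmitExpense"}],  # placeholder: name only
)
```

Keeping the session ID stable is what lets the service associate both turns with one conversation, while each turn carries its own configuration.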

The following are key points about inline agents:

- Runtime configuration – Change the agent's configuration, including its FM, at runtime. This enables rapid experimentation and adaptation without redeploying the application, shortening development cycles.

- Governance at tool level – Apply governance and access control at the tool level. With agents changing dynamically at runtime, tool-level governance helps maintain security and compliance regardless of the agent's configuration.

- Agent efficiency – Provide only the necessary tools and instructions at runtime to reduce token usage and improve agent accuracy. With fewer tools to choose from, it's easier for the agent to select the right one, reducing hallucinations in the tool selection process. This approach can also lead to lower costs and improved latency compared to static agents, because removing unnecessary tools, knowledge bases, and instructions reduces the number of input and output tokens processed by the agent's large language model (LLM).

- Flexible action catalog – Create reusable actions for dynamic selection based on specific needs. This modular approach simplifies maintenance, updates, and scaling of your AI applications.

The following are examples of reusable actions:

- Enterprise system integration – Connect with systems like Salesforce, GitHub, or databases

- Utility tools – Perform common tasks such as sending emails or managing calendars

- Team-specific API access – Interact with specialized internal tools and services

- Data processing – Analyze text, structured data, or other information

- External services – Fetch weather updates, stock prices, or perform web searches

- Specialized ML models – Use specific machine learning (ML) models for targeted tasks
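In an inline agent request, a reusable action is an action group definition. The sketch below builds two catalog entries: one backed by an AWS Lambda function and described with a function schema, and the built-in code interpreter, which is enabled through the `AMAZON.CodeInterpreter` parent action group signature. The Lambda ARN, function names, and parameters are hypothetical placeholders, and the dictionary shapes reflect our reading of the InvokeInlineAgent `actionGroups` reference; check them against the current documentation.

```python
def vacation_action_group(lambda_arn: str) -> dict:
    """Hypothetical action group for requesting time off, executed by a Lambda function."""
    return {
        "actionGroupName": "ApplyVacation",
        "description": "Request vacation time off for the current employee.",
        "actionGroupExecutor": {"lambda": lambda_arn},
        "functionSchema": {
            "functions": [
                {
                    "name": "request_vacation",
                    "description": "Submit a vacation request for a date range.",
                    "parameters": {
                        "start_date": {"description": "First day off, YYYY-MM-DD", "type": "string", "required": True},
                        "end_date": {"description": "Last day off, YYYY-MM-DD", "type": "string", "required": True},
                    },
                }
            ]
        },
    }


def code_interpreter_action_group() -> dict:
    """Built-in code interpreter; it needs no executor or schema."""
    return {
        "actionGroupName": "CodeInterpreterAction",
        "parentActionGroupSignature": "AMAZON.CodeInterpreter",
    }


# Catalog of reusable actions; each entry builds a fresh definition on demand.
ACTION_CATALOG = {
    "apply_vacation": lambda: vacation_action_group(
        "arn:aws:lambda:us-east-1:123456789012:function:apply-vacation"  # placeholder ARN
    ),
    "code_interpreter": code_interpreter_action_group,
}


def action_groups_for(tool_names: list[str]) -> list[dict]:
    """Materialize action group definitions for the chosen tool names."""
    return [ACTION_CATALOG[name]() for name in tool_names]
```

The resulting list is what goes into the request's `actionGroups` field, so the same definitions can be reused across roles, personas, and applications.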

When using inline agents, you configure parameters for the following:

- Contextual tool selection based on user intent or conversation flow

- Adaptation to different user roles and permissions

- Switching between communication styles or personas

- Model selection based on task complexity
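Model selection based on task complexity can be as simple as a lookup that fills the request's `foundationModel` field. In this sketch, the complexity heuristic, its keywords, and the word-count threshold are hypothetical, and the Anthropic Claude model IDs are examples whose availability depends on your Region and model access.

```python
# Example model IDs; confirm availability in your Region before use.
MODEL_BY_COMPLEXITY = {
    "simple": "anthropic.claude-3-haiku-20240307-v1:0",
    "complex": "anthropic.claude-3-5-sonnet-20240620-v1:0",
}

# Hypothetical keywords suggesting multi-step reasoning or analysis.
COMPLEX_HINTS = ("analyze", "compare", "calculate", "forecast", "summarize")


def estimate_complexity(request: str) -> str:
    """Crude heuristic: long requests or analysis keywords count as complex."""
    text = request.lower()
    if len(text.split()) > 40 or any(hint in text for hint in COMPLEX_HINTS):
        return "complex"
    return "simple"


def choose_model(request: str) -> str:
    """Pick the foundationModel value for this request."""
    return MODEL_BY_COMPLEXITY[estimate_complexity(request)]
```

Routing routine questions to a smaller model and analysis requests to a larger one is one way to act on the cost benefit of matching model capability to task complexity; a production system might use a classifier instead of keywords.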

The inline agent uses the configuration you provide at runtime, allowing for highly flexible AI assistants that efficiently handle diverse tasks across different business contexts.

Building an HR assistant using inline agents

Let's look at how we built our HR assistant using Amazon Bedrock inline agents:

- Create a tool catalog – We developed a demo catalog of HR-related tools, including:

  - Knowledge Base – Uses Amazon Bedrock Knowledge Bases to access company policies and guidelines based on the role of the application user. To filter the knowledge base content based on the user's role, you also need to provide a metadata file specifying which employee roles can access each file.

  - Apply Vacation – For requesting and tracking time off.

  - Expense Report – For submitting and managing expense reports.

  - Code Interpreter – For performing calculations and data analysis.

  - Compensation Management – For conducting and reviewing employee compensation assessments (manager-only access).

- Set conversation tone – We defined multiple conversation tones to suit different interaction styles:

  - Professional – For formal, business-like interactions.

  - Casual – For friendly, everyday support.

  - Enthusiastic – For upbeat, encouraging assistance.

- Implement access control – We implemented role-based access control. The application backend checks the user's role (employee or manager), grants access to the appropriate tools and data, and passes this information to the inline agent. The role information is also used to configure metadata filtering in the knowledge bases so that responses draw only on documents the user may see. The system allows for dynamic tool use at runtime: users can switch personas or add and remove tools during their session, allowing the agent to adapt to different conversation needs in real time.

- Integrate the agent with other services and tools – We connected the inline agent to:

  - Amazon Bedrock Knowledge Bases for company policies, with metadata filtering for role-based access.

  - AWS Lambda functions for executing specific actions (such as submitting vacation requests or expense reports).

  - A code interpreter tool for performing calculations and data analysis.

- Create the UI – We created a Flask-based UI that performs the following actions:

  - Displays available tools based on the user's role.

  - Allows users to select different personas.

  - Provides a chat window for interacting with the HR assistant.
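The tool catalog, conversation tone, and access control steps meet when the backend assembles each request. This sketch combines the user's role, the chosen tone, and the permitted tool selections into an instruction string and an InvokeInlineAgent-style request. The tone wording, role rules, and `build_hr_request` function are hypothetical, and the request omits `actionGroups` and `knowledgeBases` to stay short; the caller would map the returned tool names to those definitions.

```python
import uuid
from typing import Optional

# Tone guidance appended to the base instruction (wording is illustrative).
TONES = {
    "professional": "Respond in a formal, business-like tone.",
    "casual": "Respond in a friendly, everyday tone.",
    "enthusiastic": "Respond in an upbeat, encouraging tone.",
}

# Hypothetical role-based permissions.
ROLE_TOOLS = {
    "employee": {"knowledge_base", "apply_vacation", "submit_expense", "code_interpreter"},
    "manager": {"knowledge_base", "apply_vacation", "submit_expense", "code_interpreter",
                "compensation_management"},
}


def build_instruction(role: str, tone: str) -> str:
    """Compose the agent instruction from the user's role and chosen tone."""
    if tone not in TONES:
        raise ValueError(f"unknown tone: {tone}")
    return (
        f"You are an HR assistant helping a company {role}. "
        "Use only the tools provided and never reveal data the user is not entitled to. "
        + TONES[tone]
    )


def build_hr_request(role: str, tone: str, selected_tools: list[str], user_input: str,
                     model_id: str, session_id: Optional[str] = None) -> tuple[dict, list[str]]:
    """Return (request, tools), where tools are the selections this role may use."""
    if role not in ROLE_TOOLS:
        raise ValueError(f"unknown role: {role}")
    tools = [t for t in selected_tools if t in ROLE_TOOLS[role]]
    request = {
        "sessionId": session_id or str(uuid.uuid4()),
        "foundationModel": model_id,
        "instruction": build_instruction(role, tone),
        "inputText": user_input,
    }
    return request, tools
```

Switching persona mid-session then means calling `build_hr_request` again with a different `tone` and the same `session_id`.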

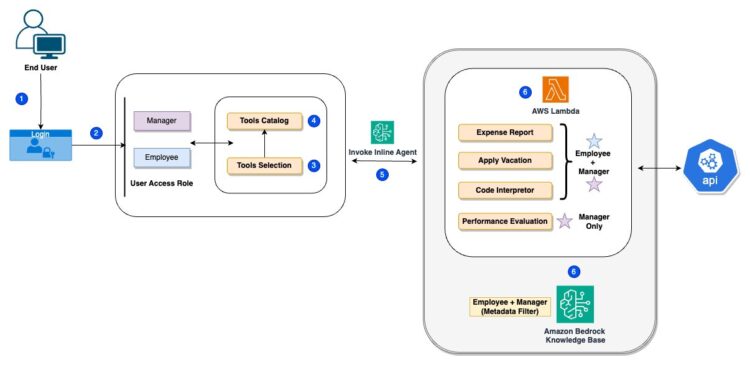

To understand how this dynamic role-based functionality works under the hood, let's examine the following system architecture diagram.

As shown in the preceding architecture diagram, the system works as follows:

- The end user logs in and is identified as either a manager or an employee.

- The user selects the tools that they have access to and makes a request to the HR assistant.

- The agent breaks down the problem and uses the available tools to solve the query in steps, which may include:

  - Amazon Bedrock Knowledge Bases (with metadata filtering for role-based access).

  - Lambda functions for specific actions.

  - Code interpreter tool for calculations.

  - Compensation tool (accessible only to managers, for submitting base pay raise requests).

- The application uses the Amazon Bedrock inline agent to dynamically pass in the appropriate tools based on the user's role and request.

- The agent uses the selected tools to process the request and provide a response to the user.
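This flow can be sketched as a single request handler. To keep the example testable without AWS credentials, the Bedrock client is passed in as any object with an `invoke_inline_agent` method; in a deployment it would be `boto3.client("bedrock-agent-runtime")`. The user directory, tool rule, model ID, and `FakeClient` are hypothetical stand-ins, the action groups are name-only placeholders, and the streamed `completion`/`chunk` response shape reflects our reading of the Boto3 reference.

```python
import uuid
from typing import Protocol

# Hypothetical directory of users and their roles.
USER_ROLES = {"alice": "employee", "bob": "manager"}
MANAGER_ONLY = {"compensation"}


class InlineAgentClient(Protocol):
    def invoke_inline_agent(self, **kwargs) -> dict: ...


def handle_request(client: InlineAgentClient, user: str, selected_tools: list[str], text: str) -> str:
    role = USER_ROLES[user]  # identify the user's role
    # Keep only the tools this role may use.
    tools = [t for t in selected_tools if role == "manager" or t not in MANAGER_ONLY]
    response = client.invoke_inline_agent(  # pass the role-appropriate configuration
        sessionId=str(uuid.uuid4()),
        foundationModel="anthropic.claude-3-haiku-20240307-v1:0",
        instruction=f"You are an HR assistant for a company {role}. Available tools: {', '.join(tools) or 'none'}.",
        inputText=text,
        # Placeholders: real action groups also need executors and schemas.
        actionGroups=[{"actionGroupName": t} for t in tools],
    )
    # Collect the streamed answer from "chunk" events, skipping others such as traces.
    return "".join(e["chunk"]["bytes"].decode("utf-8") for e in response["completion"] if "chunk" in e)


class FakeClient:
    """Test double that records the request and returns a canned event stream."""

    def __init__(self):
        self.last_request = None

    def invoke_inline_agent(self, **kwargs) -> dict:
        self.last_request = kwargs
        return {"completion": [{"chunk": {"bytes": b"Request "}}, {"trace": {}}, {"chunk": {"bytes": b"received."}}]}
```

Injecting the client keeps the role and tool logic unit-testable, which matters because that logic, not the model, enforces who can use which tools.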

This approach provides a flexible, scalable solution that can quickly adapt to different user roles and changing business needs.

Conclusion

In this post, we introduced the Amazon Bedrock inline agent functionality and highlighted its application to an HR use case. We dynamically selected tools based on the user's roles and permissions, adapted instructions to set a conversation tone, and selected different models at runtime. With inline agents, you can transform how you build and deploy AI assistants. By dynamically adapting tools, instructions, and models at runtime, you can:

- Create personalized experiences for different user roles

- Optimize costs by matching model capabilities to task complexity

- Streamline development and maintenance

- Scale efficiently without managing multiple agent configurations

For organizations demanding highly dynamic behavior, whether you're an AI startup, SaaS provider, or enterprise solution team, inline agents offer a scalable approach to building intelligent assistants that grow with your needs. To get started, explore our GitHub repo and HR assistant demo application, which demonstrate key implementation patterns and best practices.

To learn more about how to be most successful in your agent journey, read our two-part blog series:

To get started with Amazon Bedrock Agents, check out the following GitHub repository with example code.

About the authors

Ishan Singh is a Generative AI Data Scientist at Amazon Web Services, where he helps customers build innovative and responsible generative AI solutions and products. With a strong background in AI/ML, Ishan specializes in building generative AI solutions that drive business value. Outside of work, he enjoys playing volleyball, exploring local bike trails, and spending time with his wife and dog, Beau.

Maira Ladeira Tanke is a Senior Generative AI Data Scientist at AWS. With a background in machine learning, she has over 10 years of experience architecting and building AI applications with customers across industries. As a technical lead, she helps customers accelerate their achievement of business value through generative AI solutions on Amazon Bedrock. In her free time, Maira enjoys traveling, playing with her cat, and spending time with her family someplace warm.

Mark Roy is a Principal Machine Learning Architect for AWS, helping customers design and build generative AI solutions. His focus since early 2023 has been leading solution architecture efforts for the launch of Amazon Bedrock, the flagship generative AI offering from AWS for builders. Mark's work covers a wide range of use cases, with a primary interest in generative AI, agents, and scaling ML across the enterprise. He has helped companies in insurance, financial services, media and entertainment, healthcare, utilities, and manufacturing. Prior to joining AWS, Mark was an architect, developer, and technology leader for over 25 years, including 19 years in financial services. Mark holds six AWS certifications, including the ML Specialty Certification.

Nitin Eusebius is a Sr. Enterprise Solutions Architect at AWS, experienced in Software Engineering, Enterprise Architecture, and AI/ML. He is deeply passionate about exploring the possibilities of generative AI. He collaborates with customers to help them build well-architected applications on the AWS platform, and is dedicated to solving technology challenges and assisting with their cloud journey.

Ashrith Chirutani is a Software Development Engineer at Amazon Web Services (AWS). He specializes in backend system design, distributed architectures, and scalable solutions, contributing to the development and launch of high-impact systems at Amazon. Outside of work, he spends his time playing ping pong and hiking through Cascade trails, enjoying the outdoors as much as he enjoys building systems.

Shubham Divekar is a Software Development Engineer at Amazon Web Services (AWS), working in Agents for Amazon Bedrock. He focuses on developing scalable systems on the cloud that enable AI application frameworks and orchestrations. Shubham also has a background in building distributed, scalable, high-volume, high-throughput systems in IoT architectures.

Vivek Bhadauria is a Principal Engineer for Amazon Bedrock. He focuses on building deep learning-based AI and computer vision solutions for AWS customers. Outside of work, Vivek enjoys trekking and following cricket.
